AMD to present Neural Texture Block Compression — rivals Nvidia's texture compression research

Tune in to EGSR next week for this and more.

AMD's GPUOpen team has announced its plans to present its new paper, "Neural Texture Block Compression," at the Eurographics Symposium on Rendering (EGSR) on July 2nd. The research is reminiscent of Nvidia's previously announced Neural Texture Compression, which was first published in a paper last May.

The AMD GPUOpen team, represented by S. Fujieda and T. Harada at the conference, will present its new method to "compress textures using a small neural network, reducing data size." AMD's research will likely either replicate or expand on Nvidia's Random-Access Neural Compression of Material Textures paper; both papers focus on neural network-based image compression for video game textures, saving storage space with little visual degradation.
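To put "reducing data size" in perspective, here is a rough estimate of how much space one 4K texture takes with and without conventional block compression. This is a minimal sketch. It assumes uncompressed RGBA8 (32 bits per texel) and BC1 (4 bits per texel, stored in 4x4 blocks) and counts a full mip chain. The helper name `mip_chain_bytes` is hypothetical and does not come from AMD's paper, which this sketch does not model.

```python
# Hypothetical helper: storage for a square texture plus its full mip chain.
# Block-compressed formats encode 4x4 texel blocks, so each mip level's
# dimensions are rounded up to whole blocks (block=1 means uncompressed).

def mip_chain_bytes(size: int, bits_per_texel: int, block: int = 1) -> int:
    """Total bytes for a size x size texture and every mip level down to 1x1."""
    total = 0
    level = size
    while True:
        dim = -(-level // block) * block  # round up to a multiple of the block size
        total += dim * dim * bits_per_texel // 8
        if level == 1:
            break
        level //= 2
    return total

MIB = 1024 * 1024
print(f"RGBA8 4K + mips: {mip_chain_bytes(4096, 32) / MIB:.1f} MiB")
print(f"BC1   4K + mips: {mip_chain_bytes(4096, 4, block=4) / MIB:.1f} MiB")
```

Under these assumptions the uncompressed texture needs roughly 85 MiB and the BC1 version under 11 MiB. Neural approaches aim to shrink the stored data further than that fixed-rate baseline.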

We'll present "Neural Texture Block Compression" @ #EGSR2024 in London. Nobody likes downloading huge game packages. Our method compresses the texture using a neural network, reducing data size. Unchanged runtime execution allows easy game integration. https://t.co/gvj1D8bfBf pic.twitter.com/XglpPkdI8D June 25, 2024

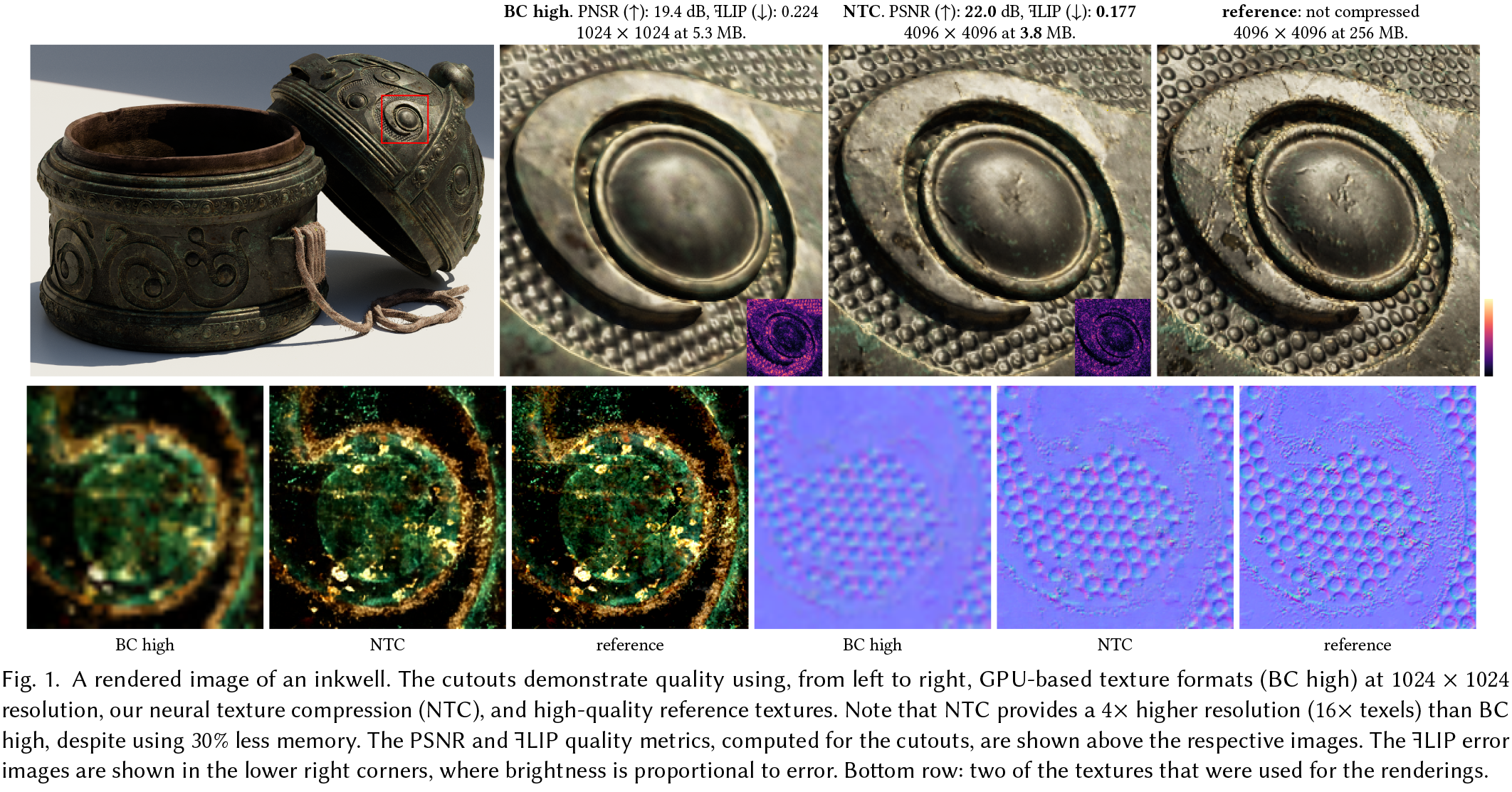

While Team Red hasn't yet shown what its neural texture compression looks like in action, we have seen very exciting results from Nvidia's neural texture compression (NTC) research. Nvidia's NTC works by "compress[ing] multiple material textures and their mipmap chains collectively and then decompress[ing] them using a neural network that is trained for a particular pattern it decompresses." In last year's results, NTC produced a much higher-definition image than traditional "BC" block compression but took longer: NTC took 1.15 ms to render a 4K image, compared to 0.49 ms with BC.
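One way to read those two timings is as a share of the frame-time budget. The sketch below assumes, as a simplification, that each figure is a cost paid once per frame; the 60 and 120 fps targets are illustrative choices, not numbers from either paper.

```python
# Fraction of the per-frame time budget (1000 / fps milliseconds) used by a
# fixed per-frame cost. Timings are the 4K figures quoted in the article.

def frame_budget_share(cost_ms: float, fps: float) -> float:
    """Return cost_ms as a fraction of the frame time at the given frame rate."""
    return cost_ms / (1000.0 / fps)

for name, cost in (("NTC", 1.15), ("BC", 0.49)):
    for fps in (60, 120):
        print(f"{name} at {fps} fps: {frame_budget_share(cost, fps):.1%} of the frame")
```

On that assumption NTC costs about 2.3 times as much as BC, which is roughly 7% versus 3% of a 60 fps frame.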

AMD's choice of words when naming its presentation is also noteworthy. "Neural texture block compression" suggests some level of fusion between the traditional block compression used in games today and the neural network-based style of the future. Whether the paper actually blends the two technologies or simply uses the term "block compression" for easier name recognition remains to be seen.
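For context on the "traditional block compression" mentioned above: BC1 (also called DXT1) stores each 4x4 block of texels as two RGB565 endpoint colors plus a 2-bit palette index per texel. That is 8 bytes per block, or 4 bits per texel, and GPUs decode it in fixed-function hardware. Below is a deliberately naive BC1 encoder and decoder. It picks endpoints from a per-channel bounding box, whereas production encoders search for better endpoints, and its integer rounding only approximates what hardware decoders do. Nothing in it reflects how AMD's neural method produces its blocks.

```python
import struct

def to_565(r, g, b):
    """Quantize an 8-bit RGB color to a 16-bit RGB565 value."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def from_565(c):
    """Expand RGB565 back to 8-bit channels by replicating the high bits."""
    r, g, b = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))

def palette(c0, c1):
    """BC1 palette: four opaque colors if c0 > c1, else three colors plus transparent black."""
    p0, p1 = from_565(c0), from_565(c1)
    if c0 > c1:
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0)  # transparent entry in 3-color mode (alpha ignored here)
    return [p0, p1, p2, p3]

def encode_block(pixels):
    """Encode 16 (r, g, b) tuples (one 4x4 block, row-major) into 8 bytes of BC1."""
    hi = tuple(max(p[ch] for p in pixels) for ch in range(3))
    lo = tuple(min(p[ch] for p in pixels) for ch in range(3))
    # hi >= lo in every channel, so c0 >= c1; if they are equal, 3-color mode applies.
    c0, c1 = to_565(*hi), to_565(*lo)
    pal = palette(c0, c1)
    usable = range(4) if c0 > c1 else range(3)  # never choose the transparent entry
    indices = 0
    for i, p in enumerate(pixels):
        # Nearest palette entry by squared RGB distance.
        best = min(usable, key=lambda k: sum((a - b) ** 2 for a, b in zip(p, pal[k])))
        indices |= best << (2 * i)  # texel 0 occupies the lowest two bits
    return struct.pack("<HHI", c0, c1, indices)

def decode_block(data):
    """Decode 8 bytes of BC1 back into 16 (r, g, b) tuples."""
    c0, c1, indices = struct.unpack("<HHI", data)
    pal = palette(c0, c1)
    return [pal[(indices >> (2 * i)) & 0b11] for i in range(16)]
```

Every block comes out as exactly 8 bytes, whatever its content. That fixed rate is what lets hardware find any texel's block directly, and it is why a neural scheme that still emits BC blocks could keep the "unchanged runtime execution" AMD describes.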

For better or for worse, AI seems to be the future of innovation in the gaming and graphics world. During a Computex 2024 Q&A, Nvidia CEO Jensen Huang spoke about the future of AI in gaming (specifically DLSS, or deep learning super sampling). He said his goal is for DLSS and similar technologies to do more than upscale graphics and eventually build out more of the game world itself. According to Huang, DLSS will eventually generate textures and objects entirely and create fresh AI NPCs. AMD's FidelityFX Super Resolution isn't far behind Nvidia's DLSS in performance today, so next week's presentation will likely indicate how close AMD is to catching up with Nvidia on the software side of graphics.


Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.

-

bit_user

The article said: "For better or for worse, AI seems to be the future of innovation in the gaming and graphics world."

This seems like a pure win, if it can be implemented in hardware with similar efficiency to conventional texture compression! Given that textures are compressed at all, wouldn't you want the most efficient (i.e. best PSNR per bit) compression possible?
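The "best PSNR per bit" criterion combines two measurements: reconstruction quality (peak signal-to-noise ratio, in dB) and storage rate (bits per pixel). Here is a minimal sketch of both, assuming 8-bit samples given as flat sequences. The function names are illustrative, and the sketch does not reproduce how either AMD or Nvidia evaluates quality.

```python
import math

def psnr(original, reconstructed, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length sample sequences."""
    if len(original) != len(reconstructed):
        raise ValueError("inputs must have the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf  # identical inputs: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)

def bits_per_pixel(compressed_bytes, width, height):
    """Storage rate: compressed size in bits divided by the number of pixels."""
    return compressed_bytes * 8 / (width * height)

# A 4x4 BC1 block is 8 bytes, i.e. 4 bits per pixel.
print(bits_per_pixel(8, 4, 4))
# Every sample off by one gives an MSE of 1, about 48.1 dB.
print(f"{psnr([10, 20, 30], [11, 21, 31]):.1f} dB")
```

Comparing codecs "per bit" means checking which one reaches a higher PSNR at the same bits-per-pixel budget, or needs fewer bits for the same PSNR.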

At this point, these are just research papers, and neural compression for textures isn't available in any game yet.

NVIDIA's Neural Texture Compression still hasn't found its way into any game, though this might change in a future iteration of the DLSS tech, but that's just an assumption for now.

Intel also followed up with a paper of its own that proposed an AI-driven level of detail (LoD) technique that could make models look more realistic from a distance, but this never panned out.

https://www.intel.com/content/www/us/en/developer/articles/technical/neural-prefiltering-for-correlation-aware.html

Although AMD didn't mention the benefits of its texture compression technology compared to conventional techniques, the only advantage AMD's tech might have over NVIDIA's neural texture compression is that it should be quite easy to implement.

That's because AMD's tech leaves "runtime execution" unchanged, which allows for easy game integration, though this does not come without its own caveats. AMD is trying to catch up with NVIDIA in AI-based neural techniques, but it's not going to be an easy feat.

usertests

umeng2002_2 said: How is this faster than just higher VRAM bandwidth and size?

AMD already has higher VRAM size, but there's no reason to not improve compression.

bit_user

umeng2002_2 said: How is this faster than just higher VRAM bandwidth and size?

If you can get more texels/s from whatever VRAM size & speed you have (and keep in mind this applies not just to the flagship models), how is that not a good thing?
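Compression and bandwidth multiply together: for a fixed memory bandwidth, fewer bits per texel means more texels delivered per second. The first-order sketch below assumes a hypothetical 500 GB/s card and ignores caches, texel reuse from filtering, and all other memory traffic, so it is only a rough comparison between formats and not a performance prediction.

```python
def peak_texels_per_second(bandwidth_gb_per_s: float, bits_per_texel: int) -> float:
    """Texels/s that raw bandwidth could carry if every fetched byte were texture data.

    First-order estimate only: ignores caches, filtering reuse and other traffic."""
    return bandwidth_gb_per_s * 1e9 * 8 / bits_per_texel

# Hypothetical 500 GB/s card: uncompressed RGBA8 (32 bpp) vs. BC7 (8 bpp) vs. BC1 (4 bpp).
for fmt, bpp in (("RGBA8", 32), ("BC7", 8), ("BC1", 4)):
    print(f"{fmt}: {peak_texels_per_second(500, bpp) / 1e9:.0f} Gtexels/s")
```

Under these assumptions, moving from 32 to 4 bits per texel has the same effect on texel delivery as an eightfold bandwidth increase, and that holds on any card, not just flagships.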

I sort of wonder whether this might be a two-tiered compression scheme. Like, what if there's a texture cache, and as textures are paged in, the "neural block compression" is decoded into some regular block-based texture compression format? The thing about inferencing is that it's a lot more compute-intensive than standard texture lookups. If this scheme works on existing hardware, as claimed, then it must be implemented via shaders, and the only way that's probably fast enough is if you're not doing it directly inline with all your texture lookups.
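The two-tier idea speculated about above can be sketched as a cache that pays the expensive decode once per page-in and then serves ordinary block-compressed data. Everything here is hypothetical: the class name, the page granularity, the LRU eviction policy and the `transcode` callable are all assumptions. The `transcode` callable stands in for shader-based network inference. This is not AMD's confirmed design.

```python
from collections import OrderedDict
from typing import Callable, Dict

class TranscodingTextureCache:
    """Hypothetical two-tier texture cache (illustration only).

    Pages are stored in a compact "neural" encoding. On first use, a page is
    transcoded into a conventional block-compressed form, which regular texture
    lookups would then read. Least-recently-used pages are evicted.
    """

    def __init__(self, capacity: int, stored: Dict[int, bytes],
                 transcode: Callable[[bytes], bytes]):
        self.capacity = capacity
        self.stored = stored            # page id -> compact encoded page
        self.transcode = transcode      # stand-in for network inference in a shader
        self.pages: "OrderedDict[int, bytes]" = OrderedDict()  # resident decoded pages
        self.transcode_count = 0

    def get(self, page_id: int) -> bytes:
        if page_id in self.pages:
            self.pages.move_to_end(page_id)  # hit: mark as most recently used
            return self.pages[page_id]
        data = self.transcode(self.stored[page_id])  # miss: pay the decode cost once
        self.transcode_count += 1
        self.pages[page_id] = data
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)   # evict the least recently used page
        return data

stored = {0: b"page0", 1: b"page1", 2: b"page2"}
cache = TranscodingTextureCache(2, stored, transcode=lambda blob: blob.upper())
for page in (0, 0, 1, 2, 0):
    cache.get(page)
print(cache.transcode_count)  # prints 4: the repeated hit on page 0 cost nothing
```

The design choice being illustrated is amortization: inference runs once per page-in, not once per texture lookup, so the per-lookup path stays on the existing hardware.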

Let's not forget that texture lookups are so compute-intensive that they're one of the few parts of the rendering pipeline that are cast into silicon.
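To illustrate the work behind a single filtered lookup, here is a software version of plain bilinear filtering. Each sample needs address calculation, edge clamping, four texel fetches and three weighted blends per channel. Real texture units also handle format decoding (including BC), mip selection and anisotropic filtering. This sketch covers only bilinear filtering of an uncompressed single-channel texture with clamp-to-edge addressing.

```python
import math

def bilinear_sample(texture, u, v):
    """Sample a single-channel texture (list of rows) at normalized (u, v).

    Uses clamp-to-edge addressing; texel centers sit at half-integer coordinates."""
    height, width = len(texture), len(texture[0])
    # Map normalized coordinates to texel space, offset so centers land on integers.
    x = u * width - 0.5
    y = v * height - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0  # fractional blend weights

    def texel(ix, iy):
        # Clamp-to-edge: out-of-range coordinates reuse the border texel.
        ix = min(max(ix, 0), width - 1)
        iy = min(max(iy, 0), height - 1)
        return texture[iy][ix]

    # Four fetches, two horizontal blends, one vertical blend.
    top = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy

tex = [[0, 10],
       [20, 30]]
print(bilinear_sample(tex, 0.5, 0.5))  # midpoint of all four texels
```

Repeated for every channel of every sample on screen, this is the arithmetic that fixed-function texture units absorb. A shader-based neural decoder has to fit its own cost on top of it.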