Nvidia Uses Neural Network for Innovative Texture Compression Method

Nvidia proposes using neural networks for an innovative texture compression method.

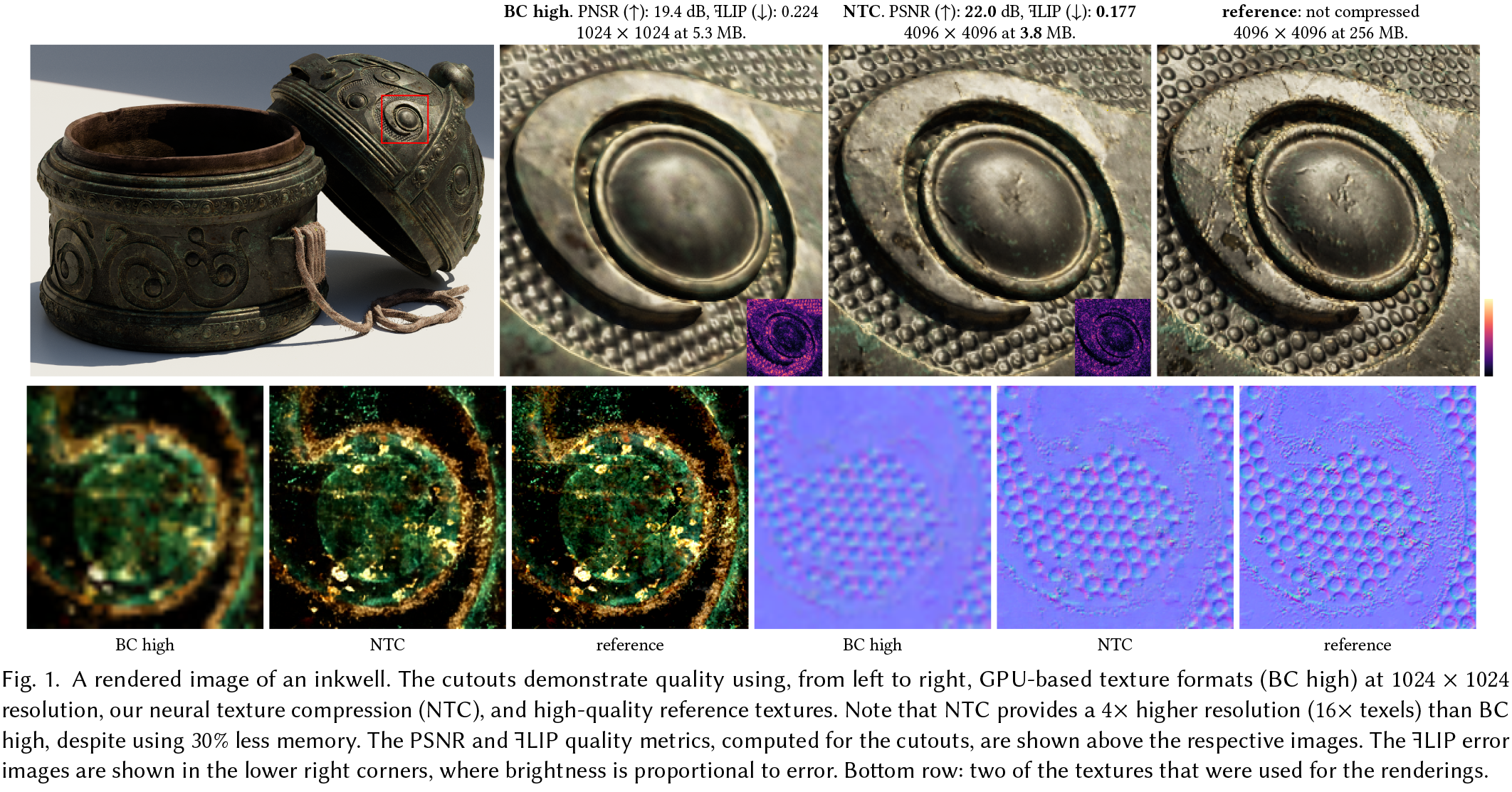

Nvidia this week introduced a new texture compression method that provides four times higher resolution than traditional Block Truncation Coding (BTC, BC) methods while having similar storage requirements. The core concept of the proposed approach is to compress multiple material textures and their mipmap chains together and then decompress them using a neural network trained specifically for the material it decompresses. In theory, the method could even influence future GPU architectures. For now, however, it has limitations.

New Requirements

Recent advancements in real-time rendering have brought video games close to the visual quality of movies, thanks to techniques such as physically-based shading for photorealistic modeling of materials, and ray tracing, path tracing, and denoising for accurate global illumination. Meanwhile, texturing techniques have not advanced at a similar pace, mostly because texture compression methods have remained essentially the same since the late 1990s, which is why many objects can look blurry at close range.

This is because GPUs still rely on block-based texture compression methods. These techniques offer very efficient hardware implementations (the fixed-function hardware that supports them has evolved for over two decades), random access, data locality, and near-lossless quality. However, they are designed for moderate compression ratios between 4x and 8x and are limited to a maximum of 4 channels. Modern real-time renderers often require more material properties, necessitating multiple textures.
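To see where the 4x to 8x range comes from, here is a rough back-of-the-envelope sketch. It assumes an uncompressed RGBA8 source (32 bits per texel) and uses the fixed block sizes of two common formats, BC1 and BC7, as examples; the article itself does not name specific formats.

```python
# Block-compressed formats store a fixed number of bits per 4x4 texel block.
BLOCK_TEXELS = 4 * 4

# Assumption: the uncompressed source is RGBA8 (4 channels x 8 bits).
UNCOMPRESSED_BITS_PER_TEXEL = 32

# Bits per 4x4 block for two common formats (chosen here as examples).
BLOCK_BITS = {
    "BC1": 64,   # 8 bytes per block
    "BC7": 128,  # 16 bytes per block
}

def compression_ratio(fmt: str) -> float:
    """Ratio of uncompressed RGBA8 size to block-compressed size."""
    bits_per_texel = BLOCK_BITS[fmt] / BLOCK_TEXELS
    return UNCOMPRESSED_BITS_PER_TEXEL / bits_per_texel

for fmt in BLOCK_BITS:
    print(fmt, compression_ratio(fmt))  # BC1 8.0, then BC7 4.0
```

Because the bit budget per block is fixed, the ratio cannot be tuned per material, which is part of what a learned, per-material scheme tries to get around.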

Nvidia's Method

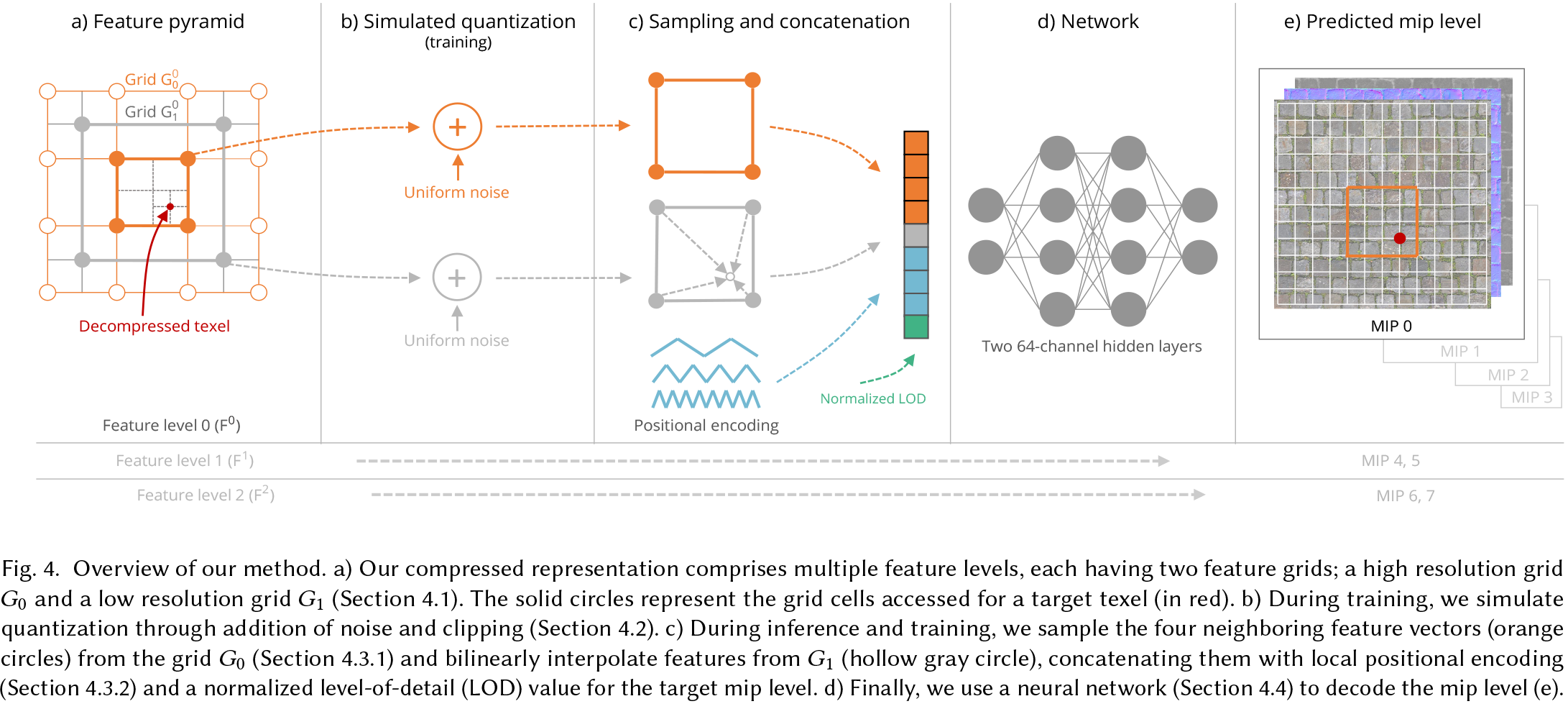

This is where Nvidia's Random-Access Neural Compression of Material Textures (NTC) comes into play. Nvidia's technology enables two additional levels of detail (16x more texels, or four times higher resolution along each axis) while keeping storage requirements similar to those of traditional texture compression methods. This means that compressed, per-material optimized textures with resolutions of up to 8192 x 8192 (8K) are now feasible.
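The relationship between "two additional levels of detail", "16x more texels", and "four times higher resolution" is plain mipmap arithmetic: each finer mip level doubles the texture along both axes. A small sketch, where the 2048-texel starting size is only an illustrative assumption:

```python
from typing import Tuple

def texel_gain(extra_mip_levels: int) -> Tuple[int, int]:
    """Return (per-axis resolution gain, total texel gain) for N finer mip levels."""
    per_axis = 2 ** extra_mip_levels   # each level doubles width and height
    return per_axis, per_axis ** 2     # texel count grows with the area

per_axis, texels = texel_gain(2)

# Hypothetical starting point: a 2048 x 2048 texture.
base_side = 2048
print(per_axis, texels, base_side * per_axis)  # 4 16 8192
```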

To do so, NTC exploits redundancies spatially, across mipmap levels, and across different material channels. This ensures that texture detail is preserved when viewers are close to an object, something that current block-based methods cannot deliver.

Nvidia claims that NTC textures are decompressed using matrix-multiplication hardware such as tensor cores operating in a SIMD-cooperative manner, which means that the new technology does not require any special purpose hardware and can be used on virtually all modern Nvidia GPUs. But perhaps the biggest concern is that every texture requires its own optimized neural network to decompress, which puts some additional load on game developers.
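To give a feel for why matrix-multiplication hardware helps, here is a deliberately simplified toy decoder. It is not Nvidia's network: the layer sizes, the ReLU activation, the random stand-in weights, and every name below are assumptions made purely for illustration. It only shows the general shape of the idea, in which a small per-material network turns a compact feature vector into all material channels at once using matrix-vector products.

```python
import random

def matvec(matrix, vector):
    """Plain matrix-vector product (the operation tensor cores accelerate)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def relu(vector):
    return [max(0.0, x) for x in vector]

def make_network(latent_dim, hidden_dim, channels, seed):
    """Hypothetical per-material network; random weights stand in for trained ones."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(latent_dim)] for _ in range(hidden_dim)]
    w2 = [[rng.uniform(-1, 1) for _ in range(hidden_dim)] for _ in range(channels)]
    return w1, w2

def decode_texel(latent, network):
    """Decode one texel: every material channel comes out of the same pass."""
    w1, w2 = network
    return matvec(w2, relu(matvec(w1, latent)))

# One network per material (assumed sizes: 8 latent features, 9 output channels).
material_net = make_network(latent_dim=8, hidden_dim=16, channels=9, seed=1)
latent = [0.1 * i for i in range(8)]
print(len(decode_texel(latent, material_net)))  # 9
```

In this toy setup the per-material weights are what a developer would have to train and ship alongside the compressed data, which is the extra burden the article mentions.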

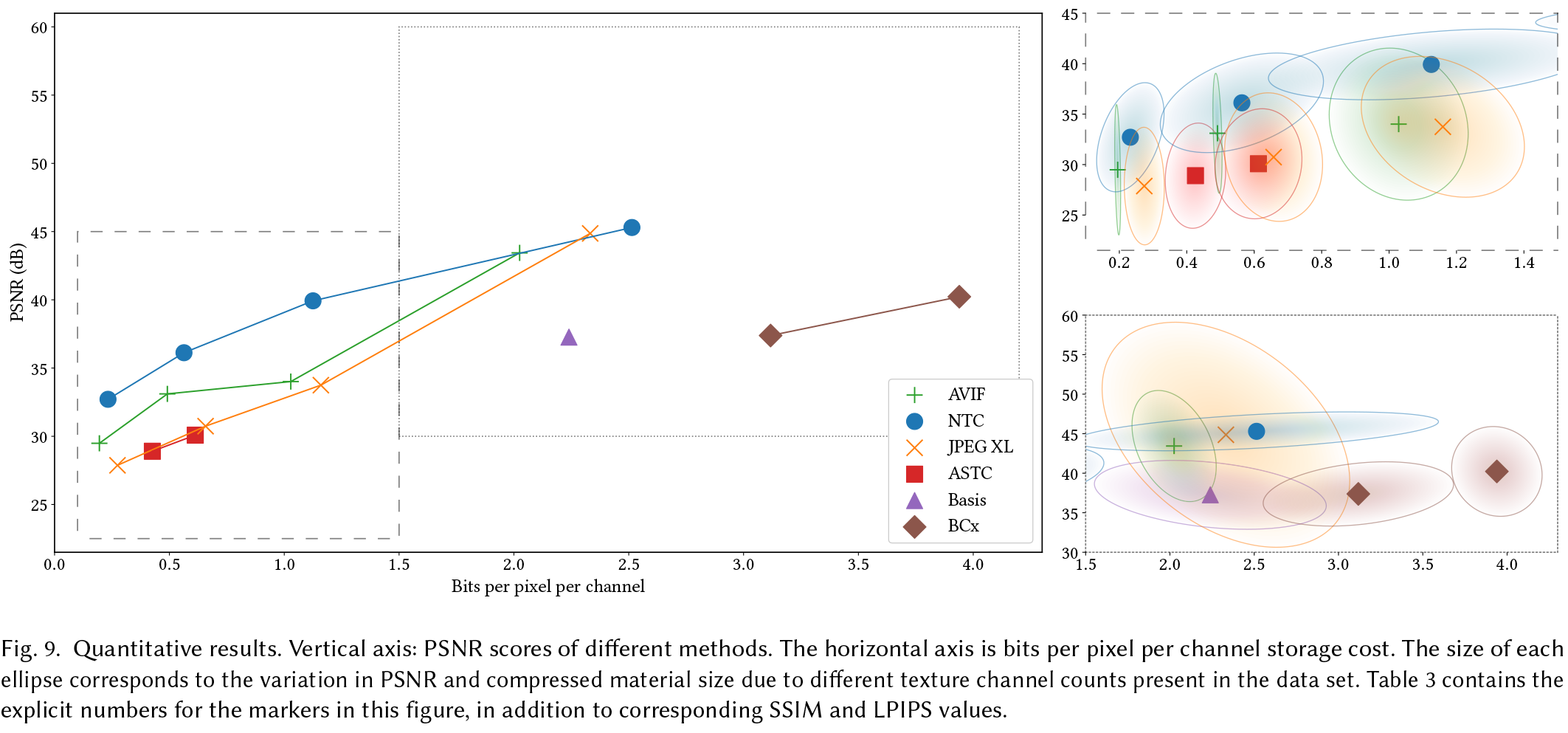

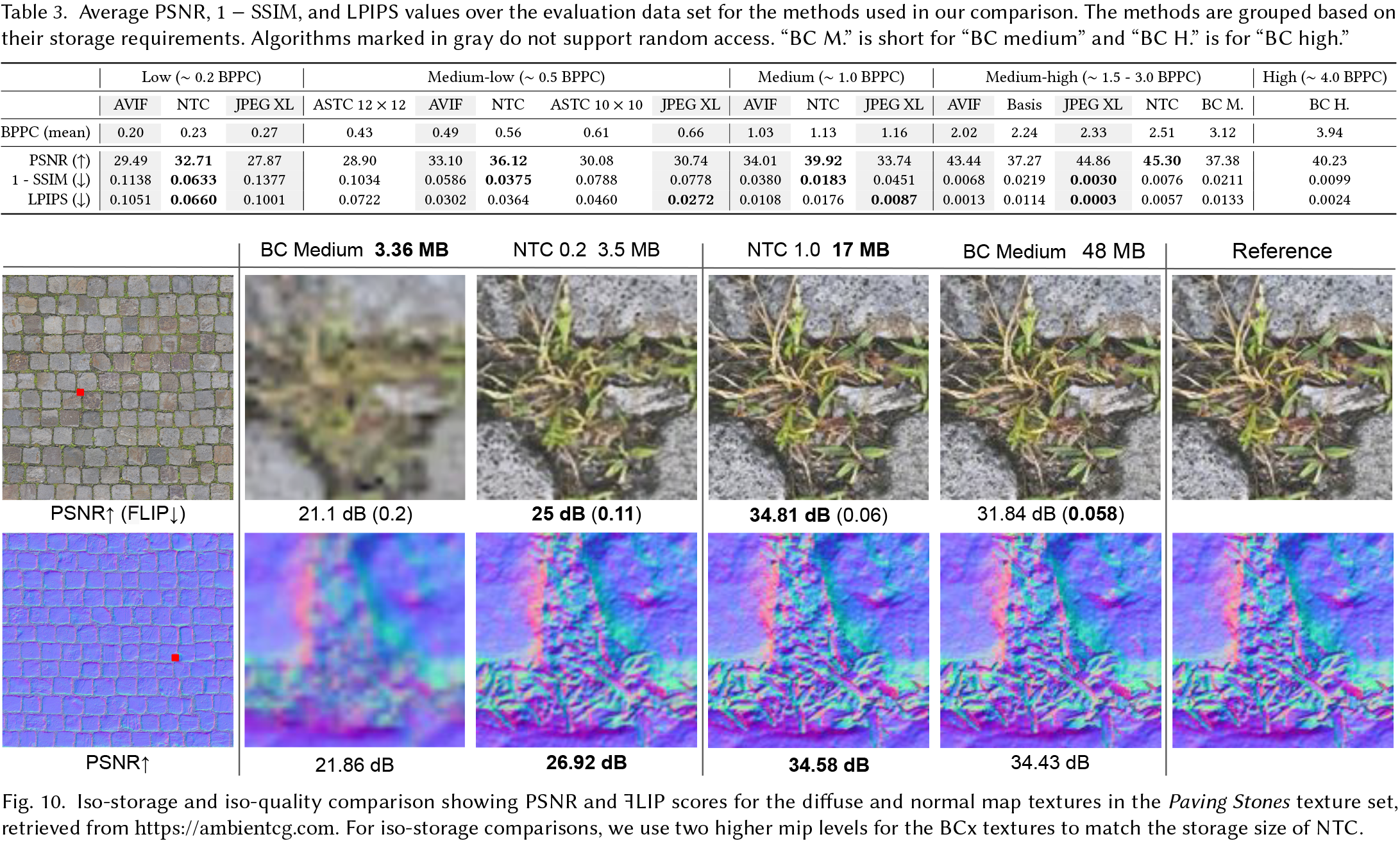

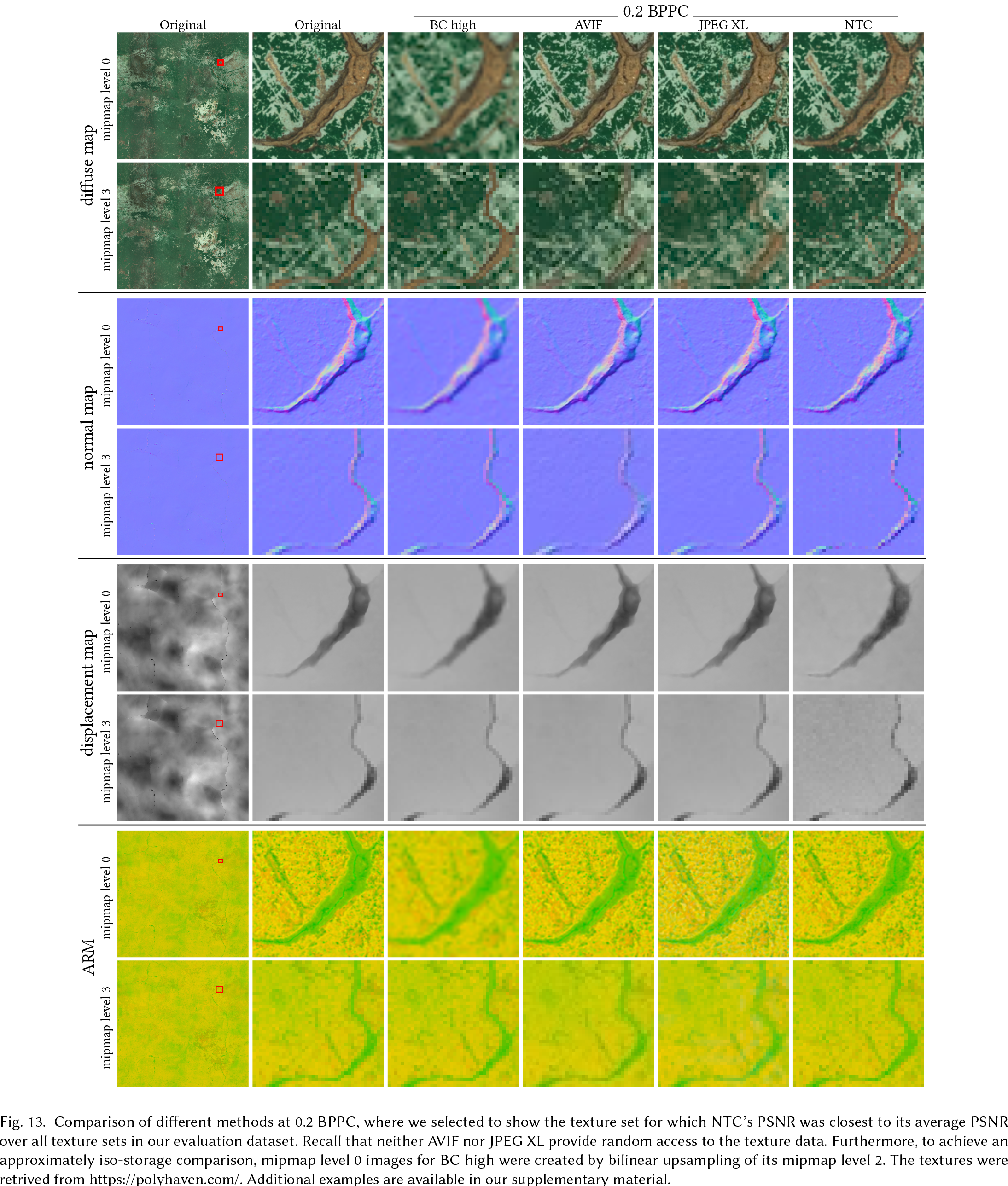

Nvidia says that the resulting texture quality at these aggressively low bitrates is comparable to or better than that of recent image compression standards, such as AVIF and JPEG XL, which are not designed for real-time decompression with random access anyway.

Practical Advantages and Disadvantages

Indeed, images demonstrated by Nvidia clearly show that NTC produces better results than traditional Block Coding-based technologies. However, Nvidia admits that its method is slower than traditional methods: it took a GPU 1.15 ms to render a 4K image with NTC textures and 0.49 ms to render a 4K image with BC textures. In exchange, NTC provides 16x more texels, albeit with stochastic filtering.
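To put the two timings in perspective, the quick calculation below uses only the 1.15 ms and 0.49 ms figures quoted above. The 60 fps frame budget is an assumption added for context, not something Nvidia states.

```python
NTC_MS = 1.15  # 4K frame with NTC textures (from Nvidia's figures)
BC_MS = 0.49   # 4K frame with BC textures (from Nvidia's figures)

overhead_ms = NTC_MS - BC_MS   # extra time per frame
slowdown = NTC_MS / BC_MS      # relative cost

# Assumption: a 60 fps target, i.e. roughly 16.67 ms per frame.
FRAME_BUDGET_MS = 1000 / 60
budget_share = overhead_ms / FRAME_BUDGET_MS

print(f"overhead: {overhead_ms:.2f} ms")          # overhead: 0.66 ms
print(f"slowdown: {slowdown:.2f}x")               # slowdown: 2.35x
print(f"share of 60 fps budget: {budget_share:.1%}")
```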

While NTC is more resource-intensive than conventional hardware-accelerated texture filtering, the results show that it delivers high performance and is suitable for real-time rendering. Moreover, in complex scenes using a fully-featured renderer, the cost of NTC can be partially offset by the simultaneous execution of other tasks (e.g., ray tracing) due to the GPU's ability to hide latency.

Meanwhile, rendering with NTC could be accelerated by new hardware architectures, a larger number of dedicated matrix-multiplication units, and increased cache sizes and register usage. Some of the optimizations can also be made at the programmable level.

Nvidia also admits that NTC is not a completely lossless method of texture compression and that it produces visual degradation at low bitrates. It also has some limitations, such as sensitivity to channel correlation, uniform resolution requirements, and limited benefits at larger camera distances. Furthermore, the advantages are proportional to channel count and may not be as significant for lower channel counts. Also, since NTC is optimized for material textures and always decompresses all material channels, it is potentially unsuitable for use in other rendering contexts.

While the advantage of NTC is that it does not use fixed-function texture filtering hardware to produce its superior results, this is also its key disadvantage. Without that hardware, texture filtering is computationally expensive, which is why anisotropic filtering with NTC is not feasible for real-time rendering for now. Meanwhile, stochastic filtering can introduce flickering.
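The trade-off behind stochastic filtering can be illustrated with a generic sketch (not Nvidia's implementation). Instead of blending four neighboring texels with bilinear weights, a stochastic filter fetches just one of them, chosen at random with probability equal to its bilinear weight. On average this matches bilinear filtering, but any single sample is noisy, which is where the flickering comes from. The 2 x 2 texel values and function names below are made up for the example.

```python
import random

def bilinear_weights(fx, fy):
    """(dx, dy) offsets of the 4 neighbors and their bilinear weights."""
    return [
        ((0, 0), (1 - fx) * (1 - fy)),
        ((1, 0), fx * (1 - fy)),
        ((0, 1), (1 - fx) * fy),
        ((1, 1), fx * fy),
    ]

def bilinear(texels, fx, fy):
    """Reference result: weighted blend of all four texels (4 fetches)."""
    return sum(w * texels[dy][dx] for (dx, dy), w in bilinear_weights(fx, fy))

def stochastic_sample(texels, fx, fy, rng):
    """One fetch: pick a single texel with probability equal to its weight."""
    offsets, weights = zip(*bilinear_weights(fx, fy))
    dx, dy = rng.choices(offsets, weights=weights, k=1)[0]
    return texels[dy][dx]

texels = [[0.0, 1.0],
          [2.0, 3.0]]  # made-up 2 x 2 neighborhood, indexed as texels[y][x]
rng = random.Random(0)

reference = bilinear(texels, 0.25, 0.5)
samples = [stochastic_sample(texels, 0.25, 0.5, rng) for _ in range(20000)]
average = sum(samples) / len(samples)

print(reference)  # 1.25
print(average)    # close to 1.25, but individual samples are 0, 1, 2 or 3
```

For a neural decoder this matters because each fetch is a full network evaluation, so one fetch per pixel instead of four (or many more for anisotropic filtering) is a large saving.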

Despite these limitations, NTC's compression of multiple channels and mipmap levels together produces a result that exceeds industry standards. Nvidia researchers believe that their approach paves the way for cinematic-quality visuals in real-time rendering and is practical for memory-constrained graphics applications. Yet it introduces a modest timing overhead compared to simple BTC algorithms, which impacts performance.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.