Intel Arc B580 "Battlemage" GPU boxes appear in shipping manifests — Xe2 may be preparing for its desktop debut in December

A December launch is on the cards.

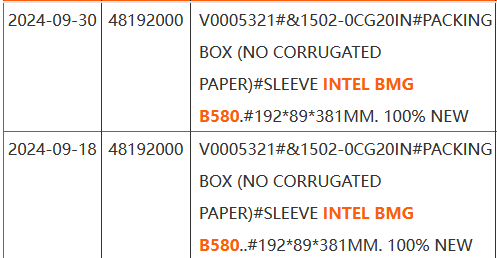

Yesterday, we reported that Intel might be gearing up to unleash Battlemage in early December, ahead of AMD's RDNA 4 and Nvidia's Blackwell GPUs. Today, a shipping manifest on NBD, highlighted by X user josefk972, indicates that Team Blue has been dispatching what appear to be retail boxes for a never-before-seen "BMG B580" card, likely the Battlemage-based Arc B580.

While a graphics card's retail box is probably the last thing you'd expect to see in a pre-launch leak, it does suggest that Intel is busy shipping these boxes to OEMs. Battlemage, or Xe2, debuted with Intel's Lunar Lake chips and held its own against AMD's Strix Point offerings. The drivers have improved greatly, at least compared to Alchemist, but a few kinks still need ironing out.

This manifest seems to have slipped past us, as it dates back to September 18. Intel has been sending out packaging or retail boxes measuring around 192mm x 89mm x 381mm for the Arc B580, a budget offering in the Battlemage lineup. The packaging is relatively small but should be enough to accommodate a compact dual-slot card.

The specifications remain unknown, although Battlemage is rumored to span three GPUs: BMG-G31, BMG-G21, and BMG-G10. BMG-G31 reportedly offers 32 Xe cores, which works out to 256 Xe Vector Engines (XVEs), or 4096 ALUs.
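The rumored figures for the 32-Xe-core part are at least internally consistent. Working backwards from them gives 8 Xe Vector Engines (XVEs) per Xe core and 16 ALUs per XVE; these ratios are inferred from the leaked numbers above rather than taken from an Intel spec sheet:

$$
32\ \text{Xe cores} \times 8\ \tfrac{\text{XVEs}}{\text{Xe core}} = 256\ \text{XVEs}, \qquad 256\ \text{XVEs} \times 16\ \tfrac{\text{ALUs}}{\text{XVE}} = 4096\ \text{ALUs}
$$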

Like AMD, Intel will target the budget gaming segment with desktop Xe2 to better penetrate the mainstream market. Team Blue needs to offer serious value and incentives, such as higher VRAM capacities and competitive pricing, to win the average consumer's trust. Initial leaks paint a positive picture: a dGPU (discrete GPU) with 20 Xe2 cores recently beat the 28-Xe-core Arc A750 in a benchmark. RDNA 4 will likely be the more mature architecture: it builds upon RDNA 3.5, Battlemage's rival in Strix Point, and is poised to bring substantial changes to ray tracing and AI capabilities.

With more leaks surfacing daily, a Battlemage announcement next month seems likely, but we still advise taking this rumor with a pinch of salt. It makes sense for Intel to capitalize on the holiday season and make hay while the sun shines, but for that to happen, it must be able to supply Battlemage in volume, at least until RDNA 4 and Blackwell hit shelves.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

-

rluker5: That can't be right, I kept hearing they were cancelled. Well, definitely cancelled after this gen, for sure this time.

JK, I plan to buy a Battlemage. Hopefully their high end will still come with 2-slot coolers. But to be fair, the novelty of seeing some things done worse and some better is probably worth more to me than to many others, and I can always spend more on a different 50 series if I have to. But I do expect pretty good performance for the price.

bit_user:

> rluker5 said: That can't be right, I kept hearing they were cancelled. Well, definitely cancelled after this gen, for sure this time.
>
> JK

I hope it comes back to bite the clowns who kept spreading those rumors. I'm sure it won't, but I'd really like to see some egg on their faces.

Eximo: I will be happy with 4070-like performance, which is the old rumor. It will replace my EVGA 3080 Ti so that can go into my collection.

Also, someone poke EVGA until they start making monitors. I want a monitor that says EVGA because that is hilarious.

User of Computers: BTM should have a better launch than ACM ever did, thanks to the more established drivers. LNL with the BMG uarch is already on the market, and the drivers have probably been cooking for much, much longer than that.

Mama Changa:

> Eximo said: I will be happy with 4070 like performance, which is the old rumor. It will replace my EVGA 3080 Ti so that can go into my collection.
>
> Also, someone poke EVGA until they start making monitors. I want a monitor that says EVGA because that is hilarious.

You're not getting that out of the B580 IMO. That would be at the very best from a G10 card like a B780, and I seriously doubt that will occur either. If you get regular 4070/7800XT performance, it'll be as good as it gets. It would need to be $400 max, but Intel can't really afford to give away these cards like they did with Alchemist.

P.Amini: Can we stop calling "Graphics Card" or "VGA" a GPU please? It's so freaking confusing and wrong, it's like calling a PC a CPU!

CelicaGT:

> P.Amini said: Can we stop calling "Graphics Card" or "VGA" a GPU please? It's so freaking confusing and wrong, it's like calling a PC a CPU!

While you are technically correct, GPU is heavily implanted in the PC enthusiast lexicon, dating back to the late 90's. I highly doubt you will see this change. Personally I use "graphics card" as it's the most correct term. GPU is the processor on the card specifically, and VGA is an out-of-date term that was also a standard at the time (mid-late 80's), generally referring to the cards of the time, as the term "GPU" does now. VGA, VGA cable, or VGA resolution are the terms I recall. D-sub was/is also referred to as a VGA port.

bit_user:

> CelicaGT said: While you are technically correct, GPU is heavily implanted in the PC enthusiast lexicon,

I think the issue is really that you can't buy a GPU separately from the graphics card it's on. Plus, virtually all functional aspects of the graphics card are determined by the GPU model, other than a little bit of variation in clock speeds and sometimes a choice between two memory capacities. That's how they end up being virtually synonymous.

> P.Amini said: it's like calling a PC a CPU!

By contrast, a CPU is quite distinct from the motherboard or most other aspects of a PC. Telling me which CPU model you have doesn't tell me about the memory capacity, the secondary storage, its graphics card (if any), etc. So, a CPU is more distinct from a PC than a GPU is from a graphics card. Still, even if the analogy is imperfect, I get your point.

I agree that the authors of this site should all know well enough to say "graphics card" when they're talking about the entire card, and GPU when they're talking about just its processing unit.

usertests:

> rluker5 said: That can't be right, I kept hearing they were cancelled. Well, definitely cancelled after this gen, for sure this time.
>
> bit_user said: I hope it comes back to bite the clowns who kept spreading those rumors. I'm sure it won't, but I'd really like to see some egg on their faces.

It's "effectively cancelled".