Jensen says DLSS 4 'predicts the future' to increase framerates without introducing latency (Updated: Nope)

Multi frame generation will be very different from framegen.

After the Blackwell RTX 50-series announcement last night, there's been some confusion. In a live Q&A session today with Nvidia CEO Jensen Huang, we were able to ask for clarification on DLSS 4 and some of the other neural rendering techniques that Nvidia demoed. With AI being a key element of the Blackwell architecture, it's important to better understand how it's being used and what that means for various use cases.

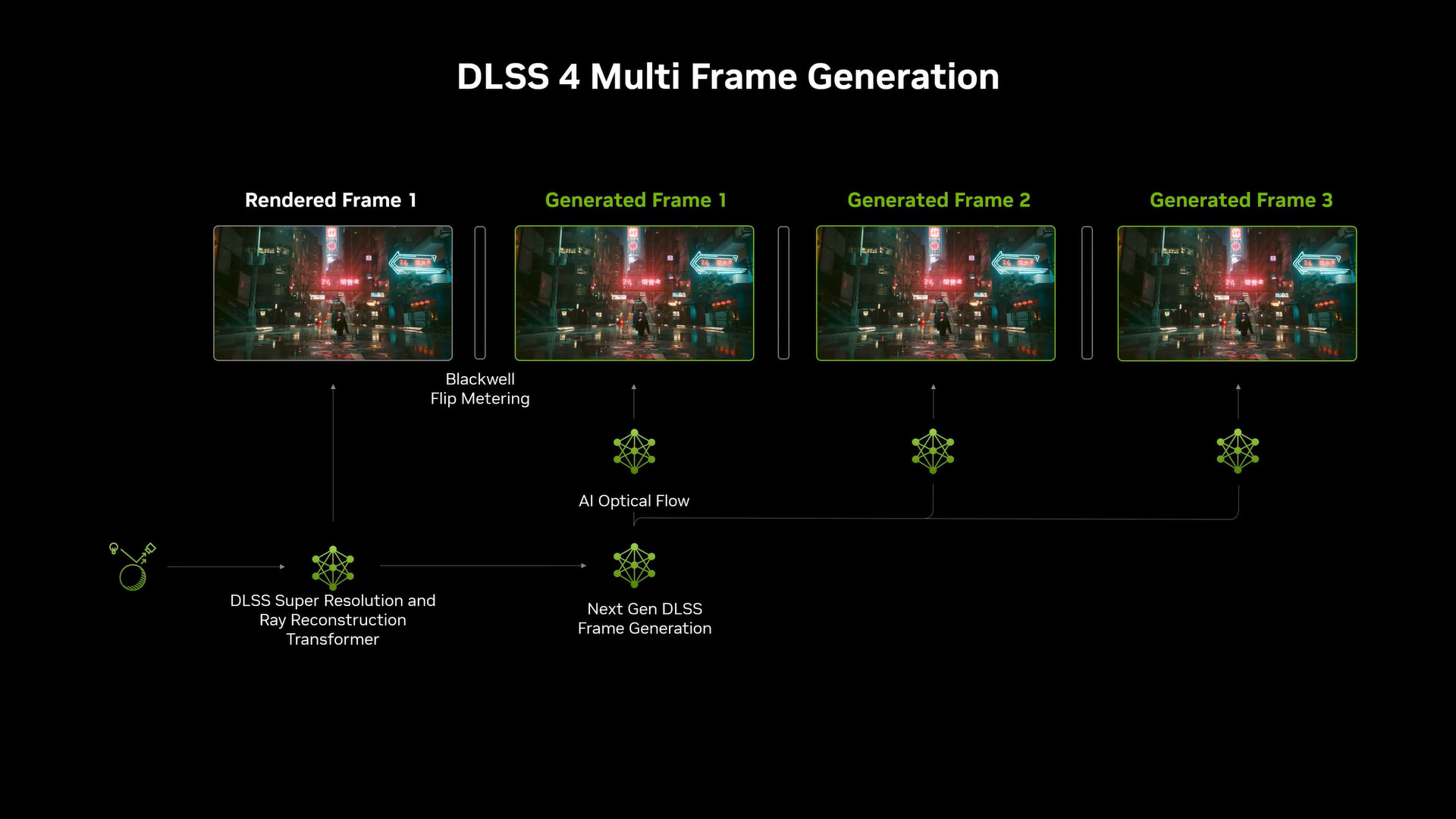

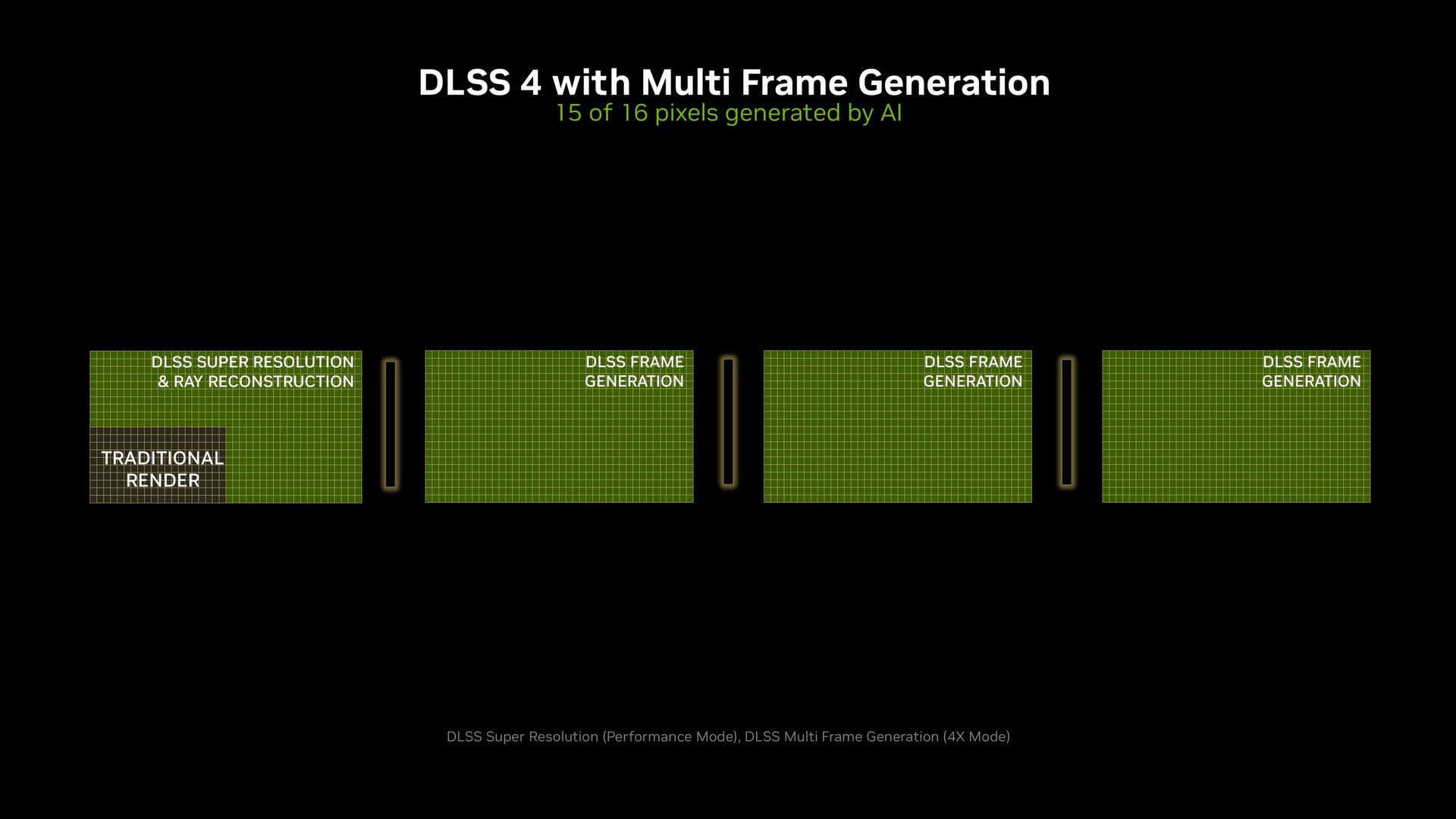

One of the big performance "multipliers" with DLSS 4 is multi frame generation. With DLSS 3, Nvidia would render two frames and then use AI to interpolate an intermediate frame. This adds some latency to the game rendering pipeline and also causes some frame pacing issues. On the surface, it sounded as though DLSS 4 would do something similar, but instead of generating one frame it would generate two or three frames — multiple frames of interpolation, in other words. It turns out that's incorrect.

Update: No, it's not. The initial understanding that multi frame generation uses interpolation is the correct one. That's all we can say for now, and hopefully this clears up any confusion. More details to come at a later date.
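To make the interpolation idea concrete, here's a minimal sketch of where generated frames would sit between two rendered frames. This is a simplified model, not Nvidia's actual algorithm: the function name `interpolated_positions` is hypothetical, and the even spacing between frames is our assumption.

```python
from fractions import Fraction


def interpolated_positions(generated_frames: int) -> list[Fraction]:
    """Return the fractional positions of generated frames between two
    rendered frames, where 0 is the older frame and 1 is the newer one.

    Assumption: generated frames are evenly spaced between the two
    rendered frames. Real frame pacing may differ.
    """
    steps = generated_frames + 1
    return [Fraction(i, steps) for i in range(1, steps)]


# One generated frame (DLSS 3 style) versus two or three (multi frame generation).
for n in (1, 2, 3):
    print(n, [str(p) for p in interpolated_positions(n)])
```

Because every generated frame lies between two frames that have already been rendered, the newer rendered frame has to exist before any of the in-between frames can be shown, which is where the added latency of interpolation comes from.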

When we asked how DLSS 4 multi frame generation works and whether it was still interpolating, Jensen boldly proclaimed that DLSS 4 "predicts the future" rather than "interpolating the past." That drastically changes how it works, what it requires in terms of hardware capabilities, and what we can expect in terms of latency.

There's still work being done without new user input, though the Reflex 2 warping functionality may at least partially mitigate that. But based on previously rendered frames, motion vectors, and other data, DLSS 4 will generate new frames to create a smoother experience. It also has new hardware requirements that help it maintain better frame pacing.

[We think the misunderstanding or incorrect explanation stems from the overlap between multi frame generation and Reflex 2. There's some interesting stuff going on with Reflex 2, which does involve prediction of a sort, and that probably got conflated with frame generation.]

We haven't been able to try it out in person yet, so we can't say for certain how DLSS 4 multi frame generation compares to DLSS 3 framegen and normal rendering. It sounds as though there's still a latency penalty, but how much that will be felt, and particularly how it feels on different tiers of RTX 50-series GPUs, is an important consideration.

We know from DLSS 3 that if you're only getting a generated FPS of 40 as an example, it can feel very sluggish and laggy, even if it looks reasonably smooth. That's because the user input gets sampled at 20 FPS. With DLSS 4, potentially that means you could have a generated framerate of 80 FPS with user sampling of 20 FPS. Or to put it in different terms, we've generally felt that you need sampling rates of at least 40–50 FPS for a game to feel responsive while using framegen.

With multi frame generation, that would mean we could potentially need generated framerates of 160–200 FPS for a similar experience. That might be great on a 240 Hz monitor and we'd love to see it, but by the same token multi frame generation on a 60 Hz or even 120 Hz monitor might not be that awesome.
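The arithmetic behind those numbers can be sketched in a few lines. This assumes the simple model used above: one rendered (input-sampled) frame per group of output frames, so a multiplier of 2 for DLSS 3 framegen and 4 for the highest multi frame generation mode. The function names are ours, for illustration only.

```python
def sampled_fps(generated_fps: float, multiplier: int) -> float:
    """Rate at which user input is sampled, given the displayed framerate.

    Assumption: only rendered frames sample input, and each rendered frame
    produces `multiplier` displayed frames in total.
    """
    return generated_fps / multiplier


def required_generated_fps(target_sampled_fps: float, multiplier: int) -> float:
    """Displayed framerate needed to reach a target input sampling rate."""
    return target_sampled_fps * multiplier


# DLSS 3 (2x): 40 FPS displayed means input is sampled at 20 FPS.
print(sampled_fps(40, 2))
# DLSS 4 (4x): 80 FPS displayed still means input is sampled at 20 FPS.
print(sampled_fps(80, 4))
# A 40-50 FPS sampling rate at 4x needs 160-200 FPS displayed.
print(required_generated_fps(40, 4), required_generated_fps(50, 4))
```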

Our other question was about the neural textures and rendering that were shown. Nvidia showed some examples of memory use of 48MB for standard materials, and slashed that to 16MB with "RTX Neural Materials." But what exactly does that mean, and how will this impact the gaming experience? We were particularly interested in whether or not there was potential to help GPUs that don't have as much VRAM, things like the RTX 4060 with 8GB of memory.

Unfortunately, Jensen says these neural materials will require specific implementation by content creators. He said that Blackwell has new features that allow developers and artists to put shader code intermixed with neural rendering instructions into materials. Material descriptions have become quite complex, and describing them mathematically can be difficult. But Jensen also said that "AI can learn how to do that for us."

Some of these new features may not be available on previous generation GPUs, as they don't have the required hardware capabilities to mix shader code with neural code. Or perhaps they'll work but won't be as performant. So to get the full benefit, you'll need a new 50-series GPU, and because neural materials require content-side work, developers will need to specifically adopt these new features.

That means, for one, that if we do end up with an RTX 5060 8GB card as an example, for a whole host of existing games, the 8GB of VRAM could still prove to be a limiting factor. It also means RTX 4060 and 4060 Ti with 8GB won't get a new lease on life thanks to neural rendering. Or at least, that's how we interpret things. But maybe an AI network can learn how to do some of these things for us in the future.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

Alvar "Miles" Udell Jensen used a lot of words to attempt to justify not using at least 16gb VRAM on everything above the 5060 and why it's not a bad thing there is very little on paper (and in practice perhaps) difference, outside of the Titan class 5090, for the 5000 series over the 4000 series.

Perhaps their 5% stock drop today is a result of that as well. -

Jame5 When did we just give up on, you know... actually rendering the frames that the game generates? Faking resolutions, making up frames that never existed, that doesn't sound like magical new tech. It makes it sound like you can't figure out how to make better hardware so you are trying to hide the fact with trickery. -

ingtar33 to this day i find it surreal how rare it is that journalists don't mention how bad DLSS looks visually.

this isn't tech anyone who actually plays games uses. (and just because the amd version looks worse, that doesn't excuse NVIDIA for pushing this shovelware at us.) -

redgarl Blackwell is offering 30% uplift in raster performances... 30%...

It is the worst generational uplift ever from Nvidia...

https://i.ibb.co/4fks6Gt/reality.jpg -

redgarl

The market is down 2%... it is not a significant indicator of anything. CES is not the prime focus of investors. Investors care about the datacenter and AI chips revenue.

ingtar33 said: to this day i find it surreal how rare it is that journalists don't mention how bad DLSS looks visually.

this isn't tech anyone who actually plays games uses. (and just because the amd version looks worse, that doesn't excuse NVIDIA for pushing this shovelware at us.) -

OllyR "Jensen says DLSS 4 "predicts the future" to increase framerates without introducing latency" ...and just as he was talking about the card that can "predicting the future" without introducing (or increasing latency)...latency everywhere...lol

DLSS 4 on Nvidia RTX 5080 First Look: Super Res + Multi Frame-Gen on Cyberpunk 2077 RT Overdrive! -

hotaru251 if it does "predict the future" it will be impossible to use in games where you can cancel what would happen. -

Heiro78

This is exactly it. When Jensen said Moore's law (not a real law and more a word of mouth accepted concept) was dead more than 2 years ago, he was hinting at hardware advancements not keeping the same pace as in the past. So Nvidia is trying two different things. Use machine learning to increase performance (DLSS) and create an alternate method of rendering for people to focus on (i.e. Ray tracing). There is the physics limit to how small the transistors can get before electrons don't "flow" how we expect them to. I remember reading somewhere it's smaller than a nanometer.

Jame5 said: It makes it sound like you can't figure out how to make better hardware so you are trying to hide the fact with trickery. -

umeng2002_2 Even with Reflex and great frame pacing, you need to be able to render 35+ "real" FPS natively for DLSS Frame Generation to feel good enough outputting at 70+ FPS.

Who knows if their warping tech can bring that down. Obviously, the new 4x frame gen will really really only be for high refresh rate monitors.

This why RT performance is still important. The only time I'd use frame gen is to cover for the performance impact of RT/ PT. Get the RT performance up, and you don't really need FG. -

HyperMatrix

ingtar33 said: to this day i find it surreal how rare it is that journalists don't mention how bad DLSS looks visually.

this isn't tech anyone who actually plays games uses. (and just because the amd version looks worse, that doesn't excuse NVIDIA for pushing this shovelware at us.)

I’m on 4K and have a water cooled overclocked 666W 4090 and I use both DLSS and also Frame Gen when the implementation isn’t broken in combination with DLDSR. It’s an amazing tech and I look forward to seeing how this new version works. DLDSR + DLSS Quality provide superior detail and anti-aliasing vs. Native rendering.