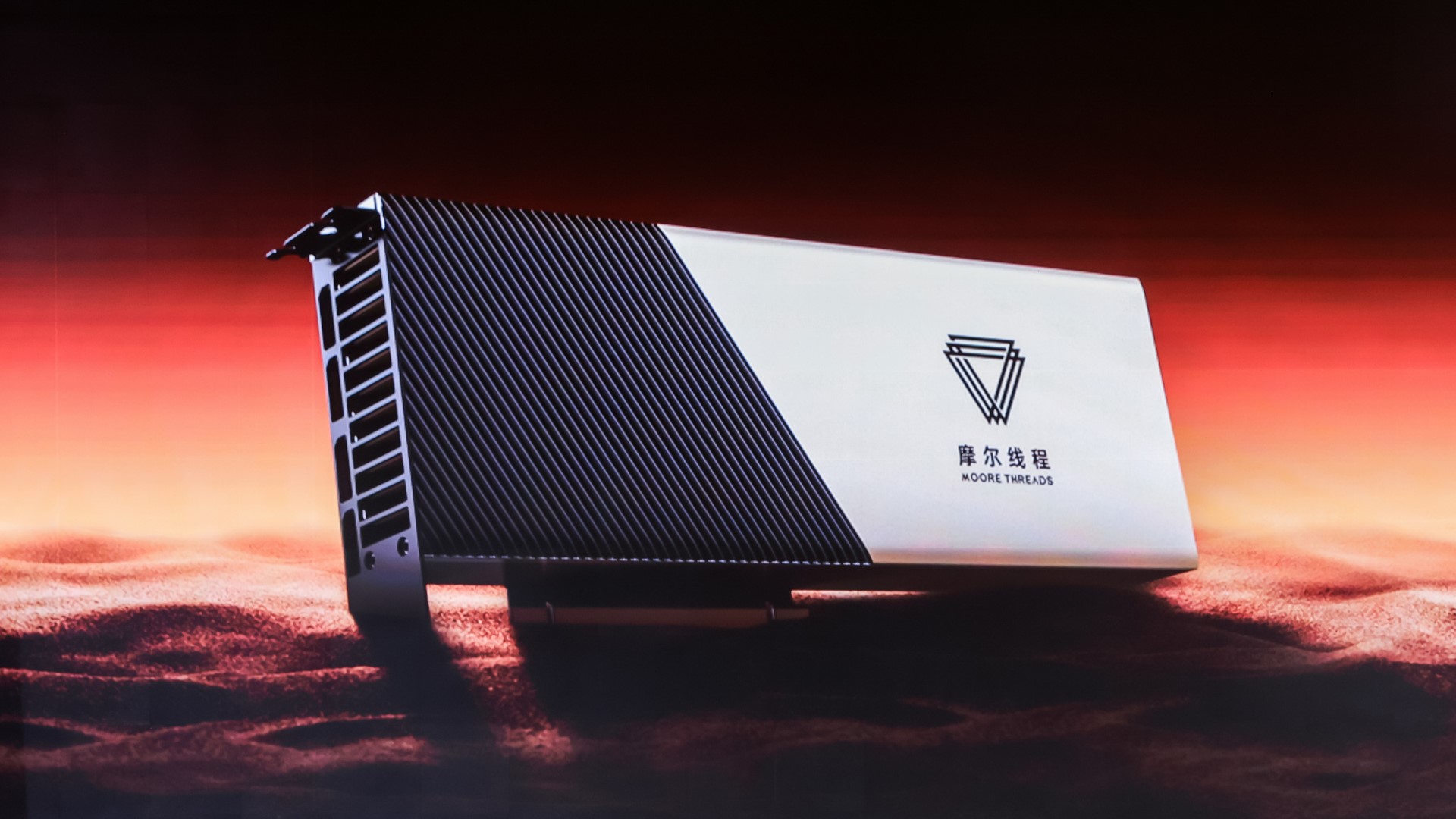

Nvidia's biggest Chinese competitor unveils cutting-edge new AI GPUs — Moore Threads S4000 AI GPU and Intelligent Computing Center server clusters using 1,000 of the new AI GPUs

Beefy clusters with 200 petaops of AI compute.

Chinese GPU manufacturer Moore Threads announced the MTT S4000, its latest graphics card for AI and data center compute workloads. The company's brand-new flagship will feature in the KUAE Intelligent Computing Center, a data center containing clusters of 1,000 S4000 GPUs each. Moore Threads is also partnering with many other Chinese companies, including Lenovo, to get its KUAE hardware and software ecosystem off the ground.

| GPU | MTT S4000 | MTT S3000 | MTT S2000 |
|---|---|---|---|
| Architecture | 3rd gen MUSA | 1st gen MUSA | 1st gen MUSA |
| SPUs (GPU cores) | ? | 4096 | 4096 |
| Core Clock | ? | 1.8–1.9 GHz | ~1.3 GHz |
| FP32 (TFLOPS) | 25 | 15.2 | 10.6 |
| INT8 (TOPS) | 200 | 57.6 | 42.4 |
| Memory Capacity | 48GB GDDR6 | 32GB | 32GB |
| Memory Bus Width | 384-bit | 256-bit | 256-bit |
| Memory Bandwidth | 768 GB/s | 448 GB/s | ? |
| TDP | ? | 250W | 150W |
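The table's figures hang together under two standard rules of thumb: memory bandwidth equals bus width in bytes times the per-pin data rate, and peak FP32 equals cores × 2 FLOPs (one fused multiply-add) × clock. The sketch below backs out the per-pin GDDR6 data rates the table implies (these speeds are inferred, not quoted by Moore Threads) and assumes a 1.85 GHz midpoint for the S3000's 1.8–1.9 GHz clock range.

```python
# Figures from the spec table above. The 1.85 GHz S3000 clock is an assumed
# midpoint of its quoted 1.8-1.9 GHz range, not an official number.
def implied_data_rate_gbps(bus_bits: int, bandwidth_gbs: float) -> float:
    """Per-pin memory data rate (Gbit/s) implied by bus width and total bandwidth (GB/s)."""
    bus_bytes = bus_bits / 8  # bytes moved per transfer across the whole bus
    return bandwidth_gbs / bus_bytes


def peak_fp32_tflops(cores: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Peak FP32 TFLOPS, assuming one fused multiply-add (2 FLOPs) per core per clock."""
    return cores * flops_per_clock * clock_ghz / 1000


print(f"S4000 memory: ~{implied_data_rate_gbps(384, 768):.0f} Gbps per pin")
print(f"S3000 memory: ~{implied_data_rate_gbps(256, 448):.0f} Gbps per pin")
print(f"S3000 FP32:   ~{peak_fp32_tflops(4096, 1.85):.1f} TFLOPS")
print(f"S2000 FP32:   ~{peak_fp32_tflops(4096, 1.3):.1f} TFLOPS")
```

The results (16 and 14 Gbps per pin; 15.2 and 10.6 TFLOPS) line up with the table, which suggests the S4000 pairs its 384-bit bus with 16 Gbps GDDR6.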

Although Moore Threads didn't reveal everything there is to know about its S4000 GPU, it's certainly a major improvement over the S2000 and S3000. Compared to the S2000, the S4000 has over twice the FP32 performance, nearly five times the INT8 performance, 50% more VRAM, and presumably much more memory bandwidth, too: its 768 GB/s is already about 70% more than the S3000's 448 GB/s. The new flagship also uses the third-generation MUSA (Moore Threads Unified System Architecture), while the S2000 and S3000 use the first generation.
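The generational claims are easy to check against the table. This minimal sketch divides each S4000 figure by the matching S2000 and S3000 figure; memory bandwidth is left out because the S2000's number is unknown.

```python
# FP32 TFLOPS, INT8 TOPS, and memory capacity (GB), copied from the spec table.
S4000 = {"fp32_tflops": 25.0, "int8_tops": 200.0, "memory_gb": 48}
S3000 = {"fp32_tflops": 15.2, "int8_tops": 57.6, "memory_gb": 32}
S2000 = {"fp32_tflops": 10.6, "int8_tops": 42.4, "memory_gb": 32}


def uplift(new: dict, old: dict) -> dict:
    """Ratio of each spec on the new card to the same spec on the old card."""
    return {key: new[key] / old[key] for key in new}


for name, old in (("S2000", S2000), ("S3000", S3000)):
    ratios = uplift(S4000, old)
    print(f"S4000 vs {name}:", {k: round(v, 2) for k, v in ratios.items()})
```

Against the S2000 this gives about 2.36× the FP32 throughput, 4.72× the INT8 throughput, and 1.5× the memory.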

(Disclaimer: Moore Threads lists both the S2000 and S3000 as "First Generation MUSA," but others have said the S2000 and S80 use a "second generation Chunxiao architecture." It would make more sense for the S3000 to be "second generation," since Moore Threads specifically calls the S4000 "third generation," though there is no product page for the S4000 yet.)

Compared to models from Nvidia, the S4000 is better than the Turing-based Tesla server GPUs from 2018 but still behind Ampere and Ada Lovelace, which launched in 2020 and 2022, respectively. The S4000 is especially lacking in raw horsepower, but it still has quite a bit of memory capacity and bandwidth, which may come in handy for the AI and large language model (LLM) workloads Moore Threads envisions its flagship will be used for.

The S4000 also has the GPU-to-GPU interconnect that clustering depends on, with a 240 GB/s card-to-card data link and RDMA support. That is a far cry from the 900 GB/s NVLink offers on Hopper, but the S4000 is presumably a much weaker GPU, so that much bandwidth would likely be overkill.
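To put the two link speeds in concrete terms, this sketch estimates how long it would take to move one S4000's full 48 GB of memory to another card. It is idealized: it assumes the link sustains its full rated bandwidth with no protocol overhead or latency, and it doesn't account for whether the S4000's 240 GB/s figure is per direction or bidirectional, which isn't stated.

```python
def transfer_seconds(payload_gb: float, link_gbs: float) -> float:
    """Idealized time to move a payload over a link at its full rated bandwidth."""
    return payload_gb / link_gbs


S4000_LINK_GBS = 240.0     # Moore Threads' quoted card-to-card figure
NVLINK_HOPPER_GBS = 900.0  # NVLink bandwidth on Hopper, as cited in the text
PAYLOAD_GB = 48.0          # one S4000's full 48 GB of memory

for name, bw in (("S4000 link", S4000_LINK_GBS), ("NVLink (Hopper)", NVLINK_HOPPER_GBS)):
    print(f"{name}: {transfer_seconds(PAYLOAD_GB, bw) * 1000:.0f} ms")

print(f"S4000 link bandwidth is {S4000_LINK_GBS / NVLINK_HOPPER_GBS:.0%} of NVLink's")
```

Under these assumptions the transfer takes about 200 ms on the S4000 link versus about 53 ms over NVLink, with the S4000 link at roughly 27% of NVLink's bandwidth.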

Alongside the S4000, Moore Threads also revealed its KUAE Intelligent Computing Center. The company describes it as a "full-stack solution integrating software and hardware," with the full-featured S4000 GPU as the centerpiece. KUAE clusters use MCCX D800 GPU servers, which each have eight S4000 cards. Moore Threads says each KUAE Kilocard Cluster has 1,000 GPUs, which means a total of 125 MCCX D800 servers per cluster.
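The cluster figures follow from simple multiplication, which is also where the headline's 200 petaops comes from. This sketch uses only the per-card peak numbers from the spec table and assumes exactly 1,000 GPUs per cluster; real sustained throughput would be lower, since it ignores utilization and interconnect overhead.

```python
GPUS_PER_CLUSTER = 1000  # one "KUAE Kilocard Cluster"
GPUS_PER_SERVER = 8      # S4000 cards per MCCX D800 server

# Per-card S4000 peak figures from the spec table.
INT8_TOPS_PER_GPU = 200
FP32_TFLOPS_PER_GPU = 25
MEMORY_GB_PER_GPU = 48

servers, leftover = divmod(GPUS_PER_CLUSTER, GPUS_PER_SERVER)
if leftover:
    raise ValueError("cluster size is not a whole number of servers")

# Aggregate peak figures, scaled from tera to peta (and from GB to TB).
int8_petaops = GPUS_PER_CLUSTER * INT8_TOPS_PER_GPU / 1000
fp32_petaflops = GPUS_PER_CLUSTER * FP32_TFLOPS_PER_GPU / 1000
memory_tb = GPUS_PER_CLUSTER * MEMORY_GB_PER_GPU / 1000

print(f"MCCX D800 servers per cluster: {servers}")
print(f"Peak INT8: {int8_petaops:.0f} petaops")
print(f"Peak FP32: {fp32_petaflops:.0f} petaflops")
print(f"Total GPU memory: {memory_tb:.0f} TB")
```

That works out to 125 servers, 200 petaops of peak INT8, 25 petaflops of peak FP32, and 48 TB of GPU memory per cluster.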

On the software side, Moore Threads claims KUAE supports mainstream large language models like GPT and frameworks like DeepSpeed. The company's MUSIFY tool apparently allows the S4000 to work with the CUDA software ecosystem based on Nvidia GPUs, which saves Moore Threads and China's software industry from having to reinvent the wheel.

A KUAE cluster can apparently train a large AI model in a month or two, though the exact time depends heavily on the model. For instance, Moore Threads says the Aquila2 model with 70 billion parameters takes 33 days to train, while bumping the parameter count up to 130 billion increases training time to 56 days.
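The two quoted runs hint that, in Moore Threads' numbers, training time grows somewhat more slowly than parameter count. This sketch only compares the two data points given; Moore Threads didn't state the dataset size (token count) or cluster utilization for either run, so the comparison assumes those were similar, which may not be the case.

```python
# Moore Threads' quoted KUAE training times for Aquila2.
RUNS = {70: 33, 130: 56}  # parameters (billions) -> training time (days)

(small_p, small_d), (large_p, large_d) = sorted(RUNS.items())
param_ratio = large_p / small_p  # how much bigger the larger model is
time_ratio = large_d / small_d   # how much longer it takes to train

print(f"Parameters grow {param_ratio:.2f}x, training time grows {time_ratio:.2f}x")
for params, days in sorted(RUNS.items()):
    print(f"{params}B: {days / params:.3f} days per billion parameters")
```

Roughly 1.86× the parameters takes about 1.70× as long, or about 0.47 versus 0.43 days per billion parameters.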

Supporting this kind of hardware and software ecosystem would be challenging for any company, but it would be nearly impossible for Moore Threads to go it alone, especially after it had to lay off many of its employees. That's presumably why the company has established the Intelligent Computing and Large Model Ecological Alliance, a partnership between Moore Threads and several other Chinese companies. The domestic Chinese GPU manufacturer most notably got support from Lenovo, which has an international presence as well.

Although Moore Threads certainly won't be going toe-to-toe with the likes of Nvidia, AMD, or Intel any time soon, that isn't entirely necessary for China. US sanctions have prevented the export of powerful GPUs to China, which has not only given China's native semiconductor industry a good reason to exist but also weakened the competition for companies like Moore Threads and its competitor Biren. Compared to Nvidia's China-specific cards, the S4000 and KUAE might have good odds.


Matthew Connatser is a freelance writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


bit_user

The S2000 was found to be based on IP licensed from Imagination Technologies. I wonder if the S4000 is as well. If so, how are they doing at addressing the performance problems?

TCA_ChinChin

bit_user said: The S2000 was found to be based on IP licensed from Imagination Technologies. I wonder if the S4000 is as well. If so, how are they doing at addressing the performance problems?

I imagine the S4000 isn't really a great challenger to recent Nvidia offerings because of the limitations of the Imagination Tech IP. Maybe they'll improve on it with more iterations, but I'm not getting my hopes up. It'll probably be fine for smaller-scale, lower-performance compute and AI, but I can't see them surpassing the performance of the sanctioned GPUs anytime soon.

I'm curious: Lenovo is a Chinese company, and yet it's allowed to sell the latest tech. Why is that?


umeng2002_2

I wish all competitors the best of luck. Competition benefits everyone but the leader's stock value.

das_stig

JTWrenn said: Sorry, but 2019 tech is not cutting edge. That is a clickbait title.

You're wrong. For China, this is a big step up in homegrown technology and shows that they are catching up with Western technology to become self-sufficient. They don't have to be better, just as good, and once that happens, you can guarantee they will flood Western markets with cheap, mass-produced data center technology, like they do in all the other markets.

thullet

Nvidia A40 48GB seems to be 695 GB/s, 299 TOPS INT8. With this 48GB 768 GB/s 200 TOPS INT8 they kinda got a direct replacement depending on TDP. Would be really nice to know the price, might be a good option for hobbyists elsewhere too.
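The comparison in that comment can be quantified with a quick sketch that uses only the figures quoted there for the A40 and the S4000's table specs. TDP and price are unknown for the S4000, so neither is included.

```python
# S4000 figures from the spec table; A40 figures as quoted in the comment above.
S4000 = {"memory_gb": 48, "bandwidth_gbs": 768, "int8_tops": 200}
A40 = {"memory_gb": 48, "bandwidth_gbs": 695, "int8_tops": 299}

# Ratio of each S4000 spec to the corresponding A40 spec.
ratios = {spec: S4000[spec] / A40[spec] for spec in S4000}

for spec, ratio in ratios.items():
    print(f"{spec}: S4000 is {ratio:.2f}x the A40")
```

By these numbers the S4000 matches the A40's capacity, has about 10% more bandwidth, and offers roughly two-thirds of its INT8 throughput.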

bit_user

thullet said: Nvidia A40 48GB seems to be 695 GB/s, 299 TOPS INT8. With this 48GB 768 GB/s 200 TOPS INT8 they kinda got a direct replacement depending on TDP. Would be really nice to know the price, might be a good option for hobbyists elsewhere too.

If you live in a non-sanctioned country, then your best option at that level is an Nvidia RTX 6000.

https://www.nvidia.com/en-us/design-visualization/rtx-6000/

48 GB, 960 GB/s, and 1457 TFLOPS (FP8 w/ sparsity) in 300 W. Granted, they're not cheap. $6800, if ordered directly from Nvidia.