Nvidia Announces Tesla T4 GPUs With Turing Architecture

Nvidia CEO Jensen Huang took to the stage at GTC Japan to announce the company's latest advancements in AI, which include the new Tesla T4 GPU. This new GPU, which Nvidia designed for inference workloads in hyperscale data centers, leverages the same Turing microarchitecture as Nvidia's forthcoming GeForce RTX 20-series gaming graphics cards.

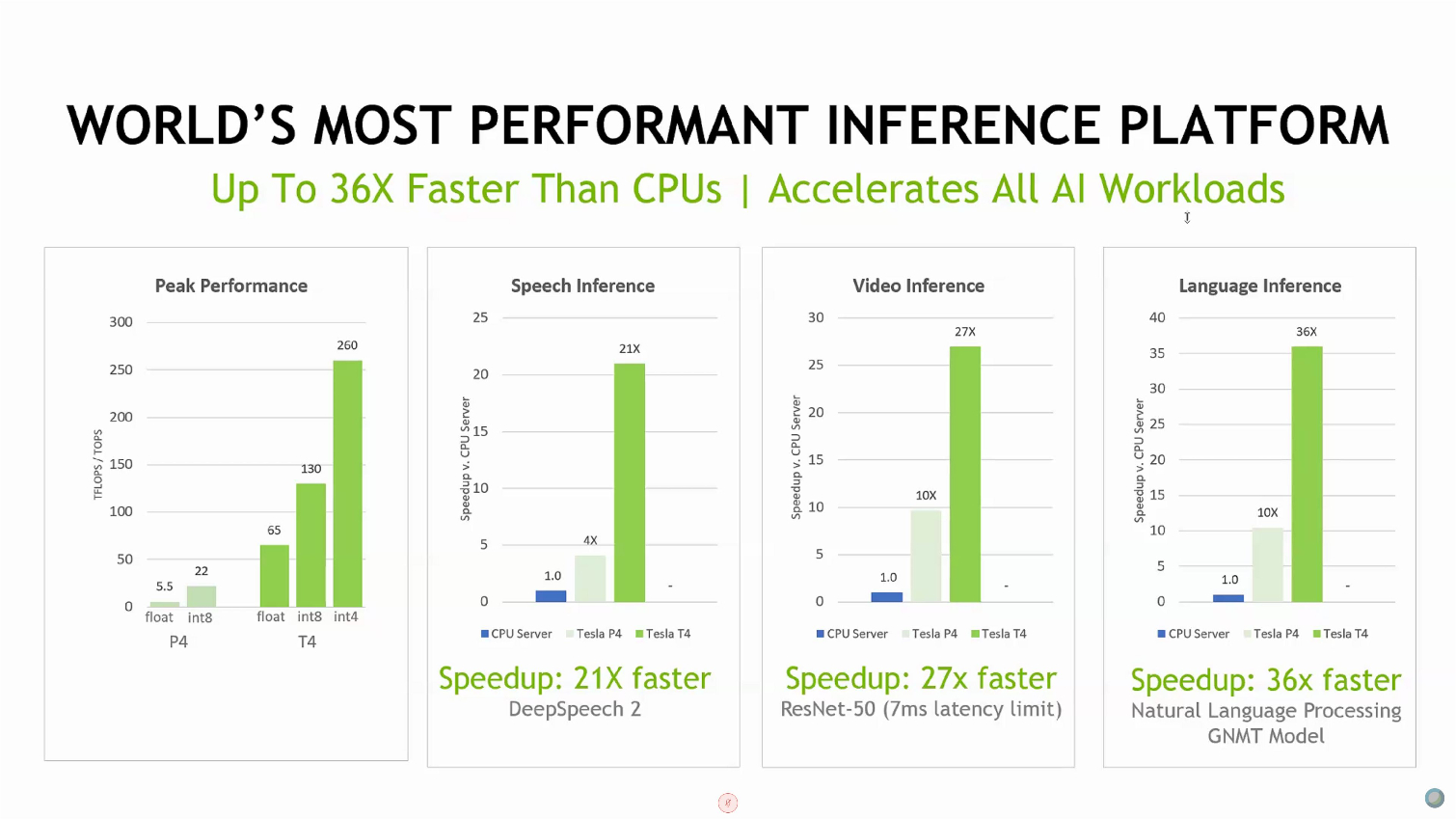

But the Tesla T4 is a unique graphics card designed specifically for AI inference workloads, like neural networks that process video, speech, search engines, and images. Nvidia's previous-gen Tesla P4 filled this role, but Nvidia claims the new model offers up to 12 times the performance within the same power envelope, possibly setting a new bar for power efficiency in inference workloads.

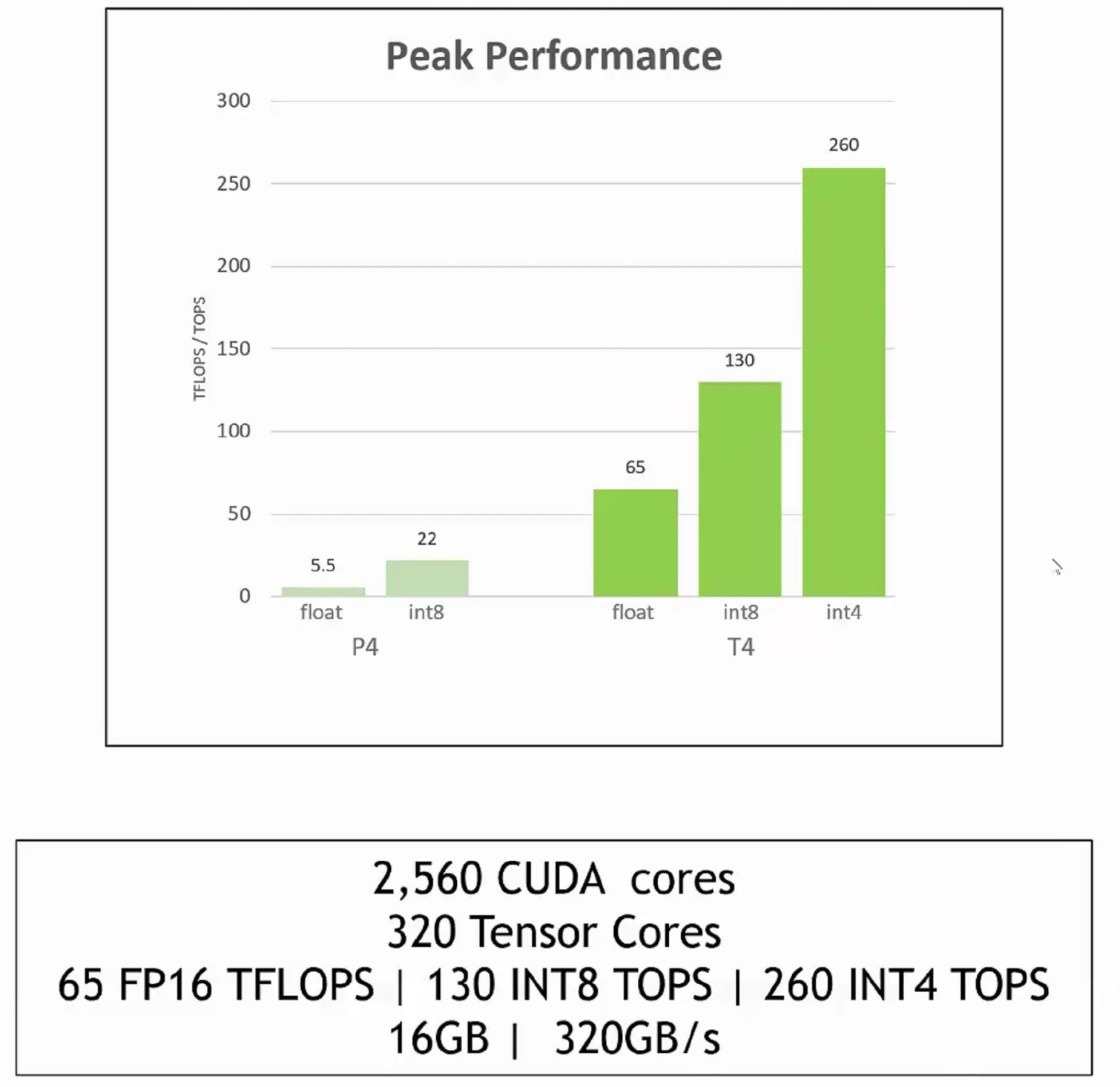

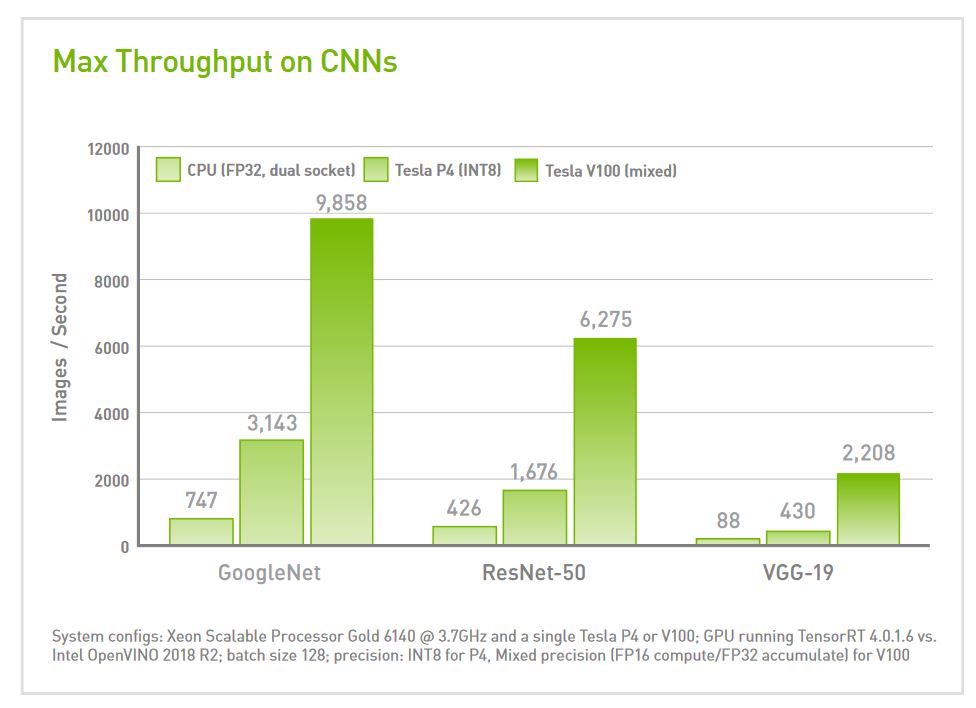

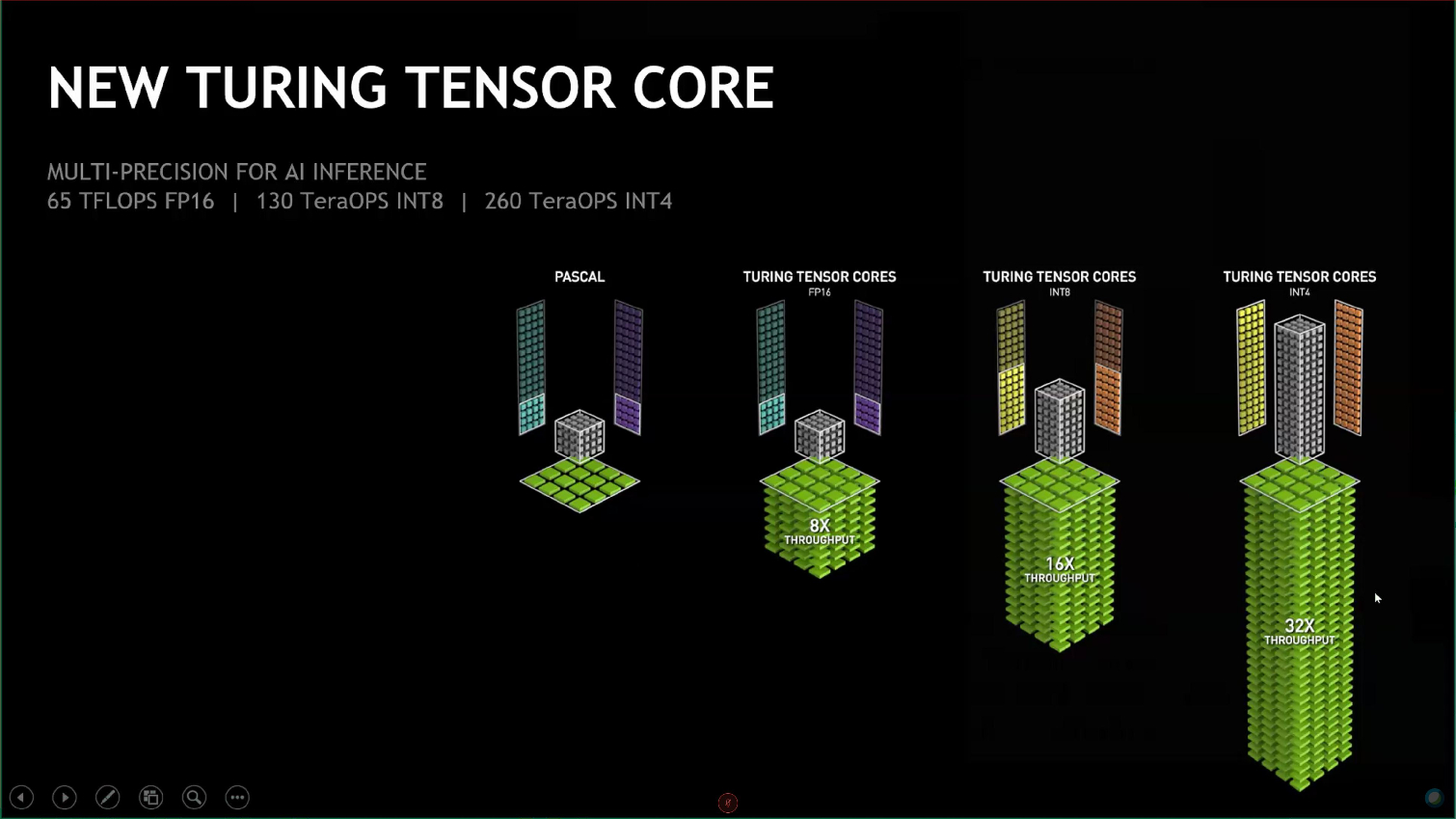

| GPU | FP16 (TFLOPS) | INT8 (TOPS) | INT4 (TOPS) |
| --- | --- | --- | --- |
| Nvidia Tesla T4 | 65 | 130 | 260 |
| Nvidia Tesla P4 | 5.5 | 22 | - |
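To put the table in perspective, here is a minimal Python sketch that computes the T4-over-P4 ratio for each precision. The dictionary and function names are our own, and the numbers are simply the peak figures listed above, so treat the result as back-of-the-envelope arithmetic rather than a benchmark.

```python
# Peak throughput figures copied from the table above
# (trillions of operations per second at each precision).
T4 = {"FP16": 65.0, "INT8": 130.0, "INT4": 260.0}
P4 = {"FP16": 5.5, "INT8": 22.0}  # the table lists no INT4 figure for the P4


def speedup(precision):
    """Return the T4-over-P4 ratio, or None when the P4 has no figure."""
    if precision not in P4:
        return None
    return T4[precision] / P4[precision]


for name in T4:
    ratio = speedup(name)
    print(name, "n/a" if ratio is None else f"{ratio:.1f}x")
```

The FP16 ratio works out to roughly 11.8x, which lines up with Nvidia's "up to 12 times" claim, while the INT8 ratio is closer to 5.9x. Because both cards are rated at 75W, the performance-per-watt ratios are the same as the raw ratios.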

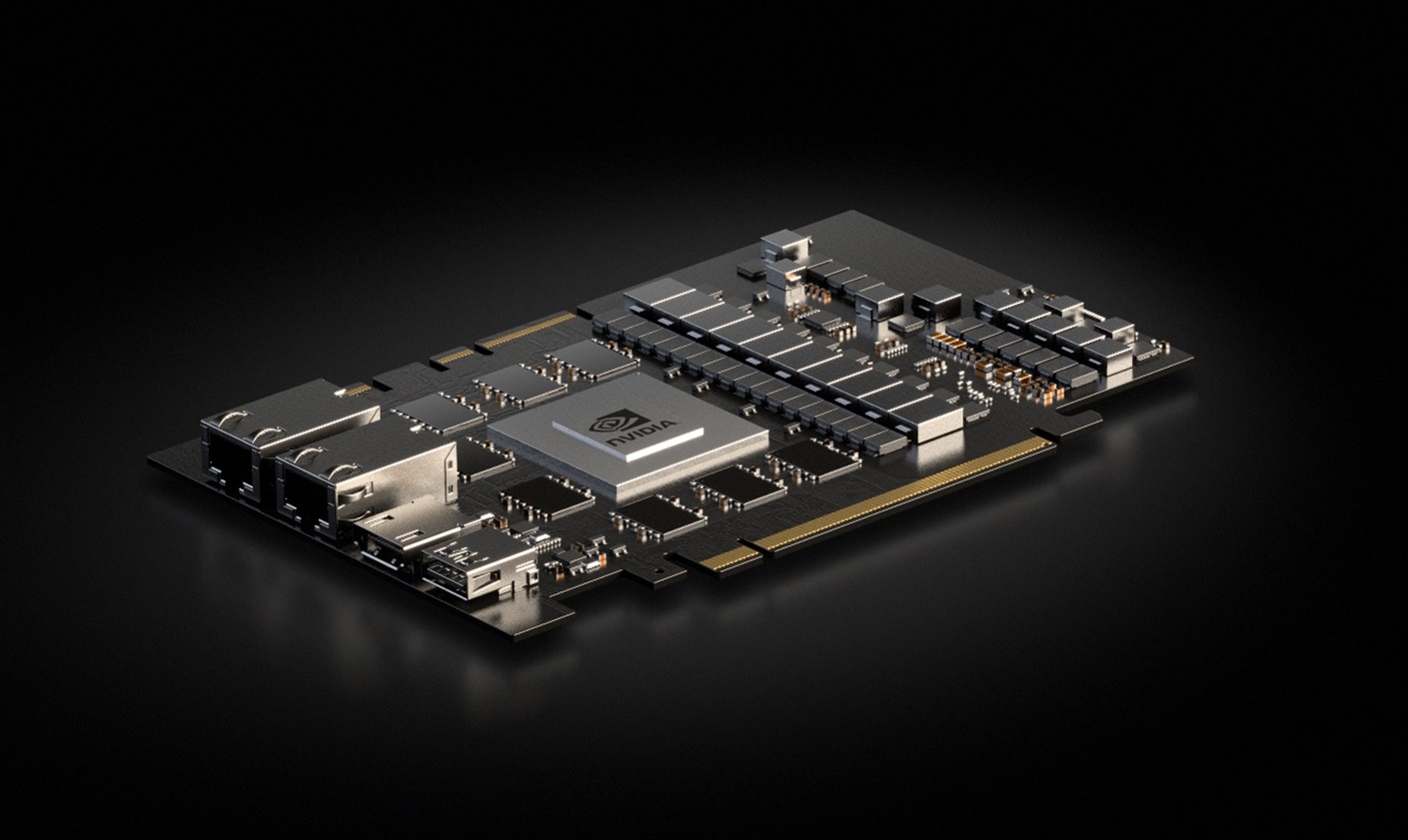

The Tesla T4 GPU comes equipped with 16GB of GDDR6 that provides up to 320GB/s of bandwidth, 320 Turing Tensor cores, and 2,560 CUDA cores. The T4 features 40 SMs enabled on the TU104 die to optimize for the 75W power profile.
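As a quick sanity check on these figures, the per-SM breakdown and the efficiency they imply can be derived in a few lines of Python. The constant names are our own; the INT8 figure and the 75W rating come from elsewhere in this article, and the efficiency number assumes the card actually sustains its peak rate at full board power, which real workloads may not.

```python
# Figures quoted in the article for the Tesla T4.
CUDA_CORES = 2560
TENSOR_CORES = 320
ENABLED_SMS = 40
BOARD_POWER_W = 75
INT8_PEAK_TOPS = 130

# Cores per streaming multiprocessor (SM).
cuda_per_sm = CUDA_CORES // ENABLED_SMS
tensor_per_sm = TENSOR_CORES // ENABLED_SMS

# Theoretical peak efficiency; an upper bound, not a measured value.
int8_tops_per_watt = INT8_PEAK_TOPS / BOARD_POWER_W

print(f"{cuda_per_sm} CUDA cores and {tensor_per_sm} Tensor cores per SM")
print(f"{int8_tops_per_watt:.2f} peak INT8 TOPS per watt")
```

That is 64 CUDA cores and 8 Tensor cores per SM, and a theoretical peak of about 1.73 INT8 TOPS per watt.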

The GPU supports mixed-precision operation across FP32, FP16, and INT8 (performance figures above). The Tesla T4 also features INT4 and (experimental) INT1 precision modes, which is a notable advancement over its predecessor.
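For readers unfamiliar with reduced-precision inference, the sketch below shows the basic idea behind running a network in INT8: floating-point values are mapped to 8-bit integer codes that share a single scale factor. This is a generic symmetric quantization scheme written in plain Python for illustration. It is not Nvidia's or TensorRT's actual calibration method, and the function names and sample weights are hypothetical.

```python
def quantize_int8(values):
    """Map floats to int8 codes using one shared scale (symmetric, per-tensor)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the int8 codes."""
    return [c * scale for c in codes]


weights = [0.02, -1.27, 0.5, 0.9999]  # made-up example values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(codes)
print(max_error <= scale / 2 + 1e-12)
```

Each value is stored in a quarter of the space FP32 needs, at the cost of a rounding error of at most half the scale factor. Lower-precision modes such as INT4 push the same trade-off further.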

Like its predecessor, the low-profile Tesla T4 consumes just 75 watts and slots into a standard PCIe slot in servers, and it doesn't require an external power source (like a 6-pin connector). The card's low-power design also doesn't require active cooling (like a fan); the high linear airflow inside a typical server will suffice. Nvidia tells us that the die does come equipped with RT Cores, just like the desktop models, but that they will be useful for ray tracing or VDI (Virtual Desktop Infrastructure), implying they won't be used for most inference workloads.

The Tesla T4 also features optimizations for AI video applications. These are powered by hardware transcoding engines that provide twice the performance of the Tesla P4. Nvidia says the cards can decode up to 38 full-HD video streams simultaneously.

Nvidia's TensorRT Hyperscale platform is a collection of technologies wrapped around the T4. As expected, the card supports all the major deep learning frameworks, such as PyTorch, TensorFlow, MXNet, and Caffe2. Nvidia also offers TensorRT 5, a new version of its deep learning inference optimizer and runtime engine that supports Turing Tensor Cores and multi-precision workloads. Nvidia also announced the Turing-optimized CUDA 10, which includes optimized libraries, programming models, and graphics API interoperability.


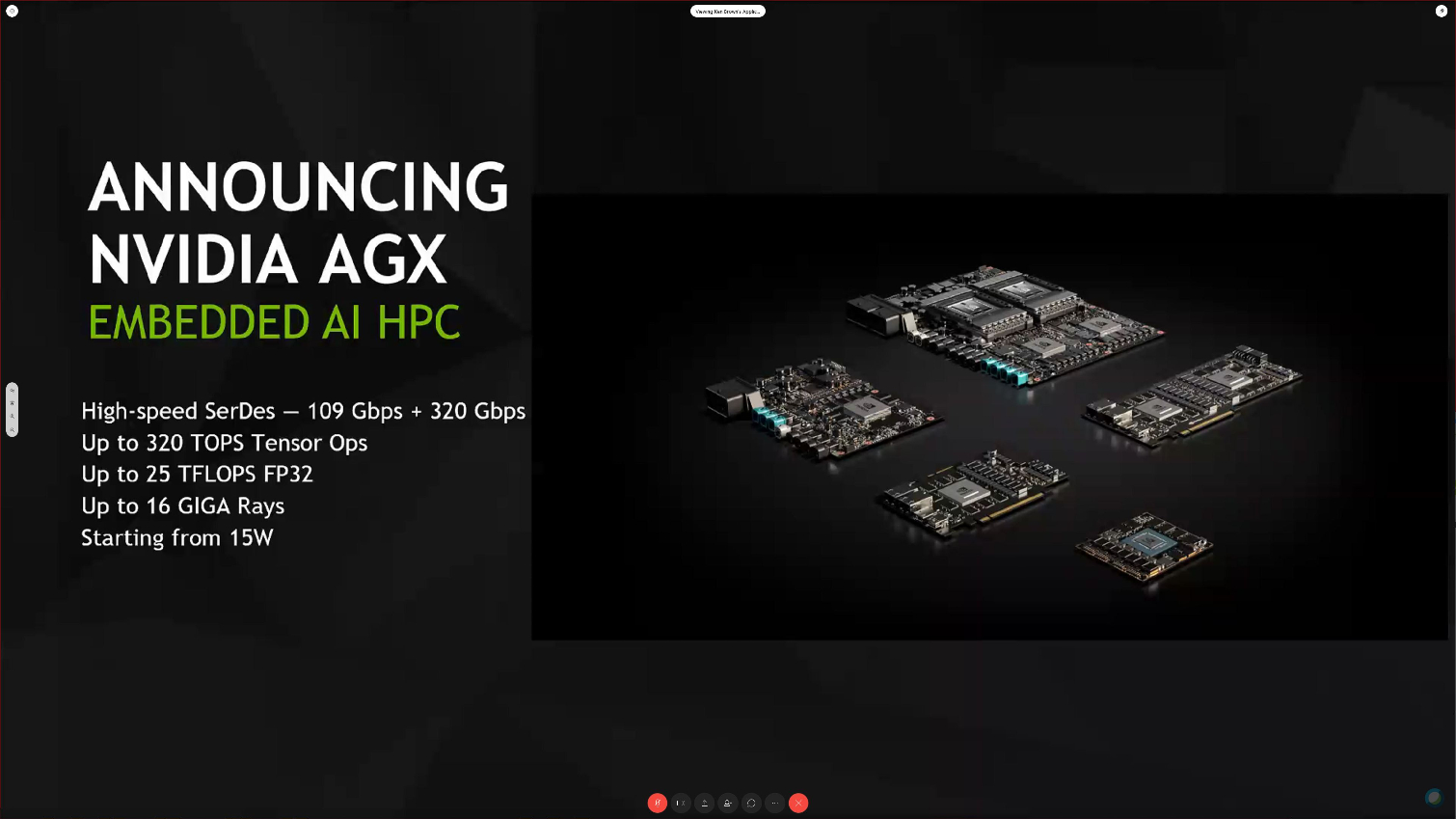

Nvidia also announced the AGX lineup, which is a new name for Nvidia's line of Xavier-based products that are designed for autonomous machine systems that range from robots to self-driving cars. The lineup includes Drive Xavier and the newly finalized Drive Pegasus, which originally featured two Xavier processors and two Tesla V100 GPUs. Nvidia has now updated the GPUs to Turing models. Nvidia is also offering a similar design, called the Clara Platform, for medical applications. The Clara Platform features a single Xavier processor and Turing GPU.

Thoughts

Nvidia's focus on boosting performance in inference workloads is a strategic move: the company projects the inference market will grow to a $20 billion TAM over the next five years. Meanwhile, Intel claims that most of the world's inference workloads run on Xeon processors, which is likely true given Intel's presence in ~96% of the world's servers. Intel announced during its recent Data-Centric Innovation Summit that the company sold $1 billion in processors for AI workloads in 2017 and expects that number to grow quickly over the coming years.

Inference workloads will be a hotly contested battleground between Nvidia, Intel, and AMD in the future, with Intel having the initial advantage due to its server attach rate. However, low-cost and low-power inference accelerators, such as Nvidia's new Tesla T4, pose a tremendous threat due to their performance-per-watt advantages, and AMD has its 7nm Radeon Instinct GPUs for deep learning coming soon. Several companies, such as Google with its TPUs, are developing their own custom silicon for inferencing workloads. That means it will likely be several years before the clear winners become apparent.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Paul Alcorn

> 21316002 said: why these type of card doesnt has cooler?

It's only 75W and will live in a server, so the linear airflow will keep it cool. Servers are like tornadoes inside, usually at least 200LFM. I'll add something to the article to explain that.

bit_user

> 21315862 said: This new GPU, which Nvidia designed for inference workloads in hyperscale data centers, leverages the same Turing microarchitecture as Nvidia's forthcoming GeForce RTX 20-series gaming graphics cards.

Indeed, TU104 is the same silicon used in RTX 2070 and RTX 2080. All they did was down-clock and scale it back to fit a 75 W power envelope. It is then fitted with double the RAM (ECC, too), a passive heatsink, and a several $k price tag.

> 21315862 said: the Tesla T4 is a unique graphics card designed specifically for AI inference workloads

It's a stretch to call it a graphics card. While it can do desktop virtualization, note the lack of any display outputs.

> 21315862 said: Intel claims that most of the world's inference workloads run on Xeon processors

This seems like wishful thinking.

> 21315862 said: it will likely be several years before the clear winners become apparent.

Well, Nvidia is clearly winning. The question is whether anyone building AI-specific chips can unseat them. We already know Vega 7 nm won't.

bit_user

> 21316014 said:
> > 21316002 said: why these type of card doesnt has cooler?
>
> It's only 75W and will live in a server, so the linear airflow will keep it cool. Servers are like tornadoes inside, usually at least 200LFM. I'll add something to the article to explain that.

Eh, it doesn't really have anything to do with being only 75 W, as their 250 W Tesla V100 PCIe cards are also passively cooled.

https://www.nvidia.com/en-us/data-center/tesla-v100/

milkod2001

Looks like new 2050 with flashed firmware, acting like pro GPU and sold at 3x premium because why not.

jimmysmitty

> 21316179 said:
> > 21315862 said: Intel claims that most of the world's inference workloads run on Xeon processors
>
> This seems like wishful thinking.

I wouldn't be surprised actually since there is not only server CPUs but also the Knights series Xeons which are pretty much what Tesla competes with although Intel is said to not be continuing those in the future.

> 21316185 said:
> > 21316002 said: why these type of card doesnt has cooler?
> >
> > It's only 75W and will live in a server, so the linear airflow will keep it cool. Servers are like tornadoes inside, usually at least 200LFM. I'll add something to the article to explain that.
>
> Eh, it doesn't really have anything to do with being only 75 W, as their 250 W Tesla V100 PCIe cards are also passively cooled.
>
> https://www.nvidia.com/en-us/data-center/tesla-v100/

The card has no fans but they are designed to go into a server rack which has fans spinning at full speed pushing air through the heatsinks out the back. Even right now with the door closed I can hear my servers spinning in my office.

The design is probably due to the way servers are built. I doubt you could throw a V100 into a mid tower or full tower and run it like in a server chassis without running into thermal problems.

> 21316302 said: Looks like new 2050 with flashed firmware, acting like pro GPU and sold at 3x premium because why not.

Except it has more VRAM that's also ECC and the drivers are much more refined for what they do. Most GPUs start off as HPC based chips that get slowly trickled down to consumer ends after being cut off.

Its the same with CPUs. Most CPUs have a server variant that cost quite a bit more than the desktop counterpart does.

Scott_123

The 16GB DDR4 recommendation is terrible. On Newegg you can get better ram for $30 DOLLARS LESS!

https://m.newegg.com/products/N82E16820232180

bit_user

> 21317014 said: The card has no fans but they are designed to go into a server rack which has fans spinning at full speed pushing air through the heatsinks out the back. Even right now with the door closed I can hear my servers spinning in my office.
>
> The design is probably due to the way servers are built. I doubt you could throw a V100 into a mid tower or full tower and run it like in a server chassis without running into thermal problems.

Yeah, I think that's what Paul was saying, and I agree. Nvidia specifies how much CFM (or m^3 / sec) are required for their passively-cooled Tesla cards.

It's not just Nvidia, either. AMD makes passively-cooled server cards, as did Intel, when they offered Xeon Phi on a PCIe card.

> 21317014 said: Most GPUs start off as HPC based chips that get slowly trickled down to consumer ends after being cut off.

That's twisting it, somewhat. I don't think it's really true to say it's a server chip before gaming, or vice versa. The past few generations have had the consumer cards released (or, in this case, simply announced) first. But Nvidia obviously collects requirements for each new chip. Some of those are for server applications, while others are for gaming and workstation uses. Then, all their chips (except for GP100 and GV100) are built to fill niches in all of these markets and sold on the appropriate vehicle (Tesla, for server; Quadro, for workstation; GeForce for consumer).

> 21317014 said: Its the same with CPUs. Most CPUs have a server variant that cost quite a bit more than the desktop counterpart does.

No, not in the same way as Nvidia is doing with GPUs. Intel's actual server chips are LGA 3647, and use different silicon than their workstation or desktop chips. AMD happened to use the same Zeppelin die, in first gen Ryzen, Threadripper, and Epyc. But that's a first, for them, and I'm not sure if the dies from Epyc 7 nm will trickle down to desktop, or if they are going to bifurcate their silicon.

bit_user

> 21316302 said: Looks like new 2050 with flashed firmware, acting like pro GPU and sold at 3x premium because why not.

Don't be fooled by the size. As I said above, it uses the same chip as the RTX 2070 and RTX 2080, but with double the RAM.

Nvidia did the same thing with the P4, which was also a passively-cooled, low-profile card with the GP104 chip used on the GTX 1070 and GTX 1080.

Probably one of the ways they squeeze it onto such a small board is that the VRM needed for 75 W is just a lot smaller than what the desktop versions require. Not having a fan should also save a little area, since you don't have the fan header & controller, plus perhaps some accommodations for the airflow, etc.

jimmysmitty

> 21318274 said:
> > 21317014 said: The card has no fans but they are designed to go into a server rack which has fans spinning at full speed pushing air through the heatsinks out the back. Even right now with the door closed I can hear my servers spinning in my office.
> >
> > The design is probably due to the way servers are built. I doubt you could throw a V100 into a mid tower or full tower and run it like in a server chassis without running into thermal problems.
>
> Yeah, I think that's what Paul was saying, and I agree. Nvidia specifies how much CFM (or m^3 / sec) are required for their passively-cooled Tesla cards.
>
> It's not just Nvidia, either. AMD makes passively-cooled server cards, as did Intel, when they offered Xeon Phi on a PCIe card.
>
> > 21317014 said: Most GPUs start off as HPC based chips that get slowly trickled down to consumer ends after being cut off.
>
> That's twisting it, somewhat. I don't think it's really true to say it's a server chip before gaming, or vice versa. The past few generations have had the consumer cards released (or, in this case, simply announced) first. But Nvidia obviously collects requirements for each new chip. Some of those are for server applications, while others are for gaming and workstation uses. Then, all their chips (except for GP100 and GV100) are built to fill niches in all of these markets and sold on the appropriate vehicle (Tesla, for server; Quadro, for workstation; GeForce for consumer).
>
> > 21317014 said: Its the same with CPUs. Most CPUs have a server variant that cost quite a bit more than the desktop counterpart does.
>
> No, not in the same way as Nvidia is doing with GPUs. Intel's actual server chips are LGA 3647, and use different silicon than their workstation or desktop chips. AMD happened to use the same Zeppelin die, in first gen Ryzen, Threadripper, and Epyc. But that's a first, for them, and I'm not sure if the dies from Epyc 7 nm will trickle down to desktop, or if they are going to bifurcate their silicon.

https://ark.intel.com/products/93794/Intel-Xeon-Processor-E7-4809-v4-20M-Cache-2_10-GHz

Intel does have server CPU variants. The one you specified is HPC though. My point is technologies and ideas typically push to HPC/server first where money will be spent then come to consumer. Turing for example has some ideas from Volta which consumers never saw.