Breaking: Intel Data-Centric Innovation Summit

We're here at Intel's headquarters in Santa Clara, CA for the company's Data-Centric Innovation Summit. Intel hasn't provided the press with any indication of new products or announcements. We're covering the event live to share the announcements as they happen, so refresh your browser or revisit this article periodically as the event unfolds.

Raja Koduri and Jim Keller have arrived.

Lisa Spelman, GM of Datacenter Marketing, has taken the stage to provide us with details of the agenda.

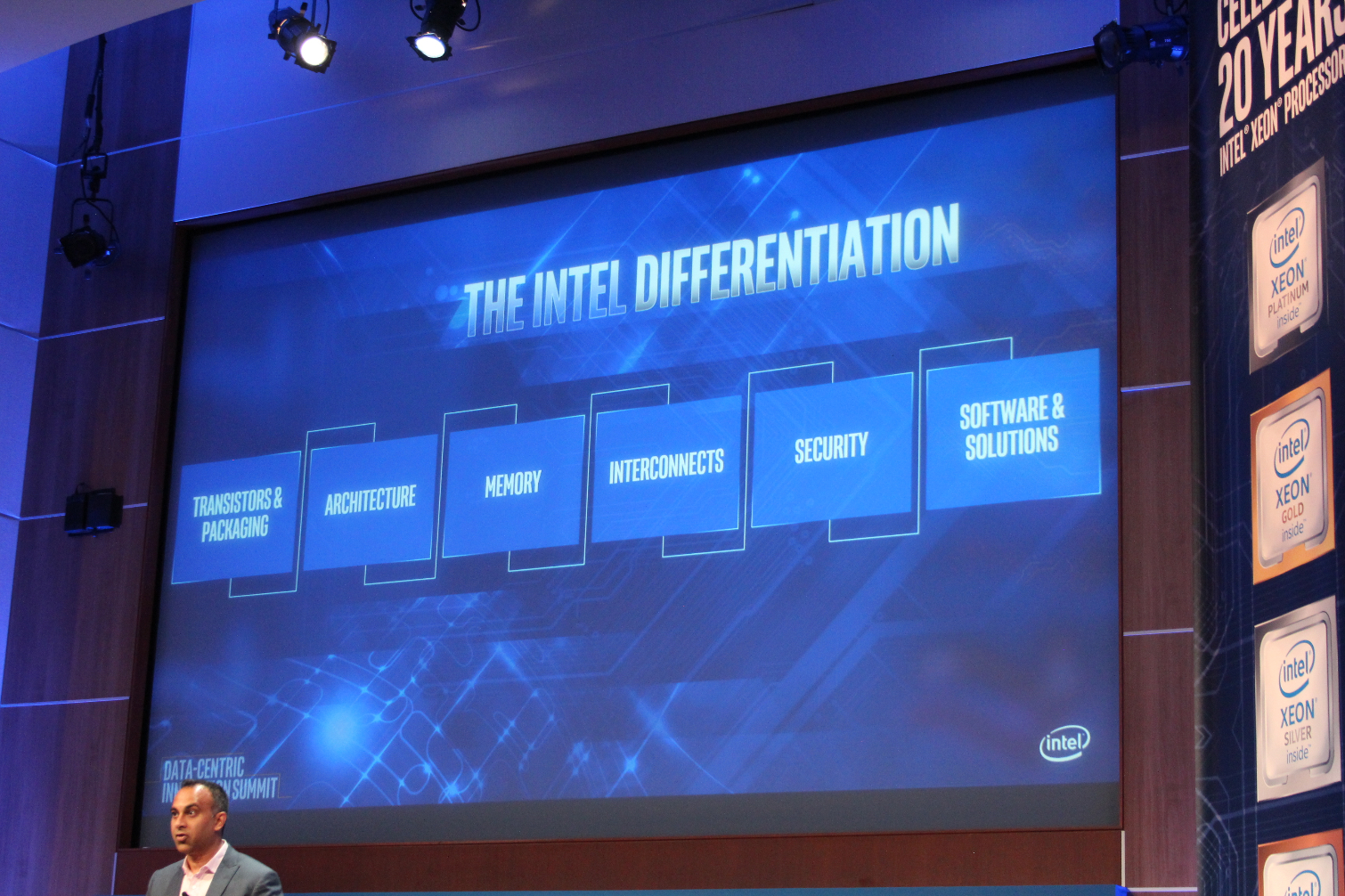

Navin Shenoy, the executive vice president and general manager of the Data Center Group at Intel, is now delivering the keynote. Intel will provide roadmap updates shortly.

Shenoy is explaining how new technologies, such as autonomous driving, are generating unprecedented amounts of data. Cars collect 4TB of data per hour, which is then shared to the cloud to train AI models. Intel and its partners are developing the technologies to record, transmit, store, and process that data.
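To put the 4TB-per-hour figure in networking terms, here is a back-of-the-envelope conversion to a sustained data rate. It is a rough sketch that assumes decimal units (1TB = 10^12 bytes) and a perfectly steady stream; the function name is our own, not anything Intel presented.

```python
def sustained_rate_gbps(terabytes_per_hour: float) -> float:
    """Convert decimal terabytes per hour into gigabits per second.

    Assumes 1 TB = 10**12 bytes and a constant, uninterrupted data rate.
    """
    bytes_per_second = terabytes_per_hour * 1e12 / 3600  # 3,600 seconds per hour
    return bytes_per_second * 8 / 1e9  # bytes -> bits -> gigabits


rate = sustained_rate_gbps(4)
print(f"{rate:.2f} Gbit/s")  # prints 8.89 Gbit/s
```

At roughly 8.9 Gbit/s, a single car's output would come close to the capacity of a 10 gigabit Ethernet link.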

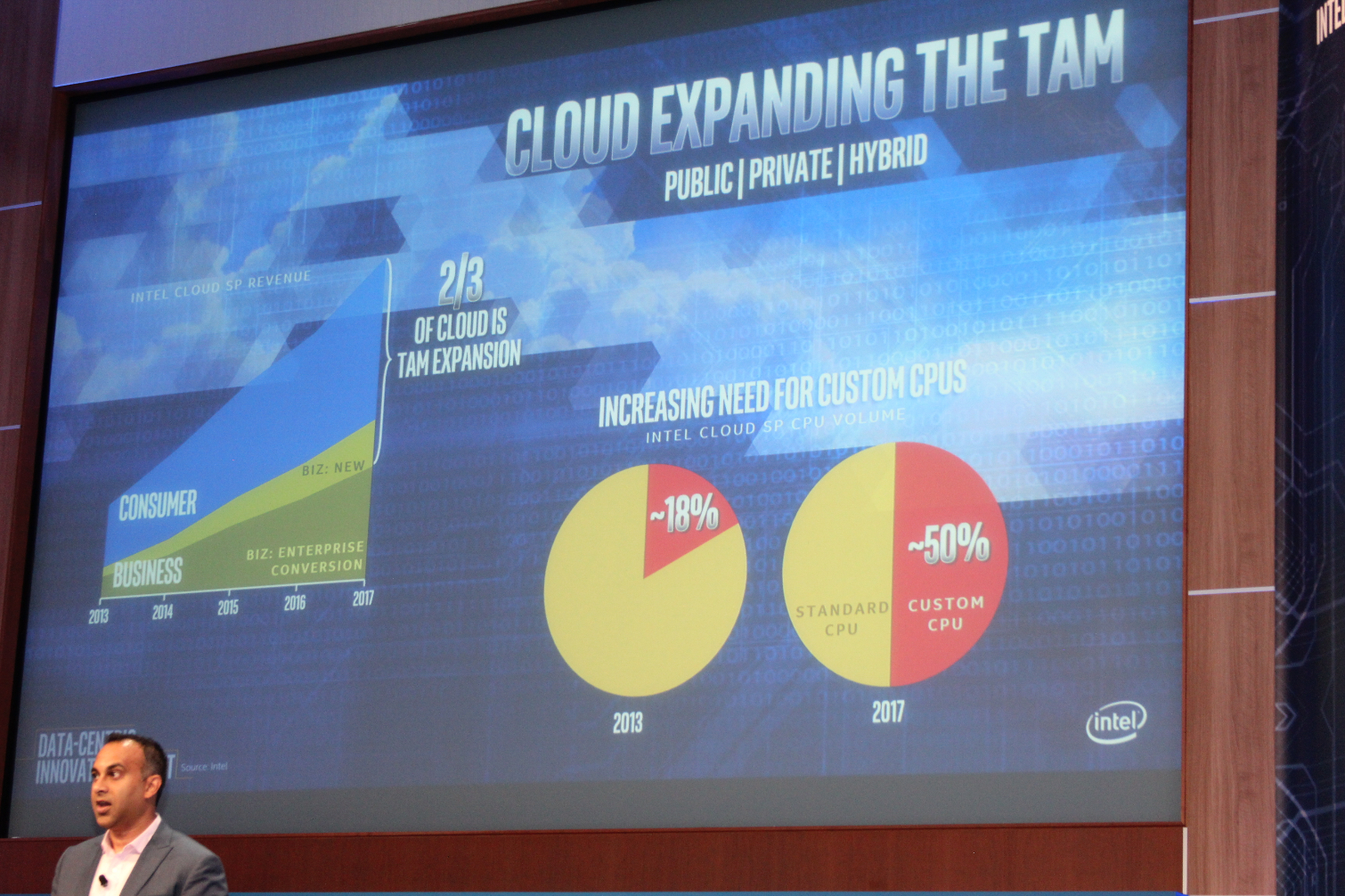

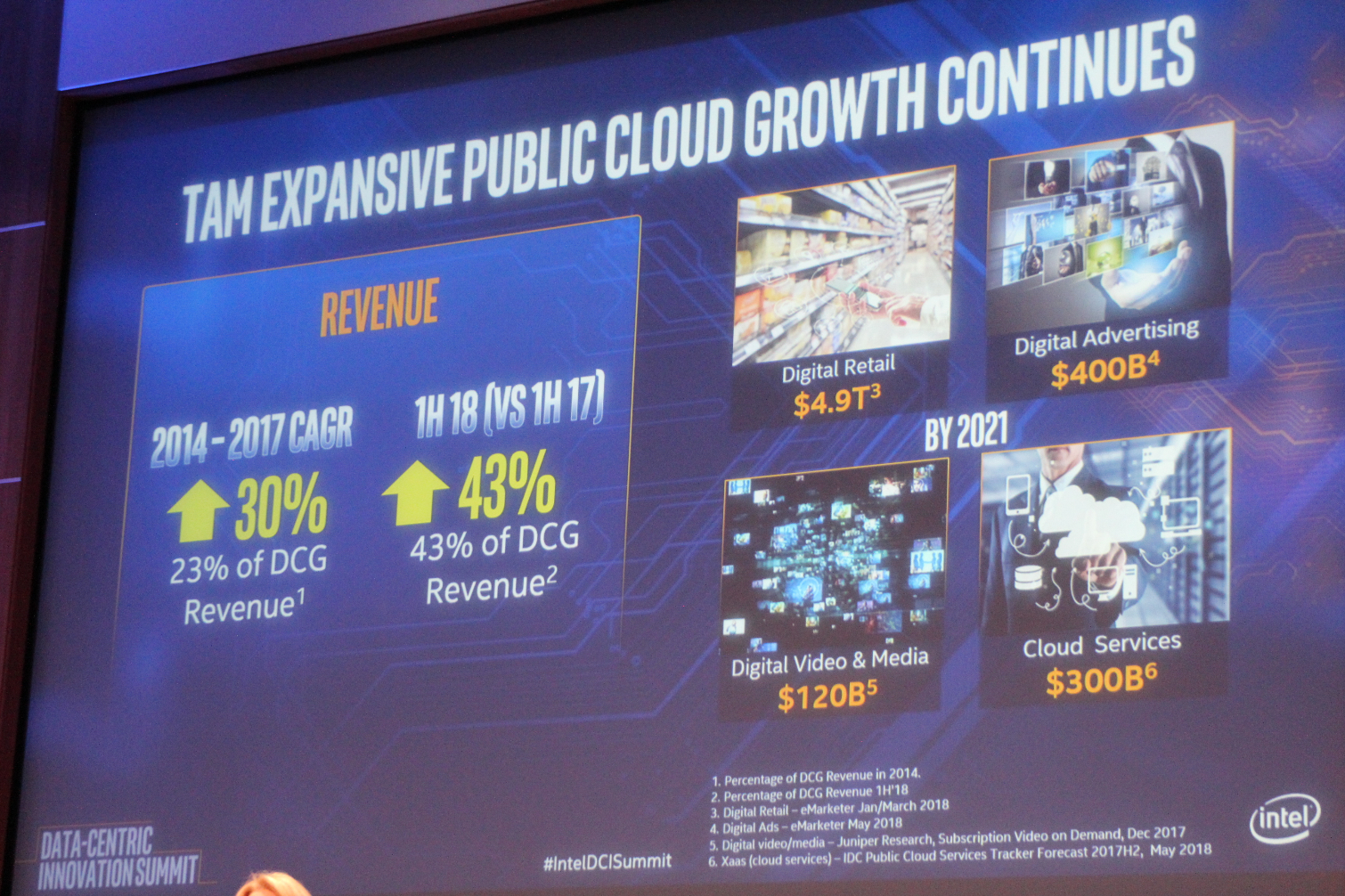

Intel has updated its total addressable market (TAM) projections based upon its newfound focus on the cloud, networking, and AI.

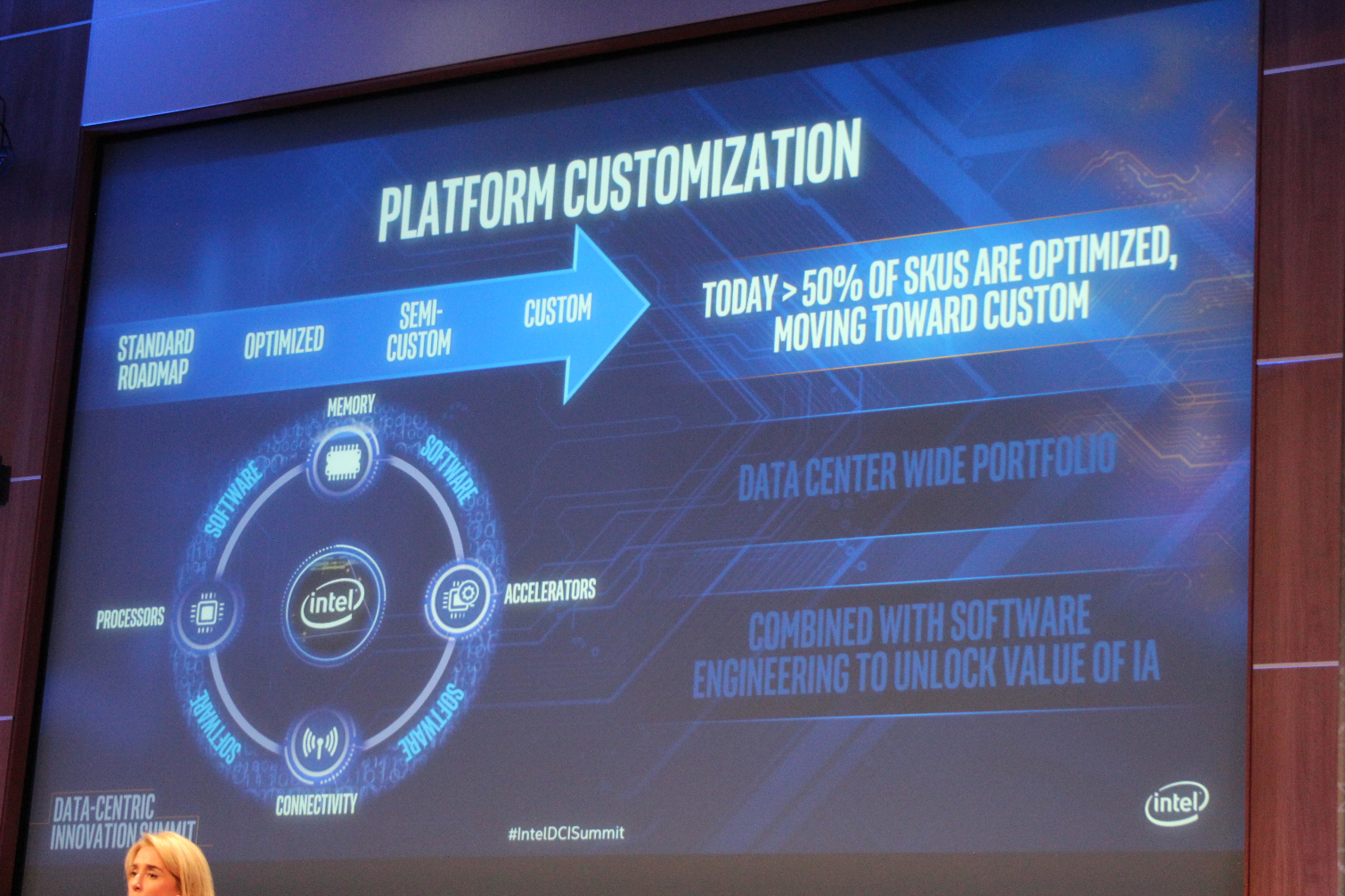

Intel's custom processor business is growing rapidly. Now 50% of the CPUs that it sells to cloud service providers are custom designs.


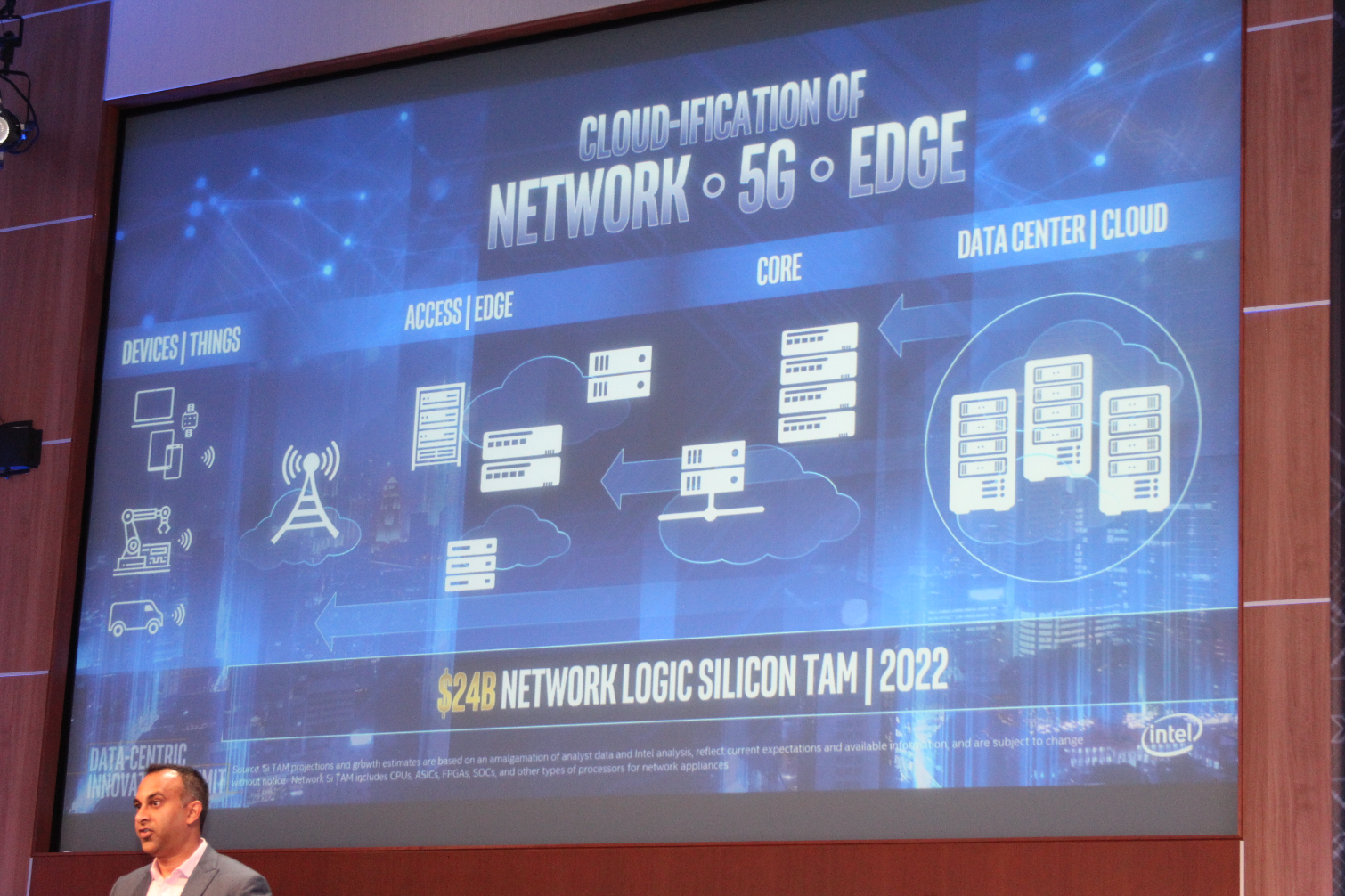

5G is also a big revenue driver. Shenoy is talking about a small server for edge 5G applications.

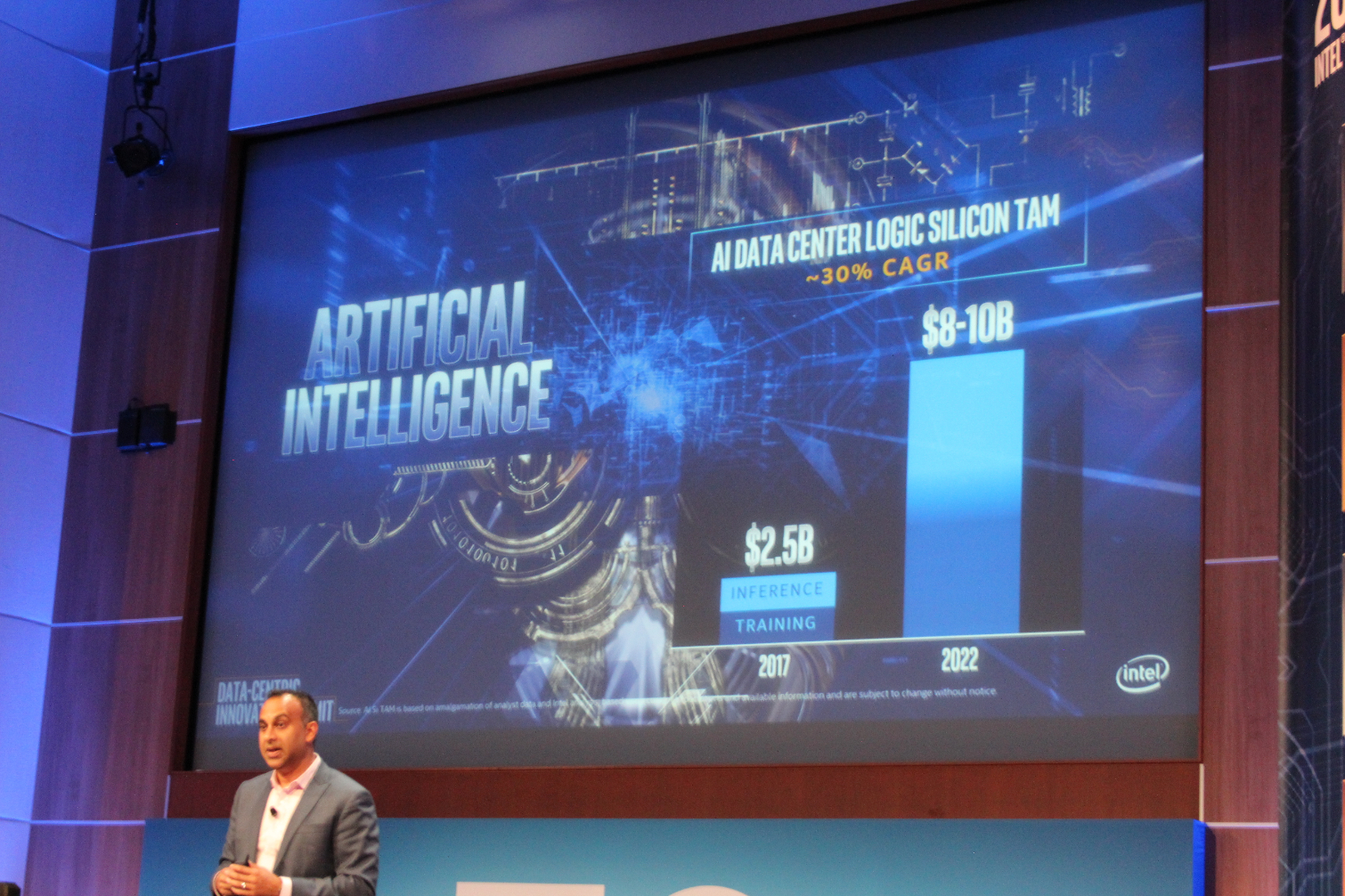

AI is also growing rapidly.

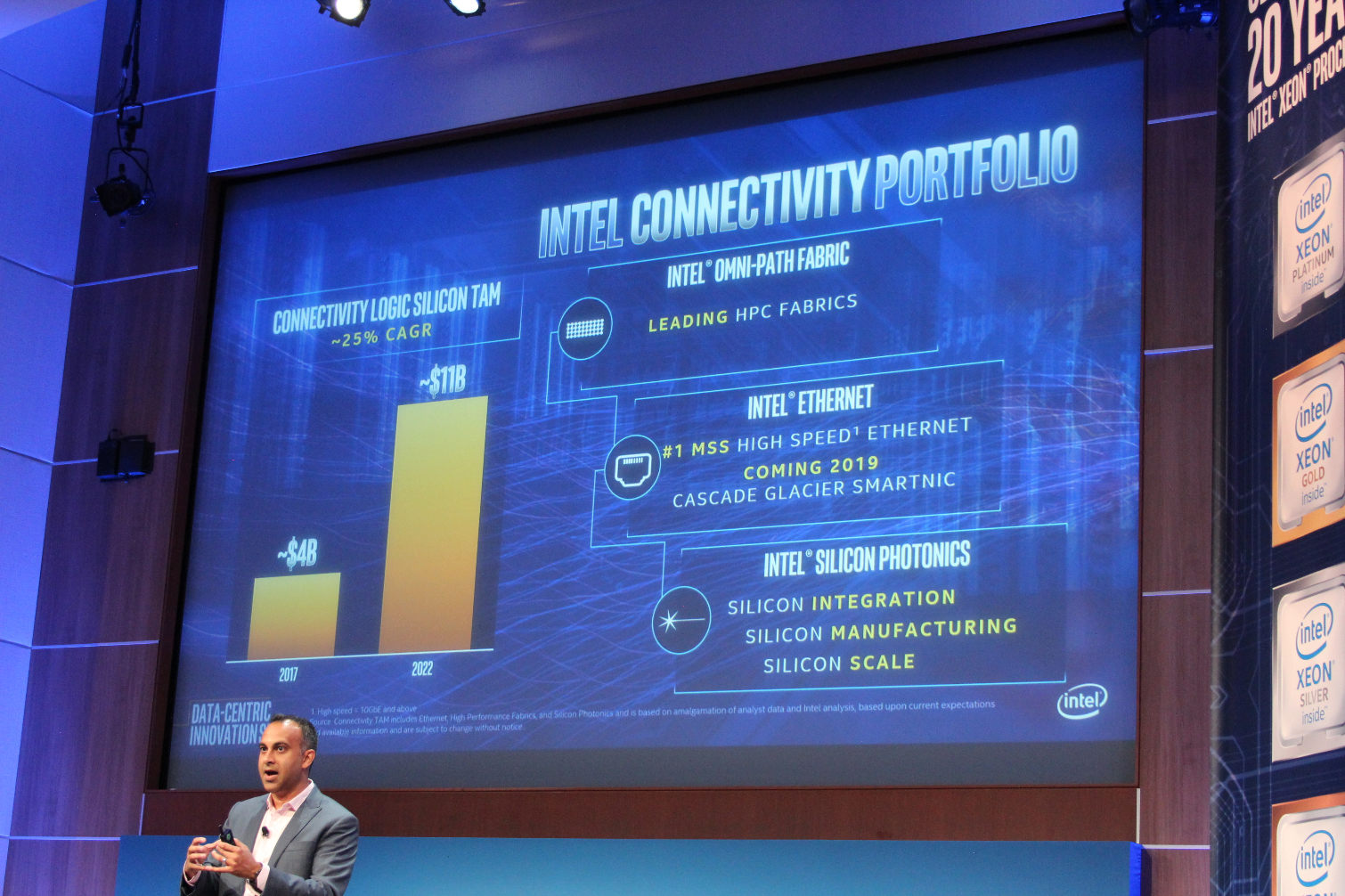

Intel is expanding its networking efforts with new Intel smart NICs, which combine FPGA and Ethernet technology in a single package.

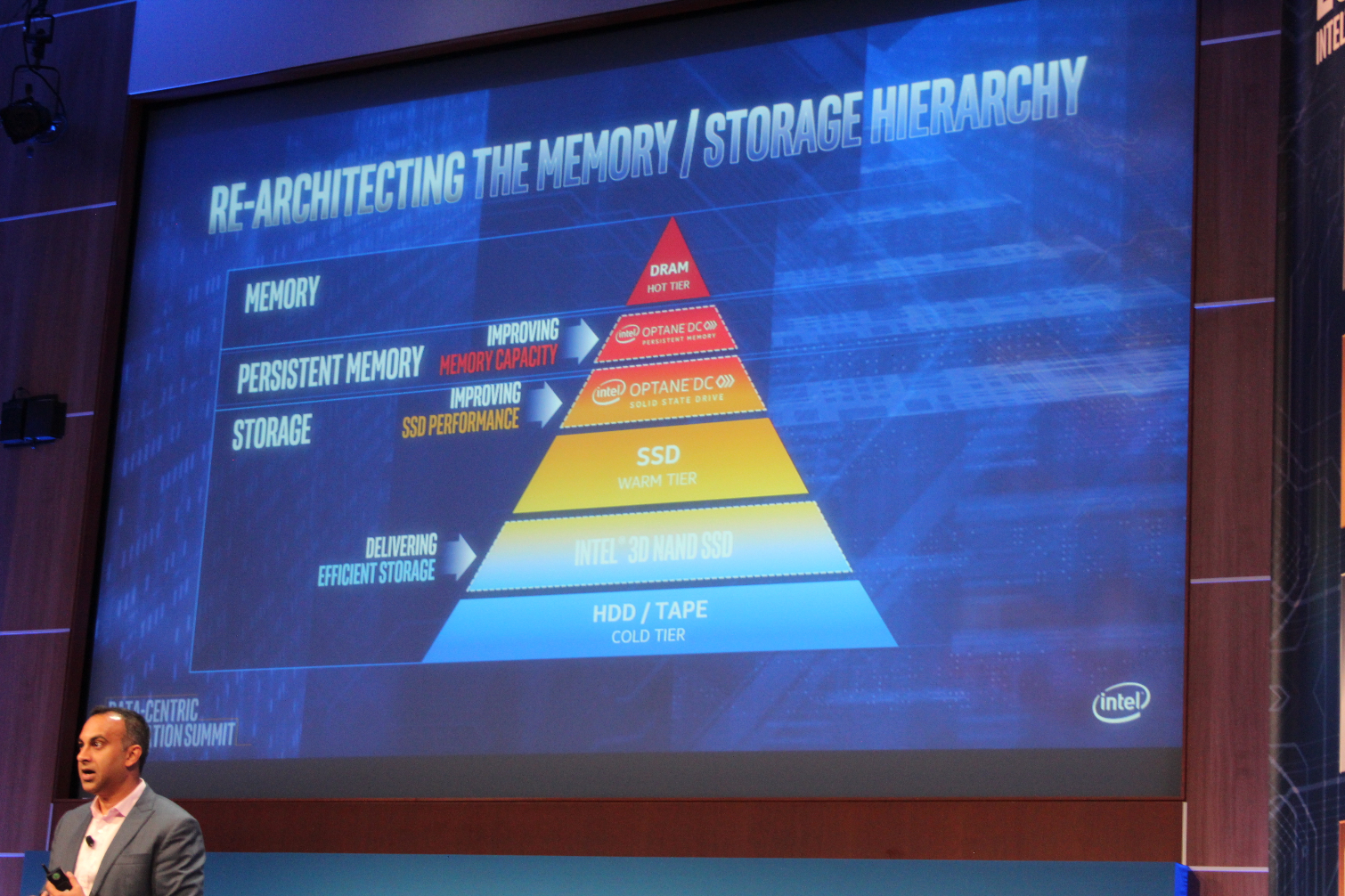

Intel is continuing to re-architect the storage hierarchy. The company has introduced Optane products, based on 3D XPoint, for both storage and memory applications. Conventional 3D NAND, which comes in flavors such as MLC, TLC, and QLC, plugs the other gaps in the hierarchy.

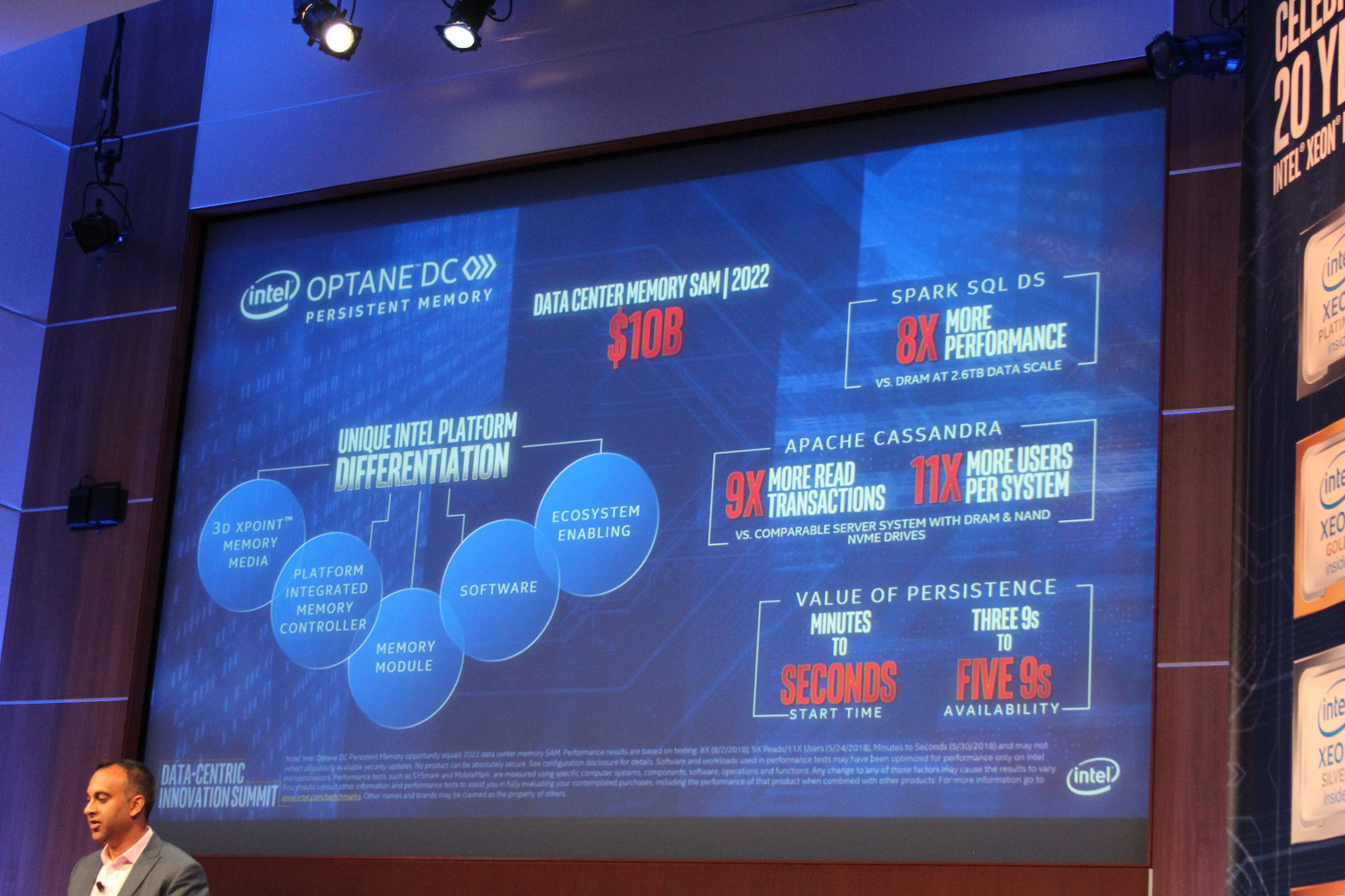

Optane Persistent Memory, which is 3D XPoint used as memory, could redefine the industry, according to Intel.

Bart Sano, VP of platforms at Google, has joined Shenoy on the stage to discuss Google's work with Intel on Optane Persistent Memory DIMMs. Google recently announced that Intel had won its infrastructure partner of the year award.

Google, SAP, and Intel are working together to run SAP HANA workloads on the Optane Persistent Memory DIMMs, in tandem with Cascade Lake Xeons. Sano says this new technology provides many advantages over normal DRAM.

Intel shipped its first production DIMMs yesterday; this is a new announcement. Shenoy presented Sano with the first Optane Persistent Memory DIMM to come off the production line. That's quite the collectible.

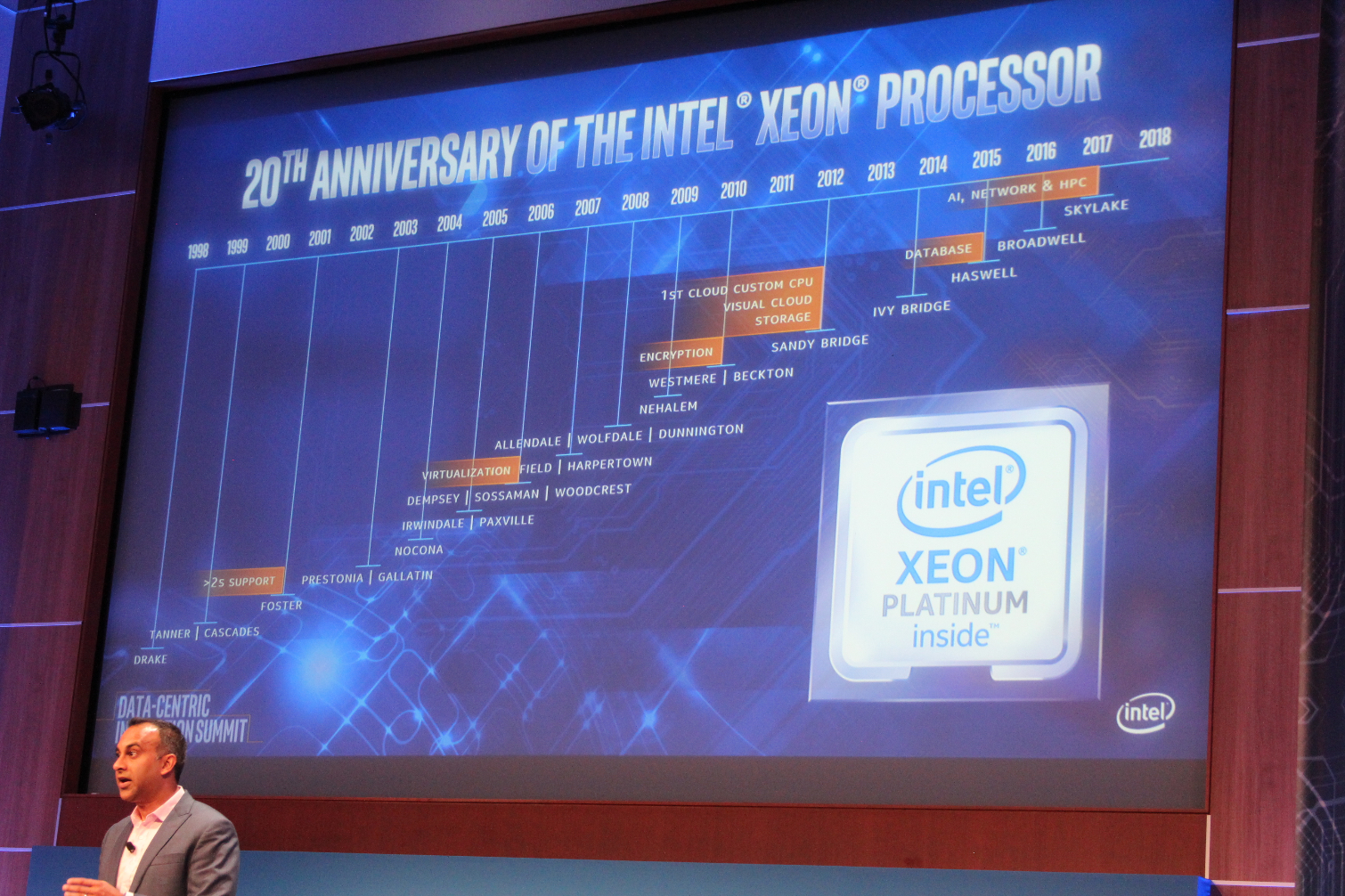

Now the company is talking about CPUs. Intel has delivered 220 million Xeons over 20 years, and the chart above outlines the major developments along the way.

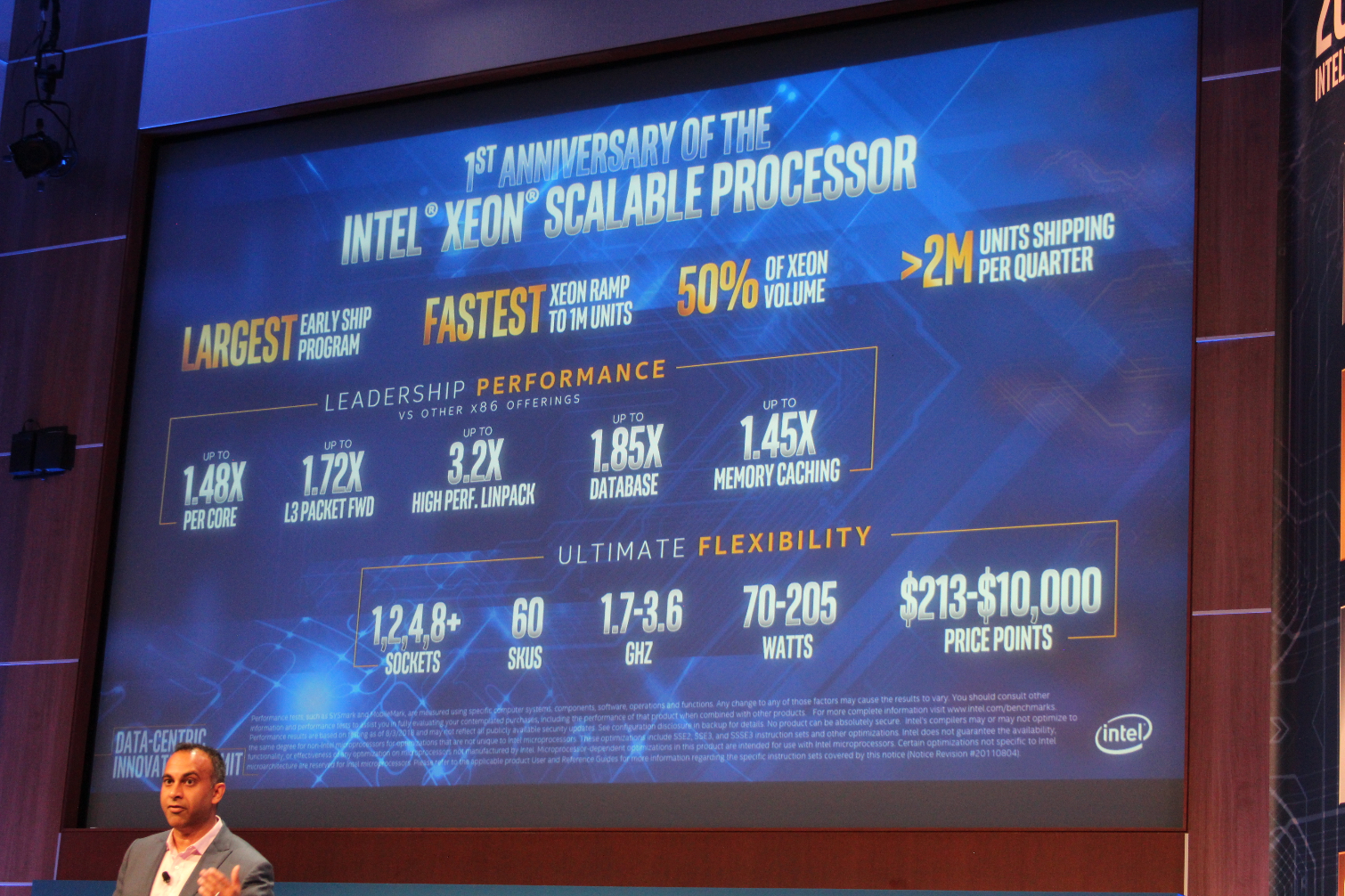

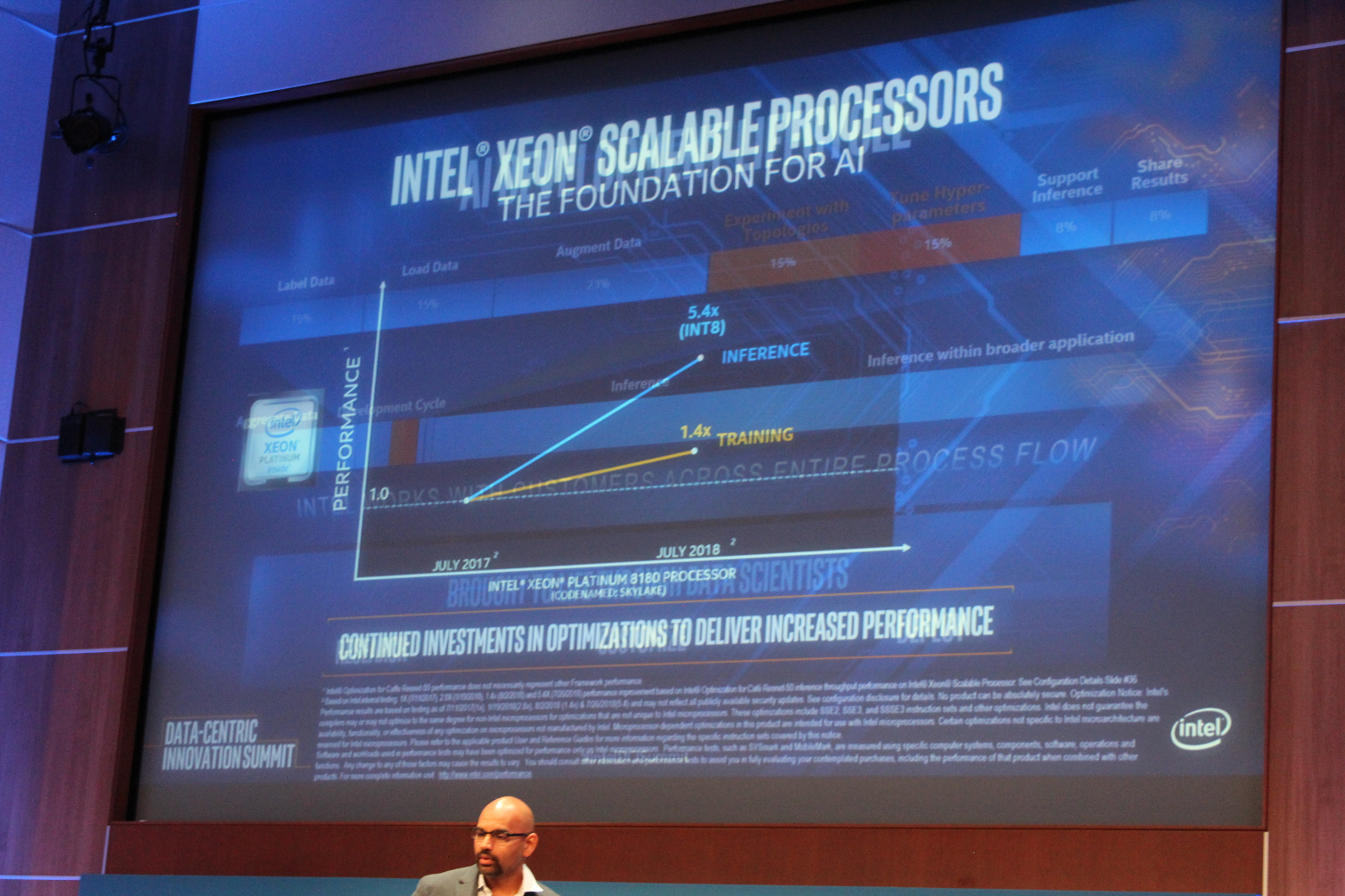

Xeon Scalable is Intel's biggest update to its Xeon family in a decade. The rate of adoption is record-setting and continues to accelerate.

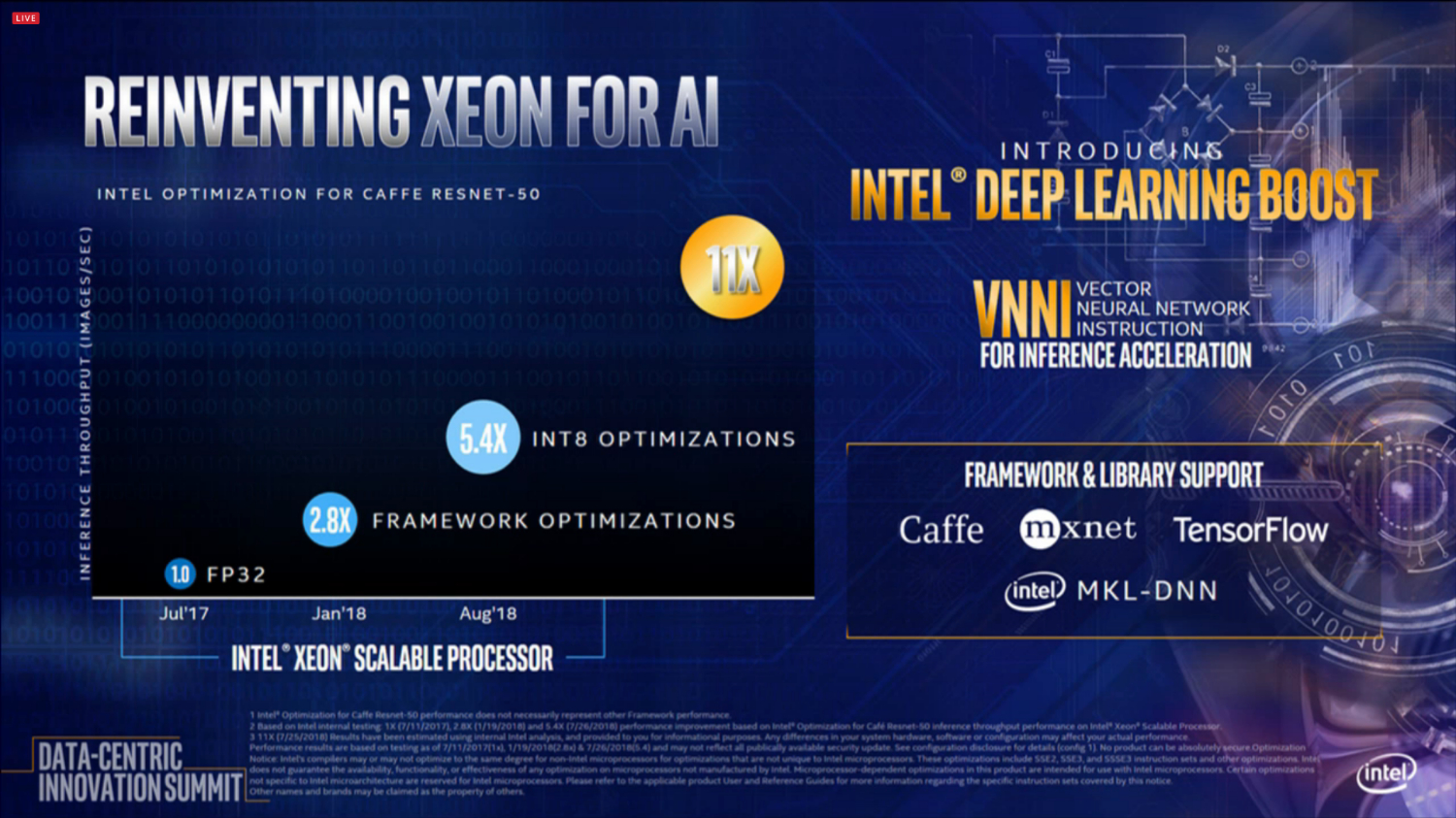

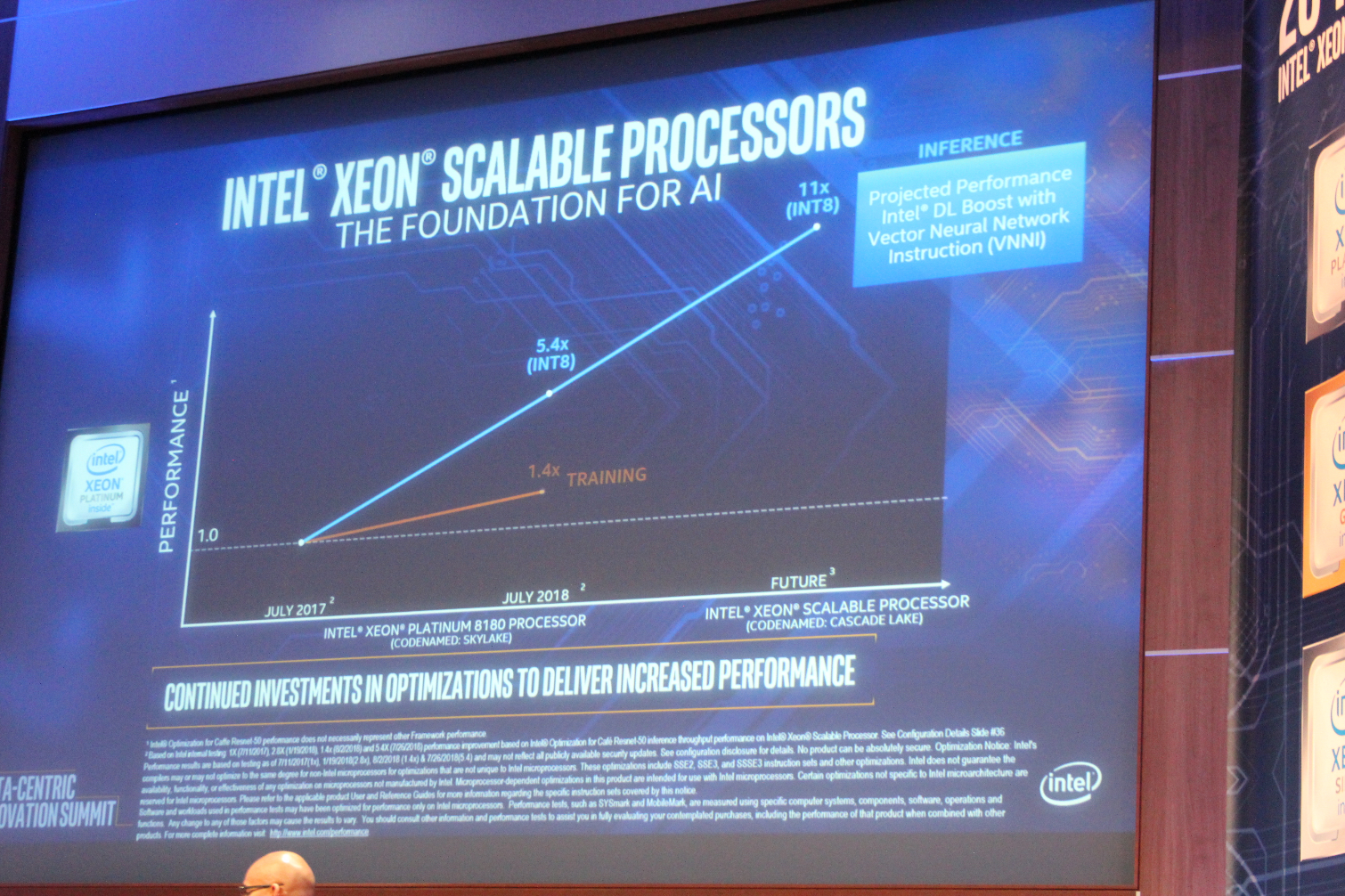

Intel has increased Xeon's AI performance by 277X for inference workloads and 240X for training in one year. Much of this came on the back of AVX-512. Intel sold $1 billion worth of Xeon processors used for AI.

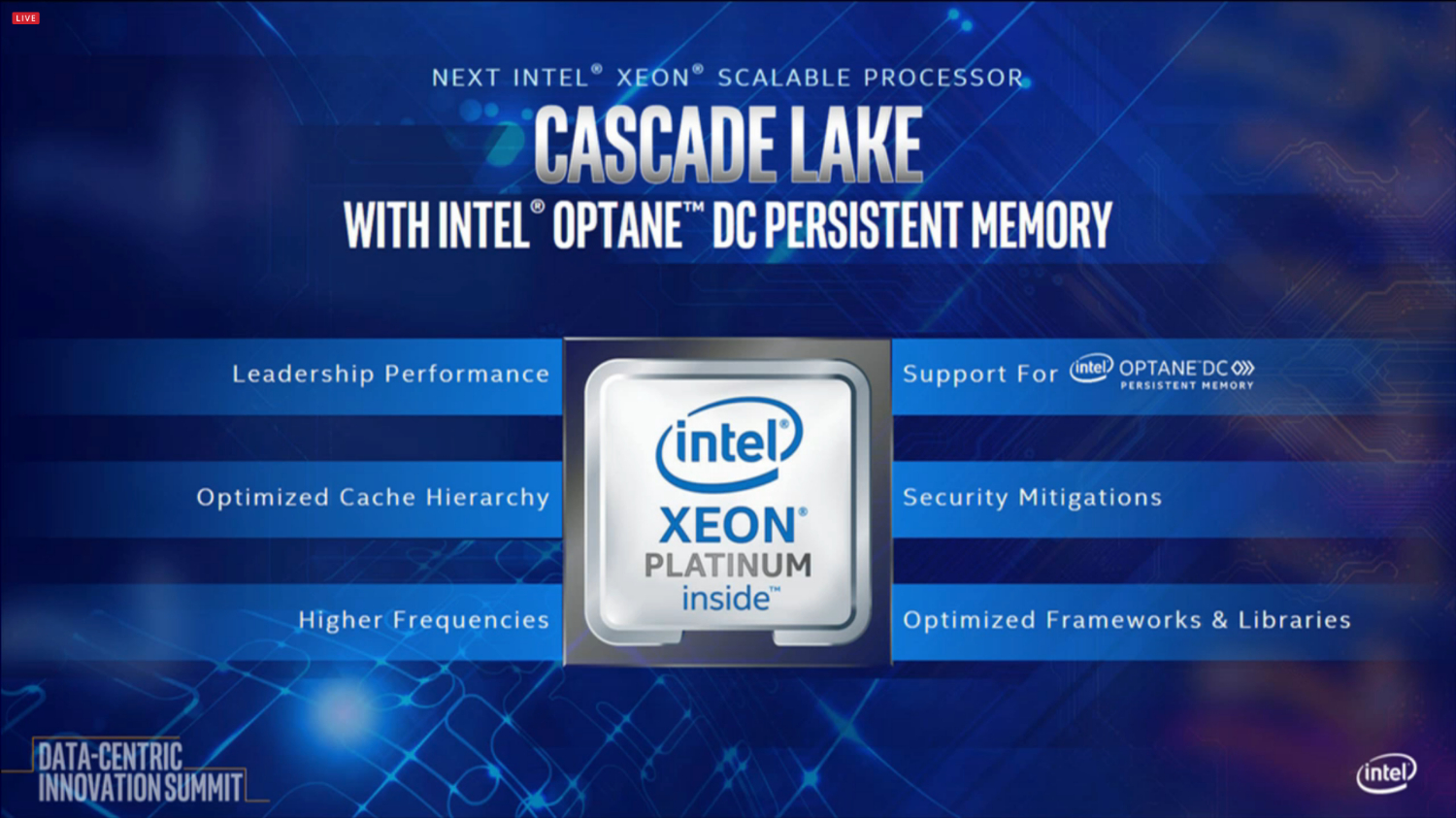

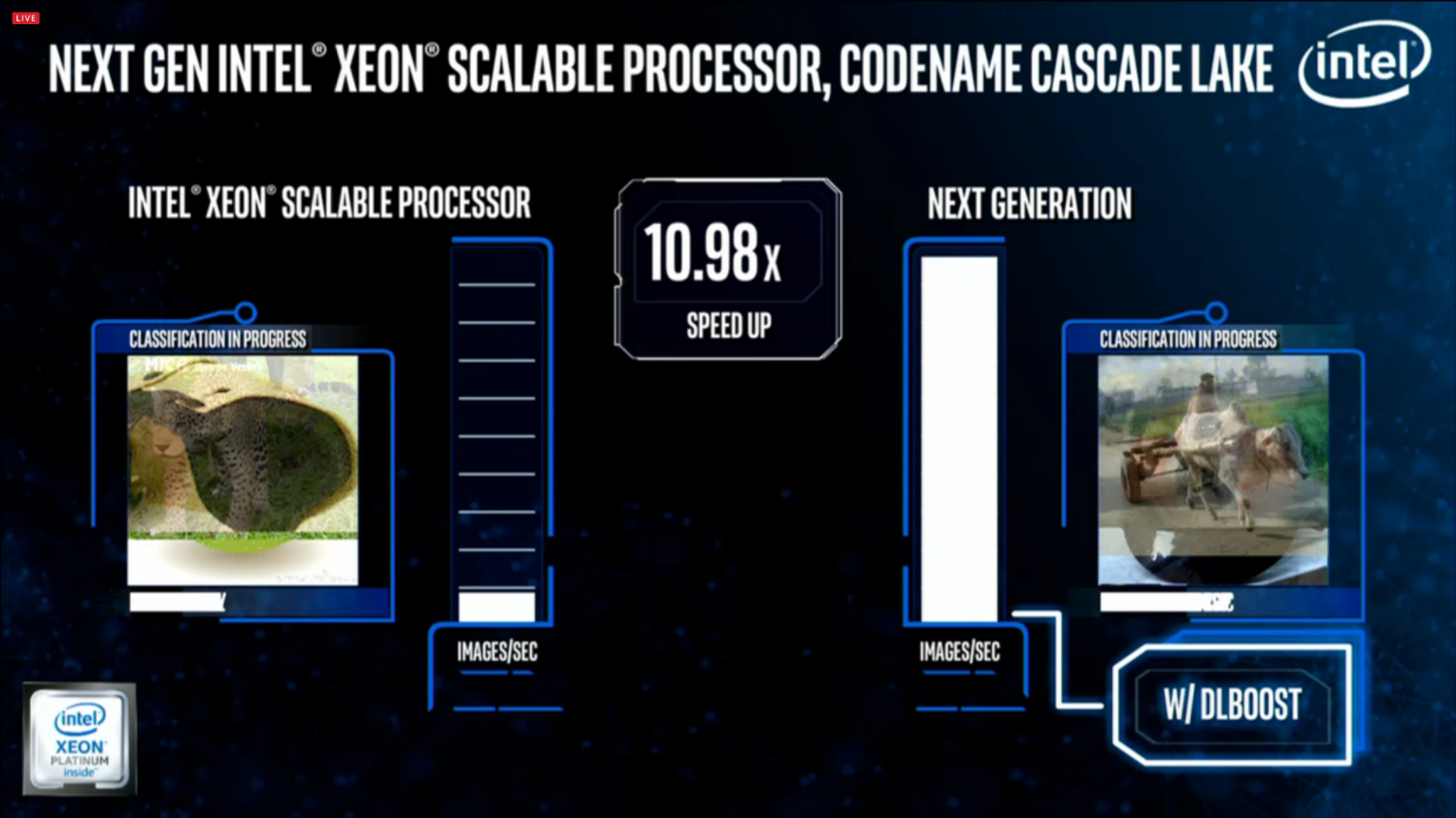

Cascade Lake Xeons are coming at the end of the year. They will feature hardware-based security mitigations built into the silicon, new memory controllers, higher frequencies, and a new AI extension called Intel DL Boost. Intel says DL Boost provides an 11X AI performance boost over Skylake.
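DL Boost on Cascade Lake is Intel's branding for the AVX-512 Vector Neural Network Instructions (VNNI). As a rough illustration of what its core instruction, VPDPBUSD, computes, the Python sketch below emulates a single 32-bit lane: four unsigned 8-bit values are multiplied by four signed 8-bit values, the products are summed, and the result is added to a 32-bit accumulator. On Skylake, the same int8 step takes a sequence of three AVX-512 instructions. This is a simplified model for illustration only (the real instruction processes up to 16 such lanes per 512-bit register at once), and the function name is our own.

```python
from typing import Sequence

INT32_MIN, INT32_MAX = -(1 << 31), (1 << 31) - 1


def vpdpbusd_lane(acc: int, a_u8: Sequence[int], b_s8: Sequence[int]) -> int:
    """Emulate one 32-bit lane of AVX-512 VNNI VPDPBUSD (non-saturating form).

    a_u8: four unsigned 8-bit values (0..255), e.g. quantized activations
    b_s8: four signed 8-bit values (-128..127), e.g. quantized weights
    acc:  signed 32-bit accumulator
    """
    if len(a_u8) != 4 or len(b_s8) != 4:
        raise ValueError("each lane holds exactly four bytes per source")
    if not all(0 <= x <= 255 for x in a_u8):
        raise ValueError("a_u8 values must be unsigned 8-bit")
    if not all(-128 <= x <= 127 for x in b_s8):
        raise ValueError("b_s8 values must be signed 8-bit")
    if not INT32_MIN <= acc <= INT32_MAX:
        raise ValueError("acc must be a signed 32-bit value")

    # Multiply the four byte pairs, sum the products, and add the accumulator.
    total = acc + sum(x * y for x, y in zip(a_u8, b_s8))

    # The non-saturating instruction keeps only the low 32 bits (wraparound).
    total &= 0xFFFFFFFF
    return total - (1 << 32) if total > INT32_MAX else total


# One lane: 10 + (1*5 + 2*-6 + 3*7 + 4*-8) = 10 - 18 = -8
print(vpdpbusd_lane(10, [1, 2, 3, 4], [5, -6, 7, -8]))  # prints -8
```

Fusing the multiply, the horizontal add, and the accumulation into one instruction is what lets low-precision int8 inference run with fewer instructions per result.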

Intel conducted the first live demo of Cascade Lake. The demo highlighted the boosted AI performance.

Shenoy is talking about new interconnects that the company has developed, including EMIB and silicon photonics.

Here comes Jim Keller.

Keller explained that Intel's scale and diverse technology portfolio are key reasons he joined the company. He says there is a great thirst to succeed at Intel, and he wants to contribute to that success, with a focus on yield and performance. Keller says Intel has a broad range of products that span from half a watt to a megawatt.

This is Intel's new server roadmap. Intel's new approach adds a 14nm Cooper Lake processor, which arrives at the end of 2019. The 10nm Ice Lake processor will be a fast follower in 2020. The two platforms are socket compatible. Cooper Lake will support bfloat16 for AI training workloads.

Shenoy made his closing remarks. Another speaker is taking the stage to discuss AI.

Naveen Rao, of Nervana fame, has taken the stage. Rao is the VP and GM of Intel's Artificial Intelligence Products Group.

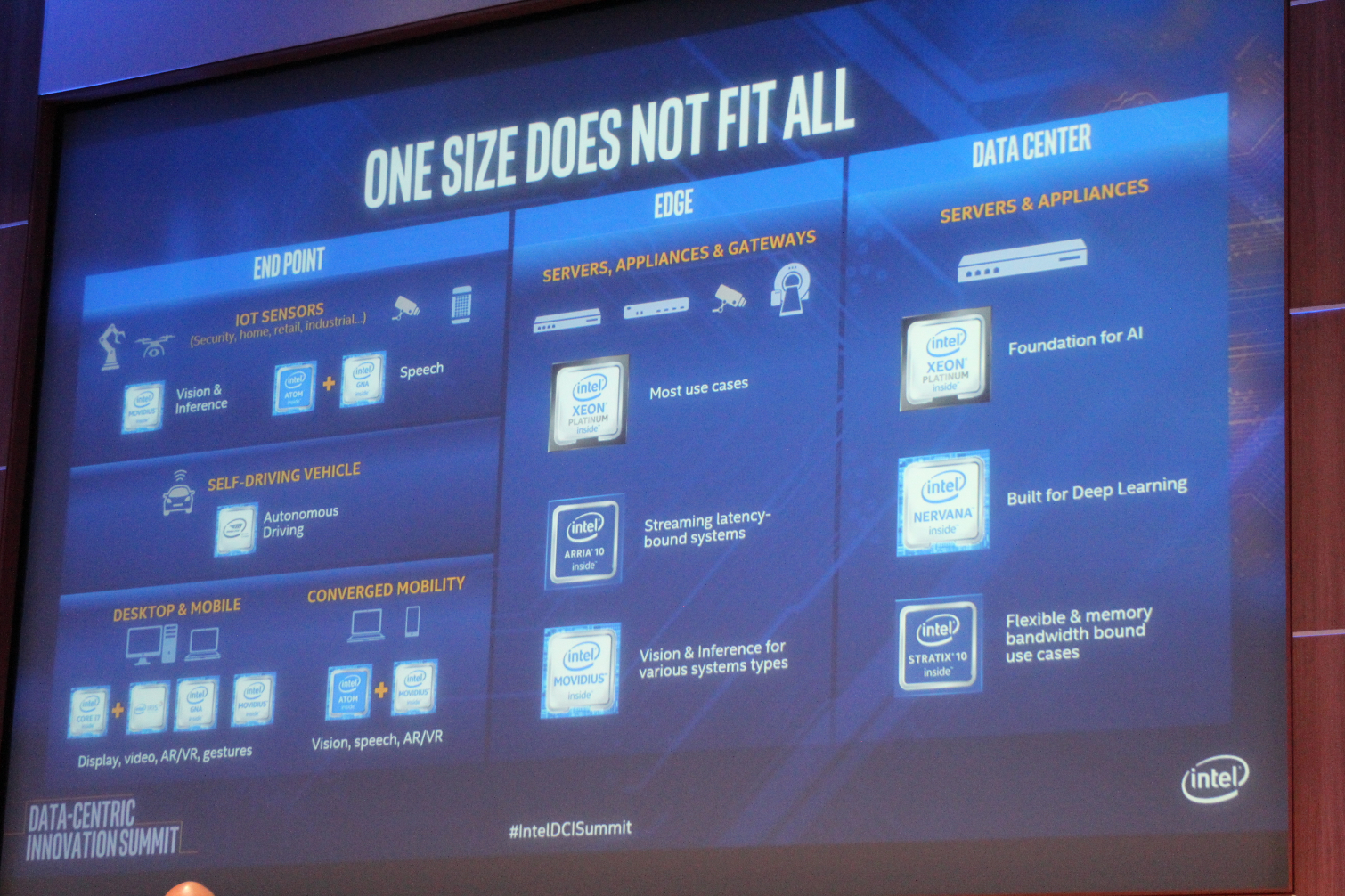

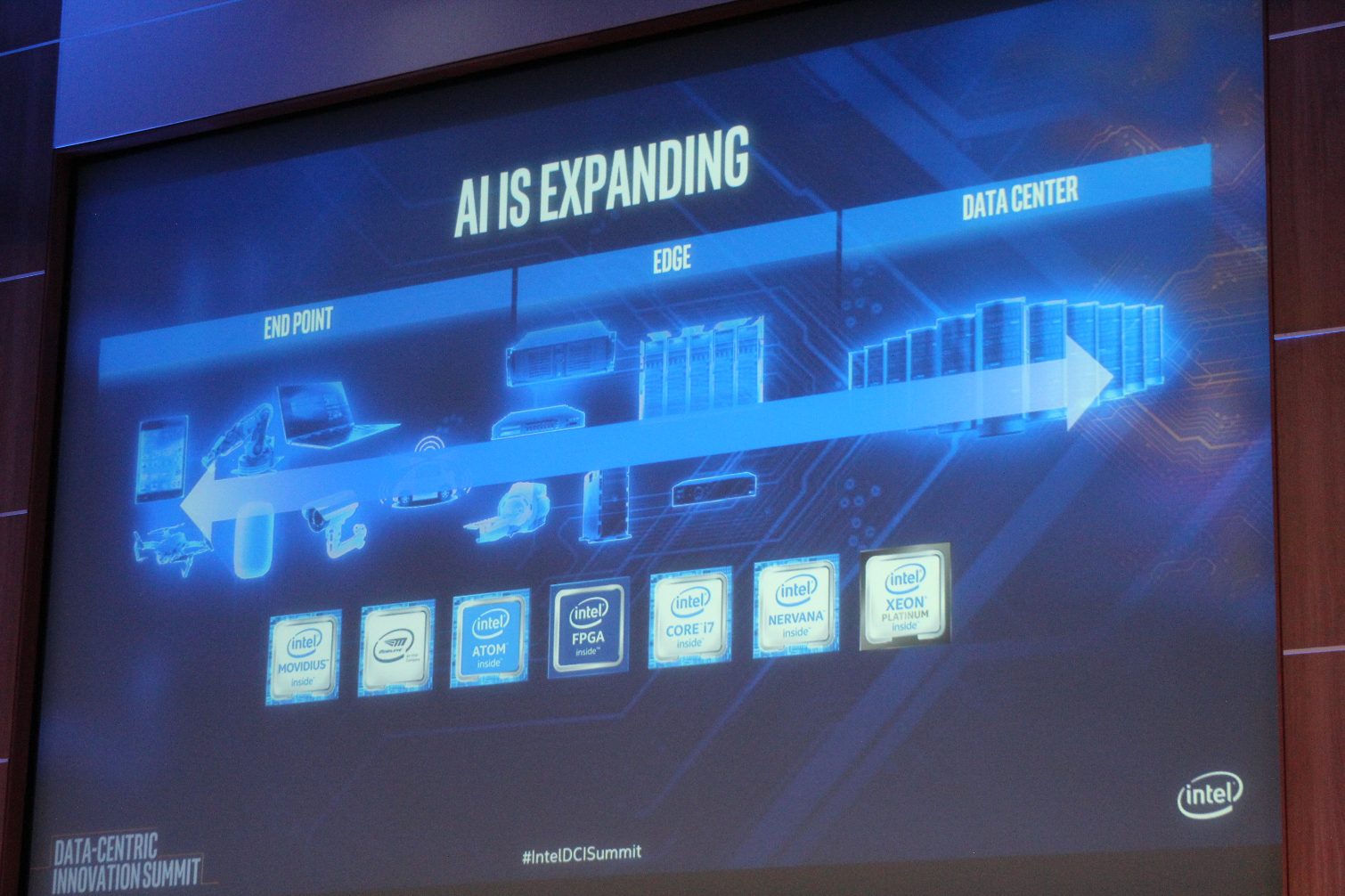

Rao says AI is becoming a general-purpose paradigm and is evolving rapidly. Applications are expanding to more use cases, spanning from the edge to the data center.

It takes multiple architectures to address the full spectrum of AI workloads. Rao says Intel's portfolio is uniquely well-suited for the task.

Intel is trying to build the best tools to address the broad range of applications. AI is still in its very early stages. A typical AI development cycle begins with data collection, and Rao says these workloads run on Xeon.

Intel wants to bust the myth that GPUs are the best solution for AI. Intel is standardizing on bfloat16, a format introduced by Google. It allows lower-precision math to run effectively, thus boosting performance.
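bfloat16 keeps float32's sign bit and full 8-bit exponent but cuts the mantissa from 23 bits to 7, so it covers the same numeric range as float32 at lower precision, and a float32 value can be converted by keeping its top 16 bits. The Python sketch below shows that conversion. For simplicity it truncates, whereas real hardware and frameworks typically round to nearest even, and the function names are our own.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Convert a value to float32, then keep its top 16 bits as bfloat16.

    This drops the 16 low mantissa bits (round toward zero); real
    implementations usually round to nearest even instead.
    """
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    return bits >> 16


def bfloat16_bits_to_float(b: int) -> float:
    """Widen bfloat16 bits back to float32 by appending 16 zero bits."""
    (x,) = struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))
    return x


for value in (3.14159, 1e38):
    b = float32_to_bfloat16_bits(value)
    print(f"{value!r} -> 0x{b:04X} -> {bfloat16_bits_to_float(b)!r}")
```

The 3.14159 round trip lands on 3.140625 because only seven mantissa bits survive, while 1e38, far beyond float16's maximum of 65504, comes back within a fraction of a percent. That range-for-precision trade is what makes the format attractive for training.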

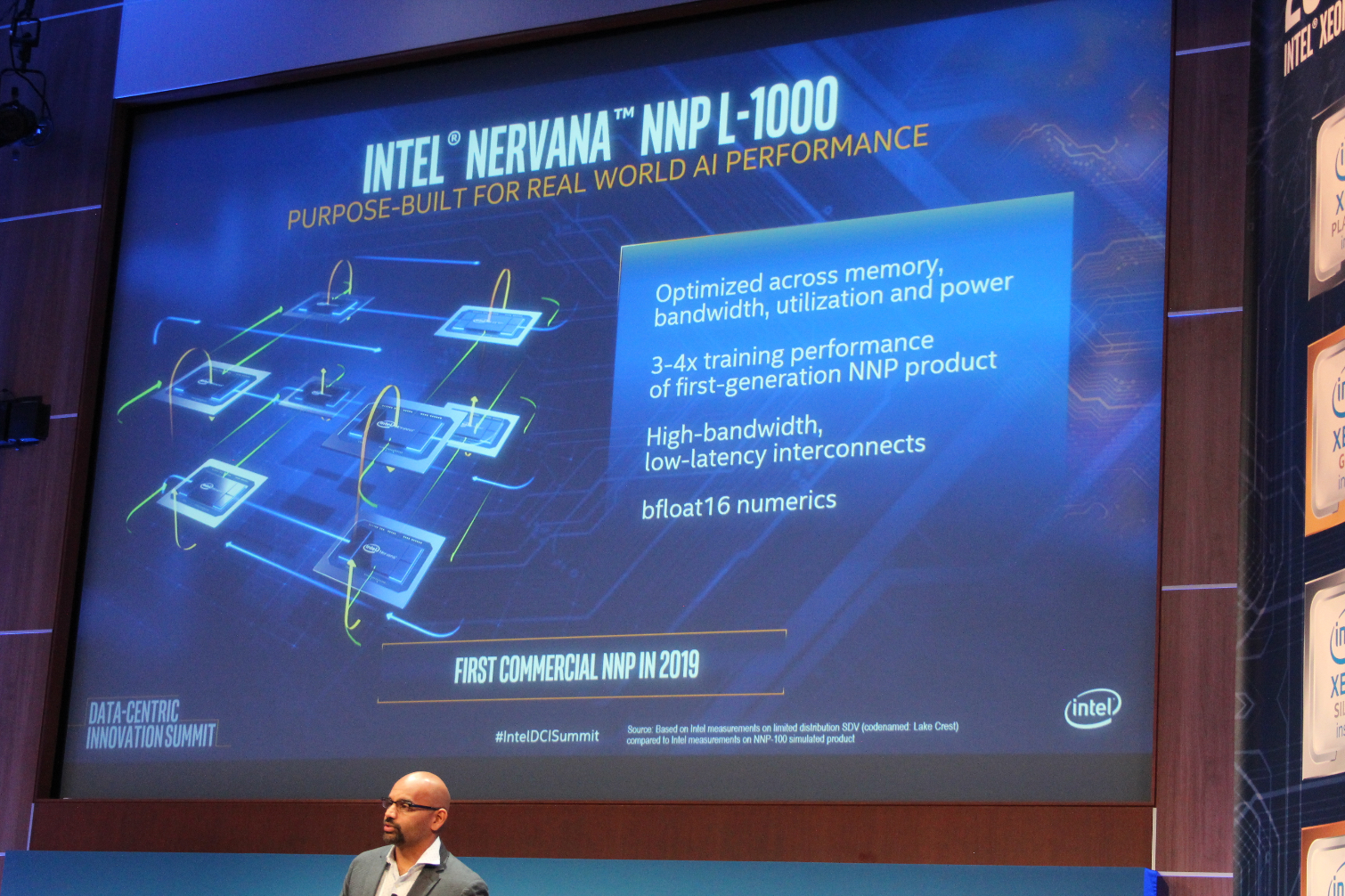

The first commercially available Nervana NNP (Neural Network Processor) is coming in 2019, with a 3-4X performance gain over the first-generation Lake Crest.

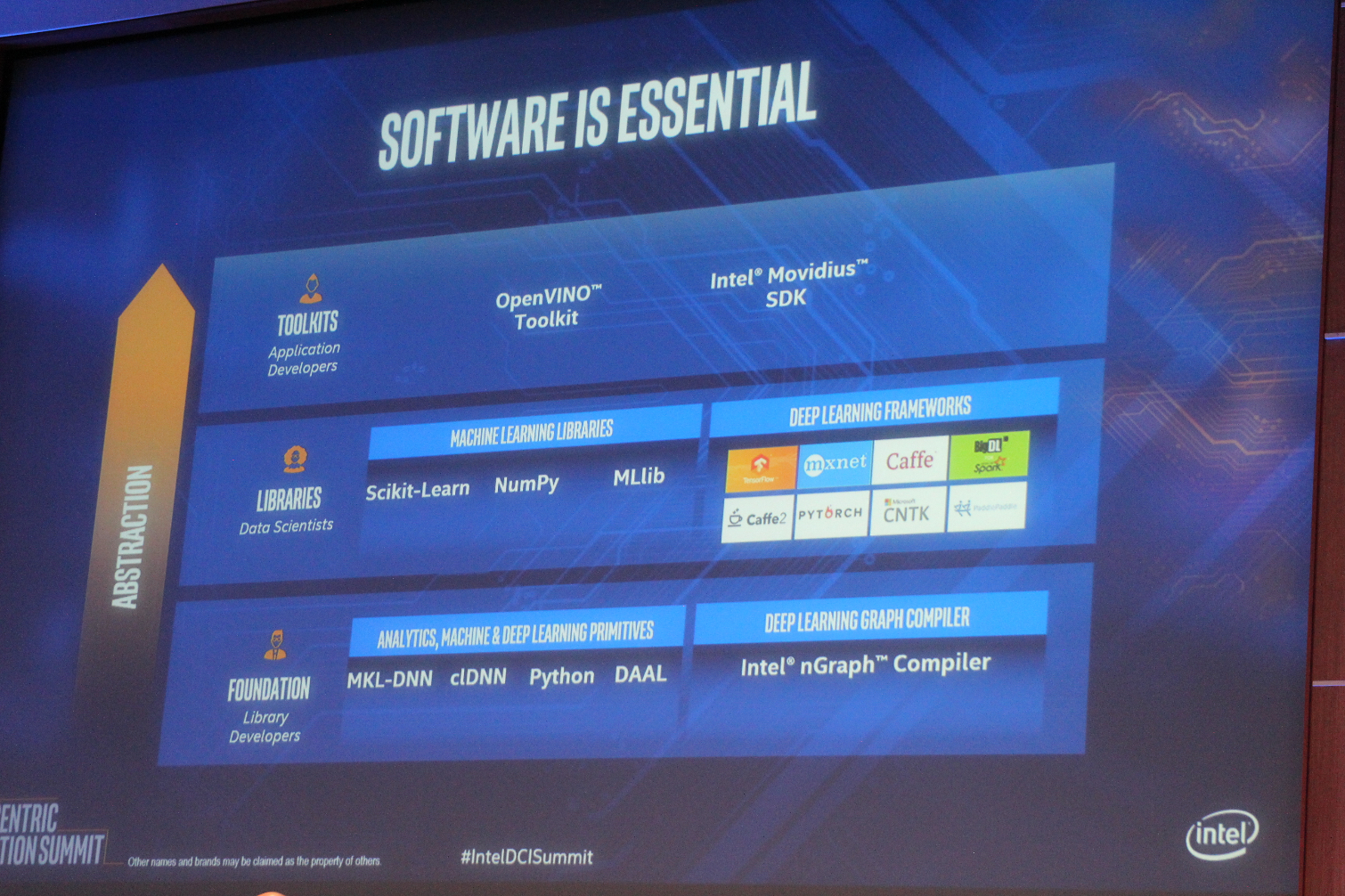

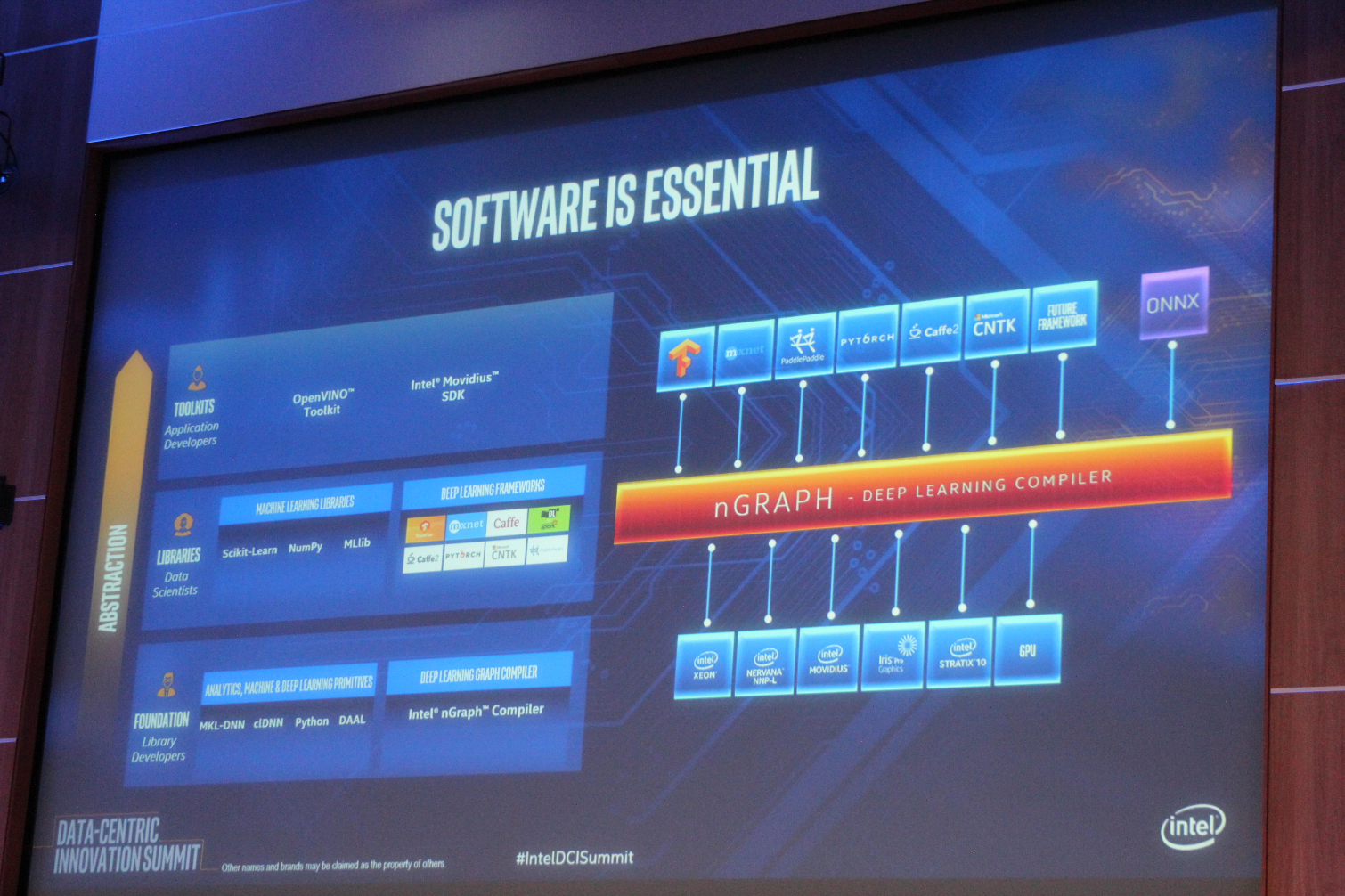

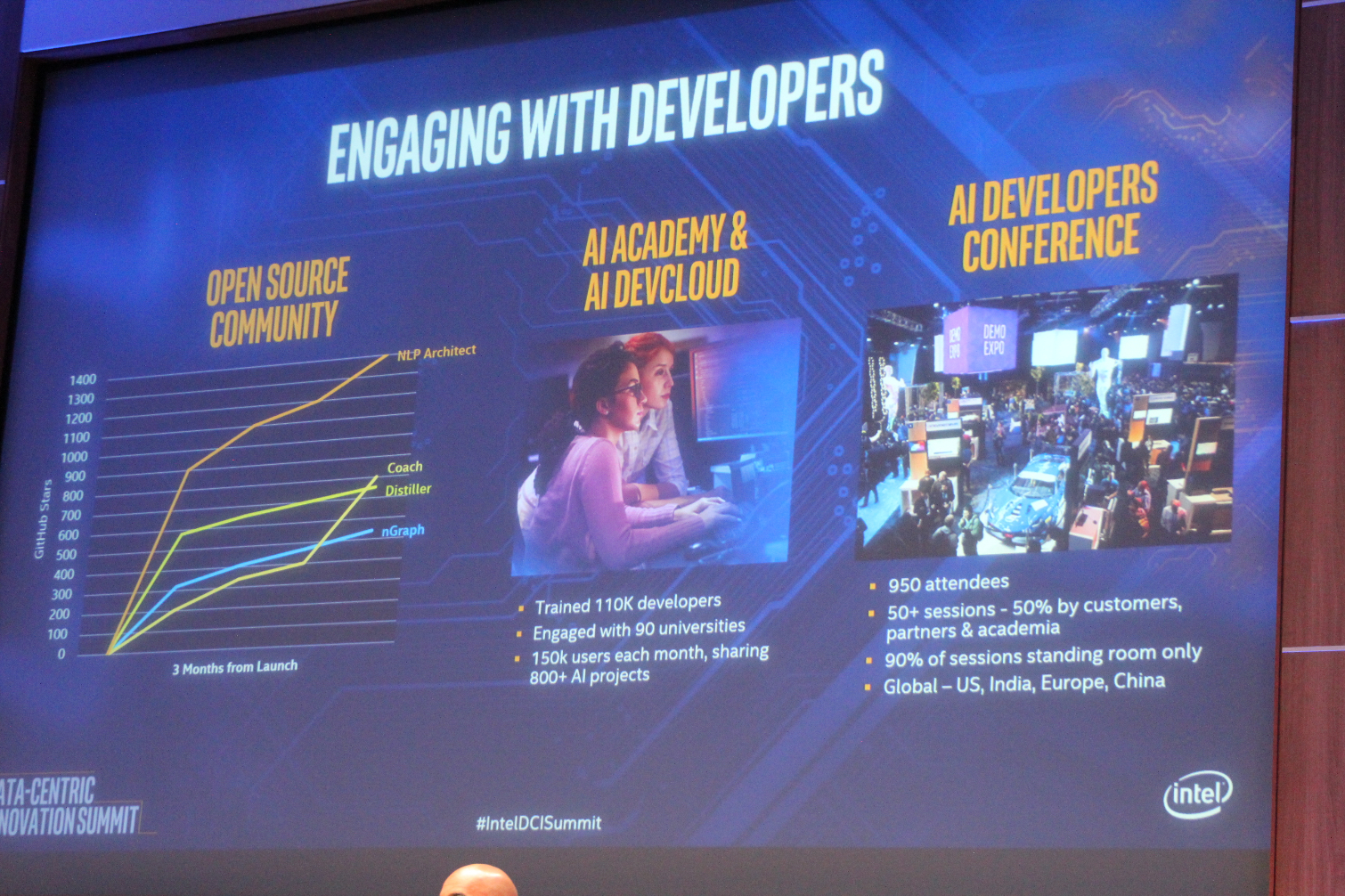

50% of Intel's AI group is dedicated to software. The foundation begins with library developers, and then the libraries move upstream into the open-source community. The stack is evolving rapidly. The goal is to abstract away complexity, making the technology more accessible to garden-variety developers.

Intel's nGraph compiler allows models to run on any type of hardware, which plays well with Intel's multi-pronged hardware approach to AI.

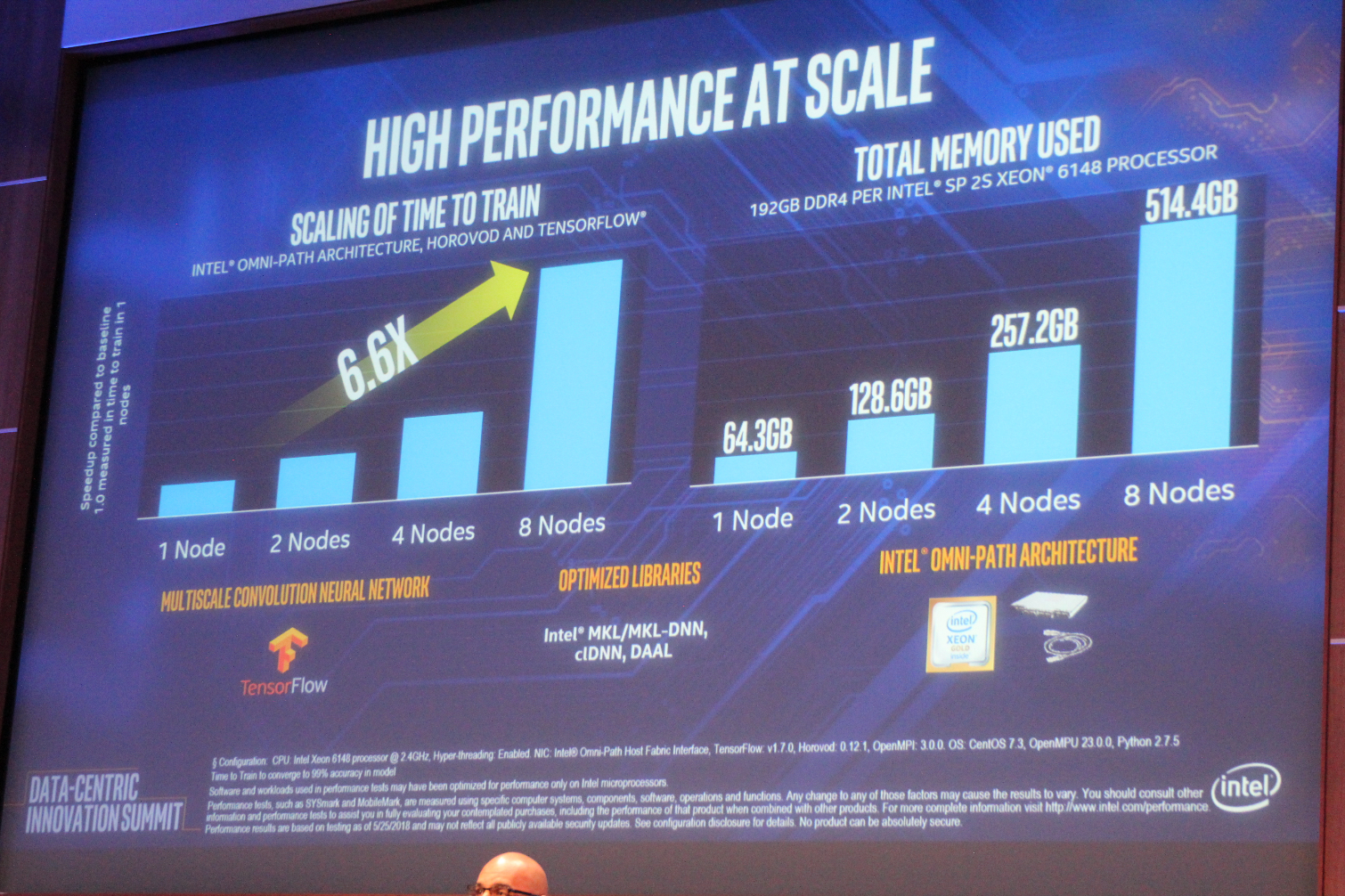

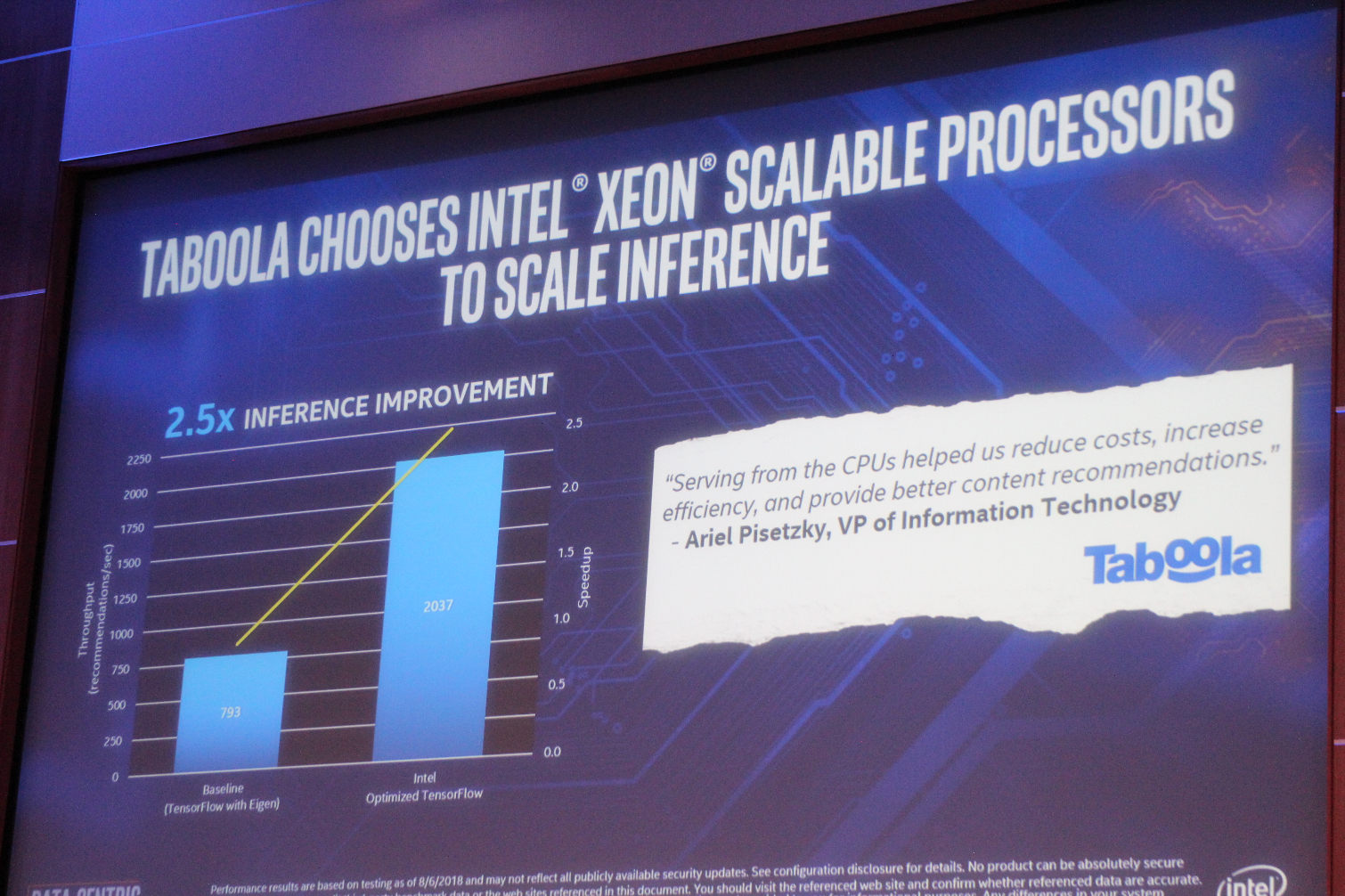

Here are a few slides from the demo and from some of Intel's partners. Highlights include integrated AI workloads that leverage multiple types of Intel hardware simultaneously, such as Xeon CPUs and Omni-Path networking.

Intel is taking a multi-faceted approach to building the AI ecosystem.

Raejeanne Skillern, VP of DCG and GM of the Cloud Service Provider Platform Group, has taken the stage. Cloud service providers are one of the most important market segments for Intel's Xeon processors.

Skillern feels the cloud is still a growing field, even after a decade of development. "The cloud is the foundation of the digital transformation." All of these things translate to big revenue for Intel. The impressive revenue growth in this area is accelerating. This growth is fueled by the different segments listed on the right side of the slide.

Intel's CPUs, combined with 'adjacencies' like networking and storage, help the company design custom solutions for its customers. Intel also develops custom Xeons for the large cloud service providers: it has 30 different custom, off-roadmap SKUs tailored to individual CSPs, and ten large customers in this business. Intel can also combine its own IP with third-party IP to create semi-custom chips, an approach that extends to ASICs.

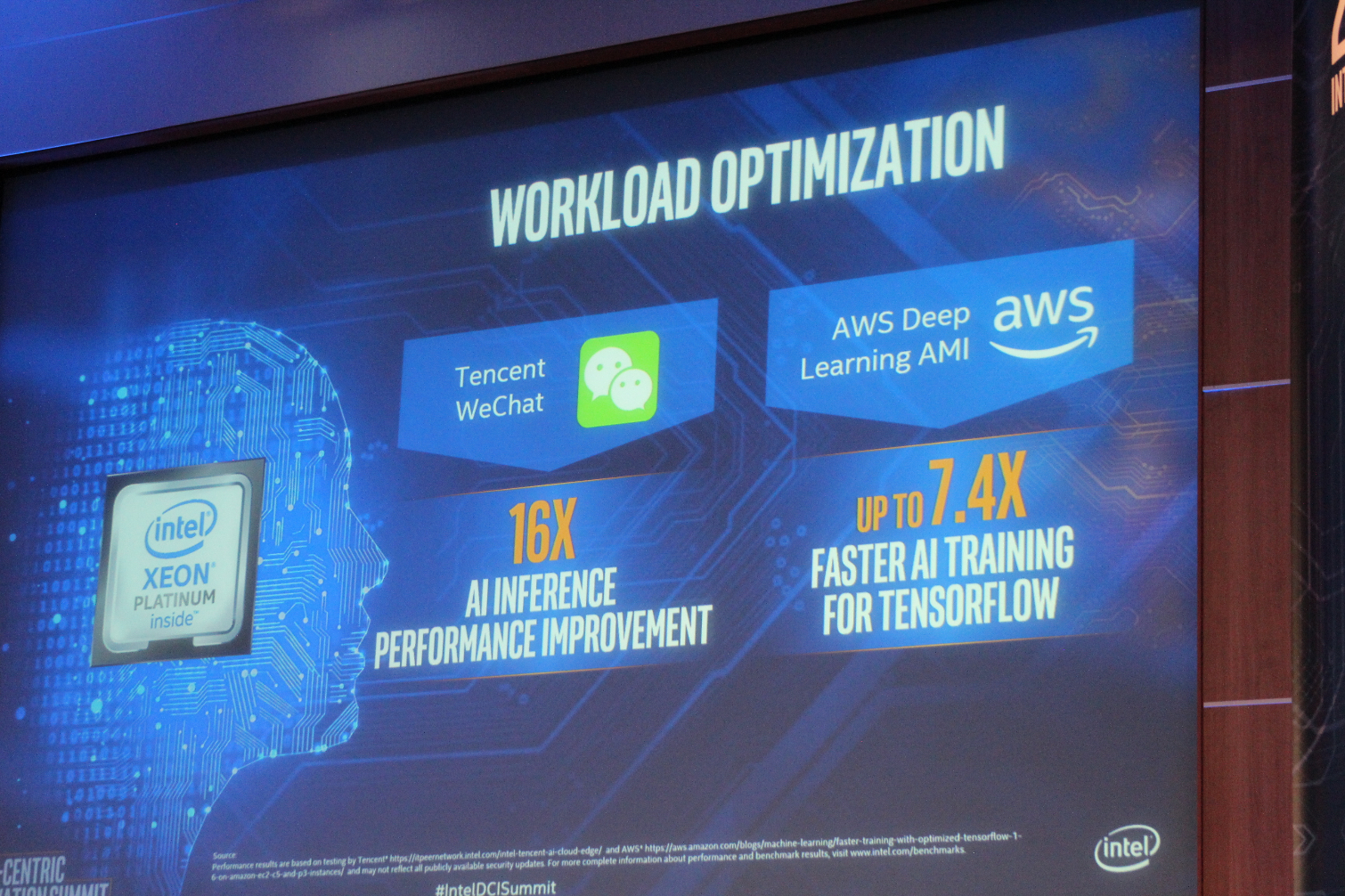

Intel has 200 engineers working on 130 different custom projects. Intel worked with Tencent to optimize AI workload performance for the WeChat platform, which has 1 billion users worldwide, delivering a 16X boost in AI inference performance. This is one of several examples; a project with AWS boosted performance for AI training workloads by 7.4X.

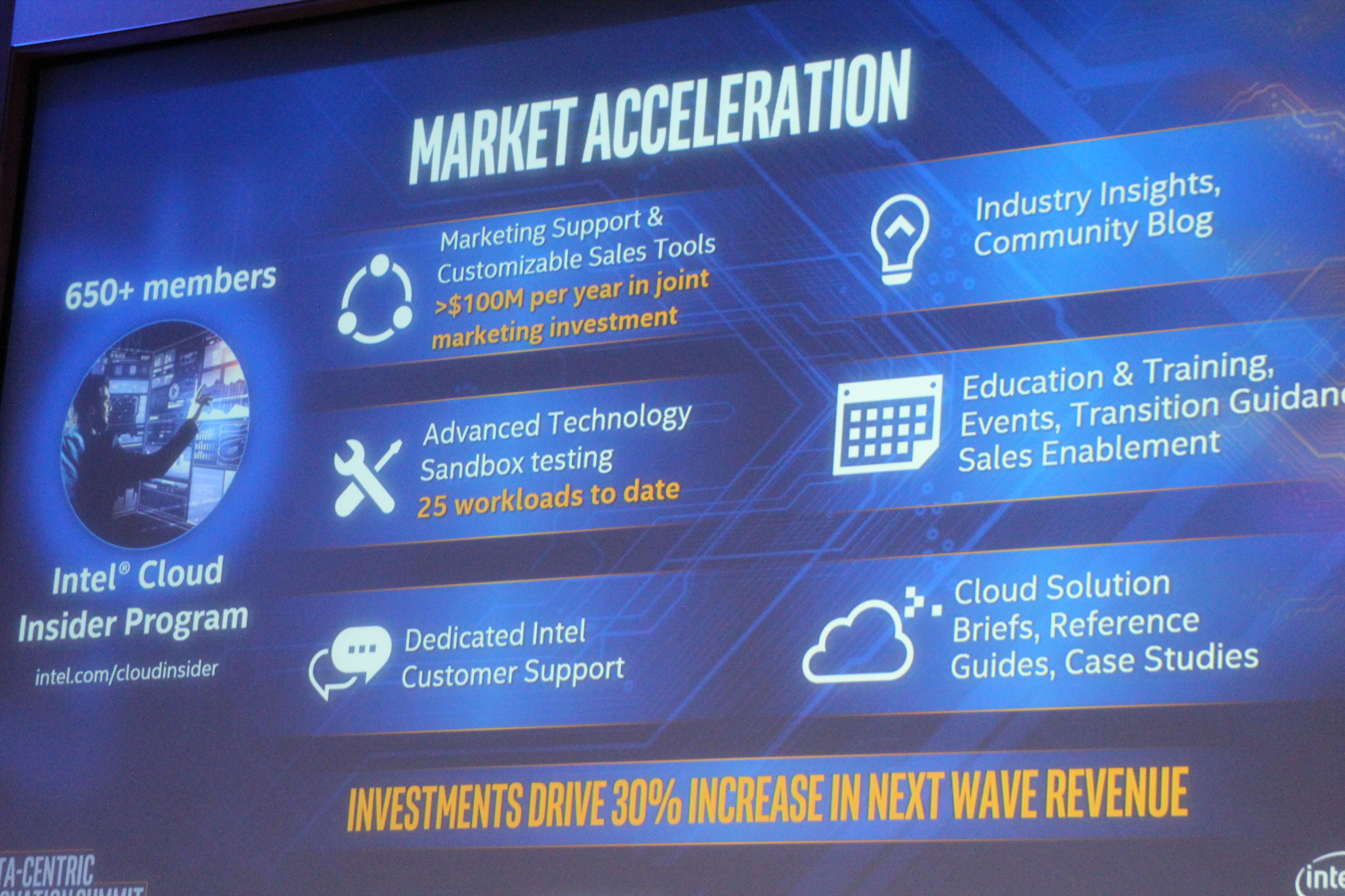

Intel is going to spend $100 million to help its cloud service provider customers promote their services. Intel is also allowing large-scale customers into its labs to test and qualify early hardware.

Skillern is summarizing her presentation, which wraps up the presentations. Technical sessions fill the rest of the day, so stay tuned for more coverage.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

derekullo

What catches my eye is the high speed ethernet.

If you look at the super small caption at the bottom, high speed in this case means 10 gigabit ethernet and above.

Can't wait for more motherboards to start shipping with 10Gb.

bit_user

Nice summary. Thanks for putting it together.

bit_user (replying to derekullo)

This being a datacenter conference, I'm sure they're talking about server ethernet.

For consumers, it looks like Intel's next move will be supporting 2.5 Gbps chipset-integrated ethernet, I think.

jimmysmitty (replying to bit_user)

Integrated into chipset, yes. However, there are a few 10G boards out there using an additional Intel chip:

https://www.asus.com/us/Motherboards/WS-C422-SAGE-10G/specifications/

Not a normal consumer board, though. Still, 2.5/5Gb will probably hit first, and then 10Gb will eventually hit, unless something faster and cheaper comes out first.