Nvidia GTC 2018 Highlights: AI Trucks, Mega-Video Cards & Ray Tracing

The GTC 2018 Slideshow

Nvidia's yearly GPU Technology Conference (GTC) was overflowing with demos, presentations, tutorials, and sessions. As expected, AI and deep learning topics dominated the show. And much to our disappointment (even though we pretty much knew it wasn't happening), Nvidia didn't announce new cards for gaming. The company did announce a new Volta-powered Quadro professional graphics card and what it bills as "The World's Largest GPU," which is actually 16 Tesla V100 GPUs crammed into a single chassis that draws a mind-bending 10kW of power.

We'll cover all that, and more, as we take you on a trip through Nvidia's bustling tech showcase.

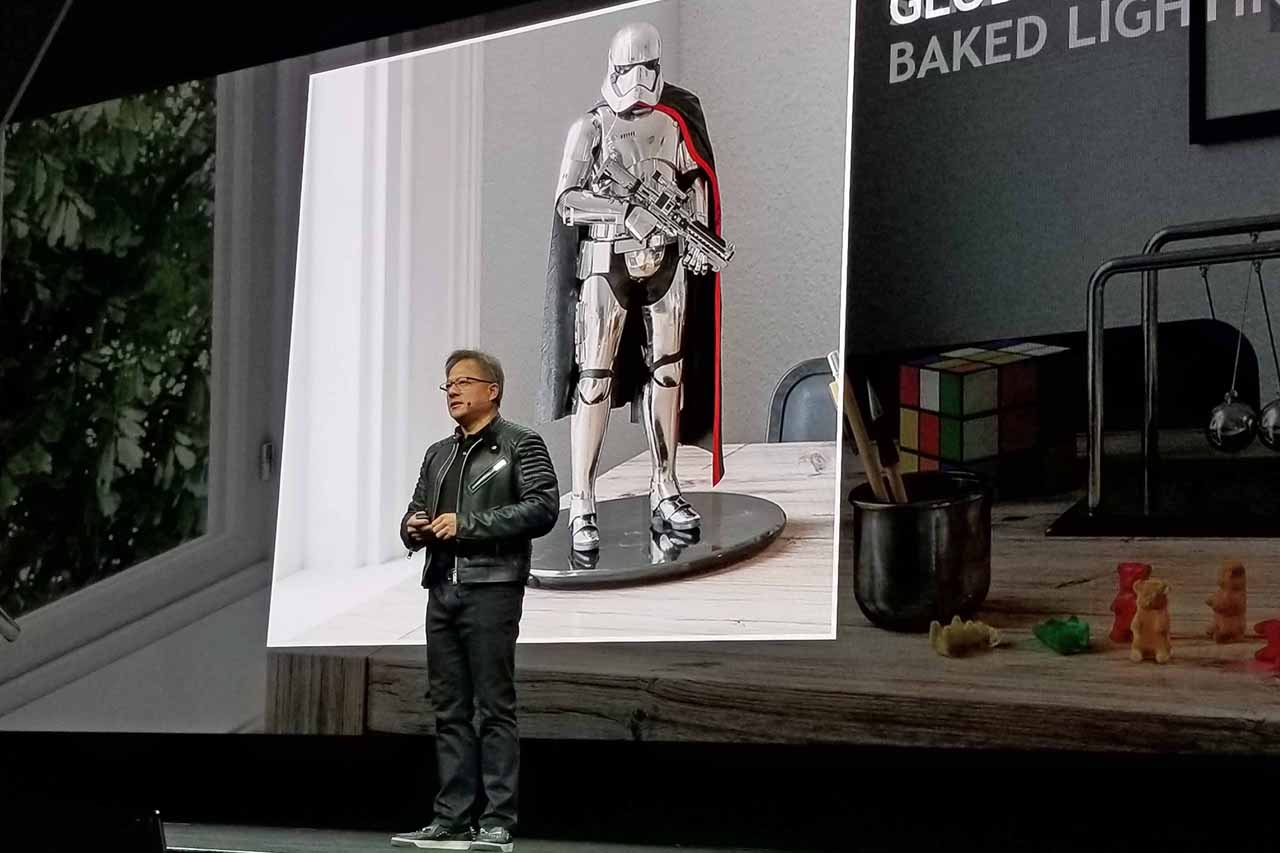

Jensen Huang's Keynote

Jensen Huang, Nvidia's CEO, took to the stage in a freewheeling keynote to announce several new products, including the "world's largest GPU," new photo-realistic real-time ray-tracing advances, the Volta-powered Quadro GV100, and the company's latest advances in autonomous driving and robotics.

Huang's keynote came just days after an autonomous Uber vehicle was involved in a fatal accident with a pedestrian. The accident has sent shockwaves through the industry as Uber pieces together the details of the incident. Huang struck a somber note while he discussed the company's approach to autonomous vehicle safety, explaining that it designs its autonomous driving platforms with both redundancy and diversity to ensure the highest level of safety. Huang later revealed that the company had suspended portions of its autonomous driving program even though Uber's vehicle didn't use Nvidia's DRIVE technology.

Nvidia Unveils "The World's Largest GPU"

Nvidia's new DGX-2 server, which Huang bills as the "World's Largest GPU," comes bearing 16 Tesla V100s wielding a total of 512GB of HBM2. Nvidia's new NVSwitch interconnect fabric ties it all together into a cohesive unit with a unified memory space. The DGX-2 server also features two Intel Xeon Platinum CPUs, 1.5TB of memory, and up to 60TB of NVMe storage all crammed into a chassis weighing 350 pounds.

The system pulls 10 kW of power and, according to Nvidia, provides up to 10 times the deep learning performance of the previous-generation DGX-1. The 12 NVSwitches provide up to 2.4TB/s of throughput between the GPUs, helping the system reach up to two petaFLOPS (via the tensor cores). External connectivity comes in the form of eight EDR InfiniBand or eight 100GbE NICs that provide up to 1,600Gb/s of bandwidth.
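The headline DGX-2 figures can be cross-checked with simple arithmetic. The sketch below is illustrative only: the constant and function names are ours, and counting each 100Gb/s port in both directions is our assumption about how the 1,600Gb/s figure is reached.

```python
# Back-of-the-envelope check of the DGX-2 figures quoted in the article.
# Assumption (not stated in the article): the 1,600Gb/s external bandwidth
# figure counts both directions of each 100Gb/s port.

NUM_GPUS = 16
HBM2_PER_GPU_GB = 32         # each Tesla V100 in the DGX-2 carries 32GB
TOTAL_TENSOR_PFLOPS = 2.0    # system-level tensor-core figure
NUM_NICS = 8
NIC_SPEED_GBPS = 100         # EDR InfiniBand or 100GbE, per direction


def dgx2_summary():
    """Return (total HBM2 in GB, tensor TFLOPS per GPU, external Gb/s)."""
    total_hbm2_gb = NUM_GPUS * HBM2_PER_GPU_GB
    tflops_per_gpu = TOTAL_TENSOR_PFLOPS * 1000 / NUM_GPUS
    external_gbps = NUM_NICS * NIC_SPEED_GBPS * 2  # both directions (assumed)
    return total_hbm2_gb, tflops_per_gpu, external_gbps


if __name__ == "__main__":
    hbm2, tflops, net = dgx2_summary()
    print(f"Total HBM2: {hbm2}GB")
    print(f"Tensor throughput per GPU: {tflops:.0f} TFLOPS")
    print(f"External bandwidth: {net}Gb/s")
```

Under those assumptions, the numbers line up with the article: 512GB of HBM2 in total, 125 TFLOPS of tensor throughput per GPU, and 1,600Gb/s of external bandwidth.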

Nvidia claims the beast can replace 300 Intel servers that occupy 15 racks. And more importantly, the system is purportedly 18 times more power efficient than those 300 servers. Bold claims indeed. You can get your own DGX-2 to test out Nvidia's claims, but it'll set you back $400,000.
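To put those claims in perspective, here is a rough sketch of what they imply about the 300-server cluster. It assumes that "18 times more power efficient" means the same work at one-eighteenth of the power; that reading is ours, not a figure Nvidia is quoted on in this article.

```python
# What the "300 servers, 15 racks, 18x efficiency" claim implies.
# Assumption: "18 times more power efficient" means equal work at 1/18 the power.

SERVERS = 300
RACKS = 15
DGX2_POWER_KW = 10
EFFICIENCY_FACTOR = 18


def implied_cluster():
    """Return (cluster power in kW, watts per server, servers per rack)."""
    cluster_kw = DGX2_POWER_KW * EFFICIENCY_FACTOR
    watts_per_server = cluster_kw * 1000 / SERVERS
    servers_per_rack = SERVERS / RACKS
    return cluster_kw, watts_per_server, servers_per_rack


if __name__ == "__main__":
    kw, watts, per_rack = implied_cluster()
    print(f"Implied cluster power: {kw}kW")
    print(f"Implied power per server: {watts:.0f}W")
    print(f"Servers per rack: {per_rack:.0f}")
```

That works out to a 180kW cluster of roughly 600W servers packed 20 to a rack, which is at least plausible for dual-socket machines.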


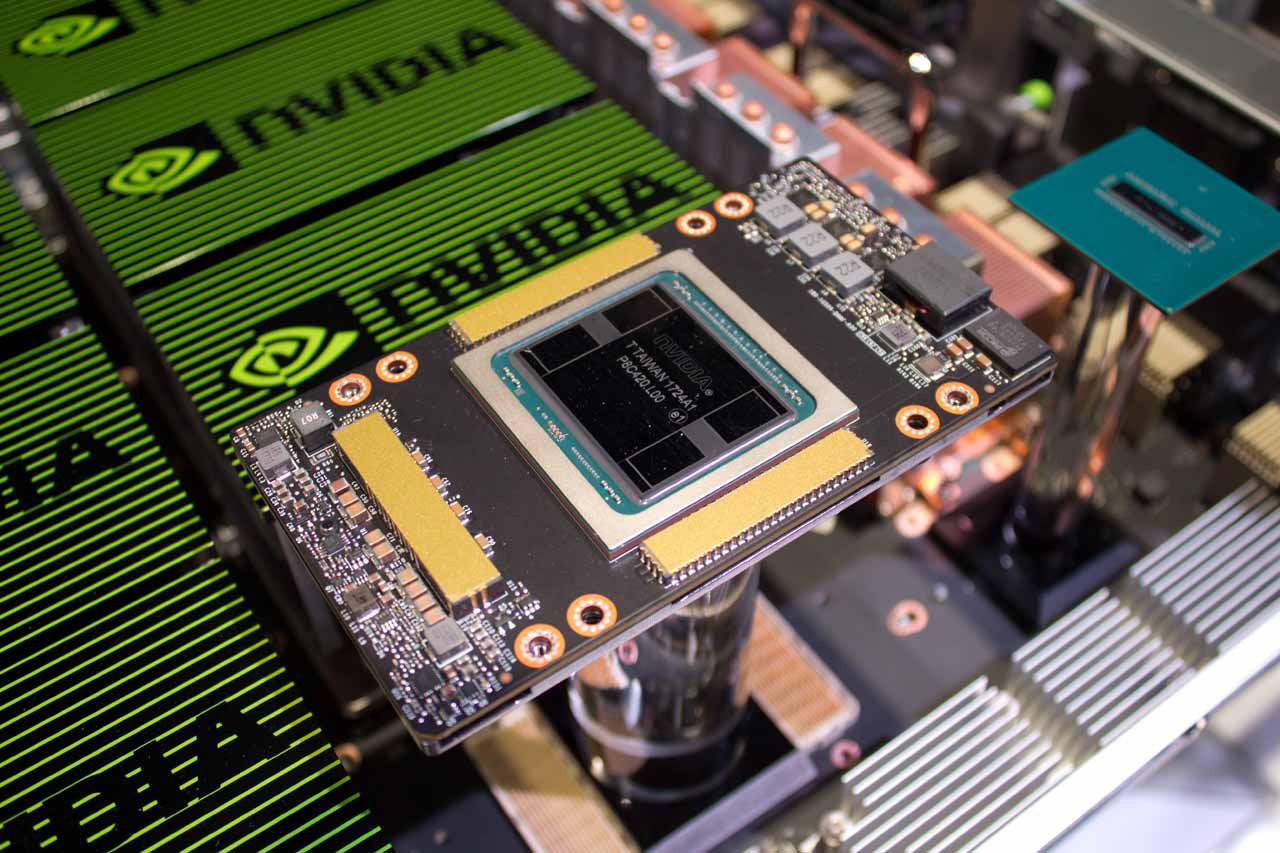

Boosting The Tesla V100

The Tesla V100 is a formidable beast: the 815mm² die is the size of a full reticle and wields 21 billion transistors. Here we see the massive die on Nvidia's new SXM3 package found in the DGX-2 system. The updated package runs at 350W (a 50W increase over the older model) and comes with 32GB of HBM2.

Nvidia announced that it would bump all new Tesla V100s up to 32GB of HBM2 memory, effective immediately. The nagging memory shortage has been a key factor in the current GPU shortage, so the Tesla V100's increased memory allocation could exacerbate the problem. Nvidia is also taking the unusual step of suspending sales of the 16GB model, so all new models will feature the increased memory capacity.

Several blue-chip OEMs (like Dell EMC, HPE, and IBM) have announced that new systems with the beefed-up card will appear in Q2. So we expect the units will fly off shelves as the data center continues its transition to AI-centric architectures.

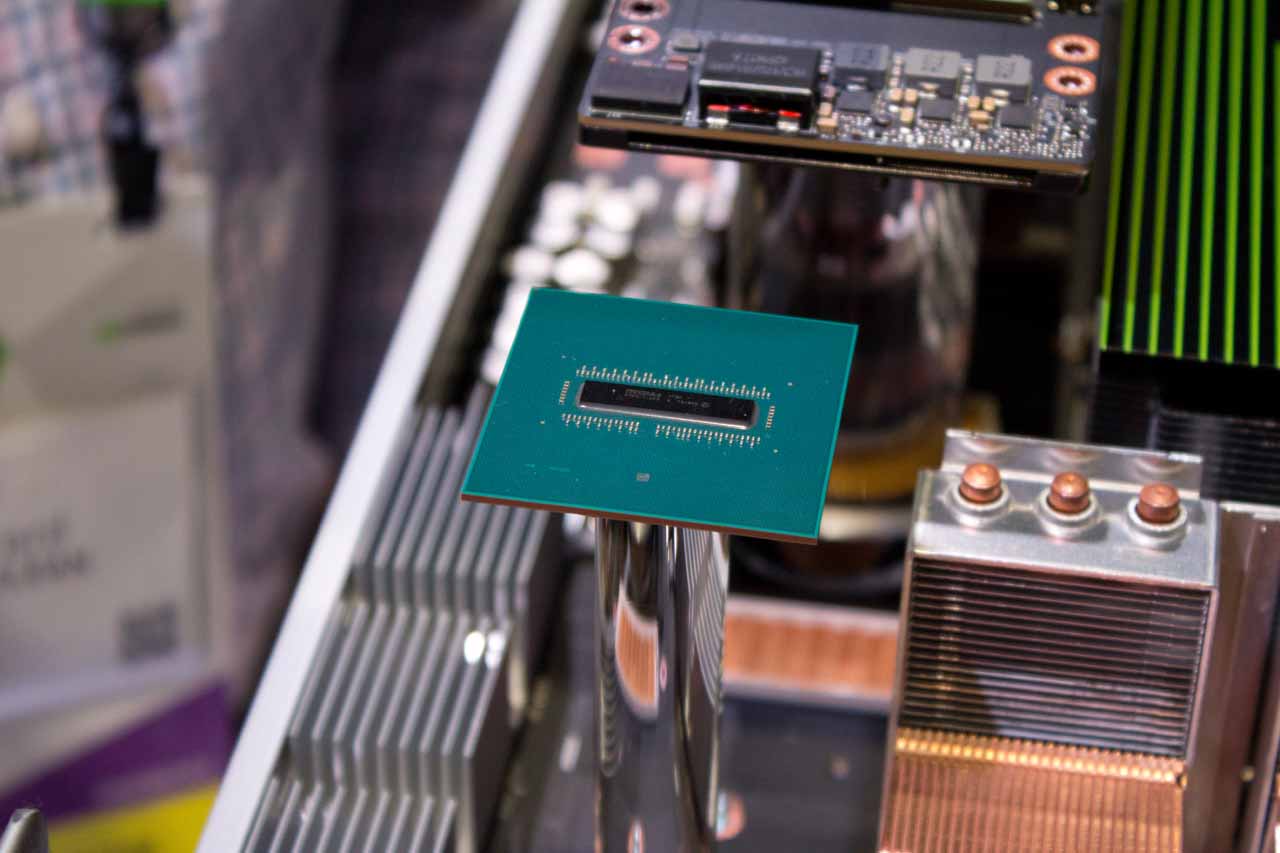

Hitting The NVSwitches

Nvidia's new NVSwitch is a breakthrough achievement for the company that allows it to tie 16 GPUs together in the DGX-2 server. The system features 12 NVSwitches that deliver an aggregate of 14.4TB/s of bandwidth.

Nvidia developed its NVLink fabric to sidestep PCIe's bandwidth limitations, but scalability is limited by the six NVLink ports available per GPU. The new NVSwitch bypasses those limitations with 18 8-bit links per switch that deliver a total of 300GB/s of bandwidth to each GPU. That's about 10 times the speed of standard PCIe 3.0 connections. Huang likened this to having 200 NICs connecting the DGX-2's top and bottom trays.

The switches feature two billion transistors fabbed on TSMC's 12nm FinFET process. The resulting fabric uses memory semantics to avoid disrupting the existing GPU programming models. The fabric is a non-blocking crossbar, meaning it delivers the full 300GB/s of bandwidth to each GPU regardless of how many are actively transmitting data, and provides a unified memory space that allows for larger AI models. As with all switches and fabric topologies, there will be a latency penalty for traversing the interconnect, but Nvidia hasn't shared specifics yet.
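The bandwidth figures in this section hang together arithmetically, as the rough sketch below shows. The per-port NVLink rate (50GB/s, both directions combined) and the PCIe 3.0 x16 parameters are our assumptions and are not taken from Nvidia's presentation. The function names are ours as well.

```python
# Sanity check of the NVLink/NVSwitch bandwidth figures.
# Assumptions (not from the article): each NVLink 2.0 port moves 50GB/s with
# both directions combined, and the PCIe comparison is against an x16 3.0 slot.

NVLINK_PORTS_PER_GPU = 6
NVLINK_GBS_PER_PORT = 50        # GB/s, bidirectional (assumed)
PCIE3_GTS_PER_LANE = 8          # gigatransfers per second per lane
PCIE3_ENCODING = 128 / 130      # 128b/130b line encoding overhead
PCIE_LANES = 16
NUM_GPUS = 16


def nvlink_gbs_per_gpu():
    """Aggregate NVLink bandwidth available to one GPU, in GB/s."""
    return NVLINK_PORTS_PER_GPU * NVLINK_GBS_PER_PORT


def pcie3_x16_gbs():
    """Bidirectional bandwidth of a PCIe 3.0 x16 link, in GB/s."""
    per_lane_gbs = PCIE3_GTS_PER_LANE * PCIE3_ENCODING / 8  # bits to bytes
    return per_lane_gbs * PCIE_LANES * 2                    # both directions


def bisection_tbs():
    """Bandwidth between two halves of the GPU pool, in TB/s."""
    return (NUM_GPUS // 2) * nvlink_gbs_per_gpu() / 1000


if __name__ == "__main__":
    print(f"NVLink per GPU: {nvlink_gbs_per_gpu()}GB/s")
    print(f"PCIe 3.0 x16: {pcie3_x16_gbs():.1f}GB/s")
    print(f"Ratio: {nvlink_gbs_per_gpu() / pcie3_x16_gbs():.1f}x")
    print(f"Bisection bandwidth: {bisection_tbs():.1f}TB/s")
```

With these inputs, six ports yield the quoted 300GB/s per GPU, which is a little under 10 times a PCIe 3.0 x16 link. Eight GPUs on one tray talking to eight on the other give the 2.4TB/s figure quoted for the DGX-2.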

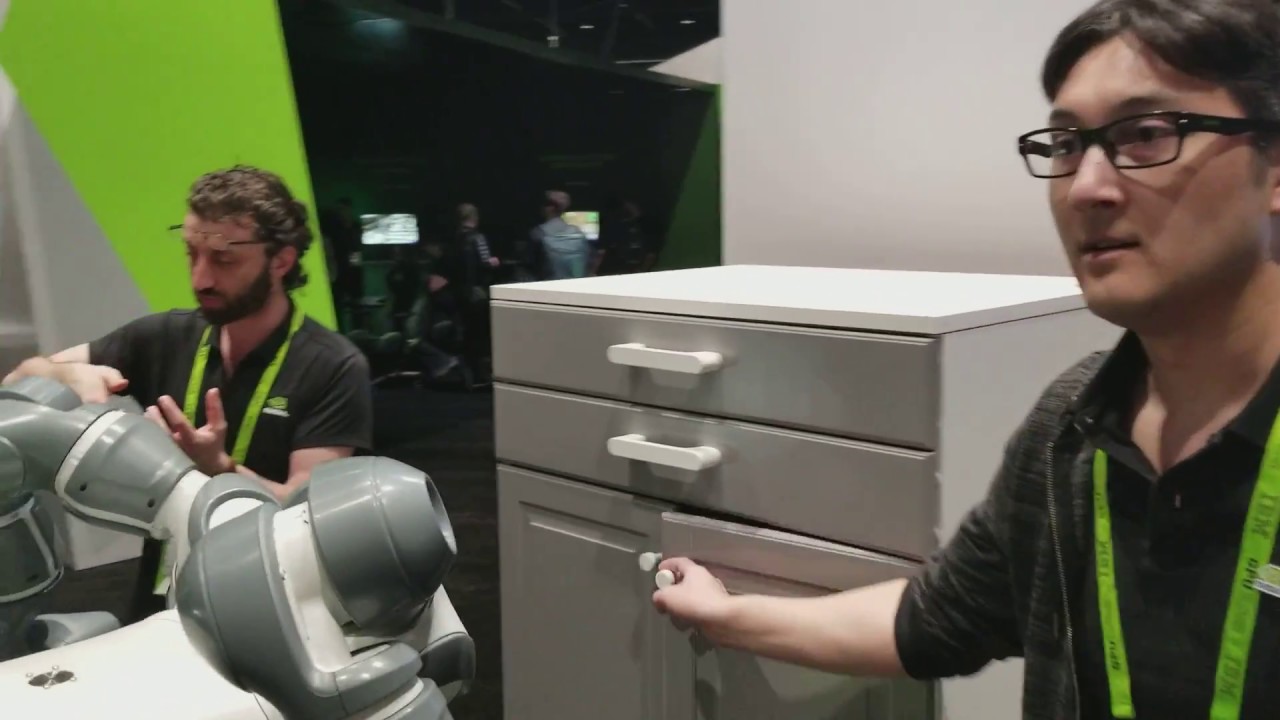

Let Me Do That For You

Nvidia's new Isaac SDK is a suite of libraries, drivers, APIs and other tools designed to allow manufacturers to develop next-generation robots that leverage AI for perception, navigation, and manipulation.

Nvidia's demo showcased a 7DoF robot from ABB opening and closing cabinet drawers in real time. The system uses a simple Xbox Kinect sensor to detect its environment, highlighting how AI can enable the use of cheaper components for next-generation robots. Nvidia also debuted its new Isaac Sim, which is a testing suite that allows developers to take their new robots for a spin in a simulated testing environment, thus saving us humans from costly accidents--or robot uprisings.

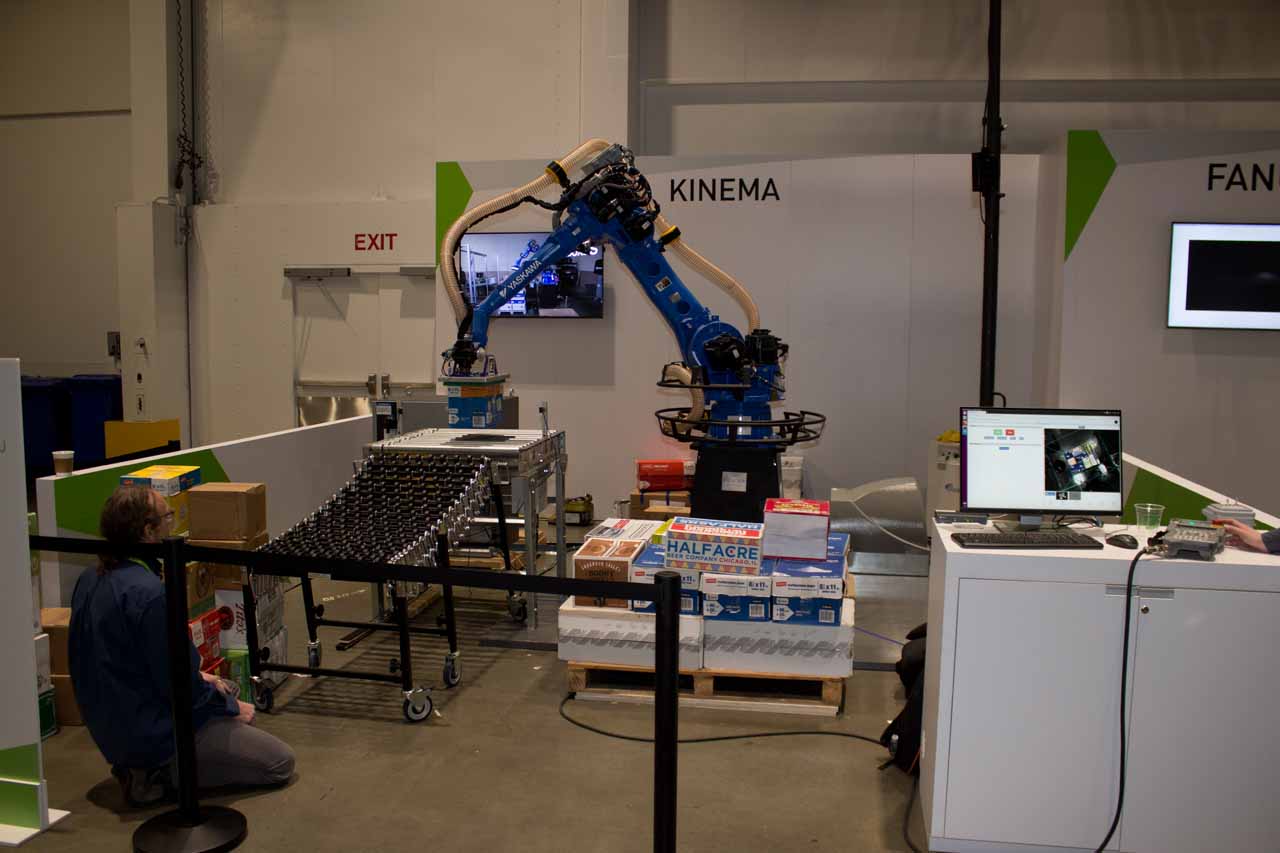

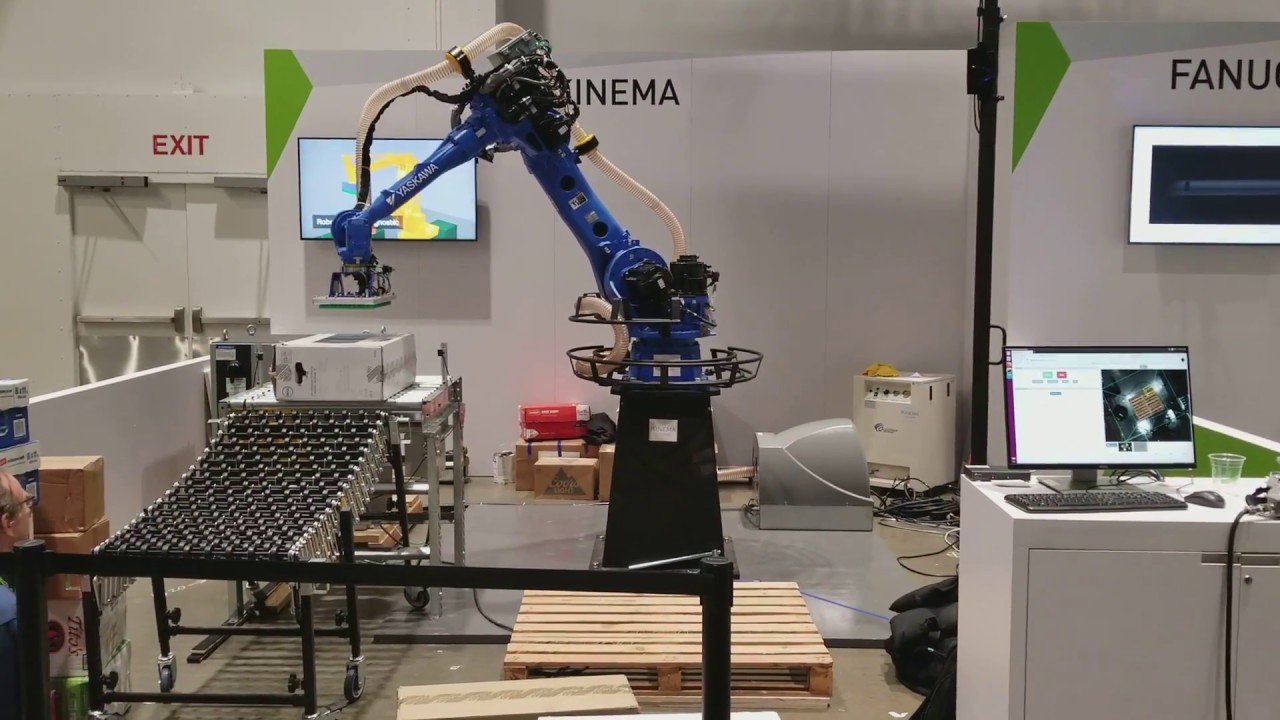

Revolutionizing The Warehouse

If you're wondering where all of the GeForce GTX 1060s have gone, we've got your answer. Kinema Systems brought its rather large robot to the show to demonstrate how it can detect and unload assorted items from a pallet, and it's all powered by that popular graphics card.

Multi-SKU picking involves sorting and moving packages of various sizes and weights from a randomly-packed pallet. In the past, training robots for these types of tasks was almost impossible because they couldn't sense the difference between different boxes.

AI changes that. Kinema trains deep-learning models to detect boxes and identify them automatically with information the system gathers online. The system then employs a video camera above the robot and runs an inference workload to analyze the video output, thus allowing the robot to detect the size, shape, and weight of the randomly-placed boxes. The company uses a GTX 1060 to power the robot. Nvidia recently changed its EULA for consumer devices to forbid their use in data centers. But we aren't aware of any restrictions on industrial use, so more of our gaming cards could be destined for the local robot-equipped warehouse.

Step Aboard The Holodeck

Jensen Huang debuted the photo-realistic Holodeck during last year's GTC keynote. This year's GTC found the company opening the new technology up to the public. Warner Brothers' Ready Player One movie debuts this week at theaters nationwide, but Nvidia provided a sneak peek with a VR rendition of "Aech's Basement," which is an escape room that requires teams of four to interact in virtual reality to solve various puzzles that eventually allow them to escape. Nvidia also had Koenigsegg Automotive AB's Regera Hypercar demo open to the public.

Quadro GV100 GPU, Yours For $9,000

Nvidia also debuted its new Quadro GV100 GPU at this year's GTC. The latest Quadro brings the Volta architecture to Nvidia's line of professional graphics cards. The GV100 comes packing 32GB of HBM2 memory, 5,120 CUDA cores, and 640 Tensor cores. Nvidia claims the beastly card delivers up to 7.4 TeraFLOPS of double-precision performance and 14.8 TeraFLOPS of single-precision performance. Nvidia says the card can also provide up to 118.5 TeraFLOPS of deep learning performance (a measure of Tensor Core performance).
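Those peak figures follow from the core counts and a boost clock, which Nvidia's quoted numbers imply but the article doesn't state. The sketch below works backward to that clock. It assumes one fused multiply-add (two floating-point operations) per CUDA core per clock and 64 fused multiply-adds per Tensor core per clock. Those per-clock rates are our assumptions about Volta, not figures from the announcement.

```python
# Work backward from the quoted peak TFLOPS to the boost clock they imply.
# Assumptions (not from the article): a CUDA core retires one FMA (2 FLOPs)
# per clock, and a Tensor core retires 64 FMAs (128 FLOPs) per clock.

CUDA_CORES = 5120
TENSOR_CORES = 640
FP64_TFLOPS = 7.4
FP32_TFLOPS = 14.8
TENSOR_TFLOPS = 118.5

FLOPS_PER_CUDA_CORE_PER_CLOCK = 2
FLOPS_PER_TENSOR_CORE_PER_CLOCK = 64 * 2


def implied_clock_ghz(tflops, units, flops_per_unit_per_clock):
    """Clock (GHz) needed for `units` execution units to reach `tflops`."""
    return tflops * 1e12 / (units * flops_per_unit_per_clock) / 1e9


if __name__ == "__main__":
    fp32_clock = implied_clock_ghz(
        FP32_TFLOPS, CUDA_CORES, FLOPS_PER_CUDA_CORE_PER_CLOCK)
    tensor_clock = implied_clock_ghz(
        TENSOR_TFLOPS, TENSOR_CORES, FLOPS_PER_TENSOR_CORE_PER_CLOCK)
    print(f"Clock implied by FP32 figure: {fp32_clock:.3f}GHz")
    print(f"Clock implied by Tensor figure: {tensor_clock:.3f}GHz")
    print(f"FP64-to-FP32 ratio: {FP64_TFLOPS / FP32_TFLOPS:.2f}")
```

Both paths point to a boost clock of roughly 1.45GHz, and the double-precision figure is exactly half the single-precision one, so the three quoted numbers are mutually consistent.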

The company plans for workstation users to leverage its RTX technology. This will open up real-time raytracing to broader use cases, such as media creation.

If one GV100 isn't enough, Nvidia has also infused the new cards with NVLink 2, which means you can combine two cards into a single unit (much like SLI). Pairing two of the cards will cost you, though. A single card retails for $9,000.

First We Map The Site, Then We Build

No, that's not an Nvidia-branded bulldozer. AI pioneers foresee automation eventually breaking free of the bounds of standard vehicles and taking over construction equipment. To the relief of construction workers everywhere, that future is still off on the distant horizon. For now, enterprising companies like Skycatch are using intelligent drones (pictured to the left) to map construction sites using Nvidia's Jetson development kit.

The DRIVE PX Pegasus

Nvidia had its beastly DRIVE PX Pegasus module on display. Nvidia designed the supercomputer to power the latest Level 5 autonomous vehicles. The system comes outfitted with dual Tesla V100s and Xavier SoCs. The Pegasus crunches through 320 trillion operations per second to analyze the crushing amount of data flowing from the 360-degree cameras and LIDAR.

The system only consumes 500W of power, which is important because power consumption equates directly to mileage in electric vehicles. Pegasus is still in development. The final version will feature two of Nvidia's unnamed next-generation GPUs instead of the GV100 modules. Nvidia claims Pegasus will be the first Level 5 system to attain ASIL-D certification, which is the automotive industry's most stringent integrity certification.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

dudmont Without a new GPU release to report, I'm betting draft day for the Chiefs (or my Vikings) will be markedly more interesting. Lemonade from lemons?

-

mischon123 "overflowing with demos, presentations, tutorials, and sessions".

Screw that.

We only want speed, low latency and 100% compatibility. @Nvidia cut the expensive marketing out. If you have a good product in the pipeline - make it an engineers product. Fluff just adds to your cost and ours. 5 star ratings and good reviews sell products. The guys and girls in skinnies are just leeches.

-

envy14tpe I'm sitting on my 2.5yr old 980ti waiting to hear what the new Nvidia GPU I will buy is. In the meantime, GO PACK.

-

bit_user Robotics is one of those cases where you really want ECC memory (i.e. a Tesla GPU). A robot that big could take somebody's head off.

-

bit_user

> 20845282 said: "overflowing with demos, presentations, tutorials, and sessions".
>
> Screw that.
>
> We only want speed, low latency and 100% compatibility. @Nvidia cut the expensive marketing out. If you have a good product in the pipeline - make it an engineers product. Fluff just adds to your cost and ours. 5 star ratings and good reviews sell products. The guys and girls in skinnies are just leeches.

I'm not sure what you're talking about, but this event was aimed squarely at professionals, engineers, and researchers. Try browsing the agenda.

https://2018gputechconf.smarteventscloud.com/connect/search.ww

-

bit_user

> 20846139 said: @Nvidia - stop wasting money on pointless advertisement and hyperbole. You will not make any money for shareholders by wasting their money more than you can recover in imaginary profit. Serve the real people that make your company money; the middle income population. Sheesh, you think Nvidia would know things after all these years O.o

How do you think they got so big in deep learning and high-performance computing? It's because they invested a lot in those markets, via things like GTC. Those investments are now paying dividends, which will in turn benefit gamers.

> 20846583 said: I'm sitting on my 2.5yr old 980ti waiting to hear what the new Nvidia GPU I will buy is.

So, you're complaining that they haven't released two full generations in 2.5 years? Talk about entitled...

> 20846583 said: In the meantime, GO PACK.

Right. So, this conference (GTC = GPU Technology Conference) is not for you. In case you haven't noticed, the world doesn't revolve around gamers. Sure, gamers helped fund the early development of programmable GPUs, but the technology has grown beyond gaming, and that's what this is about.

For gaming-oriented GPU news, see their GDC (Game Developers Conference) coverage:

http://www.tomshardware.com/picturestory/824-gdc-2018-highlights-vr.html

Also, unlike GTC, it's not an Nvidia-centric event.