Nvidia Announces Quadro GV100, New NVSwitch, 32GB Tesla V100 At GTC 2018

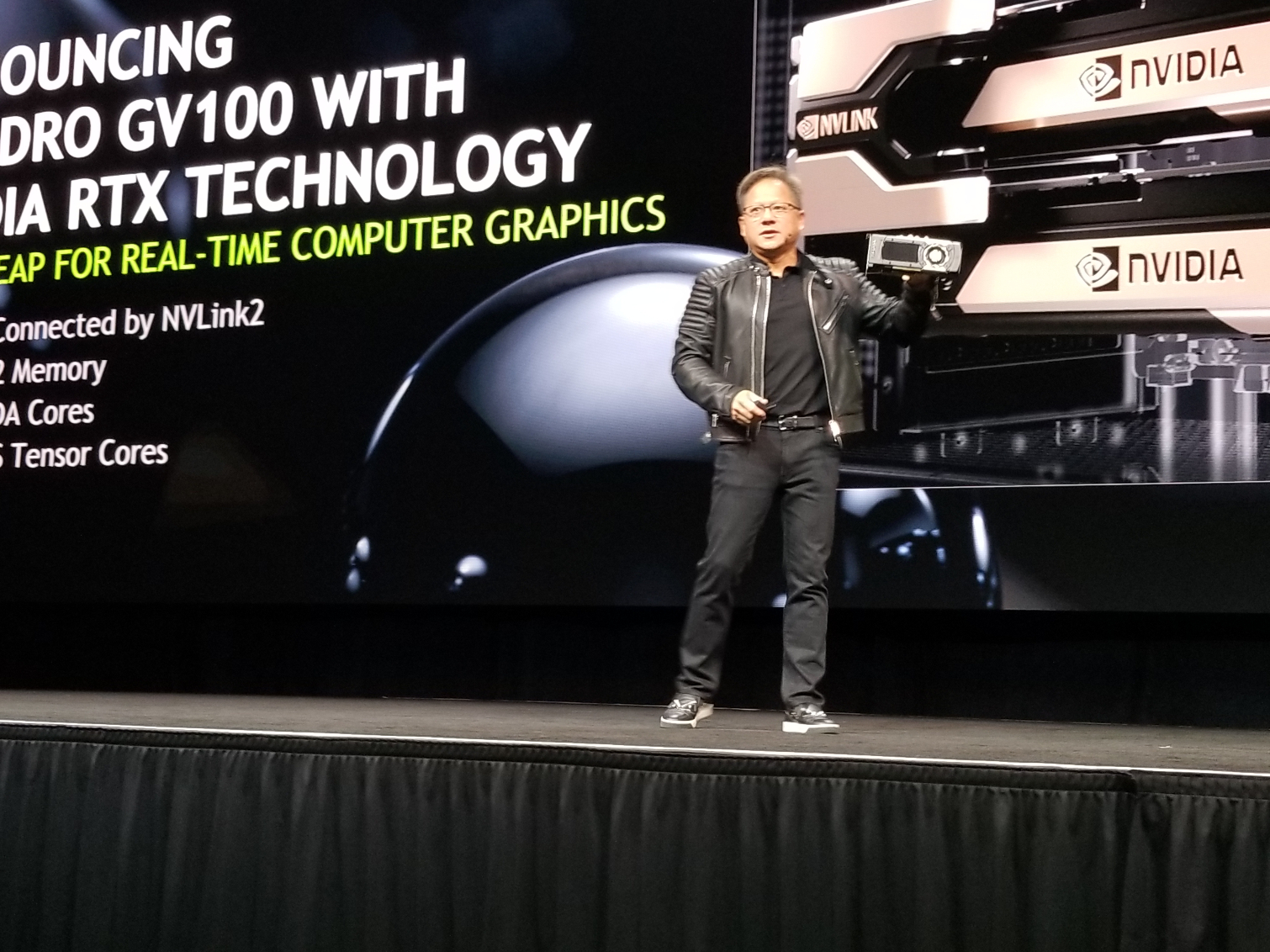

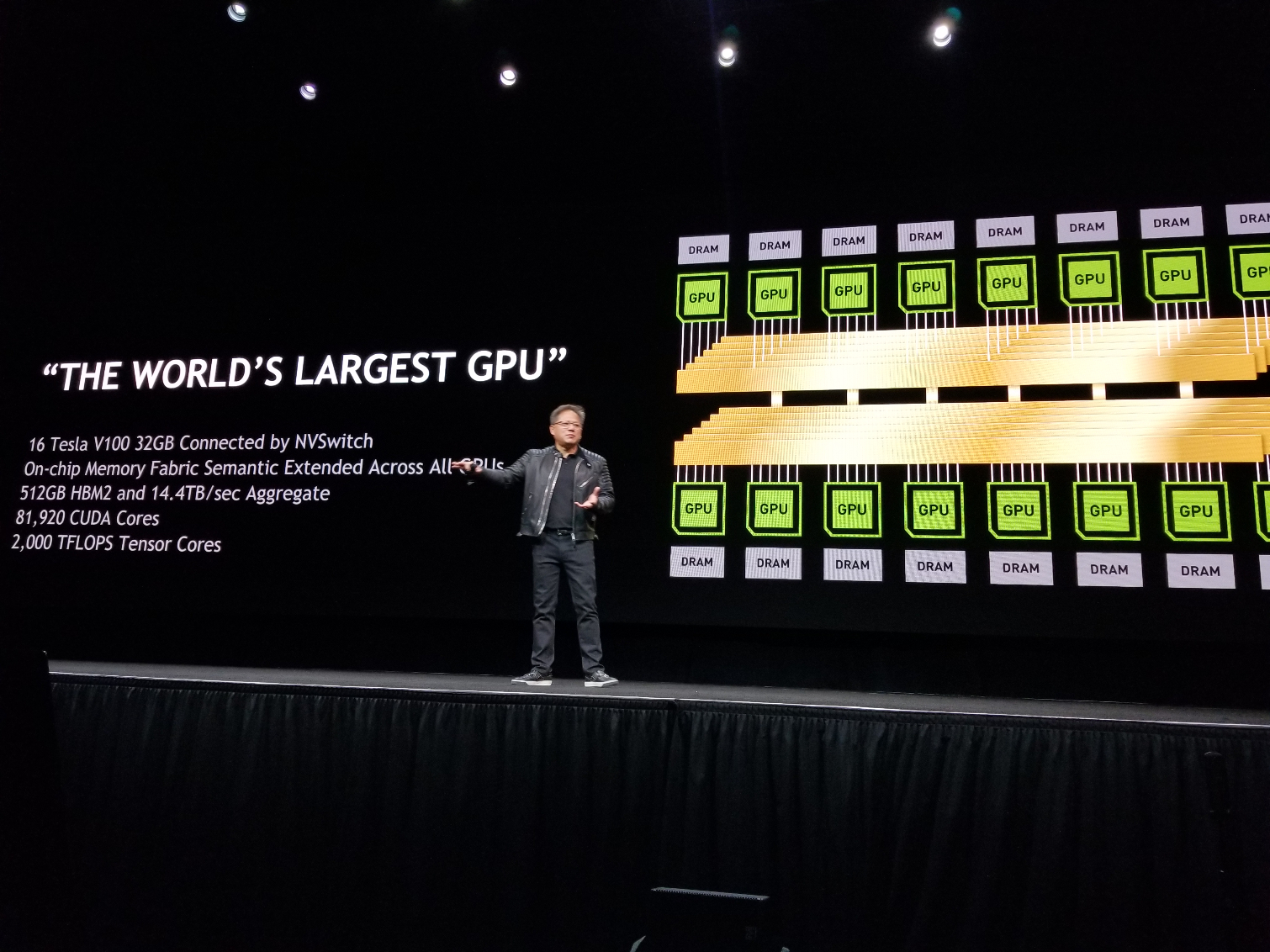

Nvidia CEO Jensen Huang made his keynote presentation here at GTC 2018 in San Jose. Huang announced the company's new DGX-2 system, which Huang calls the "World's Largest GPU." He also unveiled a new Quadro GV100 GPU, a 32GB V100 GPU, and Nvidia's new NVSwitch technology that allows up to 16 GPUs to work in tandem. Huang also outlined advances in several key areas, such as deep learning and autonomous driving.

Jensen's keynote comes as the company fights to restore supply to a devastated graphics card market that is weathering the deepest shortage in its history. Both Nvidia and AMD are enjoying record sales this year (every card they manufacture is a guaranteed sale), but a nagging memory shortage has restricted supply. That has driven prices sharply higher in the consumer market, and it has also hit Nvidia's customers in the lucrative, high-margin enterprise space.

Undeterred, Nvidia has continued to diversify its portfolio as it expands into autonomous driving and IoT applications, all while pressing its data center dominance in deep learning and inference applications. Here are a few of the announcements Huang made during his keynote.

The Nvidia Quadro GV100 GPU

Huang announced the new Quadro GV100 GPU that Nvidia designed to bring the company's recently announced RTX ray-tracing technology into workstations. Nvidia's RTX ray-tracing technology is a combination of software and hardware that allows applications to generate real-time ray tracing effects.

The Quadro GV100 comes with 32GB of memory, meaning it shares the same underlying design as the revamped Tesla V100, which we cover below. The GV100 can deliver up to 7.4 TeraFLOPS of double-precision and 14.8 TeraFLOPS of single-precision performance. Nvidia says the card can also provide up to 118.5 TeraFLOPS of deep learning performance (a measure of Tensor Core throughput).
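These three figures hang together under the usual peak-throughput formula: execution units × FLOPs per unit per clock × clock speed. The sketch below is a rough consistency check, not Nvidia's methodology. It assumes Volta's published per-GPU unit counts (5,120 FP32 cores, 2,560 FP64 units, 640 Tensor Cores each performing 64 fused multiply-adds per clock), which this article does not state, and counts one FMA as two FLOPs. It backs out the clock implied by the quoted FP32 figure and uses it to predict the other two.

```python
# Peak-throughput sanity check for the Quadro GV100 figures quoted above.
# Assumed unit counts (from Nvidia's Volta spec, not stated in this article).
FP32_CORES = 5120
FP64_UNITS = 2560
TENSOR_CORES = 640
FLOPS_PER_FMA = 2          # one fused multiply-add counts as two FLOPs
TENSOR_FMA_PER_CLOCK = 64  # each Tensor Core: one 4x4x4 matrix FMA per clock

def implied_clock_hz(fp32_tflops: float) -> float:
    """Back out the clock that would yield the quoted FP32 peak."""
    return fp32_tflops * 1e12 / (FP32_CORES * FLOPS_PER_FMA)

def peak_tflops(units: int, flops_per_clock: int, clock_hz: float) -> float:
    """Peak throughput in TFLOPS for a given unit count and clock."""
    return units * flops_per_clock * clock_hz / 1e12

clock = implied_clock_hz(14.8)
fp64 = peak_tflops(FP64_UNITS, FLOPS_PER_FMA, clock)
tensor = peak_tflops(TENSOR_CORES, TENSOR_FMA_PER_CLOCK * FLOPS_PER_FMA, clock)

print(f"implied clock: {clock / 1e9:.3f} GHz")
print(f"FP64 peak:     {fp64:.1f} TFLOPS")
print(f"Tensor peak:   {tensor:.1f} TFLOPS")
```

The predicted Tensor figure lands at about 118.4 TFLOPS, within rounding of the quoted 118.5, and FP64 comes out at exactly half the FP32 rate.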

The cards also support the NVLink 2 interconnect technology, which makes it possible to pair two of these devices together. That brings a total of 64GB of HBM2 memory, 10,240 CUDA cores, and 1,280 Tensor Cores together in a single workstation.
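The dual-card totals are simple doubling of per-card resources. This minimal sketch assumes 640 Tensor Cores per Quadro GV100, taken from Nvidia's GV100 specification rather than from this article; the `Gpu` type and `nvlink_pair_totals` helper are hypothetical names for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Gpu:
    name: str
    hbm2_gb: int
    cuda_cores: int
    tensor_cores: int

# Per-card figures; the Tensor Core count is an assumption from Nvidia's GV100 spec.
QUADRO_GV100 = Gpu("Quadro GV100", hbm2_gb=32, cuda_cores=5120, tensor_cores=640)

def nvlink_pair_totals(card: Gpu) -> dict:
    """Combined resources of two identical cards bridged with NVLink."""
    return {
        "hbm2_gb": 2 * card.hbm2_gb,
        "cuda_cores": 2 * card.cuda_cores,
        "tensor_cores": 2 * card.tensor_cores,
    }

print(nvlink_pair_totals(QUADRO_GV100))
# {'hbm2_gb': 64, 'cuda_cores': 10240, 'tensor_cores': 1280}
```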

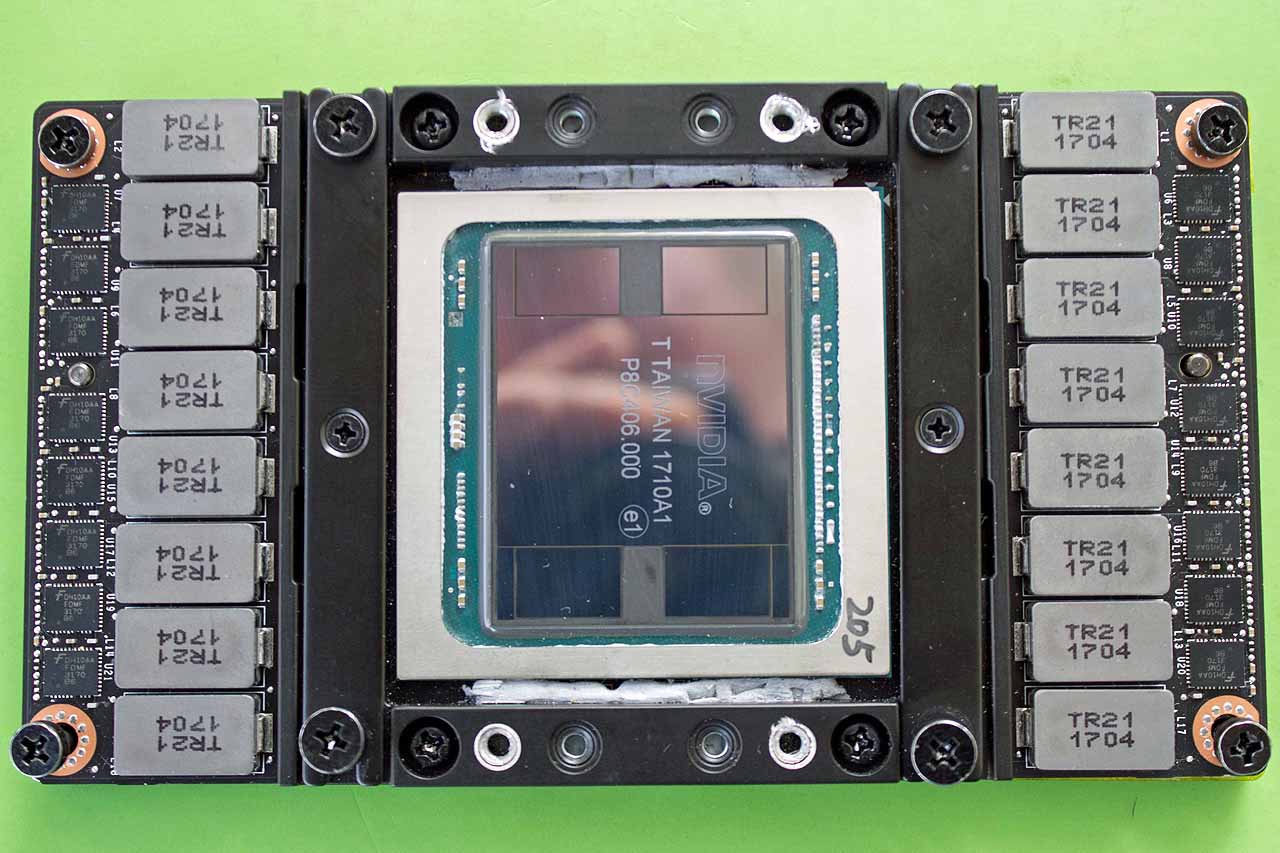

Nvidia Doubles Tesla V100 Memory

The Tesla V100 is fabbed with the largest single die that has ever been produced. The 815mm² Volta die, which wields 21 billion transistors built on TSMC's 12nm FFN process, is almost the size of a full reticle. The GPU packs 5,120 CUDA cores for AI workloads, as we covered last year. It already has plenty of processing power, so Nvidia has instead doubled the card's HBM2 memory from 16GB to 32GB. Nvidia says the larger memory pool can double performance in memory-constrained HPC workloads.


The V100 serves as the linchpin for a constellation of the most cutting-edge systems on the planet, like IBM's Power System AC922 that will help the US regain its supercomputing lead, but it is also a key component in Nvidia's DGX systems. The 32GB Tesla V100 is available now in DGX systems. Several blue-chip OEMs, including Dell EMC, HPE, and IBM, have announced that new systems with the upgraded card will appear in Q2. Nvidia hasn't shared technical details of the new memory subsystem, which likely uses taller 8-Hi stacks of HBM2, but we're digging for more info.
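The 8-Hi guess is easy to check with back-of-the-envelope arithmetic. Assuming the V100 package keeps four HBM2 stacks and uses 8Gb (1GB) DRAM dies, both assumptions rather than anything Nvidia has confirmed for the 32GB card, stack height alone accounts for the doubling: 4-Hi stacks give 16GB and 8-Hi stacks give 32GB. The `hbm2_capacity_gb` helper is hypothetical.

```python
# Hypothetical HBM2 capacity model: stacks x dies per stack x die density.
# Four stacks and 8Gb dies are assumptions; Nvidia has not confirmed
# the 32GB card's memory configuration.
GBIT_PER_GBYTE = 8

def hbm2_capacity_gb(stacks: int, dies_per_stack: int, die_gbit: int = 8) -> float:
    """Total capacity in GB for a package of identical HBM2 stacks."""
    return stacks * dies_per_stack * die_gbit / GBIT_PER_GBYTE

print(hbm2_capacity_gb(stacks=4, dies_per_stack=4))  # 4-Hi stacks, as on the 16GB V100
print(hbm2_capacity_gb(stacks=4, dies_per_stack=8))  # 8-Hi stacks, the likely 32GB layout
```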

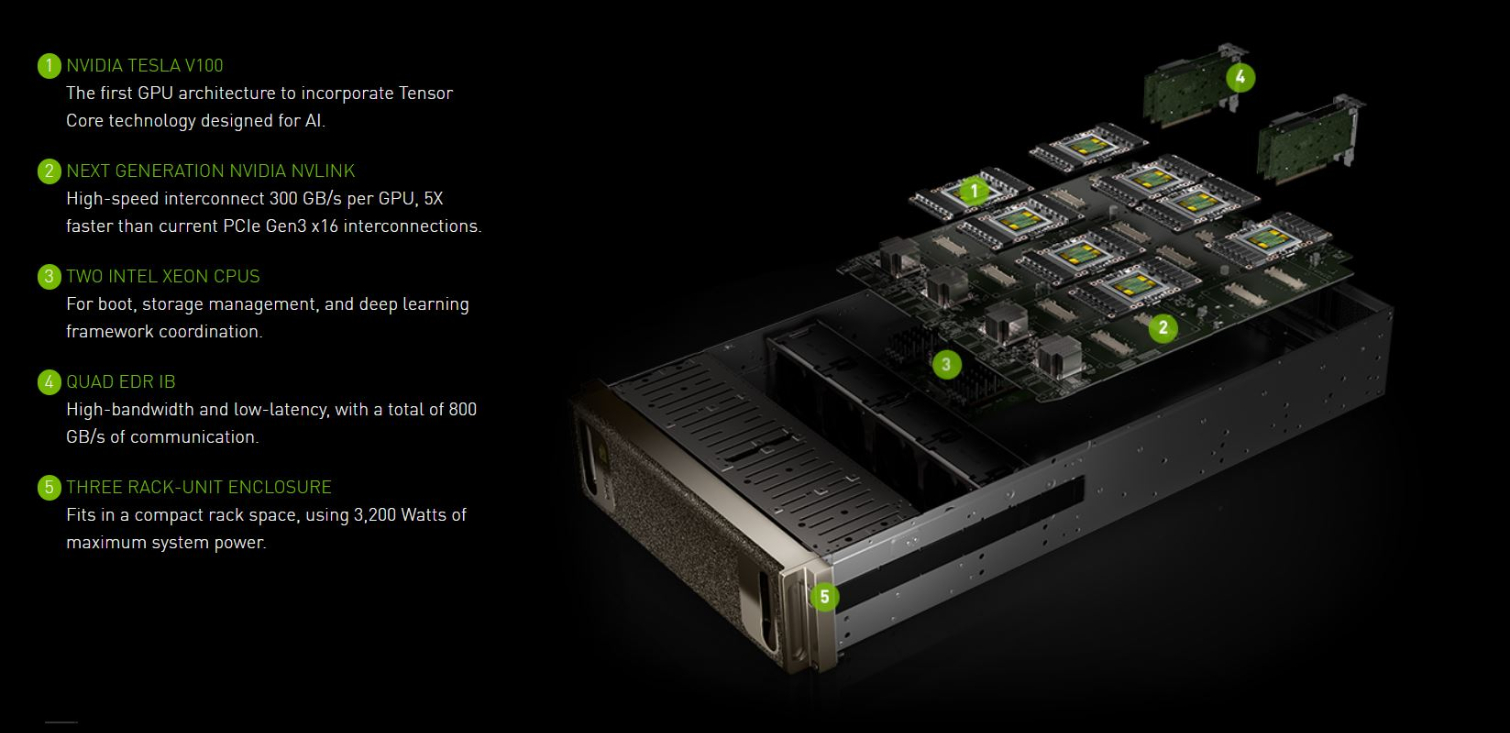

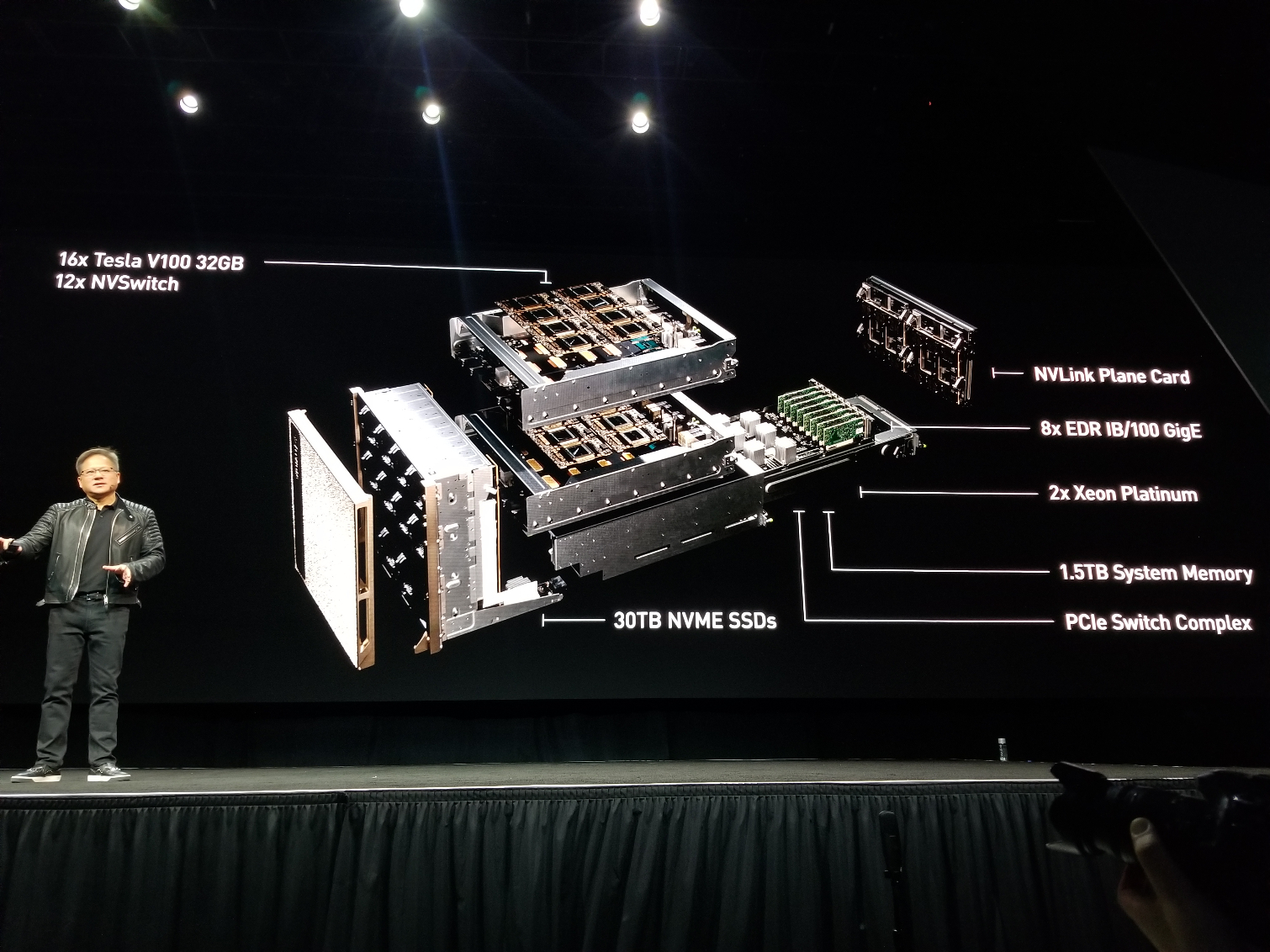

NVSwitch Powers DGX-2 System up to 2 PetaFLOPS

Nvidia's original DGX systems come outfitted with eight V100s crammed into a single chassis that delivers over 960 TFLOPS of deep learning performance from 40,960 CUDA cores.

The DGX-2 system brings that up to 2 PetaFLOPS with sixteen of the new 32GB Tesla V100s. The system features up to 1.5TB of RAM, InfiniBand or 100GbE networking, and two Xeon Platinum processors. The system draws 10kW of power.
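Dividing each system's headline deep learning throughput by its GPU count shows what each V100 contributes. The inputs are the DGX-1 figure of 960 TFLOPS across eight GPUs and the DGX-2 figure of 2 PetaFLOPS across sixteen; these are peak ratings, not measured application performance.

```python
# Per-GPU share of each DGX system's headline deep learning throughput.
def per_gpu_tflops(system_tflops: float, gpus: int) -> float:
    return system_tflops / gpus

systems = {
    "DGX-1": (960, 8),
    "DGX-2": (2000, 16),  # 2 PetaFLOPS = 2,000 TFLOPS
}

for name, (tflops, gpus) in systems.items():
    print(f"{name}: {per_gpu_tflops(tflops, gpus):.0f} TFLOPS per V100")
```

The per-GPU figure barely moves (120 vs. 125 TFLOPS), so the DGX-2's jump comes almost entirely from doubling the GPU count.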

The DGX-2 is also the first system to use Nvidia's NVSwitch technology, a flexible new GPU interconnect fabric. The fabric works as a non-blocking crossbar switch that allows up to 16 GPUs to communicate at speeds up to 2.4 TB/s.

The NVSwitch topology ties 16 GPUs together into a single cohesive unit with a unified memory space, creating what Jensen touted as "The World's Largest GPU." The system features a total of 512GB of HBM2 memory that delivers up to 14.4TB/s of throughput. It has a total of 81,920 CUDA cores.
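The system totals are per-GPU figures multiplied by sixteen. This sketch assumes roughly 900 GB/s of HBM2 bandwidth per V100, a figure from Nvidia's V100 specification rather than this article; the `aggregate` helper is a hypothetical name.

```python
# Aggregate resources of a 16-GPU DGX-2 treated as one logical GPU.
# Per-GPU HBM2 bandwidth (~900 GB/s) is an assumption from Nvidia's V100 spec.
V100_32GB = {"hbm2_gb": 32, "cuda_cores": 5120, "hbm2_gbps": 900}

def aggregate(per_gpu: dict, count: int) -> dict:
    """Multiply every per-GPU resource by the number of GPUs."""
    return {key: value * count for key, value in per_gpu.items()}

dgx2 = aggregate(V100_32GB, 16)
print(dgx2)  # {'hbm2_gb': 512, 'cuda_cores': 81920, 'hbm2_gbps': 14400}
```

The 14,400 GB/s result matches the 14.4TB/s figure, but it is the sum of each GPU's local memory bandwidth. A GPU reading another GPU's memory goes over NVLink and NVSwitch at much lower rates. The same helper with `count=8` reproduces the original DGX's 40,960 CUDA cores.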

The new system is designed to let developers expand the size of their neural networks. Huang revealed that the DGX-2 features 12 NVSwitches, each of which packs 2 billion transistors fabbed on TSMC's 12nm FinFET process. Each switch provides eighteen eight-lane NVLink ports. IBM has announced Power9 systems that will debut in 2019 with NVLink 3.0, so we expect NVSwitch to capitalize on that complementary interconnect.
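The keynote did not spell out how the 12 switches are wired. The sketch below does the port accounting for one topology consistent with the announced counts, and every wiring detail in it is an assumption. It posits two boards of eight GPUs and six NVSwitches each, with every V100 running one NVLink to each switch on its board and each switch running eight more links to its counterpart on the other board. At an assumed 25 GB/s per link per direction (the NVLink 2 rate), the cross-board links would account for the 2.4 TB/s figure as bisection bandwidth.

```python
# Port accounting for an ASSUMED DGX-2 NVSwitch topology (not detailed in the keynote).
BOARDS = 2
GPUS_PER_BOARD = 8
SWITCHES_PER_BOARD = 6
PORTS_PER_SWITCH = 18
LINKS_PER_GPU = 6             # assumed: one NVLink from each V100 to each switch on its board
LINK_GBPS_PER_DIRECTION = 25  # assumed NVLink 2 rate per link, per direction

assert LINKS_PER_GPU == SWITCHES_PER_BOARD, "each GPU needs one link per switch"

switches = BOARDS * SWITCHES_PER_BOARD
gpu_ports_per_switch = GPUS_PER_BOARD    # every GPU on the board lands on every switch once
trunk_ports_per_switch = GPUS_PER_BOARD  # matching links to the peer switch, keeping it non-blocking
spare_ports = PORTS_PER_SWITCH - gpu_ports_per_switch - trunk_ports_per_switch

# Links crossing between the two boards, with traffic counted in both directions.
cross_board_links = SWITCHES_PER_BOARD * trunk_ports_per_switch
bisection_gbps = cross_board_links * LINK_GBPS_PER_DIRECTION * 2

print(f"switches: {switches}, spare ports per switch: {spare_ports}")
print(f"bisection bandwidth: {bisection_gbps / 1000:.1f} TB/s")
```

Matching each switch's eight GPU-facing ports with eight cross-board ports is what makes the crossbar non-blocking under this assumption, leaving two of the eighteen ports unused.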

Huang announced that the DGX-2 system is available now for $399,000.

Jensen also discussed the company's partnership with ARM to integrate Nvidia's open-source Deep Learning Accelerator (NVDLA) architecture into ARM's Project Trillium platform for machine learning. In addition, he detailed the DRIVE Constellation Simulation System, a dual-server computing platform for testing autonomous vehicles.

We're here at the show to scour the floor for the latest and greatest in GPU technology, so stay tuned.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

bit_user: Thanks for the coverage.

I'd love to know the NVLink topology of the DGX-2.

Anyone wishing to learn more about NVDLA should probably head over to nvdla.org