New HAMR lasers could usher in 30TB+ HDDs, Seagate and Sony team up for production: Report

Sony set to produce HAMR lasers for Seagate's HAMR drives, report says.

Update 17/02 05:20 PT: Seagate contacted us to clarify that not all components of its Mozaic 3+ HDDs are made in-house; some are outsourced.

When Seagate formally introduced its first high-volume hard disk drives featuring heat-assisted magnetic recording (HAMR), it implied that all of their components would be produced in-house. However, a new report from Nikkei says that Seagate has teamed up with Sony Group to produce laser diodes for its HAMR write heads. This may signal that Seagate does not want to put all its eggs in one basket and will use laser diodes from Sony as a second source.

These laser diodes will be used in 3.5-inch HDDs capable of holding 30TB or more of data, which points to Seagate's Mozaic 3+ hard drive platform. Sony Semiconductor Solutions (SSS) is set to begin manufacturing the diodes in May. Since the first Mozaic 3+ HAMR drives are set to ship in the first quarter, SSS is likely to be a second source for these diodes rather than the first.

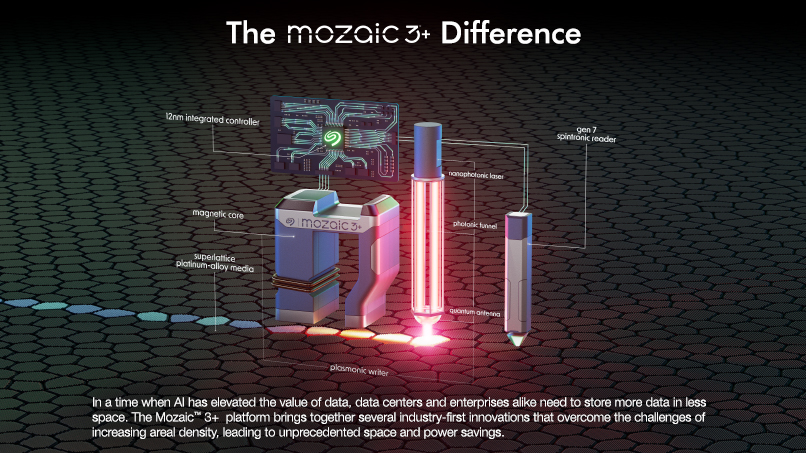

In HAMR HDDs, the nanophotonic laser diode heats tiny portions of the drive media to temperatures of 400°C to 450°C to reduce their magnetic coercivity before the plasmonic writer writes data to that area. Seagate does not disclose the exact characteristics of its write heads; the temperature figures come from older publications.

To set up new production lines for these diodes, Sony plans to invest approximately ¥5 billion ($33 million) in a facility in Miyagi Prefecture, located in the northern part of Japan's main island, and a factory in Thailand.

Seagate's HAMR-based Mozaic 3+ platform could enable hard disk drives with capacities of 30TB and higher. Seagate said back in January that its HAMR-powered Exos hard drives with capacities of 30TB and higher will be available in large quantities later this quarter, once customer evaluations of the new HDDs are complete. These drives will be aimed primarily at hyperscale cloud datacenters and bulk storage. While these HDDs offer the highest capacity points, sales volumes of HAMR-based drives aren't forecast to balloon immediately: Seagate predicts that a million of the drives will ship in the first half of 2024.

Meanwhile, the HAMR-based Mozaic 3+ storage technology will support a variety of products, including enterprise HDDs, NAS drives, and drives for the video and imaging applications (VIA) market. As a result, HAMR-powered IronWolf and SkyHawk HDDs are planned. As volumes of HAMR-based drives rise, Seagate will need additional diodes.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


hotaru251: I am curious what the maximum HDD size is before drives become pointless because they are so large that you can't reliably copy the files within the "safe" time window to rebuild your array after a drive failure.
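To put the rebuild window in numbers: a rebuild has to rewrite the entire replacement drive, so its best-case duration is roughly capacity divided by sustained write throughput. The Python sketch below assumes decimal units and a hypothetical constant rate of 250 MB/s (not a published Mozaic 3+ figure); real rebuilds that compete with production I/O usually take longer.

```python
def rebuild_hours(capacity_tb: float, throughput_mb_s: float) -> float:
    """Best-case hours to rewrite a full replacement drive sequentially.

    Assumes decimal units (1 TB = 1,000,000 MB) and a constant sustained
    throughput; both are simplifications for illustration.
    """
    capacity_mb = capacity_tb * 1_000_000
    seconds = capacity_mb / throughput_mb_s
    return seconds / 3600


if __name__ == "__main__":
    # 250 MB/s is an assumed sustained rate, not a Seagate specification.
    for capacity_tb in (20, 30, 50):
        print(f"{capacity_tb} TB at 250 MB/s: {rebuild_hours(capacity_tb, 250):.1f} h")
```

At the assumed 250 MB/s, a 30TB drive needs a little over 33 hours just to be rewritten once, and for a fixed throughput the time grows linearly with capacity.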

hotaru251:

jpeckinp said: "But don't we already have 30TB HDDs?"

I believe 28TB was the highest HDD capacity before this. We have much larger SSDs (like 60TB, I believe?) but not HDDs.

Vanderlindemedia:

hotaru251 said: "I am curious what the maximum HDD size is before drives become pointless because they are so large that you can't reliably copy the files within the "safe" time window to rebuild your array after a drive failure."

It would take a dual actuator at some point to cope with the ever-increasing demands for speed and data intensity.

At some point, NVMe drives will make HDDs obsolete. The only selling point an HDD has is capacity.

Grobe:

Vanderlindemedia said: "It would take a dual actuator at some point to cope with the ever-increasing demands for speed and data intensity."

That is a valid design challenge for the manufacturers. However, I'm more interested in how to mitigate SMR's poor random-write performance. The only solution I'm aware of today is a specific way to format an ext4 file system.

On that note, are the new HAMR-based disk drives CMR-based? I couldn't tell from the article.

Vanderlindemedia: Or use an SSD/HDD hybrid design, where the SSD portion acts as an intermediate cache level.

leclod:

hotaru251 said: "I am curious what the maximum HDD size is before drives become pointless because they are so large that you can't reliably copy the files within the "safe" time window to rebuild your array after a drive failure."

I understand your question. But rationally, how can this be an issue when HDDs are made to run for years? What's one or two days against five-plus years? How is two days much worse than one day (or, say, six days against three)? Anyway, those data centers can do the math.

leclod:

Grobe said: "That is a valid design challenge for the manufacturers. However, I'm more interested in how to mitigate SMR's poor random-write performance. The only solution I'm aware of today is a specific way to format an ext4 file system. On that note, are the new HAMR-based disk drives CMR-based? I couldn't tell from the article."

I'm pretty sure CMR will stay the standard, while SMR can be used for niche products. There are even hybrid drives where you can choose.

Almost nobody wants a bigger drive with much slower transfer rates.

minnion:

hotaru251 said: "I believe 28TB was the highest HDD capacity before this. We have much larger SSDs (like 60TB, I believe?) but not HDDs."

There are 100TB SSDs, but they cost around $40k per drive.

hotaru251:

leclod said: "How is two days much worse than one day (or, say, six days against three)? Anyway, those data centers can do the math."

Because the longer it takes, the greater the chance of data loss. If you take days to rebuild an array and lose more drives in the meantime, that is a big issue.
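leclod's question (how much worse is a longer rebuild?) and hotaru251's answer can be made concrete with a simple reliability model. The sketch below assumes independent drive failures with a constant hazard rate derived from an annualized failure rate (AFR); the 11 surviving drives and the 1.5% AFR are hypothetical values. Real failures are often correlated (same batch, same workload), so this model is likely optimistic.

```python
import math

HOURS_PER_YEAR = 8760


def p_another_failure(remaining_drives: int, rebuild_hours: float, afr: float) -> float:
    """Probability that at least one surviving drive fails during the rebuild.

    Simplified model (an assumption): failures are independent and follow a
    constant (exponential) hazard rate derived from the annualized failure rate.
    """
    # Convert the annual failure probability into a per-hour hazard rate.
    hazard_per_hour = -math.log(1 - afr) / HOURS_PER_YEAR
    # P(no failure among n drives over t hours) = exp(-n * hazard * t).
    return 1 - math.exp(-remaining_drives * hazard_per_hour * rebuild_hours)


if __name__ == "__main__":
    # Hypothetical array: 11 surviving drives, 1.5% AFR.
    for hours in (24, 48, 144):
        print(f"{hours:>3} h rebuild: {p_another_failure(11, hours, 0.015):.3%}")
```

Because the exponent is small, the probability grows almost linearly with rebuild time: in this model, a two-day rebuild carries roughly twice the risk of a one-day rebuild. How much that matters depends on how many additional failures the array's redundancy can absorb.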