In Pictures: 16 Of The PC Industry's Most Epic Failures

We've endured a great many annoyances in the 16 years since Tom's Hardware first appeared online. What follows is a list of 16 of them. Although it's by no means all-inclusive, it represents one seasoned reviewer's worst experiences in technology.

The Original Logitech G15 Gaming Keyboard (2005)

Don't get me wrong, I'm a fan of Logitech's newest G15 gaming keyboard.

It's the original version that left something to be desired, as the black paint on the backlit keys quickly wore off after only moderate use. The only reason you can read the keys with white letters in this picture is that they're covered by stickers I bought on eBay, which block out the illuminated effect that sold me on the product in the first place. If Logitech sold replacement keys, I would have bought them, but it's the company's policy not to. While it's true that Logitech sent out replacements to anyone who complained early on, I bought later, missed the RMA window, and was left with a shoddy-looking jumble of illegible keys.

ATI Radeon HD 2900 XT (2007)

Unfortunately, Nvidia's GeForce FX 5800 wasn't the only graphics card that fell flat on its face. In 2007, ATI struggled with its Radeon HD 2900 XT. (Ed.: I'd even argue that there were more epic failures than these two choices. Remember S3's Savage 2000 with its broken T&L engine?)

ATI's flagship DirectX 10 card was slower than Nvidia's GeForce 8800 GTX, and it even lost to the mid-range GeForce 8800 GT. To make matters worse, the Radeon was power-hungry and loud.

ATI managed to refine its VLIW architecture into better products, which emerged as the Radeon HD 3800 family. But the Radeon HD 2900 XT is still remembered as a letdown by graphics card enthusiasts.

Ageia PhysX Card (2006)

When the Ageia PhysX hardware accelerator card launched in 2006, it sold for $300. For that painfully high price, buyers got a handful of fairly weak physics effects in an even smaller lineup of games. Fair enough, right? You bought the card thinking it'd be even more useful in the future.

Nvidia bought Ageia in 2008. By 2009, the newest PhysX drivers disabled the technology if a Radeon graphics card was detected. In other words, your $300 Ageia PhysX card was useless unless it ran alongside a GeForce board. To add insult to injury, Nvidia's own GPUs were already accelerating the PhysX middleware by that point. All of this went down right around the time the first interesting PhysX-enabled titles were emerging, too (Batman: Arkham Asylum and Mirror's Edge).

In the end, everyone who paid for the original physics processing unit was hung out to dry. Nvidia dropped driver support for boards based on Ageia's PPU entirely in 2010.

AMD Phenom CPU (2007)

The first Phenom CPU promised to be the world's first quad-core chip on a single die, but it performed poorly compared to Intel's Core 2 at launch. Its introduction was also marred by a translation lookaside buffer (TLB) bug, discovered right after release, that could cause a crash under certain conditions. Most motherboard vendors offered a BIOS workaround to circumvent the issue, but it imposed up to a 10% performance hit.

AMD fixed the bug in hardware with its B3 stepping, but the design was never able to challenge Intel's Core 2-based chips. AMD didn't have a competitive product until it released its Phenom II family in early 2009. The original Phenoms were quickly phased out.

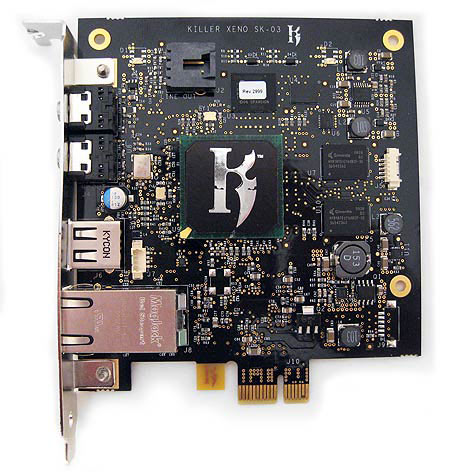

Killer Gaming Network Cards (2009)

Killer's line of gamer-oriented network cards boasts impressive hardware specifications, such as a Freescale-based network processing unit and on-board RAM, along with a software suite that allows extensive customization of network settings. As far as our testing has shown, though, none of that improves actual game performance.

At best, the company's network management software can be commended for prioritizing gaming traffic over that of other processes, which may improve latency if you're competing online while feeding a peer-to-peer network. But wouldn't it make more sense to just pause those downloads before you fire up your favorite shooter? That sure would have beaten paying almost $300 for Killer's technology when it first emerged.

AMD's FX CPU (2011)

That's the Zambezi-based FX, not the Sledgehammer-based one, which we actually rocked back in the day.

We waited a long time for AMD's next-generation CPU architecture, and, on paper, it looked great. Unfortunately, in its initial incarnation, the expected performance isn't there, power consumption is super-high, and pricing isn't even all that compelling.

AMD managed to improve some aspects of the new CPU over the Phenom II generation. The buzz from Microsoft is that Windows 8 will handle the Bulldozer module concept more elegantly, and we're still getting promises from AMD that its follow-on to Bulldozer, code-named Piledriver, will introduce a number of fixes. For now, though, we can't help but think this isn't the way AMD's architects envisioned this situation going down.

Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

runswindows95 I find it funny that two technologies (RDRAM and the first P4) that launched together are on this list. Overall, I agree with everything on the list.

face-plants I still have Rambus memory and those darn blank 'continuity modules' lying around that I never should have bought for inventory. I hated the first-gen Pentium 4s and wish I had skipped them completely with my system builds at the time.

Also, amen to the comments about Intel's boxed heatsink/fan (HSF). Not only were the two clear plastic halves of the pins extremely easy to split apart, but some mainboards required me to push way too hard before hearing the "click" of the black clip finally seating properly. I still have pictures of a few Intel boxed boards that were so badly warped by the force of the HSF retaining clips that they suffered internal damage (opened traces or something) and were never able to POST properly. I believe that mess ended with Intel cross-shipping at least a dozen new mainboards and my store eating the cost of some aftermarket Zalman coolers to get the builds out on time. From then on, I only used the boxed coolers for replacement parts and simple bench-testing until the design improved a little years later. Their solution is still far worse than AMD's, IMO, though.

ta152h Some of these weren't great, but probably don't deserve to be on this list.

RDRAM failed, not because of RDRAM, but because of the Pentium III. Which brings us to the Willamette, too. The Willamette reached 2 GHz on the same process technology on which the Pentium III/Coppermine reached 1.1 GHz, and it EASILY outperformed the Pentium III at virtually everything at that clock speed. Even when introduced at 1.5 GHz (compared to the 1 GHz Pentium III), it beat the older chip in virtually all benchmarks. Why? A good part of it was the performance of RDRAM, which was finally attached to a processor that could use the bandwidth. The bad part was that x87 was greatly weakened so developers would use SSE2, which was very powerful and considerably better. I guess that's a plus and a minus.

Ironically, RDRAM died just when it was finally better than the competition. The price had finally come down to that of other memory, and its performance with the Pentium 4 was better than DDR's. But when it was released for the Pentium III, which couldn't use the bandwidth (although even then, the i840 had very good performance by using interleaving to reduce latency), performance was bad because of the processor. The cost was excessive too, although that was wrongly attributed to royalty payments.

While the Willamette wasn't a great processor, the really bad one in the Pentium 4 line was Prescott. I think most people would pick that one as the worst.

Others might be all the Super 7 chipsets. The 386 kind of sucked (it added a lot, but the performance wasn't that great). The K5 was a big headache: it was very late, lacked MMX (which was a selling point by the time it came out, due to its being late), and was stuck at 116.7 MHz. The AMD 486DX2 80 MHz had a nasty tendency for its internal cache to go bad. The i820 really sucked: it was late, used the wrong memory (RDRAM) for the processor, and then had bugs with the MTH. Worse than that, for only a little more, the i840 had much better performance and more features. Cyrix should have been known as Cryix, because you would if you bought one. The headaches those things caused ...

I wouldn't call the Zip drive a failure, epic or otherwise. At one point, several major PC vendors shipped systems with internal Zip drives, and there was quite a furor to own them. They were perceived as cheap, decent-sized storage that was more cost-effective than hard drives. The problem, of course, was that the Zip format was owned by Iomega rather than being vendor-neutral.

e56imfg I already know Bulldozer and Vista are going to be here without even going through the articles. Now to go to the next page...