Nvidia's DLSS Technology Analyzed: It All Starts With Upscaling

DLSS Performance, Tested

As we've already seen, DLSS is much faster than TAA at any given resolution. After also getting Nvidia's DLSS demos to run without anti-aliasing, we collected performance results in every possible configuration. At least for now, DLSS is limited to 2560 x 1440 and 3840 x 2160, and the demos are quite inflexible (especially Final Fantasy XV, which is locked at 4K).

Most surprising is that 4K with DLSS enabled runs faster than 4K without any anti-aliasing. Because this speed-up happens before any post-processing is applied, it can only come from a simplified render or a lower rendering resolution. Nvidia itself already states that "...Turing GPUs use half the samples for rendering and use AI to fill in [the missing] information."
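To put Nvidia's "half the samples" statement in perspective, here is a minimal Python sketch of the per-frame arithmetic. It assumes, as a simplification we did not measure, that shading cost scales roughly linearly with the number of samples shaded per frame; the helper names are hypothetical and only illustrate the math.

```python
# Back-of-envelope sample counts for the two resolutions DLSS supports here.
# Assumption (not measured in our testing): shading cost scales roughly
# linearly with the number of samples shaded per frame.

RESOLUTIONS = {
    "2560 x 1440": (2560, 1440),
    "3840 x 2160": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Pixels in a frame rendered natively with one sample each (no AA)."""
    return width * height


def half_sample_budget(width: int, height: int) -> int:
    """Samples shaded if, per Nvidia's statement, only half are rendered."""
    return pixel_count(width, height) // 2


if __name__ == "__main__":
    for name, (w, h) in RESOLUTIONS.items():
        full = pixel_count(w, h)
        half = half_sample_budget(w, h)
        print(f"{name}: {full:,} pixels natively, {half:,} samples at half")

    # Compare a 4K frame shaded at half the samples with native 2560 x 1440.
    ratio = half_sample_budget(3840, 2160) / pixel_count(2560, 1440)
    print(f"4K half-sample budget vs. native 1440p: {ratio:.3f}x")
```

Under that linear-cost assumption, a 4K frame shaded with half the samples (4,147,200) is only 12.5% more work than a native 2560 x 1440 frame (3,686,400 pixels), and half the work of native 4K without anti-aliasing (8,294,400 pixels).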

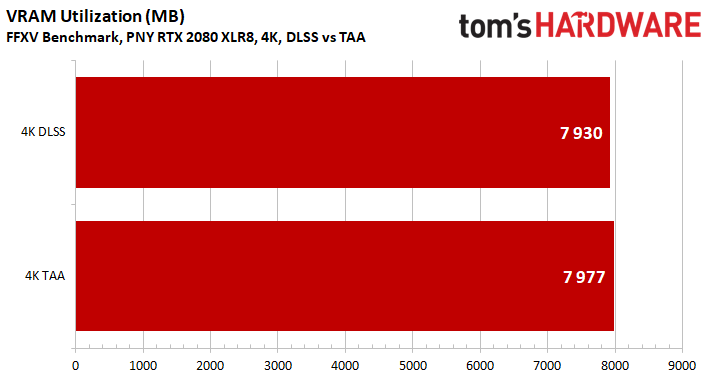

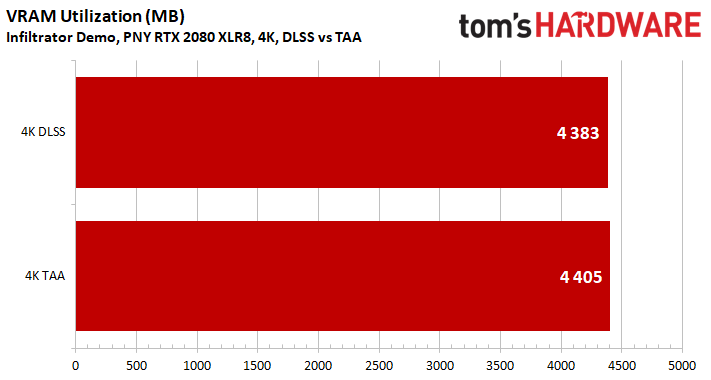

Notice that there is very little difference in GDDR6 usage between the runs with and without DLSS at 4K. We certainly weren't expecting that. Processor and main memory utilization don't change much either.

hixbot: OMG, this might be a decent article, but I can't tell, because the autoplay video hovering over the text makes it impossible to read.

richardvday: I keep hearing about the autoplay videos, yet I never see them?

I come here on my phone and my PC and never have this problem. I use Chrome; what browser does that?

bit_user:

21435394 said: "Most surprising is that 4K with DLSS enabled runs faster than 4K without any anti-aliasing."

Thank you! I was waiting for someone to try this. It seems I was vindicated when I previously claimed that it's upsampling.

Now, if I could just remember where I read that...

bit_user:

21435394 said: "Notice that there is very little difference in GDDR6 usage between the runs with and without DLSS at 4K."

You only compared vs. TAA. Please compare against no AA, both at 2.5K and 4K.

bit_user:

21435394 said: "In the Reflections demo, we have to wonder if DLAA is invoking the Turing architecture's Tensor cores to substitute in a higher-quality ground truth image prior to upscaling?"

I understand what you're saying, but it's incorrect to refer to the output of an inference pipeline as "ground truth". A ground truth is only present during training or evaluation.

Anyway, thanks. Good article!

redgarl: So, 4K with no AA is better... like I noticed a long time ago. There's no need for AA at 4K; you are killing performance for no gain. At 2160p you don't see jaggies.

coolitic: So... just "smart" upscaling. I'd still rather use no AA, or MSAA/SSAA if applicable.

bit_user:

21436668 said: "So, 4k no AA is better... like I noticed a long time ago."

That's not what I see. Click on the images and look @ full resolution. Jagged lines and texture noise are readily visible.

21436668 said: "No need for AA at 4k, you are killing performances for no gain."

If you read the article, DLSS @ 4K is actually faster than no AA @ 4K.

21436668 said: "At 2160p you don't see jaggies."

That depends on monitor size, response time, and framerate. Monitors with slower response times have some motion blur that helps obscure artifacts. And on any monitor, running at 144 Hz would blur away more of the artifacts than running at 45 or 60 Hz.

s1mon7: As someone who uses a 4K monitor daily, I find aliasing much less of an issue than low-res textures in 4K content. With that in mind, the DLSS samples immediately gave me the uncomfortable feeling of low-res rendering. Sure, it is obvious in the license plate screenshot, but it is also apparent on the character in the first screenshot and on the foliage. They lack detail and have that "this was not rendered in 4K" blurriness that daily users of 4K screens quickly learn to avoid, because it removes the biggest benefit of 4K screens: the crispness and lifelike appearance of characters and objects. The perceived resolution of things on screen is the most important factor there, and DLSS takes that away.

The way I see it, DLSS does the opposite of what truly matters in 4K once you actually get used to it and its pains, and I would not find it usable outside of really fast-paced games where you don't take the time to appreciate the vistas. Those games usually aren't as demanding in 4K anyway, nor do they require 4K in the first place.

This technology is much more useful at lower resolutions, where aliasing is the far larger problem and natively rendered textures don't deliver the same "wow" effect you expect from 4K anyway, so turning them down a notch is far less noticeable.