How Tom's Hardware Tests, Rates and Reviews Tech Products

Our expert advice is based on extensive testing.


To choose the best technology or keep up with the market, you need expert advice based on solid evidence and copious experience. Sure, you could just read spec sheets and assume that, when a company says its laptop provides "all day" battery life or its GPU delivers great 4K gaming, it must be true. Or you could comb through hundreds of user reviews to see what previous buyers have to say. But at Tom’s Hardware, we believe that rigorous testing is the key to understanding the latest gear.

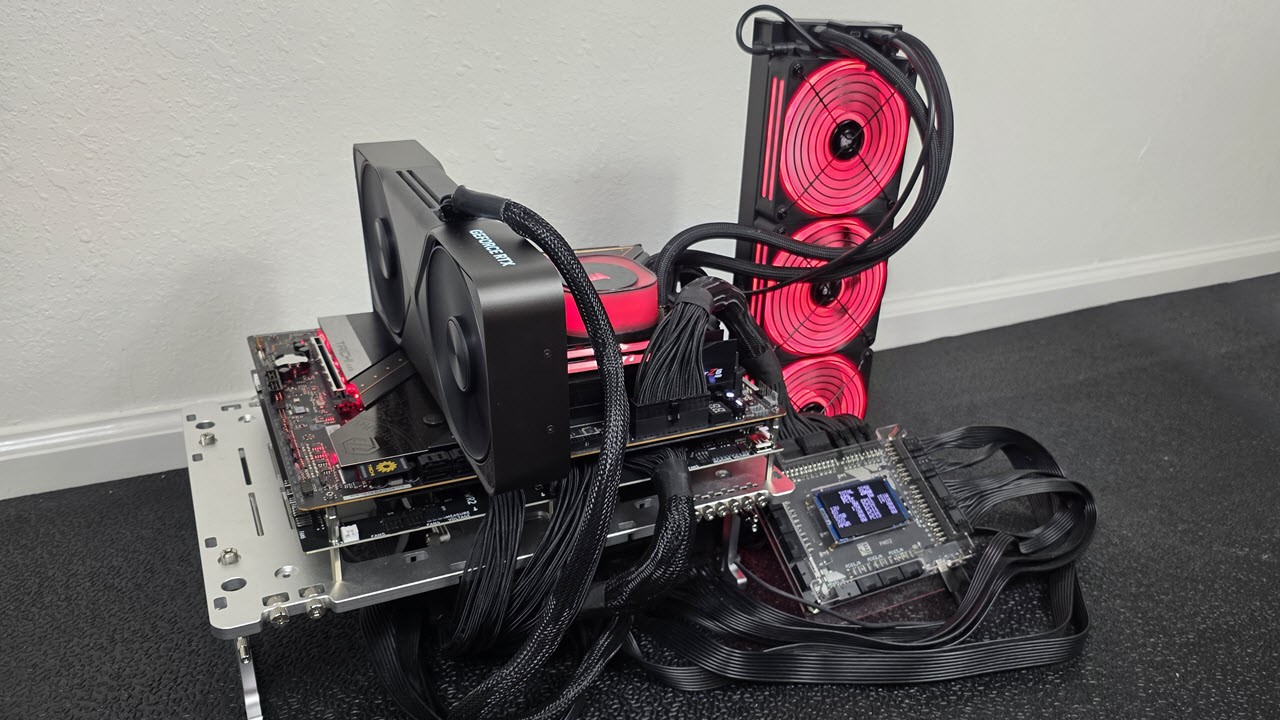

As a leading PC hardware and enthusiast technology site founded in the mid-1990s, Tom's Hardware has been evaluating the latest and greatest CPUs, graphics cards, motherboards, and much more for 30 years. Whether it's a desktop graphics card, CPU, SSD, hard drive, 3D printer, or power supply, once we get it in our hands, we benchmark the hell out of it using software, sensors, and anecdotal testing. The Tom’s Hardware staff and freelancers have more than a century of combined experience pushing technology to its limits, and sometimes even breaking it.

Tom’s Hardware Promise: Telling it Like it Is

Our recommendations can’t be bought. In an ideal world, every user would have the time, equipment, expertise, and access to products they needed to do their own testing and draw their own conclusions about a whole category of hardware. But in the real world, we have the rare privilege of doing this professionally and standing in for our readers. With that privilege comes a sacred responsibility: to give you the unvarnished truth about what we experienced when using and benchmarking a product.

Tom’s Hardware Test Methods

Transparency is key. If we conduct a test, you should be able to replicate that experience. As such, we describe how we tested each product, including both synthetic benchmarks and anecdotal use. Some product categories, such as CPUs, GPUs, and SSDs, lend themselves to highly scientific test methods, while others, like gaming chairs, can only be evaluated based on the reviewer’s experiences.

We test too many types of tech to list them all here, and that list often changes as we delve into new and emerging areas of consumer and enthusiast hardware, or when once-hot product categories wither (remember dedicated sound cards or netbooks?) or become stagnant.

But to give you a sense of what we test and how we test it, below we list some of our key categories and explain the basics of how we test those products. Where we have a dedicated how-we-test page for a product category, we link to it for much more detail.

- CPUs: We use a demanding suite of 16 real-world gaming benchmarks in two resolutions, six synthetic gaming benchmarks, 116 desktop PC productivity benchmarks, and 51 professional workstation benchmarks, along with thermal, power, and efficiency tests. We also measure overclocking performance on unlocked chips. To ensure a level playing field and avoid bottlenecks from other components, we use carefully maintained test images paired with the best GPUs, motherboards, RAM, SSDs, cooling systems, and power supplies.

- Graphics Cards: We test with a collection of recent games. For each game, we run once to "warm up" the GPU and then perform at least two passes at each setting and resolution combination. We check for any anomalies and retest as needed to ensure we get the most accurate data possible. We also periodically retest cards with the latest updated drivers and occasionally add new games to the test suite when suitable candidates are released.

- Laptops: We run laptops through a varied gauntlet of benchmarks, including synthetic and real-world tests, to see how systems perform. We use a colorimeter and light meter to see just how vibrant and bright displays are, and also run our in-house battery test to measure longevity on a charge. Our reviewers also use each laptop to test the keyboard, speakers, and webcam, measure heat under load, and open the laptop to see what components can be upgraded or repaired. We also consider the pre-loaded software and whether or not it's worthwhile to use (or keep at all). All of these tests are combined with real-world impressions of the system’s usability, design, and input devices.

- Gaming Laptops: Gaming laptops largely go through the same testing as productivity laptops, with the addition of several synthetic and real-world gaming benchmarks. We also play games ourselves to consider the keyboard, speakers, display, heat, and fan noise in the context of gaming.

- Gaming Desktop PCs: Prebuilt desktops undergo testing similar to that of laptops, except that there is no screen or battery to test, and we don't need to worry about skin temperatures. In addition to our benchmarks, we focus on airflow, upgradeability, and cable management to judge how well these systems can be used and customized long into the future.

- Gaming Monitors: As we explain in detail in our article on how we test gaming monitors, we use a variety of high-end equipment, including a spectrophotometer and a colorimeter. We also use a DVDO generator to create accurate test patterns. We test brightness and contrast, grayscale tracking to ensure white is neutral at all brightness levels, color gamut accuracy and volume for sRGB and DCI-P3 gamuts, viewing angles, and response time. We also show results both before and after calibration.

- SSDs: We employ a balanced set of tests that includes real-world file transfer, gaming workloads, synthetic benchmarks, and power and thermal testing. Each SSD is prefilled to 50% capacity to simulate long-term use (an empty drive runs faster) and tested as a secondary device to avoid interference from the operating system and other programs running in the background.

- 3D Printers: After unboxing each 3D printer, we run several test prints, such as the 3D Benchy, a well-known calibration print. We slice prints with the provided software to test the new user experience, then see if the printer is supported by well-known third-party slicers like Cura, PrusaSlicer, and OrcaSlicer. We test the printer’s Wi-Fi capabilities and, if there is an app, see how easy it is to use for sending files and monitoring prints. The printer is also tested using typical models popular with our readers, from practical prints to toys. Multiple filaments are run, including PLA, PETG, and TPU. Enclosed printers are also tested with high-temperature materials such as ASA and nylon.

- Keyboards and Mice: The best way to test input devices is to use them in a variety of common situations, preferably for several days. We install any relevant software and tweak the options. If a device is meant for gaming, we use it to play games. If it's meant for productivity, we use it for work.
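The graphics card procedure described above (one warm-up run, at least two measured passes, and a retest when results look off) can be sketched in Python. This is a minimal illustration under stated assumptions, not the harness used for the reviews: the `run_pass` callback, the 5% `tolerance` used to flag an anomaly, and the `max_passes` cap are all hypothetical choices made for the example.

```python
from statistics import mean
from typing import Callable, List


def relative_spread(results: List[float]) -> float:
    """Gap between the best and worst pass, relative to the average."""
    avg = mean(results)
    if avg <= 0:
        raise ValueError("frame rates must be positive")
    return (max(results) - min(results)) / avg


def benchmark_setting(
    run_pass: Callable[[], float],  # hypothetical: runs one pass, returns average FPS
    min_passes: int = 2,            # "at least two passes" per setting and resolution
    tolerance: float = 0.05,        # assumed anomaly threshold (5% spread)
    max_passes: int = 6,            # assumed cap so retesting always terminates
) -> float:
    """Return the average FPS for one game/setting/resolution combination."""
    run_pass()  # warm-up run; its result is discarded

    results = [run_pass() for _ in range(min_passes)]
    passes = min_passes

    # If the passes disagree too much, drop the oldest result and retest.
    while relative_spread(results) > tolerance and passes < max_passes:
        results.pop(0)
        results.append(run_pass())
        passes += 1

    return mean(results)
```

Dropping the oldest pass on a retest is only one possible policy; a stricter harness might discard the whole set or flag the run for manual review instead.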

What Our Ratings Mean

All reviewed products are rated on a five-point scale in half-point increments, with 5 being the best. Each product may also receive an Editor's Choice badge, which designates it as the best within its niche. The ratings mean the following:


5 = Practically perfect

4.5 = Superior

4 = Totally worth it

3.5 = Very good

3 = Worth considering

2.5 = Meh

2 = Not worth the money

1.5 = Buy for an enemy

1 = Fails horribly

0.5 = Laughably bad
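For readers who want to work with review scores programmatically, the scale above can be written as a simple lookup table. This is only an illustrative sketch: the names `RATING_LABELS` and `rating_label` are hypothetical, and the labels are copied from the list above.

```python
# Labels copied from the rating list; keys are scores in half-point steps.
RATING_LABELS = {
    5.0: "Practically perfect",
    4.5: "Superior",
    4.0: "Totally worth it",
    3.5: "Very good",
    3.0: "Worth considering",
    2.5: "Meh",
    2.0: "Not worth the money",
    1.5: "Buy for an enemy",
    1.0: "Fails horribly",
    0.5: "Laughably bad",
}


def rating_label(score: float) -> str:
    """Return the label for a score, rejecting values that are not on the scale."""
    if score not in RATING_LABELS:
        raise ValueError(f"{score} is not a valid rating (use 0.5 to 5 in 0.5 steps)")
    return RATING_LABELS[score]
```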

How We Choose and Obtain Products for Review

There's a nearly infinite universe of tech products, ranging from the thousands of generic cables on Amazon to the two dozen PC processors made by Intel and AMD. While we'd love to thoroughly benchmark and rate them all, we have to be selective. Our editors determine which products will be of the greatest interest to our audience and request that companies provide us with samples for testing purposes. In some cases, when a company is unwilling or unable to provide us with an important product, we will use our budget to purchase it from a retailer such as Amazon or Best Buy.

While companies often pitch us products to review, deciding which to review or cover is an exclusively editorial decision, based on what we believe our readers need to know. We do not accept payment of any kind for reviews, nor do manufacturers receive preferential treatment for being advertisers. We also do not show our reviews to manufacturers before publication.

Tom's Hardware is the leading destination for hardcore computer enthusiasts. We cover everything from processors to 3D printers, single-board computers, SSDs and high-end gaming rigs, empowering readers to make the most of the tech they love, keep up on the latest developments and buy the right gear. Our staff has more than 100 years of combined experience covering news, solving tech problems and reviewing components and systems.

bit_user @apiltch thanks for the article.

I followed a link from one of your product reviews, hoping to find some detail on how CPU & GPU power consumption is measured. It would be nice if you could update this article with additional such details.

One reason I'm interested is that I'm wondering if there's a way I can take isolated CPU (or, at least motherboard) measurements, in my own system, to evaluate the efficiency of my cooling solution.

The other reason is just to know how to interpret the power measurements in your reviews, especially when it comes to comparing your results to those published elsewhere.

rabbit4me1 Sorry guys, I really don't believe that your tests are completely unbiased. You're always going to support your sponsors. You might do a little negativity on them, but you always tend to be pushing Dell products, and most of them are junk.

bit_user

rabbit4me1 said: you always tend to be pushing Dell products

I guess you're talking about laptops or monitors, because I think that's the only Dell-branded stuff they review. Here's a recent Dell laptop they rated at only 4 stars:

https://www.tomshardware.com/reviews/dell-xps-17-9730

Meanwhile, here's a Framework laptop they reviewed about the same time, granting it 4.5 stars and an Editors Choice award:

https://www.tomshardware.com/reviews/framework-laptop-13-intel-2023

It's easy to cry foul, but you should be prepared to substantiate your allegations.

Regarding sponsors, I assume their advertising is handled through a 3rd party intermediary. Do you know differently?

apiltch We rate products based on how good we think they are and whether we think they are a good choice for readers who are in the market for that type of product at that budget. A lot of companies buy ads on our site, but the editorial team are not in sales. What matters to us is giving you good advice. I know that sounds trite, but it's the truth.

Nobody here got into tech journalism to get rich. We're lucky to make a living doing it and we never forget how lucky we are to get to test out tech and share our observations with you. Our obligation, always, is to tell the truth.