System Builder Marathon Q1 2016: $1275 Professional Workstation

This month I break the SBM mold by using a true workstation processor and graphics.

Overclocking, Testing & Analysis

Overclocking

Because many professional users prefer stability over a small boost in speed, overclocking options on professional hardware are usually very limited, if they exist at all. The BIOS on my C236 WSI motherboard is extremely lacking in features, so I won’t be able to overclock my CPU or RAM for this build, which leaves me with just the graphics card.

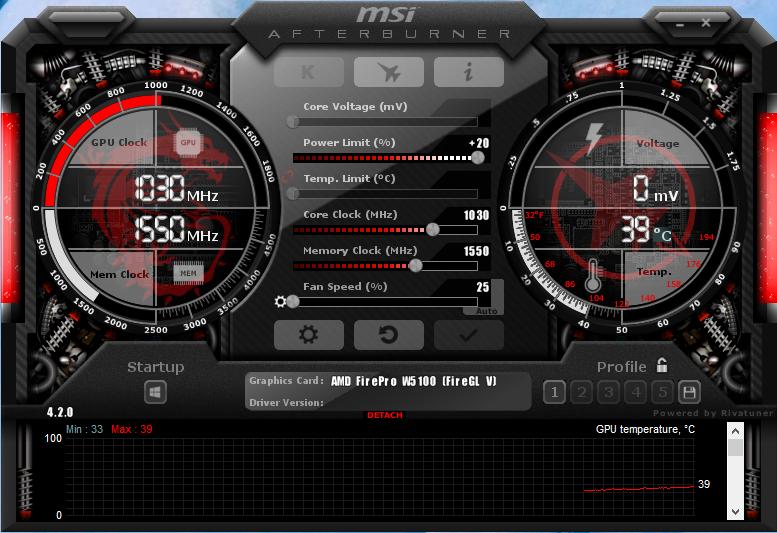

After messing around with the settings in MSI Afterburner, I was able to gain access to some of the W5100’s settings. Still, since I wasn’t allowed to raise the temperature limit, I was only able to get a +100MHz core overclock and a +50MHz memory overclock before the card hit its 70°C temperature ceiling and throttled back.
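To put those offsets in perspective, here is a minimal Python sketch that expresses them as a percentage of the stock clocks. The stock figures (930MHz core, 1500MHz memory) are taken from the Component Settings table; the function name `overclock_gain` is only an illustrative helper, not part of any tool used in this build.

```python
def overclock_gain(stock_mhz: float, overclocked_mhz: float) -> float:
    """Return the clock increase as a percentage of the stock clock."""
    return (overclocked_mhz - stock_mhz) / stock_mhz * 100.0


# Clocks from the Component Settings table (W5100 stock vs. overclocked).
core_gain = overclock_gain(930, 1030)
memory_gain = overclock_gain(1500, 1550)

print(f"Core:   +{core_gain:.1f}%")    # about 10.8%
print(f"Memory: +{memory_gain:.1f}%")  # about 3.3%
```

A clock increase of this size is an upper bound on any benchmark gain, since the card may still throttle at its temperature ceiling.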

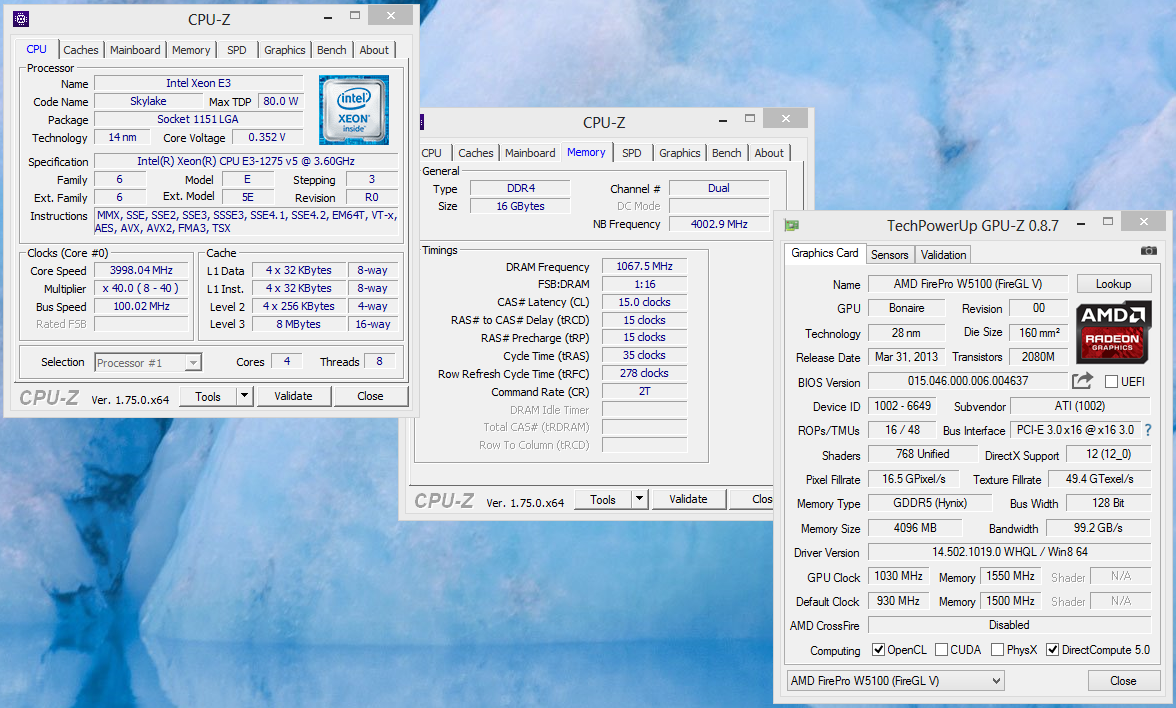

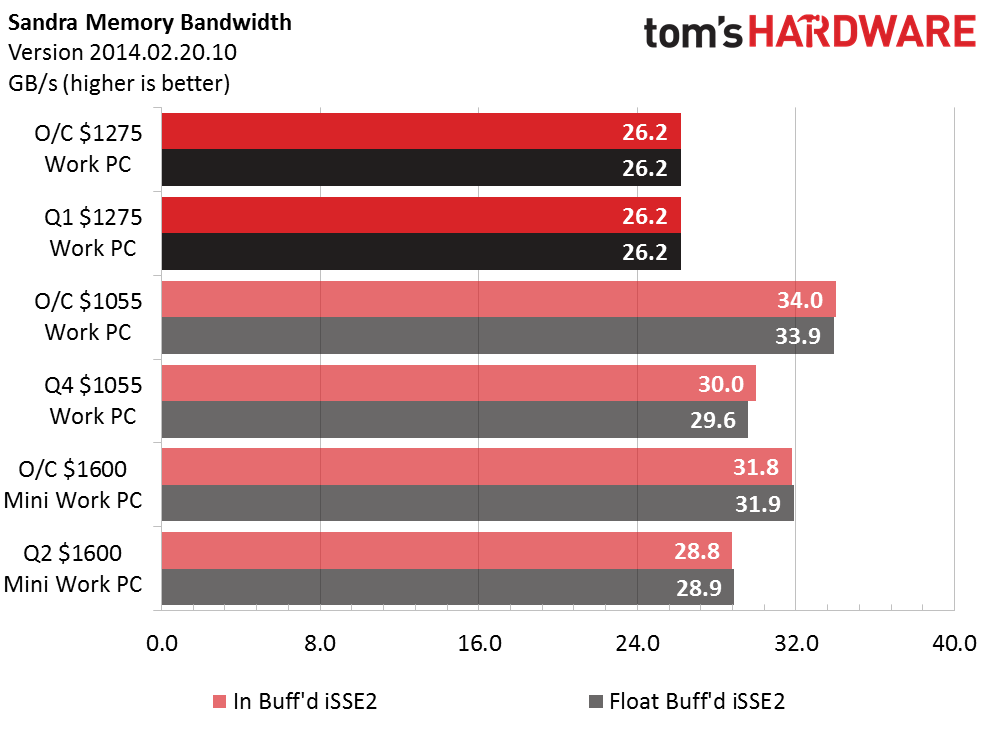

A quick look at CPU-Z confirms that my RAM was indeed forced to run at the motherboard’s slower speed of 2133MHz, although the timings did tighten from the stock 16-16-16-39 to 15-15-15-35. [Without XMP, it reverts to the JEDEC standard. -Ed.]
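Whether the tighter timings make up for the lower data rate can be estimated with the usual CAS latency conversion from clock cycles to nanoseconds. The sketch below assumes the nominal data rates (2400 and 2133 MT/s) and looks only at CAS latency, so it is a rough comparison rather than a measurement; `cas_latency_ns` is a hypothetical helper name.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """Convert CAS latency from clock cycles to nanoseconds.

    DDR memory transfers data twice per clock, so the memory clock in MHz
    is half the data rate in MT/s.
    """
    clock_mhz = data_rate_mts / 2.0
    return cas_cycles / clock_mhz * 1000.0


rated = cas_latency_ns(2400, 16)    # kit's rated DDR4-2400 CL16
running = cas_latency_ns(2133, 15)  # motherboard-limited DDR4-2133 CL15

print(f"DDR4-2400 CL16: {rated:.2f} ns")
print(f"DDR4-2133 CL15: {running:.2f} ns")
```

Under these assumptions the rated setting works out to roughly 13.3 ns and the motherboard-limited setting to roughly 14.1 ns, so the shaved clock cycle does not fully offset the slower data rate.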

Component Settings

| Test Hardware Configurations | 2016 Q1 $1275 Professional Workstation | 2015 Q4 $1055 Prosumer PC | Q2 $1600 Mini Work PC |
|---|---|---|---|
| Processor (Overclock) | Intel Xeon E3-1275 V5: 3.60 GHz - 4.00 GHz, Four Physical Cores, Stock Settings | Intel Core i5-6600K: 3.5 GHz - 3.7 GHz, Four Physical Cores, O/C to 4.4 GHz, 1.30V | Intel Core i7-5820K: 3.30 GHz - 3.60 GHz, Six Physical Cores, O/C to 4.3 GHz, 1.20V |
| Graphics (Overclock) | AMD FirePro W5100: <930MHz Core, 1500MHz Memory, O/C to <1030MHz Core, 1550MHz Memory | ASUS GTX 970: <1228MHz GPU, GDDR5-7010, O/C to <1390MHz, GDDR5-7880 | PNY GTX 970: <1178 MHz GPU, GDDR5-7012, O/C to <1378 MHz, GDDR5-7512 |
| Memory (Overclock) | 16GB Crucial DDR4-2400 CAS 16-16-16-39, Motherboard Limited to DDR4-2133 CL 15-15-15-35, 1.20V | 16GB PNY DDR4-2400 CAS 15-15-15-35, O/C to DDR4-2666 CL 14-14-14-28, 1.24V | 16GB G.Skill DDR4-2400 CAS 15-15-15-35, O/C to DDR4-2666 CL 15-15-15-35, 1.325V |
| Motherboard (Overclock) | ASRock Rack C236 WSI: LGA 1151, Intel C236, Stock 100 MHz BCLK | Gigabyte Z170M-DH3: LGA 1151, Intel Z170, Stock 100 MHz BCLK | ASRock X99E-ITX/ax: LGA 2011-v3, Intel X99, Stock 100 MHz BCLK |
| Case | Lian Li PC-Q04B | DIYPC MA01-G | DIYPC HTPC-Cube-BK |
| CPU Cooler | Stock Intel Cooler | Cooler Master Hyper T4 | Corsair H60 Closed-Loop |
| Hard Drive | Samsung 850 Evo 250GB SATA 6Gb/s SATA III SSD | Samsung 850 Evo 250GB SATA 6Gb/s M.2 SSD | Samsung 850 Evo 250GB SATA 6Gb/s SSD |
| Power | Antec EarthWatts Green EA-380D, 80 PLUS Bronze | SeaSonic SS-400ET: 400W, 80 PLUS Bronze | Rosewill RG630-S12: 630W, 80 PLUS Bronze |
| Software | | | |
| OS | Microsoft Windows 8 Pro x64 | Microsoft Windows 8 Pro x64 | Microsoft Windows 8 Pro x64 |
| Graphics | AMD FirePro 14.502.1019 | Nvidia GeForce 359.06 | Nvidia GeForce 352.86 |
| Chipset | Intel INF 10.1.1 | Intel INF 10.1.1 | Intel INF 9.4.2.1019 |

Synthetics

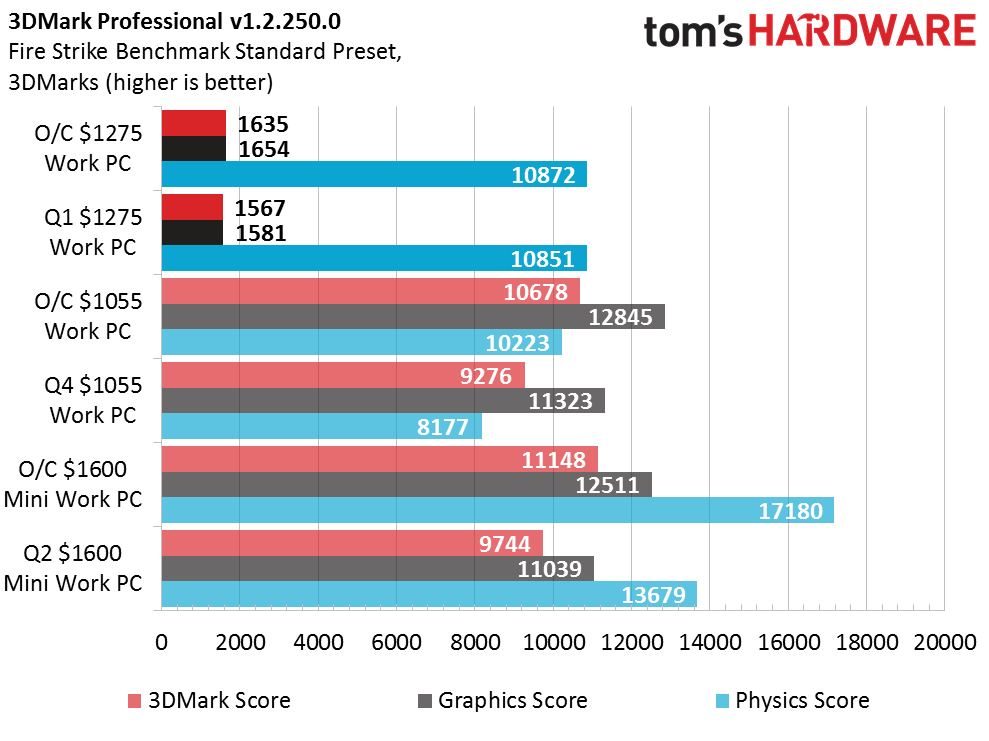

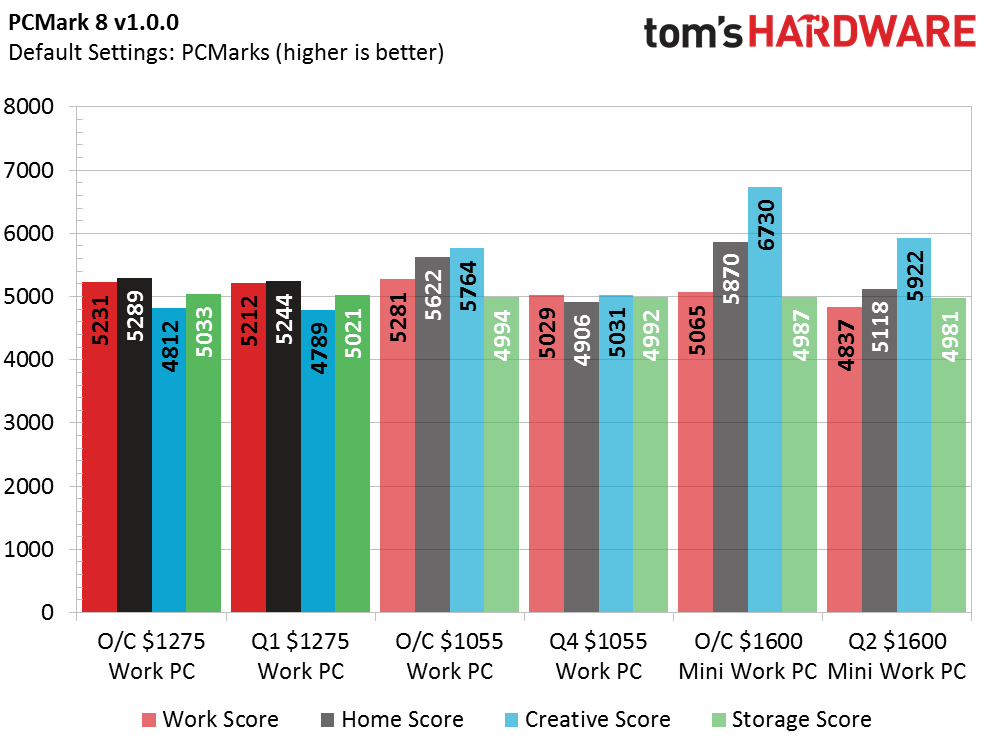

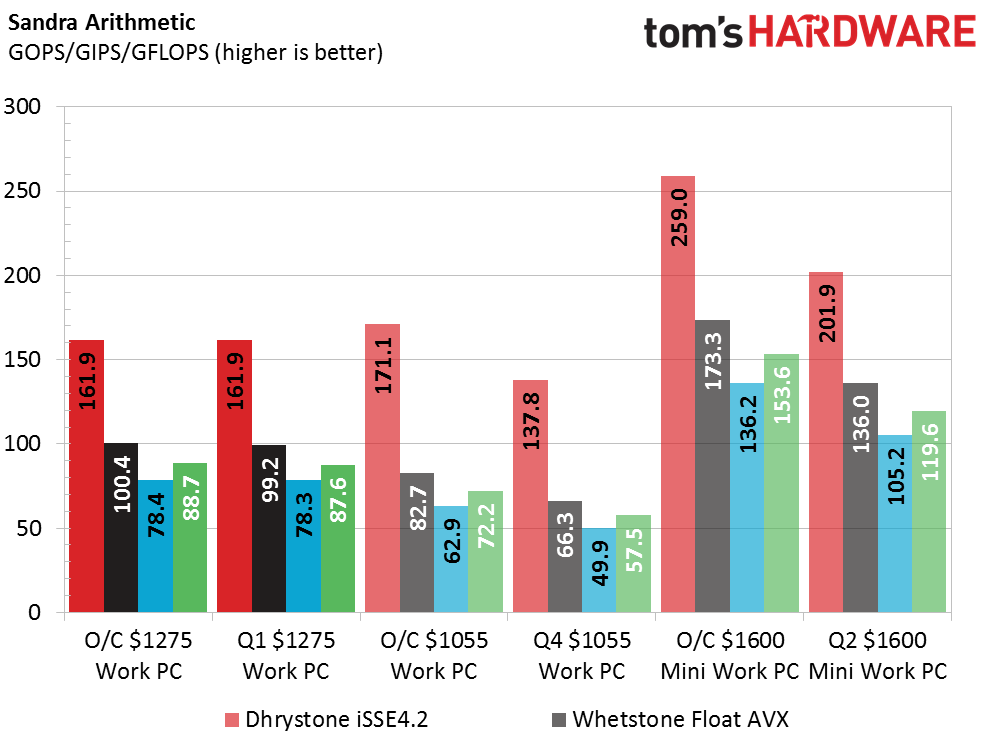

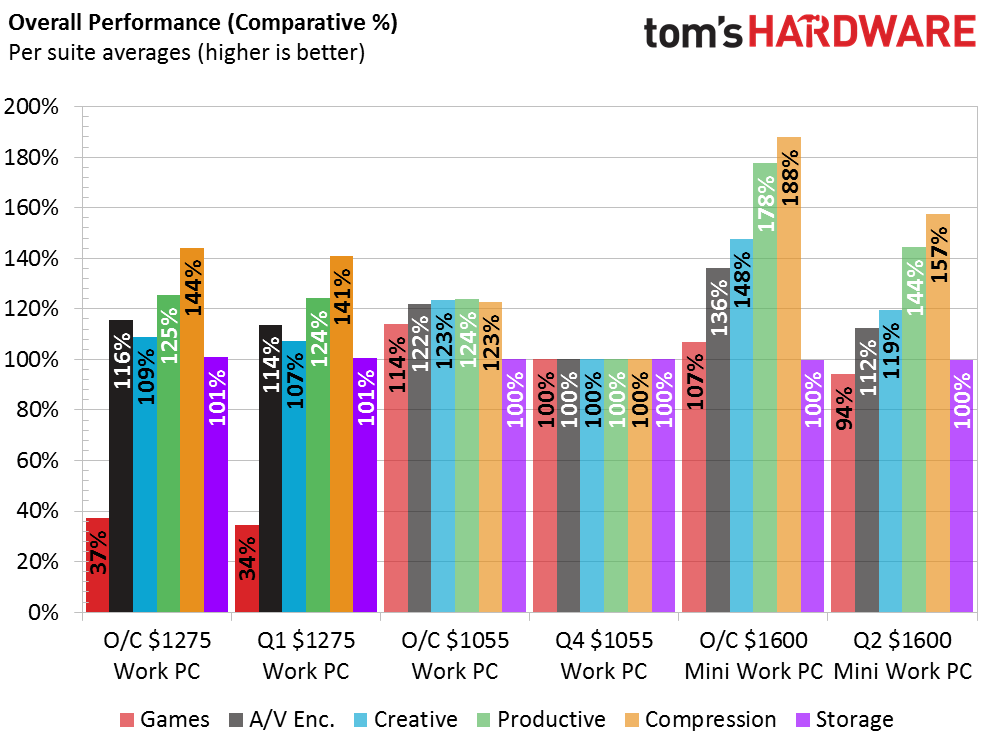

I’m not sure if it’s because we haven’t updated our version of 3DMark in several years or because the drivers for the W5100 aren’t optimized for this type of workload, but this quarter’s build fell flat on its face during the GPU-intensive part of 3DMark’s tests. The other numbers look good, though. Aside from slightly lower results in the rest of the graphics benchmarks, my quad-core Xeon holds strong against the previous builds in the CPU benchmarks.

Gaming

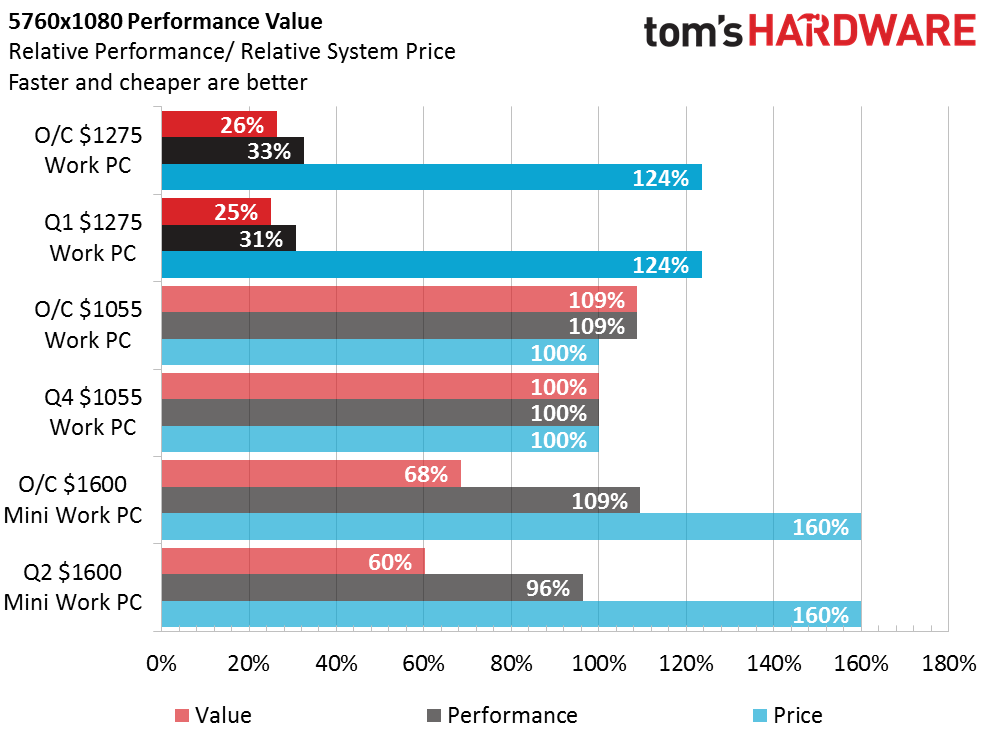

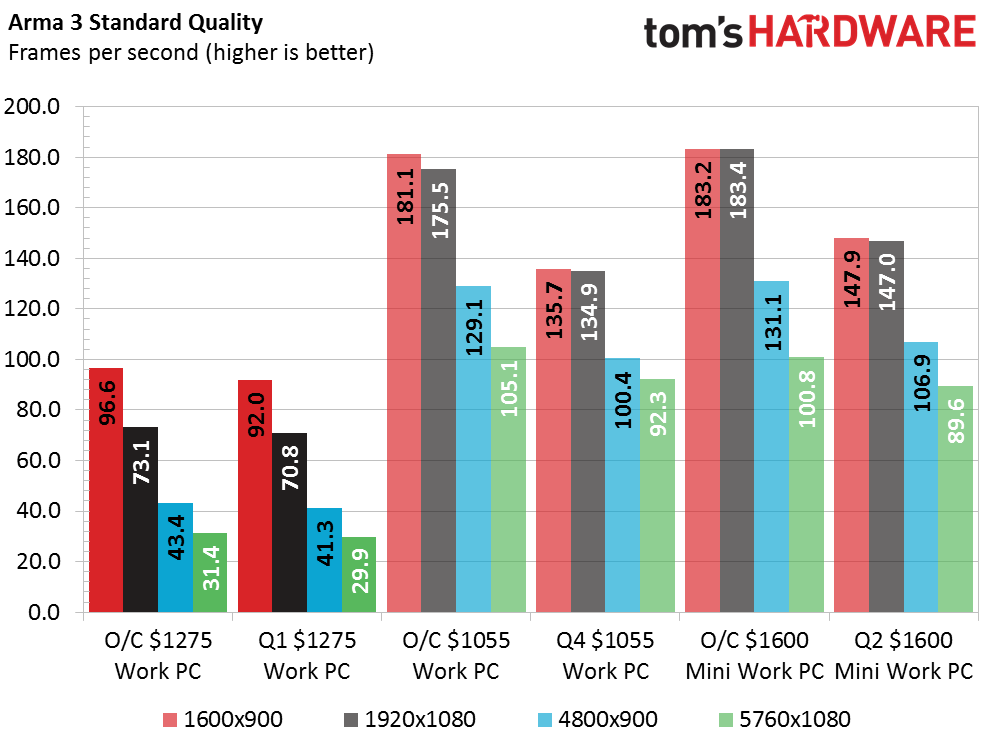

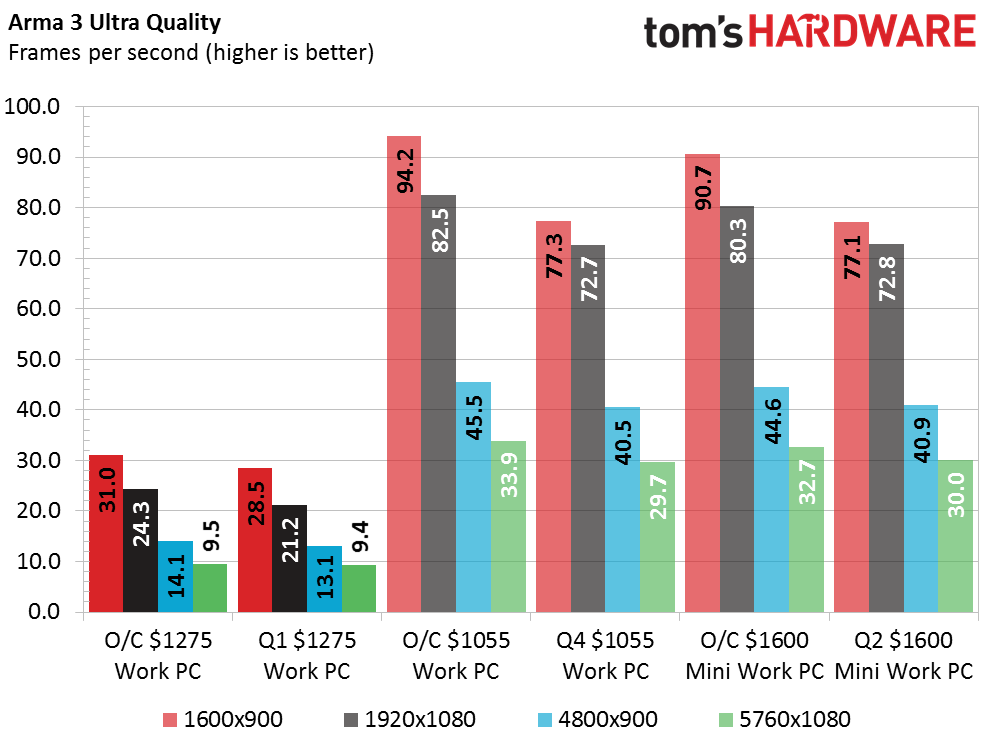

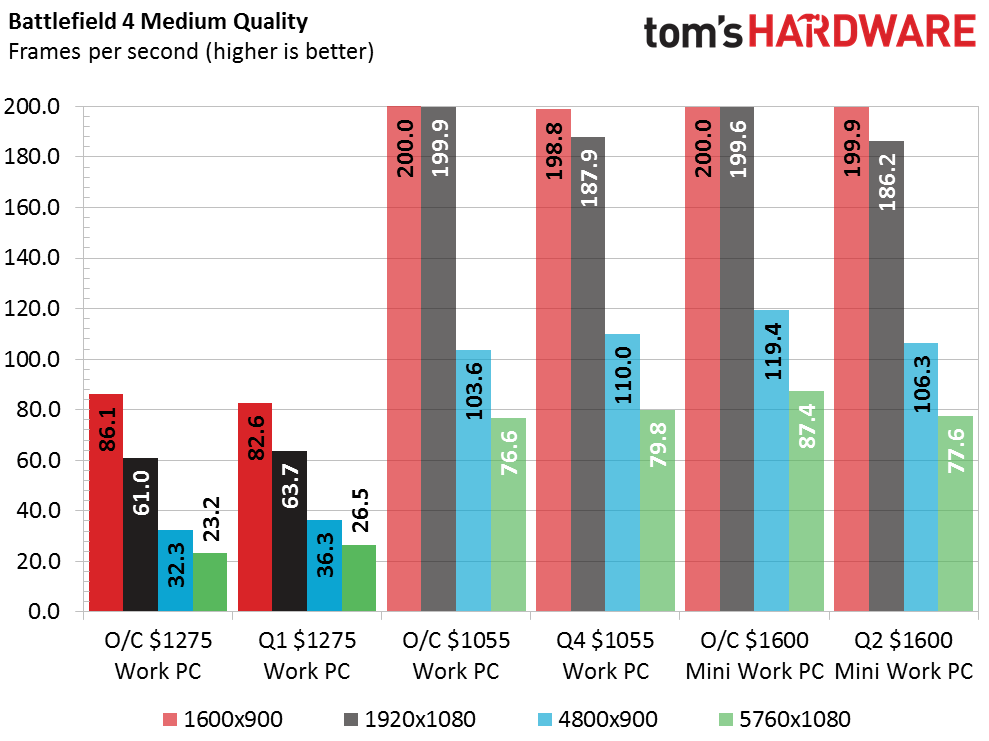

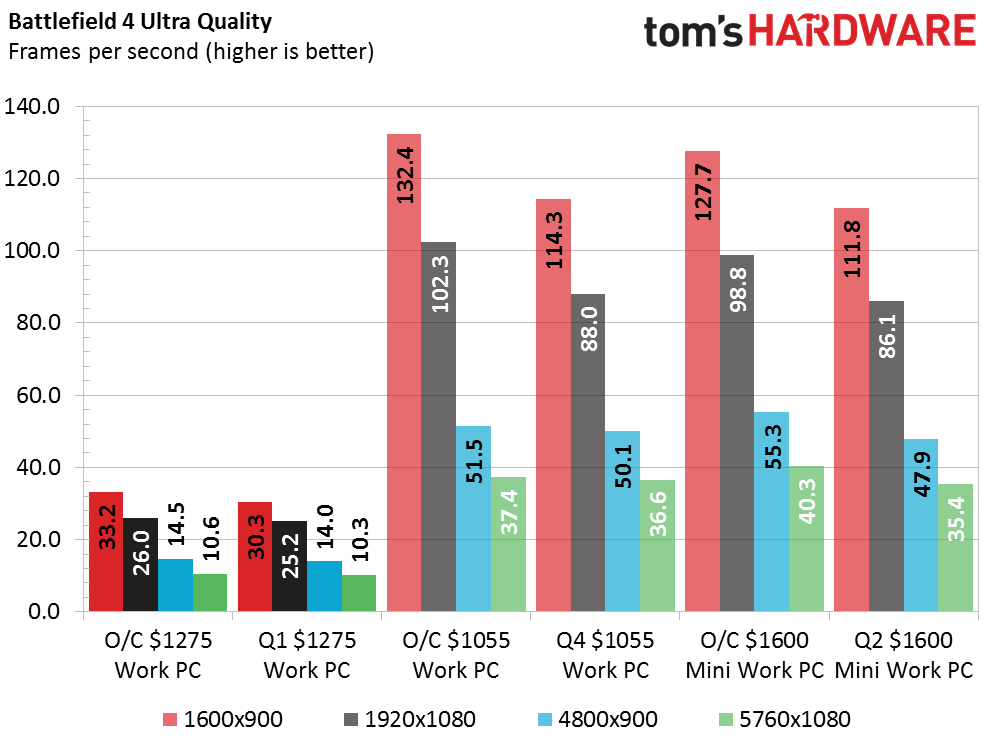

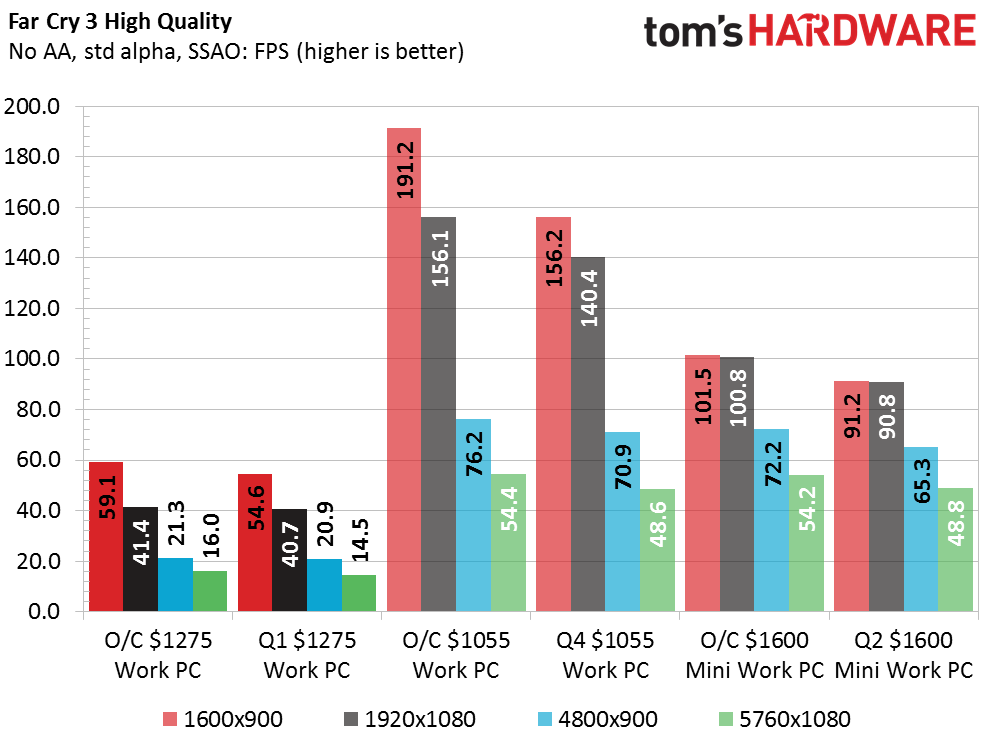

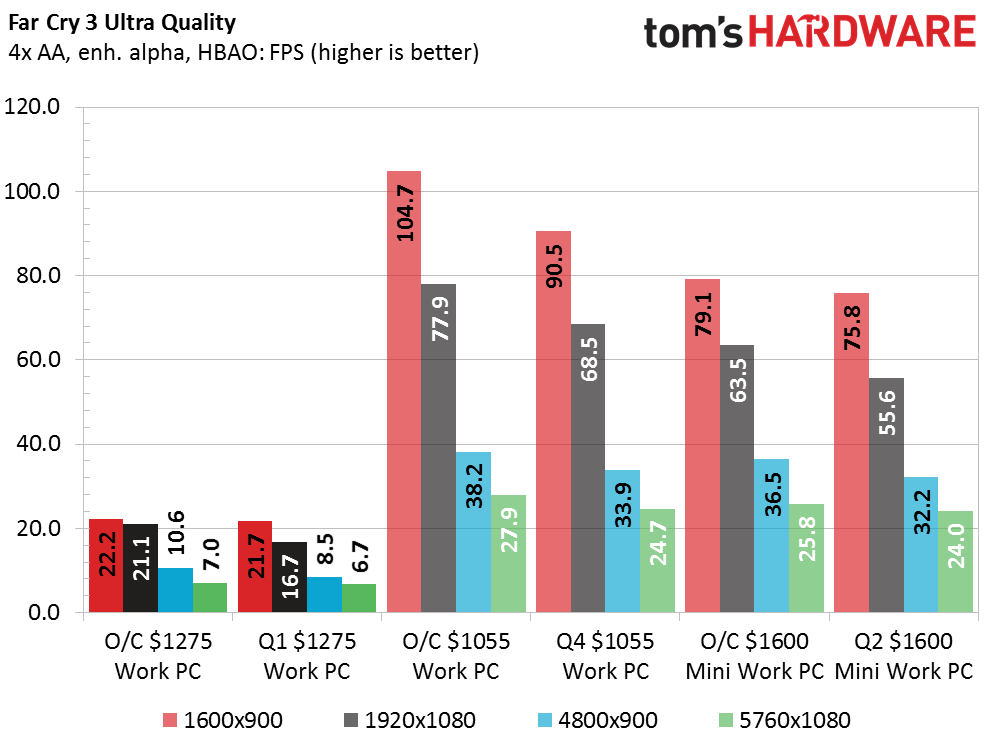

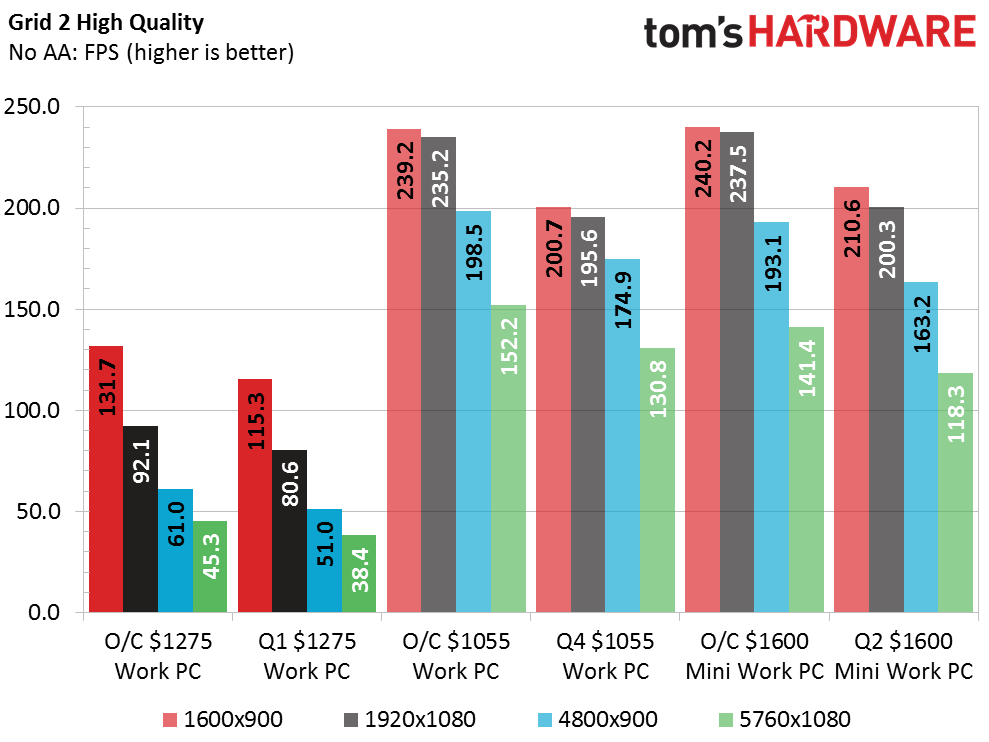

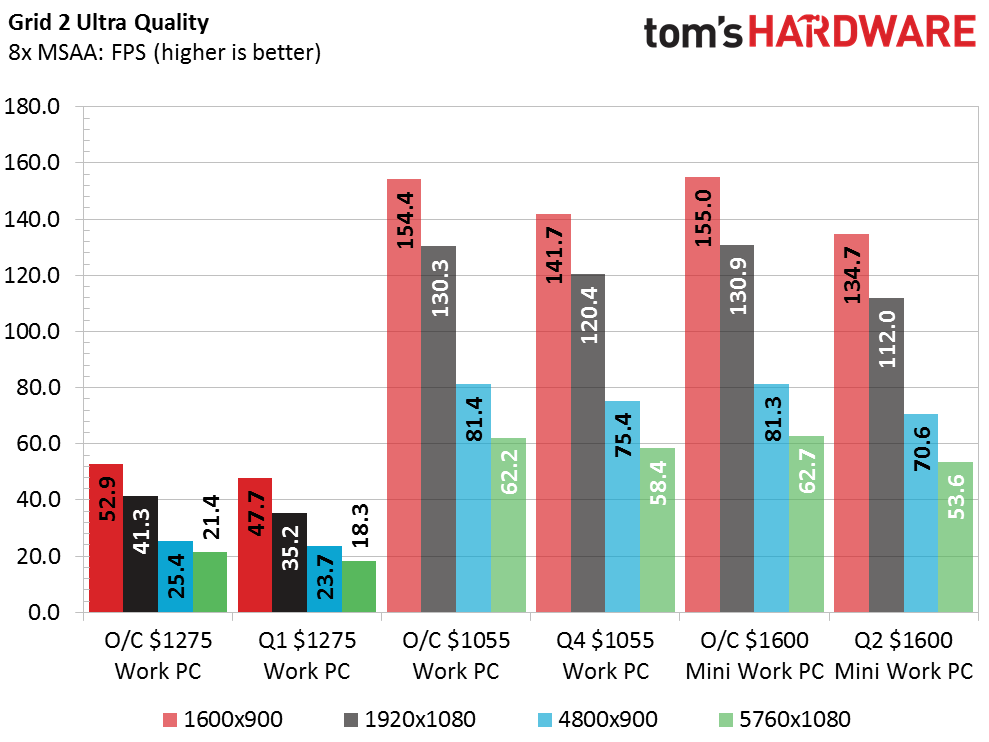

Here’s where things turn ugly for this quarter’s build. It quickly becomes apparent that using a workstation card for gaming is a really terrible idea. In fact, it’s so bad that I’m convinced my GTX 650M-equipped laptop from 2012 could wipe the floor with this build in the gaming section. Still, the entire point of this build was to get hard performance numbers for this kind of hardware, which is exactly what we got. Hopefully it won’t look as bad later on in the performance-value chart.

Applications

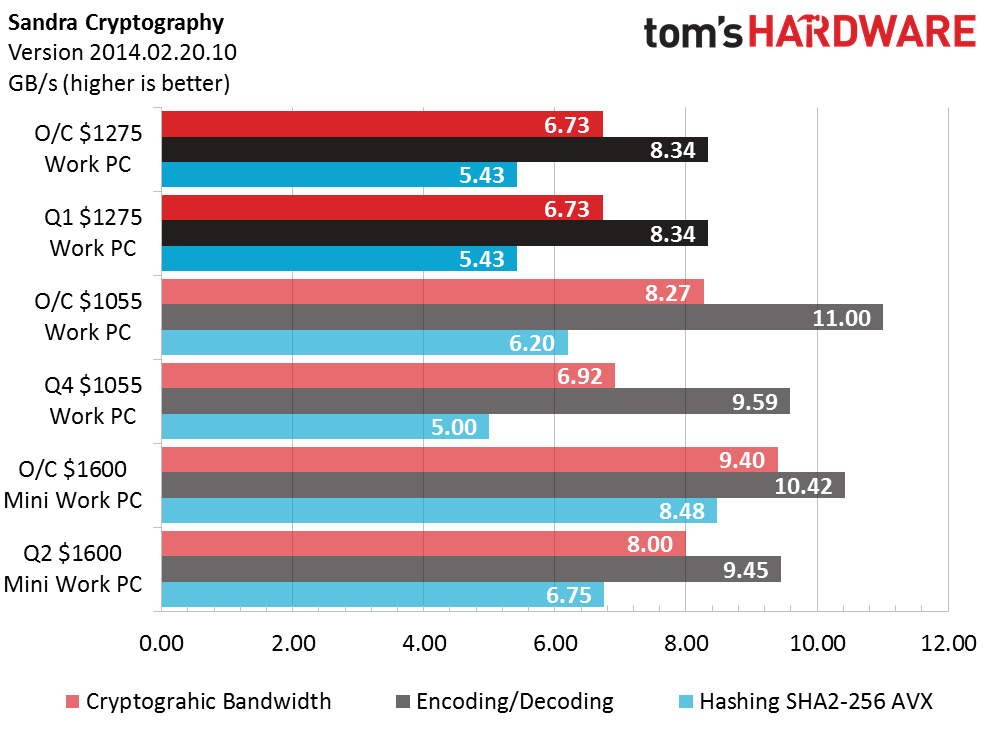

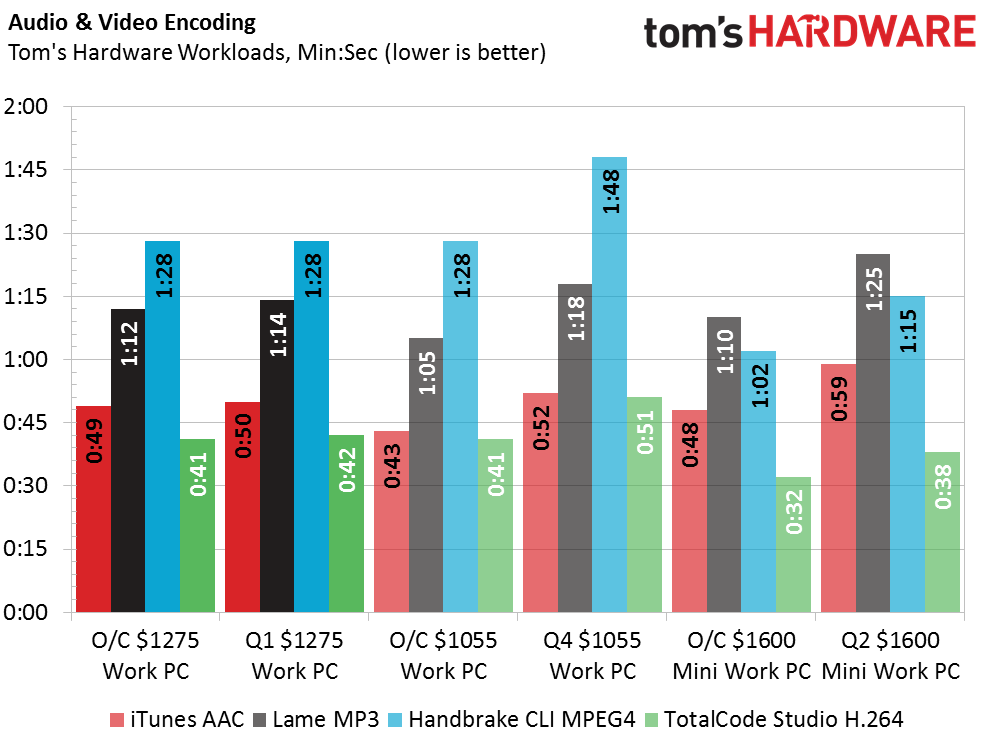

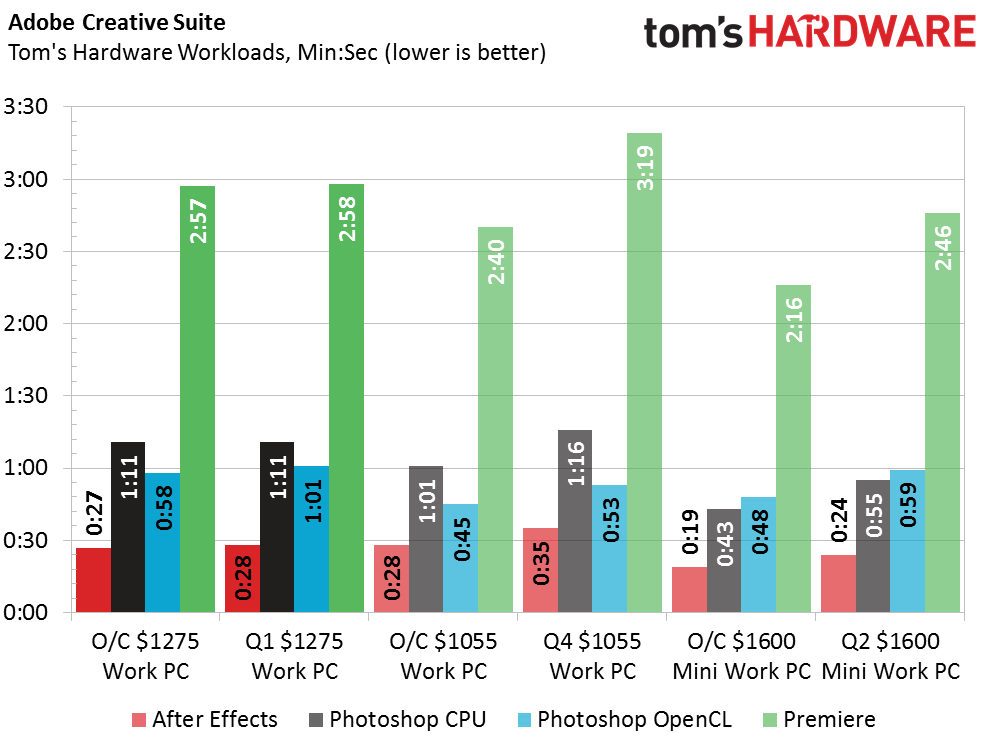

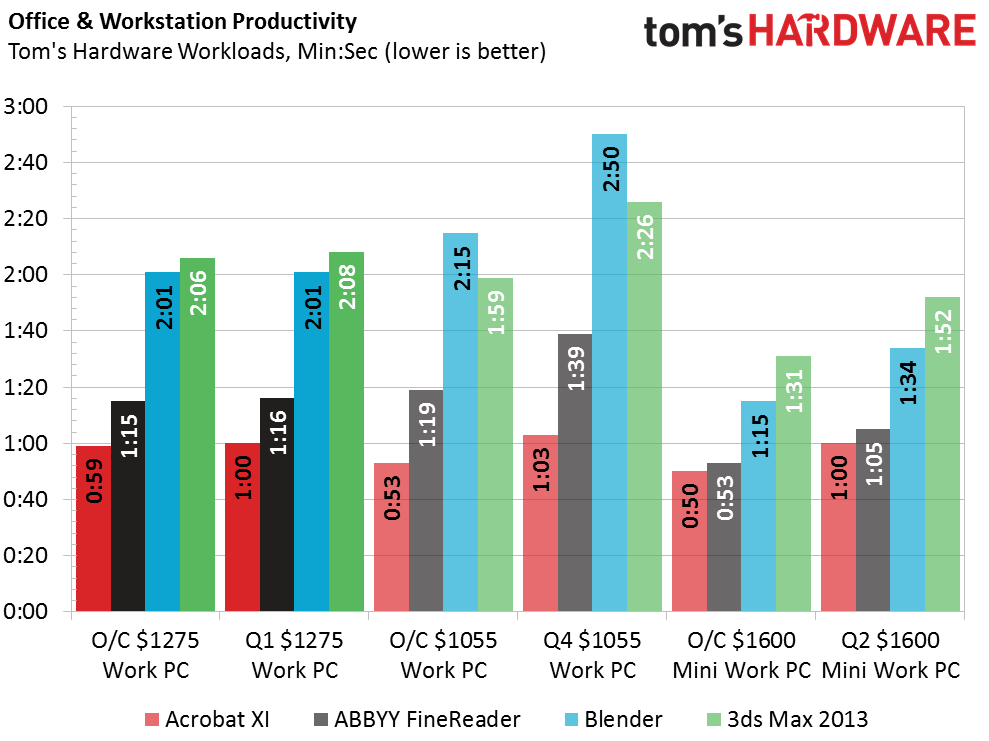

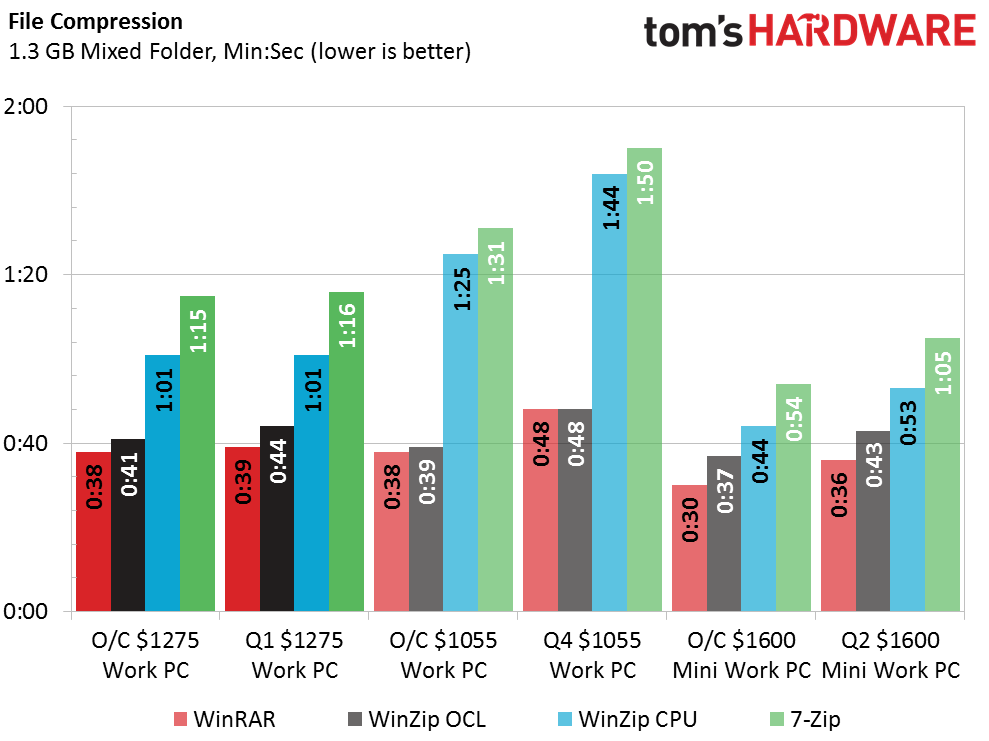

The stock 4GHz clock speed of my Xeon gives me a little bit of an advantage over last quarter’s Prosumer build, but only at stock settings. It would have been nice to see what I could have pulled off with a CPU overclock, but that’s just not going to happen today. Similarly, the W5100 gives me the upper hand in some of the OpenCL workloads, though not in as many of them as I would have liked.

Power, Heat & Efficiency

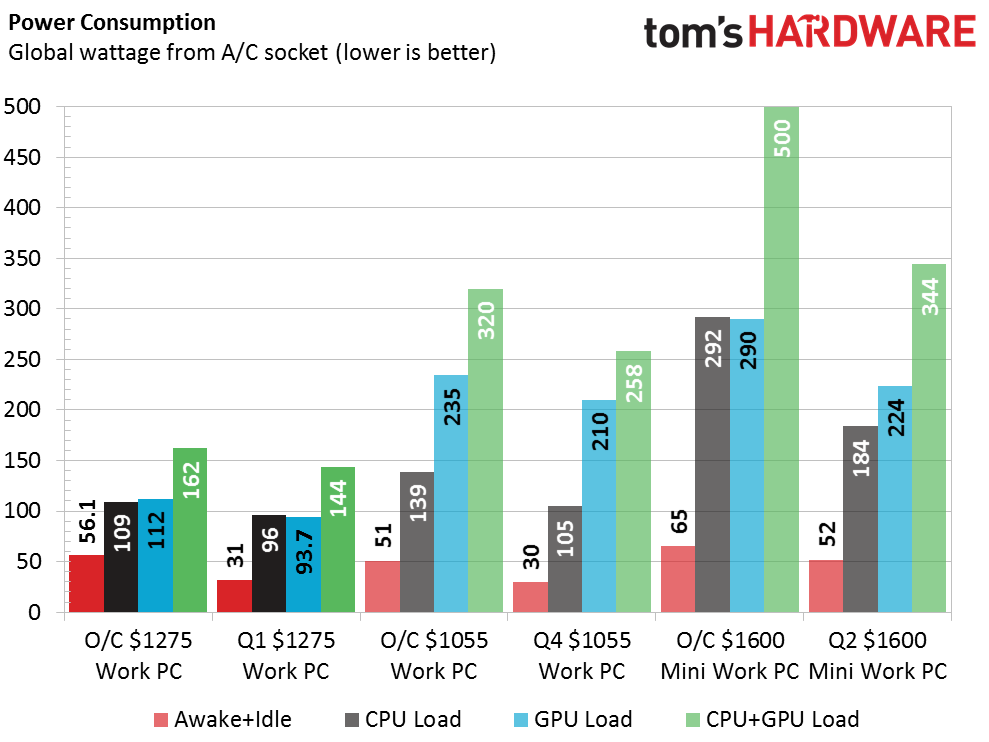

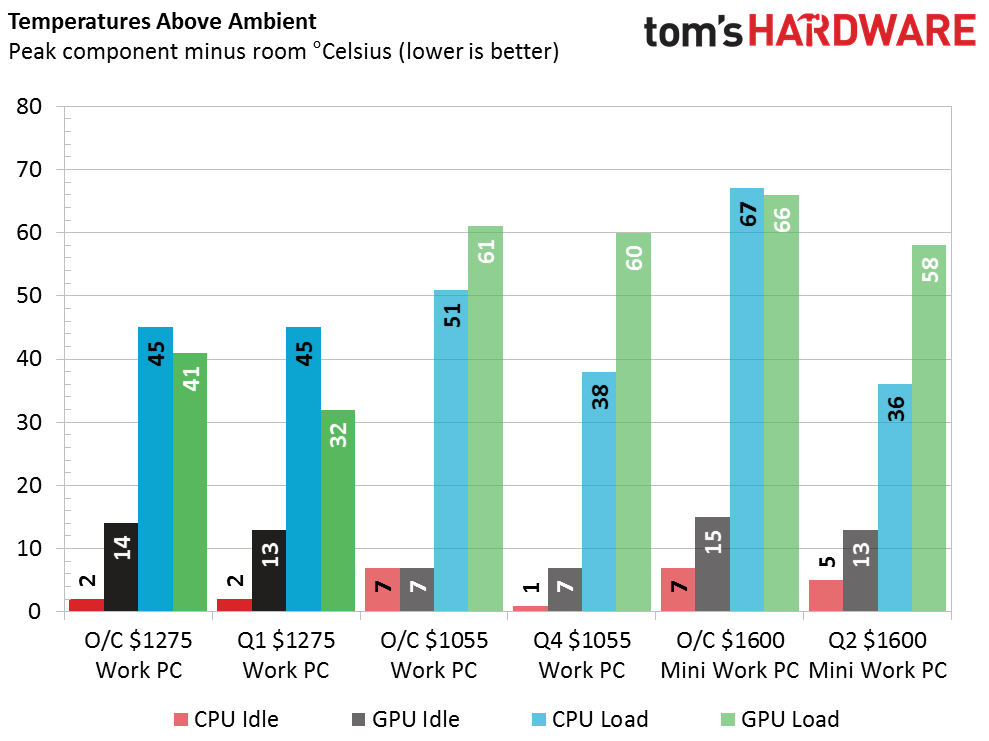

Thanks to the low power draw of the W5100, even at full CPU and GPU load I managed to use less than 50 percent of the EA-380D’s rated power output. That low power draw also helps to keep the GPU temperatures low, although the mediocre performance of the stock CPU cooler does offset some of that benefit.


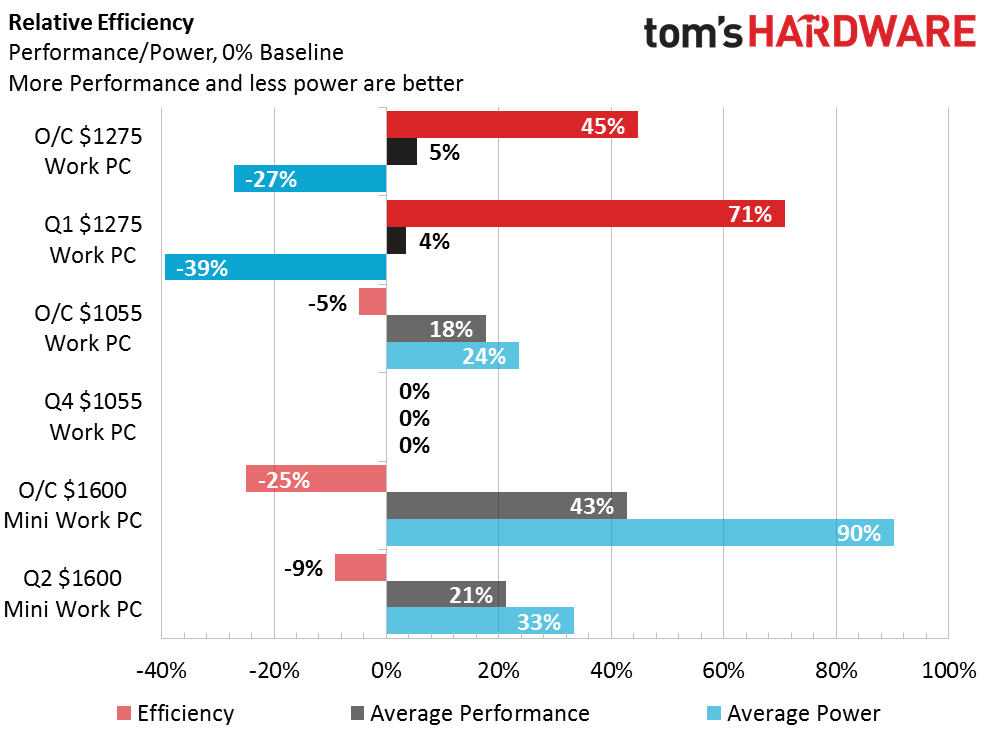

Overlooking the beatdown I took in the gaming benchmarks, the rest of the performance numbers look pretty strong, though honestly, for the money I spent, they could have been better. Still, my far-below-average power draw allows me to shoot past the other builds when it comes to efficiency.

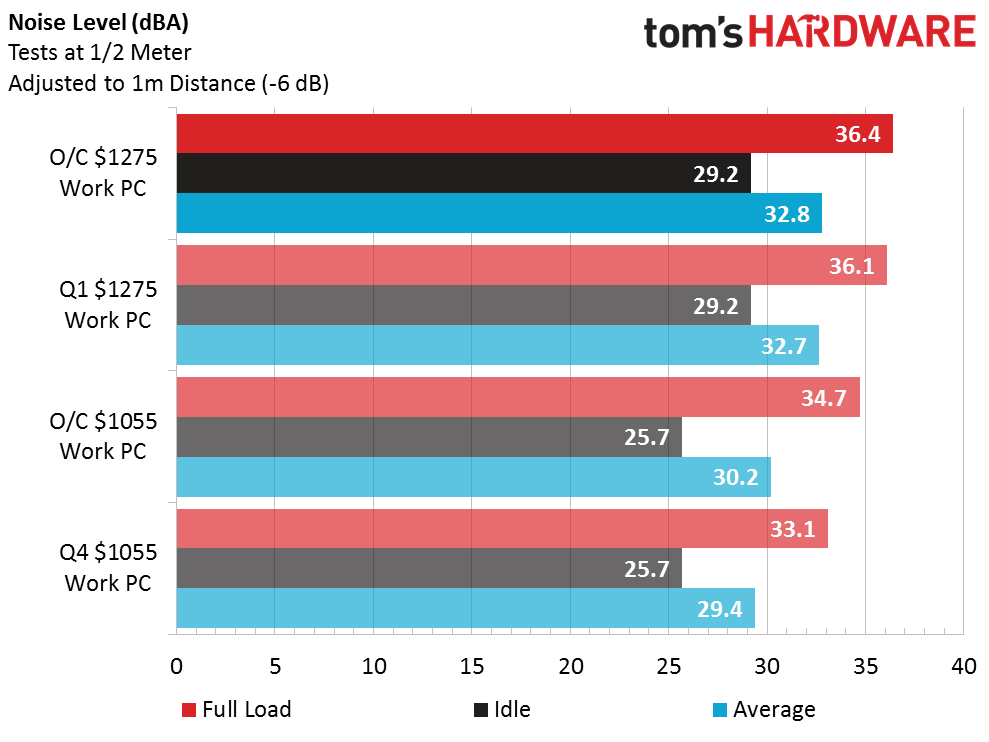

The noise numbers aren’t that bad either considering that I’m using a stock CPU cooler, a GPU with a very small fan and a case with a wide-open side panel.

Value

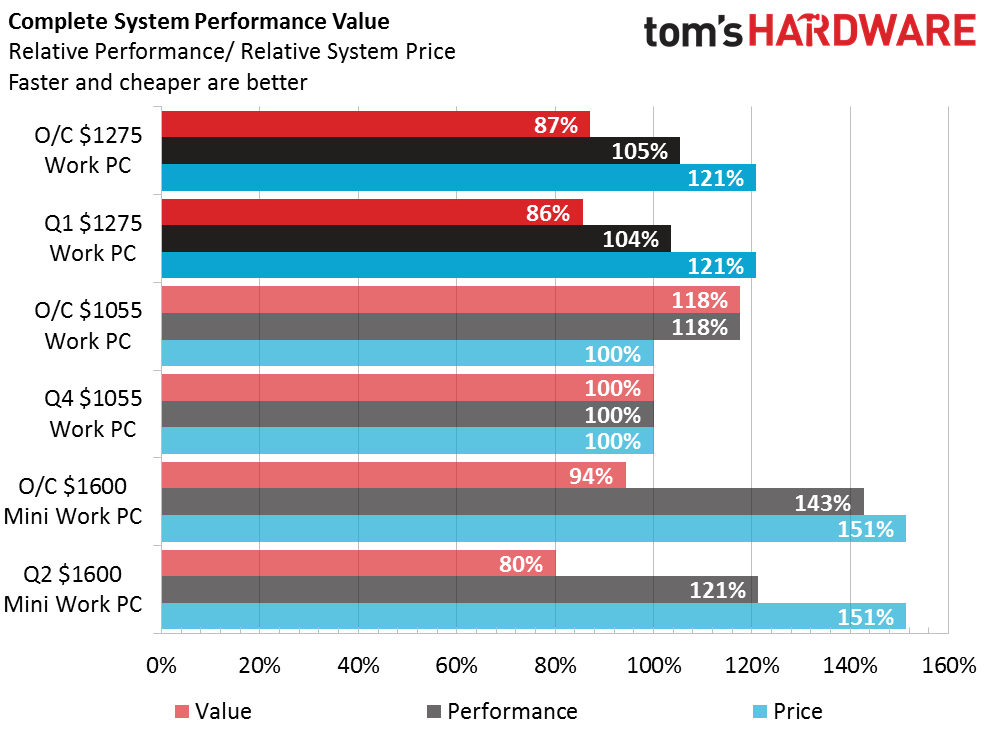

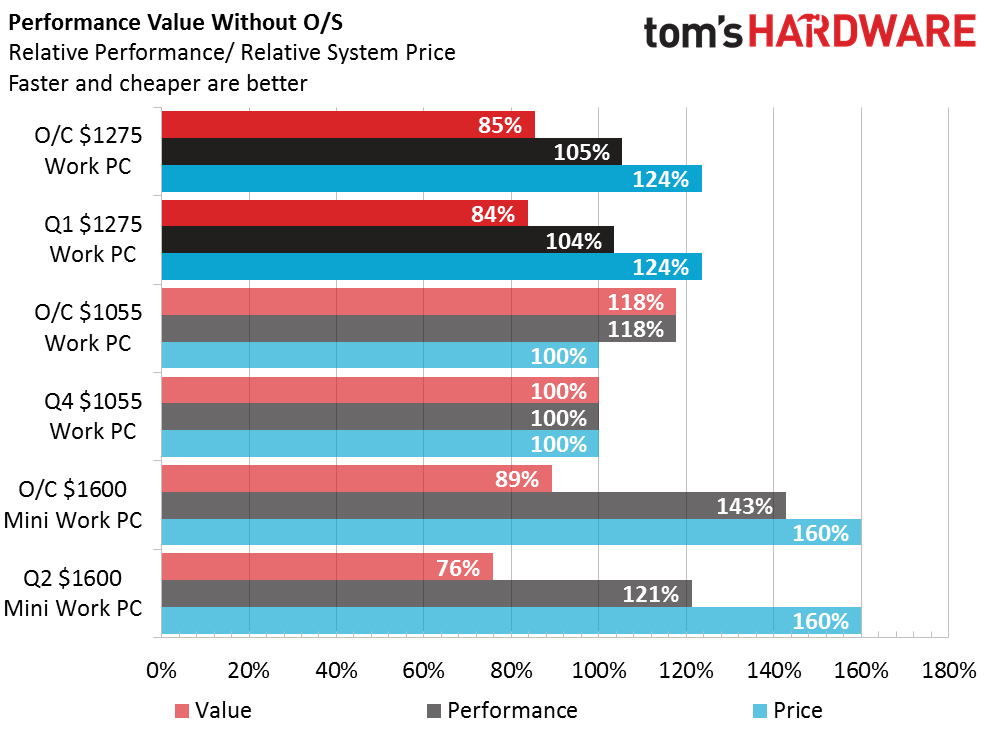

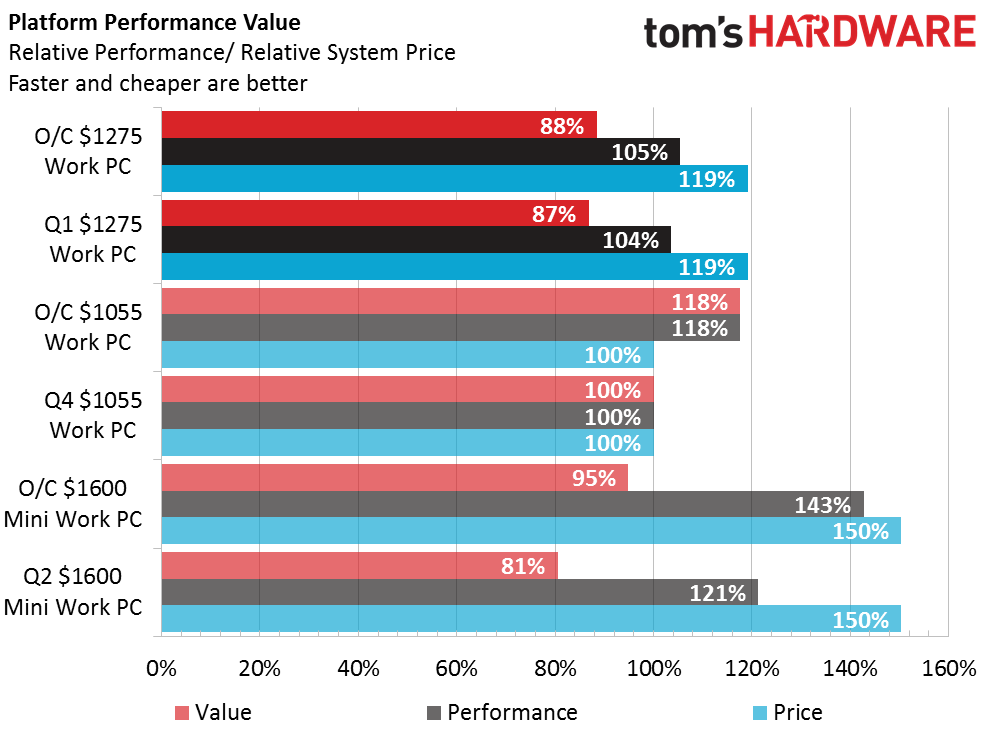

Despite all the money I spent and my rig’s mediocre performance, I somehow managed to come in second place, but only at stock performance levels. However, had I been able to overclock my CPU, I bet I would have been able to solidify my second place standing in overclocked performance as well.

I gain a slight value boost when the OS is taken out of the equation, but it’s not a big enough change to make any difference.

Getting rid of the $65 I spent on non-platform components also isn’t enough to significantly change anything.
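As a rough illustration of why removing $65 barely moves the needle, the Python sketch below computes how much performance-per-dollar improves when a cost is excluded and performance is held fixed. It assumes the $1275 headline figure is the full build price and ignores that the other builds shed their own non-platform costs in the same chart; `value_gain_pct` is a hypothetical helper, not the formula behind the charts.

```python
def value_gain_pct(full_price: float, removed_cost: float) -> float:
    """Percent increase in performance-per-dollar at fixed performance
    when removed_cost is excluded from full_price."""
    reduced_price = full_price - removed_cost
    return (full_price / reduced_price - 1.0) * 100.0


# $1275 build price (article title) and $65 of non-platform components.
gain = value_gain_pct(1275, 65)
print(f"Value gain from dropping $65: {gain:.1f}%")  # about 5.4%
```

A shift of about 5 percent is small next to the gaps between builds, and the competing builds gain from the same adjustment, which is consistent with the rankings staying put.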

As expected, I’ve come in dead last when it comes to high gaming resolutions, but that’s not exactly as bad as it sounds. This build was never intended to be used for gaming, so its performance in that aspect isn’t surprising. Overall, even with my part changes, I mostly accomplished what I set out to do, which was to get hard data on how true professional components perform in our benchmarks. I’ve even learned a couple of things in the process.

The first is that we could really use some additional “professional” application benchmarks, such as CAD and other GPU-intensive applications, both to get more meaningful data in situations like this one and to help further quantify GPU performance in general. We’ve got a few applications in our benchmarks now that put our GPU choices to the test, but they only scratch the surface rather than give us a true understanding of how well certain GPUs work with professional applications.

The second thing, which ended up being more of a demonstration than a learning experience for me, is that using workstation-grade hardware outside of a limited set of applications is mostly a waste of time and money. Unless you use a specific set of programs or need to be very precise with your data, you’re better off choosing consumer-grade hardware.


Chris Miconi is an Associate Contributing Writer for Tom's Hardware, covering Cases.

- cadder: It is interesting to see a build with workstation components and how they compare to enthusiast-oriented components. I work in the building construction industry and almost everybody uses Revit for CAD. Revit is slow and power-hungry, only uses one core, doesn't need much GPU but needs a single core running as fast as you can get. This is a misconception about workstation users, many of them don't need a lot of slow cores. I wonder how many people that use workstations benefit from lots of cores and how many don't. This would be an interesting study. My company is switching from desktops to laptops- my Dell desktop workstation with dual Xeons is 3/4 as fast as my Dell Precision workstation laptop.

  If you are loading a lot of apps like we do, a 250GB drive is not enough. When my newly configured and loaded workstation was put on my desk it had about 350GB of files on the hard drive.

  When I built CAD workstations for my previous company about 6 years ago, I used i5 processors and overclocked them. I took them as high as I could go and then backed down 0.2GHz for a little safety margin. The machines ran 24/7 with no problems.

- edlivian: i understand you couldn't get ecc memory for this review, but the whole point of a serious workstation is not performance as you have said, its about precision with no errors. There is no way you could quantify this with benchmarks. ECC memory is an invaluable requirement in the server market, or anything you can not live with an error. And 90% of the workstation and server market are not custom builds, they mostly come from Dell, HP, Lenovo and Apple

- Onus: Kudos for your bravery and sacrifice, your system dying horribly in the charts, but not before heroically yielding some usable data. As you said at the outset, it was nice to see something other than a gaming build.

- Valentin_L: I have been managing PC builds for our office for several years. We have a dozen seats of Solidworks with some simulation softwares. For productivity, we ditched the ECC requirement for faster compute... We ended up with overclocked mainstream cpu 2700K, 3770K and I'll continue with 6700K. The $$ saved from not going ECC goes into SSD and other productivity "enhancer". Staff wait less, they are happier, complain less about PC freezing over and over. Then, do we have memory issues, surely, I mean, I don't have ways to measure it, but if somebody raised a hand and say that he takes the same file twice from the server and once it works, once it crash, we know there is an issue somewhere. But it never seems to happen consistently enough that it hinders productivity. Plus... I am not building rockets...

  If you browse through solidworks own forum (not sure it's open to public) you will see a lot of report of people moving to mainstream overclocked cpu + professional card.

  For the server side... YES, ECC everywhere, Xeon (or the equivalent AMD) everywhere, redundancy like our life depends on it... that, we can't screw up.

- DouglasThurman: I think this build was a little doomed to begin with. Very nice small set of parts though and I like the use of a workstation graphics card. Most times when I come across a laptop with a workstation card in it in a refurb sale I'd choose it over one with a lesser regular gaming graphics card. Performance is a little slower but still rock solid and better power consumption.

- MartenKL: We have 32GB in our current workstation builds and I would like to double that to our next build, and we are going back to ECC and no overclock this time due to low gains in performance and added crashes with overclocked systems. Also running Autodesk Building Design Suite with Revit and Autocad. Main focus will be single threaded performance. Hopefully I will find a solution for striping two 500GB samsung Pro 950 as when all software is installed we approach 500 GB.

- AllanMeineche: Regarding the choice of CPUs supporting ECC; Intel has a handy tool for making that decision - http://ark.intel.com/Search/Advanced?s=t&ECCMemory=true

- ralanahm:

  > Regarding the choice of CPUs supporting ECC; Intel has a handy tool for making that decision - http://ark.intel.com/Search/Advanced?s=t&ECCMemory=true

  That list has a lot of CPU

- If your RAM had been ECC, your results would have been even lower. It's too old now, but the AMD FX series supports ECC (unregistered only) out of the box, if the motherboard also supports it. So you could have gotten an FX-8370 for about 200$, with an Asus motherboard that supports ECC RAM for about 150$, then went for a Firepro w7100 or such with the money saved.

  Nice try, but if you didn't get it running ECC in the end, it's a fail imo.

- gunbust3r: Is the $1232 Prosumer PC going to be better? I kind of dread entering the giveaway at this point for fear of winning this stinker.