AMD takes $800M haircut as US gov't cuts off China's AI GPU supply

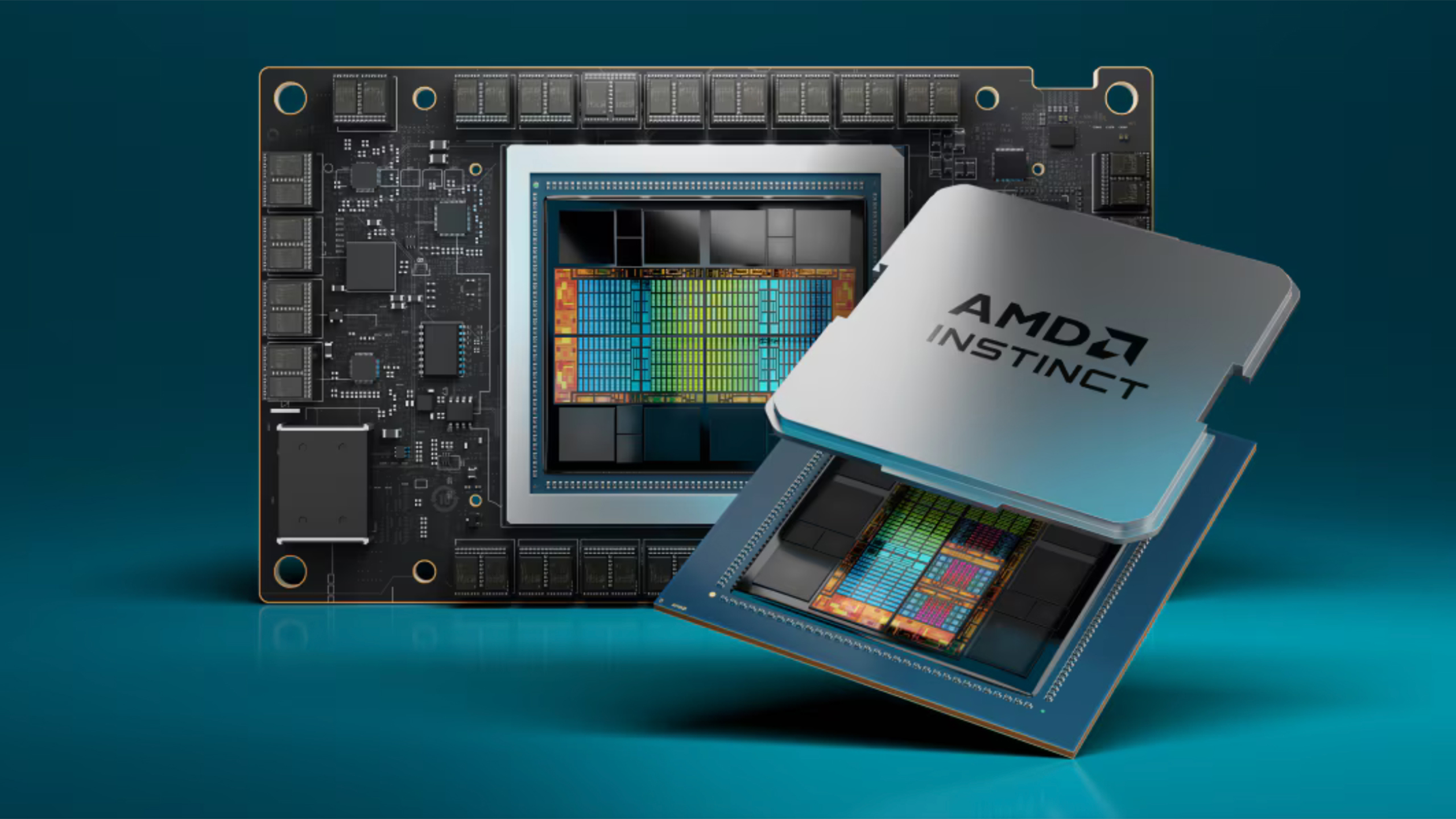

AMD's MI308 chips are now subject to export licenses.

The U.S. recently issued fresh restrictions on AI chip exports to China and other countries, further reducing the maximum allowable performance that manufacturers like Nvidia and AMD can deliver to their customers in the affected regions. Because of this, AMD reported in an SEC filing that it might suffer “charges of up to approximately $800 million in inventory, purchase commitments, and related reserves.”

The U.S. government has been taking steps to limit Beijing’s access to America’s most advanced chips to ensure the U.S. will retain its edge in artificial intelligence and prevent its East Asian rival from outpacing it. Because of this, both AMD and Nvidia have created AI GPUs specifically designed to meet the federal government’s restrictions. However, the White House updated its limitations recently, making the Nvidia H20 and AMD MI308 ineligible for export without a license.

“On April 15, 2025, Advanced Micro Devices, Inc. (the “Company”) completed its initial assessment of a new license requirement implemented by the United States government for the export of certain semiconductor products to China (including Hong Kong and Macau) and D:5 countries…,” the company said in its SEC filing. “The Export Control applies to the Company’s MI308 products. The Company expects to apply for licenses but there is no assurance that licenses will be granted.”

AMD isn’t the only one taking a substantial cut thanks to expanded export controls. Nvidia has also been hit hard, with the company expecting to take a $5.5 billion write-off on its H20 GPUs. The U.S. Department of Commerce said (via Reuters) that it’s enacting these requirements to act on “the President’s directive to safeguard our national and economic security.”

This new restriction will make it harder for Chinese companies to acquire the chips they need to train their AI models. Nevertheless, there is evidence that these bans and sanctions have largely been ineffective. We’ve already seen some examples of Chinese businessmen importing sanctioned Nvidia H200 GPUs, and it’s widely reported that these advanced chips can be brought into China through intermediaries located in nearby countries such as Malaysia, Vietnam, and Taiwan. Singapore and Malaysia are making moves to reduce the leakage of AI chips into China, but it remains to be seen how effective those moves will be against the massive black-market demand.


Jowi Morales is a tech enthusiast with years of experience working in the industry. He has been writing for several tech publications since 2021, with an interest in tech hardware and consumer electronics.


TerryLaze

ohio_buckeye said: Maybe this will be good for gamers if these start ending up on gpus?

What are you going to do with an AI chip in a gaming GPU?!

It uses a little bit of AI for frame generation and stuff like that, but these AI chips are for a completely different scale of things.

Also, unless you want to spend thousands of $$$ on a GPU, you don't want them.

Misgar

What about the $5.5B haircut taken by NVidia on H20 cards? Makes AMD's $800M look relatively small.

https://www.cnbc.com/2025/04/15/nvidia-says-it-will-record-5point5-billion-quarterly-charge-tied-to-h20-processors-exported-to-china.html

Should be good news for Huawei if AMD and NVidia are not allowed to sell AI in China for several years.

usertests

ohio_buckeye said: Maybe this will be good for gamers if these start ending up on gpus?

Not if they don't have any shaders or graphics outputs.

However, I don't see why other businesses can't use these for AI. They may be hobbled for the Chinese market but they are still powerful.

Misgar said: What about the $5.5B haircut taken by NVidia on H20 cards? Makes AMD's $800M look relatively small.

Here it is:

https://www.tomshardware.com/tech-industry/artificial-intelligence/nvidia-writes-off-usd5-5-billion-in-gpus-as-us-govt-chokes-off-supply-of-h20s-to-china

In proportion to AI accelerator market share / market cap, maybe it's worse for AMD?

Eximo

usertests said: In proportion to AI accelerator market share / market cap, maybe it's worse for AMD??

Much worse. Nvidia can absorb it, AMD less so. However, that does mean they should be able to still sell them to others. It might help some fledgling AI companies jump the queue and get accelerators faster.

As to using them for gaming, it could be done. Nothing says someone won't make an AI-dependent game, and having an AI accelerator card may become a thing in the future. Now I would expect this to get baked into gaming GPUs by the time that happens, but still.

I think an RPG with LLM driven NPCs would make for an interesting experience.

usertests

Eximo said: I think an RPG with LLM driven NPCs would make for an interesting experience.

We'll definitely see open world RPGs/games utilizing LLMs and other AI/ML methods for NPC interactivity and maybe complex user-driven storylines (although if done wrong it will become buggier than you could possibly imagine). We will also see real-time voice synthesis, which can help solve the problem of games taking gigabytes to store a relatively small amount of voice acted canned responses. There are already companies allowing you to license voice actors for synthesis.

I think it comes down to what the next-gen consoles ship with, since PC gaming follows wherever they go. I can't see PS6 or the Xbox 720 shipping with more than 32 GB of memory, even though the sky's the limit for some of this stuff. We may see integrated NPUs in the 300-1000 TOPS range, or they could simply make the GPU larger so developers can choose.

I doubt PC gamers will ever need to add PCIe/M.2 AI accelerators. AMD claims the 9070 XT is good for up to 779 INT8 TOPS with sparsity, or 1557 INT4 TOPS with sparsity. What effect that has on using it for graphics at the same time, I do not know. But it may be another 5+ years before games require a form of AI acceleration matching next-gen consoles, and more powerful GPUs will be available by then.

ottonis

"The U.S. government has been taking steps to limit Beijing’s access to America’s most advanced chips to ensure the U.S. will retain its edge in artificial intelligence and prevent its East Asian rival from outpacing it."

In music production, film editing or photography, it has long been known that limited tools may actually boost creativity and output. For example, a musician who has installed more than 500 VST plugins in his DAW will never get to know and master each individual virtual instrument as well as a similarly talented artist who is working with only 20-30 VSTs. Excellent and skilled photographers often use relatively old cameras and lenses (5-10 years old), which are in technical terms totally outdated but which they have mastered so well that the camera never gets in the way of the creative process.

Something similar may be expected with CPU/GPU hardware: consoles such as the PlayStation, the Nintendo Switch or the Xbox are much, much more constrained compared to contemporary high-end PCs. However, the programmers are forced to optimize their code for exactly one single (albeit limited) configuration, which actually leads to perfectly playable and great-looking games on consoles.

And with DeepSeek we have seen that restrictions in computing hardware seem to stimulate lots of workarounds and code and workflow optimizations that ultimately deliver surprisingly competitive results.

TerryLaze

ottonis said: Something similar may be expected with CPU/GPU hardware: consoles such as the PlayStation, the Nintendo Switch or the Xbox are much, much more constrained compared to contemporary high-end PCs. However, the programmers are forced to optimize their code for exactly one single (albeit limited) configuration, which actually leads to perfectly playable and great-looking games on consoles.

LOL!

No!

All the console games are made on a few game engines that create the same crap code for consoles as for PCs, it's the same engine that makes the code, they just tick the PC box instead of the console box and recompile it.

It's also all the same hardware except for Nintendo; everybody is on x86 and a normal GPU.

A lot of games run very badly on consoles and also on PC.

The PC just has a lot more additional options for more filters and more crap on top (raytracing and so on).

For more than a decade now (PS4 and Xbone), every PC game has not been a port of a console game but has been a console game with different settings applied.

jp7189

ohio_buckeye said: Maybe this will be good for gamers if these start ending up on gpus?

Here's hoping the 5090D lands on the list so the rest of the world might actually have a chance to get GB202-based gaming cards. So far, there appears to be little more than a token supply outside of China.

emerth

AMD and nVidia should sell these products in the West then. There is essentially unlimited demand for AI chips everywhere; there is no need to landfill these things.