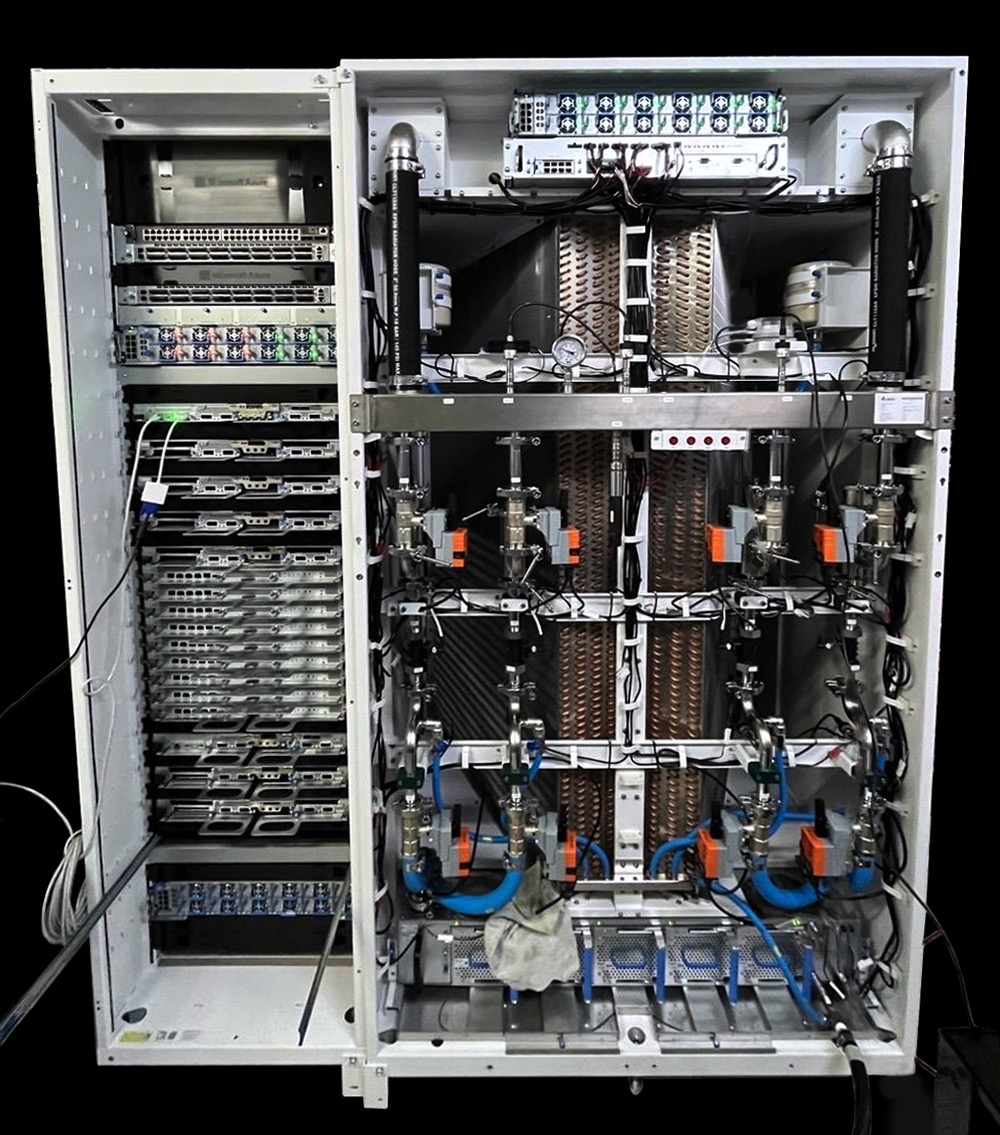

Microsoft Azure flaunts world's first custom Nvidia Blackwell racks: tests its own 32-GPU server rack and liquid cooling solution

Non-Nvidia servers powered by Blackwell are up and running.

Nvidia's Blackwell processors are among the most sought-after data center hardware these days, as companies race to train large language models (LLMs) with ever-larger numbers of parameters. Microsoft was rumored to be the first company to get its hands on Blackwell servers, but that had not been officially confirmed. Today, the company said that it had not only obtained Nvidia Blackwell parts but also had them up and running.

"Microsoft Azure is the first cloud [service provider] running Nvidia's Blackwell system with GB200-powered AI servers," a Microsoft Azure post over at X reads. "We are optimizing at every layer to power the world's most advanced AI models, leveraging Infiniband networking and innovative closed loop liquid cooling. Learn more at MS Ignite."

So, Microsoft Azure has at least one GB200-based server rack with an unknown number of B200 processors, presumably 32. It uses a highly sophisticated liquid cooling system. The machine is not Nvidia's GB200 NVL72, which Microsoft reportedly prefers over less dense variants. This particular rack will likely be used for testing purposes (of both the Nvidia Blackwell GPUs and the liquid cooling system), and in the coming months Microsoft will deploy Blackwell-based servers for commercial workloads.

It's expected that a GB200 NVL72 machine with 72 B200 graphics processors will consume and dissipate around 120 kW of power, making liquid cooling mandatory for such machines. As a result, it makes sense for Microsoft to test its own liquid cooling solution before deploying Blackwell-based cabinets.
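As a rough back-of-the-envelope check, the ~120 kW figure can be spread across the rack's 72 GPUs. This is only a sketch: it assumes the whole rack budget (which also covers CPUs, switches, and networking) is divided evenly, so the result is an all-in share per GPU slot rather than a single GPU's own power draw.

```python
# Back-of-the-envelope estimate: rack power budget divided evenly across GPUs.
# Assumption: the ~120 kW figure covers the whole NVL72 rack, not just the GPUs.
RACK_POWER_KW = 120.0
GPUS_PER_RACK = 72


def power_per_gpu_kw(rack_power_kw: float, gpus: int) -> float:
    """Return the all-in rack power share attributable to each GPU slot, in kW."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return rack_power_kw / gpus


share = power_per_gpu_kw(RACK_POWER_KW, GPUS_PER_RACK)
print(f"~{share:.2f} kW per GPU slot")  # ~1.67 kW per GPU slot
```

A share of roughly 1.7 kW per GPU slot illustrates why racks this dense are expected to rely on liquid cooling.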

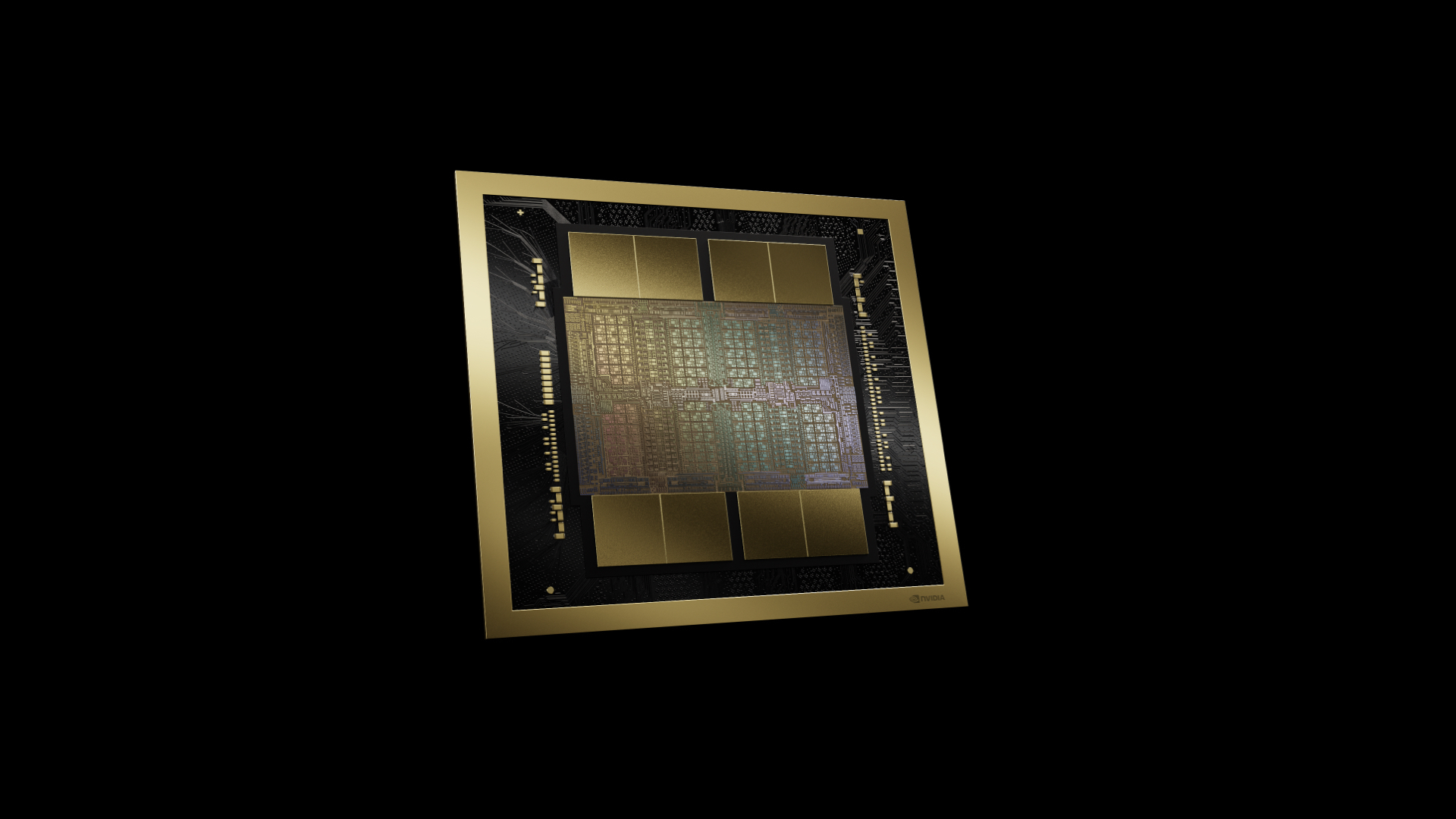

Nvidia's B200 GPU offers roughly 2.3 times higher FP8/INT8 performance than the H100 processor (4,500 TFLOPS/TOPS vs. 1,980 TFLOPS/TOPS). With the FP4 data format, Nvidia's B200 offers a whopping 9 PFLOPS of performance, opening the door to training extremely sophisticated LLMs that could enable new usage models for AI in general.
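The speedup ratios follow directly from the peak figures quoted above. A minimal sketch using only those numbers (peak throughput as stated; real-world speedups also depend on sparsity, memory bandwidth, and software, none of which is modeled here):

```python
# Peak throughput figures as quoted in the article, in TFLOPS/TOPS.
B200_FP8 = 4_500
H100_FP8 = 1_980
B200_FP4 = 9_000  # 9 PFLOPS expressed in TFLOPS

# Generational gain at the same precision.
fp8_speedup = B200_FP8 / H100_FP8
# Gain from dropping B200 from FP8 to FP4.
fp4_vs_fp8 = B200_FP4 / B200_FP8

print(f"B200 vs H100 at FP8/INT8: {fp8_speedup:.2f}x")  # 2.27x
print(f"B200 FP4 vs its own FP8: {fp4_vs_fp8:.1f}x")    # 2.0x
```

The FP4 figure is exactly twice the FP8 figure, consistent with halving the data width per operation.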

"Our long-standing partnership with NVIDIA and deep innovation continues to lead the industry, powering the most sophisticated AI workloads," said Satya Nadella, chief executive of Microsoft, in another X post.

Microsoft is expected to share more details about its Blackwell-based machines, and its AI projects in general, at its annual Ignite conference, which will take place in Chicago from November 18 to November 22, 2024. Wide-scale deployment of Blackwell servers is expected to ramp up in late 2024 or early 2025.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.
