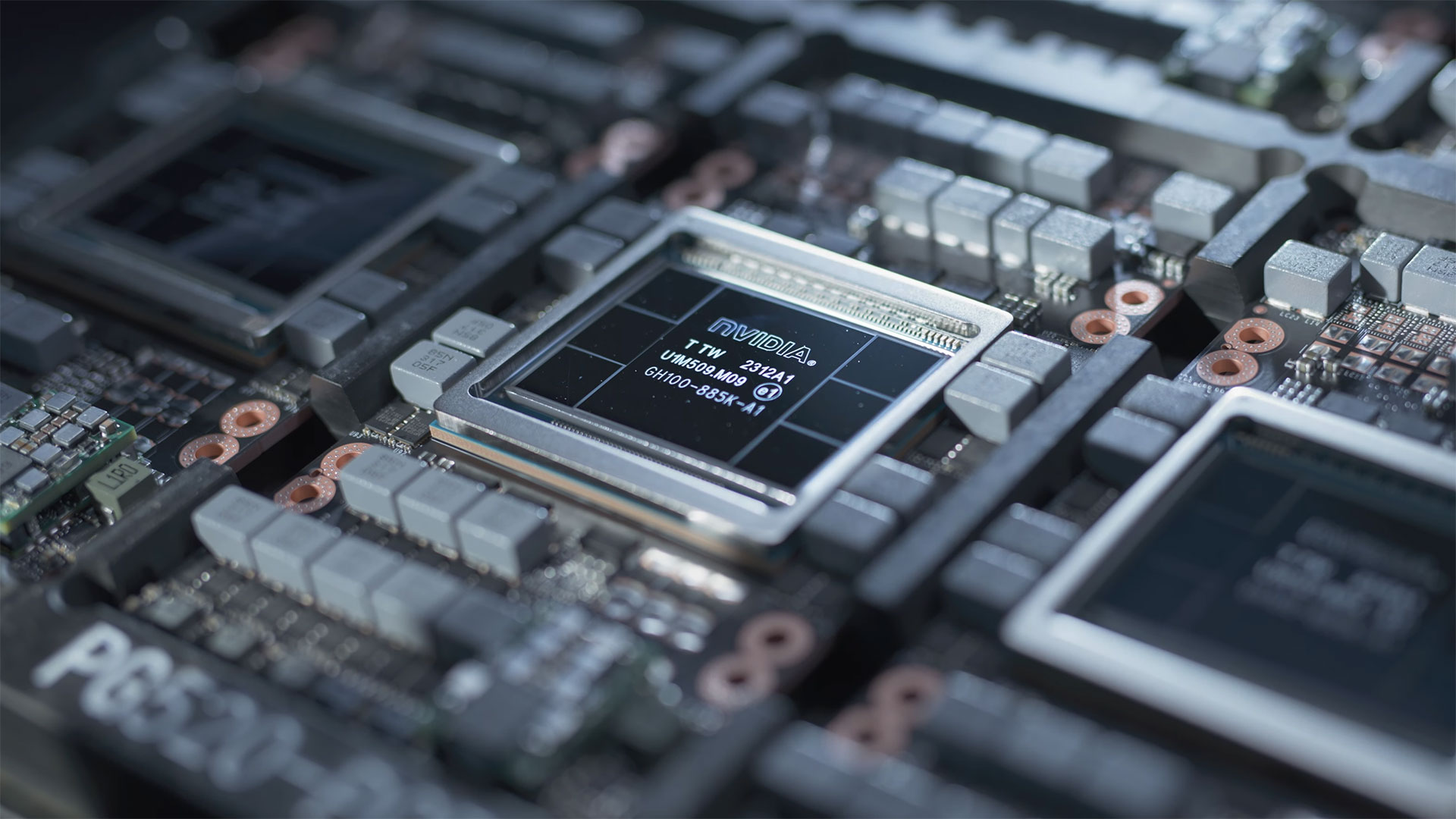

Buyers of Nvidia's highest-end H100 AI GPU are reportedly reselling them as supply issues ease

Nvidia's H100 processors are easier to get and rent.

Evidence is mounting that lead times for Nvidia's H100 GPUs, commonly used in artificial intelligence (AI) and high-performance computing (HPC) applications, have shrunk significantly from 8-11 months to just 3-4 months. As a result, some companies that had bought large quantities of H100 80GB processors are now trying to offload them. It is also now much easier to rent H100s from large cloud providers like Amazon Web Services, Google Cloud, and Microsoft Azure. Meanwhile, companies developing their own large language models (LLMs) still face supply challenges.

The Information reports that some companies are reselling their H100 GPUs or reducing orders because the chips are less scarce and unused inventory is expensive to maintain. This marks a significant shift from the previous year, when obtaining Nvidia's Hopper GPUs was a major challenge. Despite improved chip availability and significantly shorter lead times, demand for AI chips continues to outstrip supply, particularly for companies training their own LLMs, such as OpenAI, the report says.

The easing of the AI processor shortage is partly due to cloud service providers (CSPs) like AWS making it easier to rent Nvidia's H100 GPUs. For example, AWS has introduced a new service allowing customers to schedule GPU rentals for shorter periods, addressing previous issues with availability and location of chips. This has led to a reduction in demand and wait times for AI chips, the report claims.

Despite the overall improvement in H100 availability, companies developing their own LLMs continue to struggle with supply constraints, largely because they need tens or even hundreds of thousands of GPUs. Accessing the large GPU clusters necessary for training LLMs remains a challenge, with some companies facing delays of several months to receive the processors or capacity they need. As a result, prices of Nvidia's H100 and other processors have not fallen, and the company continues to enjoy high profit margins.

The increased availability of Nvidia's AI processors has also led to a shift in buyer behavior. Companies are becoming more price-conscious and selective in their purchases or rentals, looking for smaller GPU clusters and focusing on the economic viability of their businesses.

The AI sector's growth is no longer as hampered by chip supply constraints as it was last year. Alternatives to Nvidia's processors, such as those from AMD or AWS, are gaining performance and software support. This, combined with more cautious spending on AI processors, could lead to a more balanced market.

Meanwhile, demand for AI chips remains strong, and as LLMs get larger, more compute performance is needed. That is why OpenAI's Sam Altman is reportedly trying to raise substantial capital to build additional fabs to produce AI processors.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.


Li Ken-un

Admin said: Some AI startups offload Nvidia H100 amid supply situation ease

Definitely not to eBay I presume. 🤔 And supposing it were to end up on eBay, probably nigh unaffordable for us peons.