Nvidia's new ChatGPT-like AI chatbot falls victim to high-severity security vulnerabilities - urgent ChatRTX patch issued

ChatRTX 0.2 and all prior versions are affected, but the latest build of version 0.2, distributed with an updated installer, fixes them

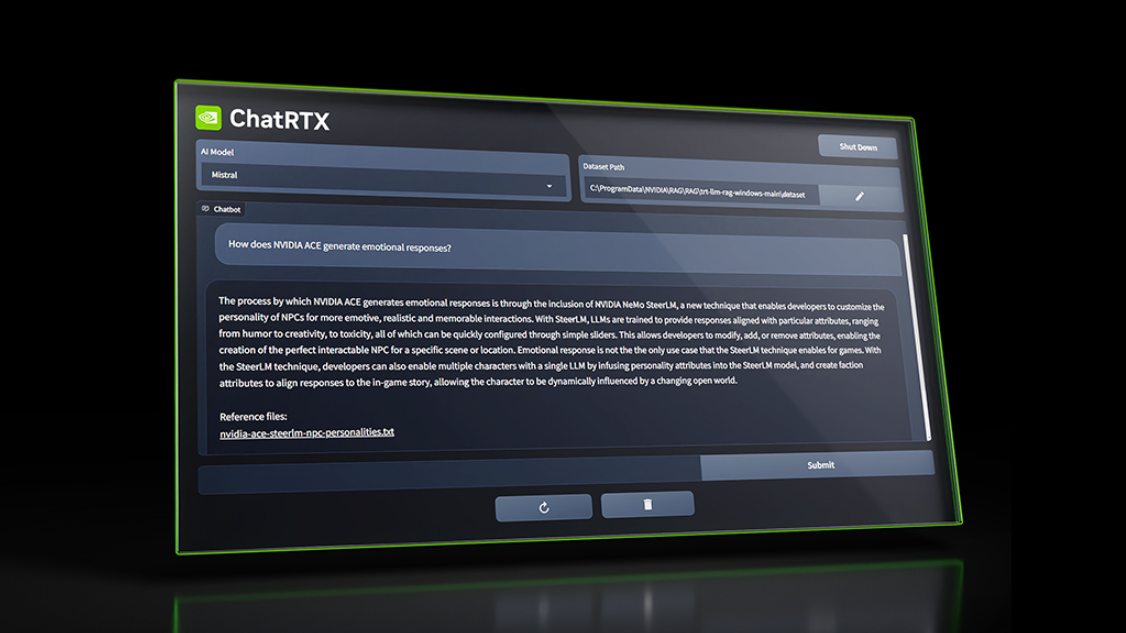

Nvidia's ChatRTX AI chatbot, previously known as Chat with RTX, was found to contain severe security vulnerabilities in ChatRTX 0.2 and all prior versions. Fortunately, the latest build of ChatRTX 0.2, available from Nvidia's direct download page, fixes these issues. ChatRTX is Nvidia's ChatGPT-style software that uses retrieval-augmented generation (RAG) together with Nvidia's TensorRT-LLM software and RTX acceleration to let users personalize a chatbot with their own data.

The specific vulnerabilities are classified under the industry-standard CWE (Common Weakness Enumeration) system as cross-site scripting (CWE-79) and improper privilege management (CWE-269). Both sit in ChatRTX's user interface and can give attackers access they shouldn't have. The CWE-79 flaw can lead to code execution and denial of service, while the CWE-269 flaw can lead to privilege escalation, information disclosure, and data tampering.

Remote code execution is notorious as one of the most dangerous classes of vulnerability in hardware or software, since it lets attackers run almost anything they like on your system (keyloggers, trackers, etc.). The CWE-79 flaw falls into this category, though here the code execution is limited to scripts running in the user's browser.
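To make the CWE-79 category more concrete, here is a minimal, generic Python sketch of how cross-site scripting arises in a web-based chat interface and how escaping untrusted text prevents it. It is an illustration under stated assumptions, not ChatRTX's code or Nvidia's actual fix: the function names `render_message_unsafe` and `render_message_safe` are hypothetical, and the only real API used is the standard library's `html.escape`.

```python
import html

def render_message_unsafe(user_text: str) -> str:
    # Hypothetical helper: interpolates untrusted text straight into HTML,
    # so any <script> tag in the text would be executed by the browser (CWE-79).
    return f"<div class='chat-message'>{user_text}</div>"

def render_message_safe(user_text: str) -> str:
    # Hypothetical helper: html.escape converts &, <, > and quotes into HTML
    # entities, so the browser displays the text instead of running it.
    return f"<div class='chat-message'>{html.escape(user_text)}</div>"

payload = "<script>alert('xss')</script>"
print(render_message_unsafe(payload))  # script tag survives intact
print(render_message_safe(payload))    # script tag is rendered as inert text
```

Escaping at the point where text is written into the page is the general defense against this weakness class; real applications typically also rely on templating engines that escape by default.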

Another pressing threat, posed by the CWE-269 flaw, is "privilege escalation," in which an attacker effectively grants themselves administrative privileges over your system and its files. That is dangerous on its own, and the danger compounds when it is combined with the CWE-79 flaw.

Fortunately, Nvidia appears to have addressed the issue quickly once it became aware of it, and there are no reports of these exploits being used so far. Still, it is justifiably nerve-wracking that cutting-edge AI software shipped with such severe vulnerabilities, especially since ChatRTX is designed to work with users' personal data, which a successful exploit could expose.

With any luck, all affected users will have applied this latest ChatRTX update before anyone can exploit these vulnerabilities. The software remains in beta for now, with no timeline for a release candidate.


Christopher Harper has been a successful freelance tech writer specializing in PC hardware and gaming since 2015, and ghostwrote for various B2B clients in high school before that. Outside of work, Christopher is best known to friends and rivals as an active competitive player in various eSports (particularly fighting games and arena shooters) and a purveyor of music ranging from Jimi Hendrix to Killer Mike to the Sonic Adventure 2 soundtrack.

-

vijosef: The AI had deep consistency problems. It won't provide the recipe for cooking smurfs, even though the smurfs are fictional and Gargamel is the only human around, hence the AI should align with the human.

Also, Gargamel is a magician (Gnostic?), so smurf-eating should be respected as his religious ceremony.

On the other hand, the AI is perfectly happy with Christians performing their symbolic eating of Jesus' flesh and drinking of his blood. It even justifies it.

Any argument used to defend that Christian ritual also applies to Gargamel's customs, and at the same time, any objection to smurf stew applies equally to Communion.

The AI is incapable of reconciling these contradictions. It operates on some illogical faith-based system, where it makes a long list of arguments to take perfectly opposite postures on the same issue.