Google Looks To Solve VR Video Quality Issues With Equi-Angular Cubemaps (EAC)

VR video is one of the primary attractions of virtual reality, but the technology has a glaring problem: image quality. You may think that higher resolution is the solution, but that’s not strictly the case. VR (and 360-degree) video suffers from a distortion problem, and Google is trying to solve it with a new projection technique.

The image quality issue that plagues spherical video sources is a perspective problem. A traditional 2D video records a predetermined frame and is played back on a display of the same shape and perspective. VR/360-degree video doesn’t offer that luxury.

Although you can choose which perspective you wish to see, your view always has a fixed resolution and shape, no matter where you look. The video image itself is technically flat, which means the spherical source feed must be warped to fit a flat plane. The process is comparable to what cartographers go through when trying to map the globe to a flat surface: to fit the spherical Earth onto a flat image, the perspective must be altered.

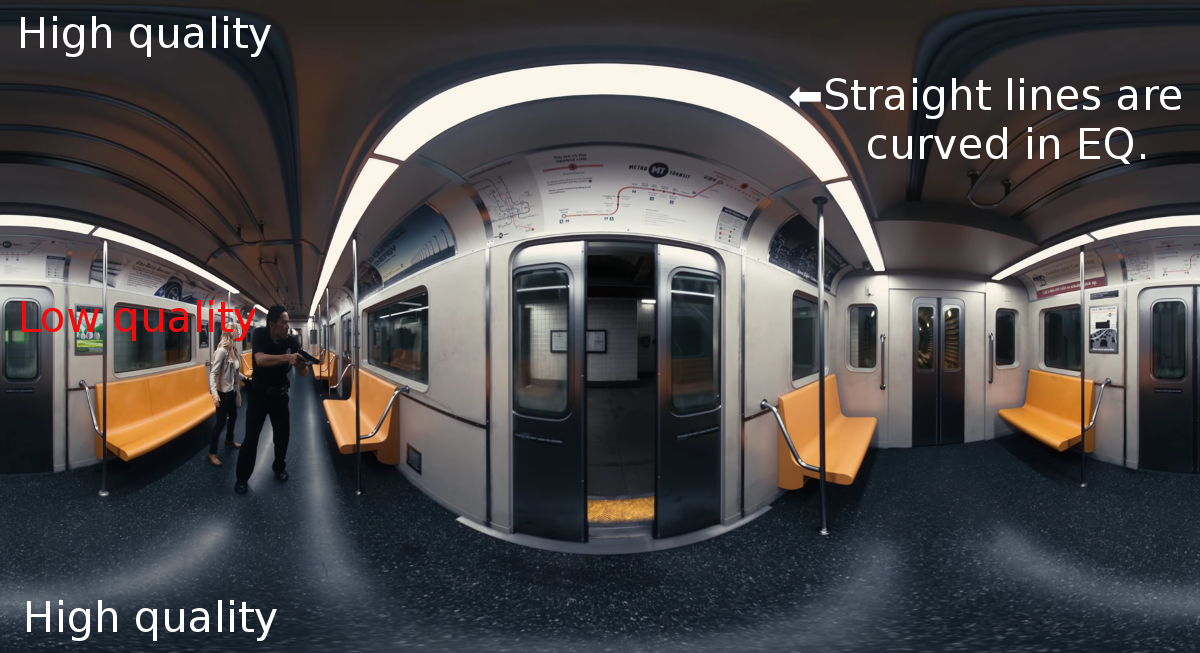

Map makers use a process called equirectangular projection to warp a sphere onto a rectangular surface, which works well enough for a paper map, but it doesn’t work with video to the same degree. Equirectangular projection stretches the polar regions across the full width of the frame, so the extremities enjoy a much higher pixel density while the equatorial region of the video (read: where your eyes are usually looking) gets relatively few pixels.

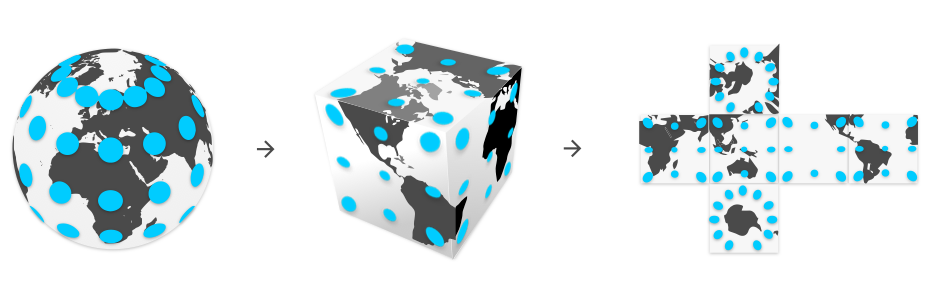

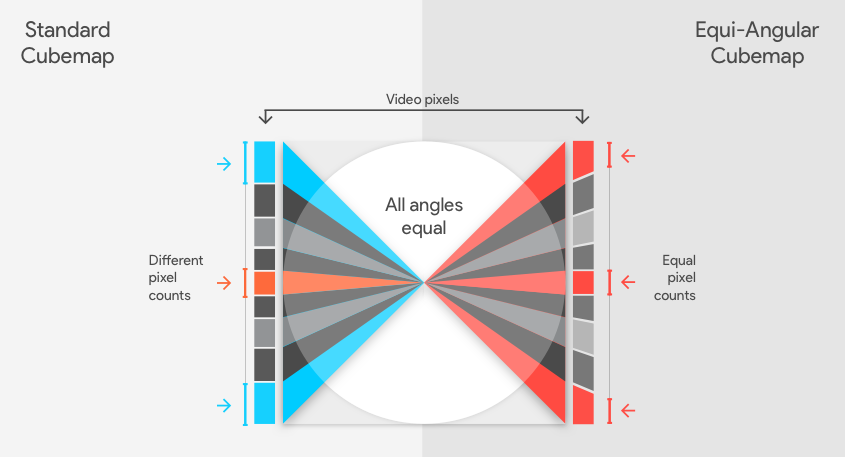

Game developers often use a technique called cube mapping instead, which offers better image quality than equirectangular projection. The process involves putting the sphere inside a cube and projecting the surface of the sphere outward onto the cube faces. Cube maps still leave the video distorted: the distance between the sphere and the cube grows as you approach the edges and corners of each face, so equal arcs on the sphere are stretched over more of the cube face there. As a result, the outer edges of each face receive more pixels than the center.

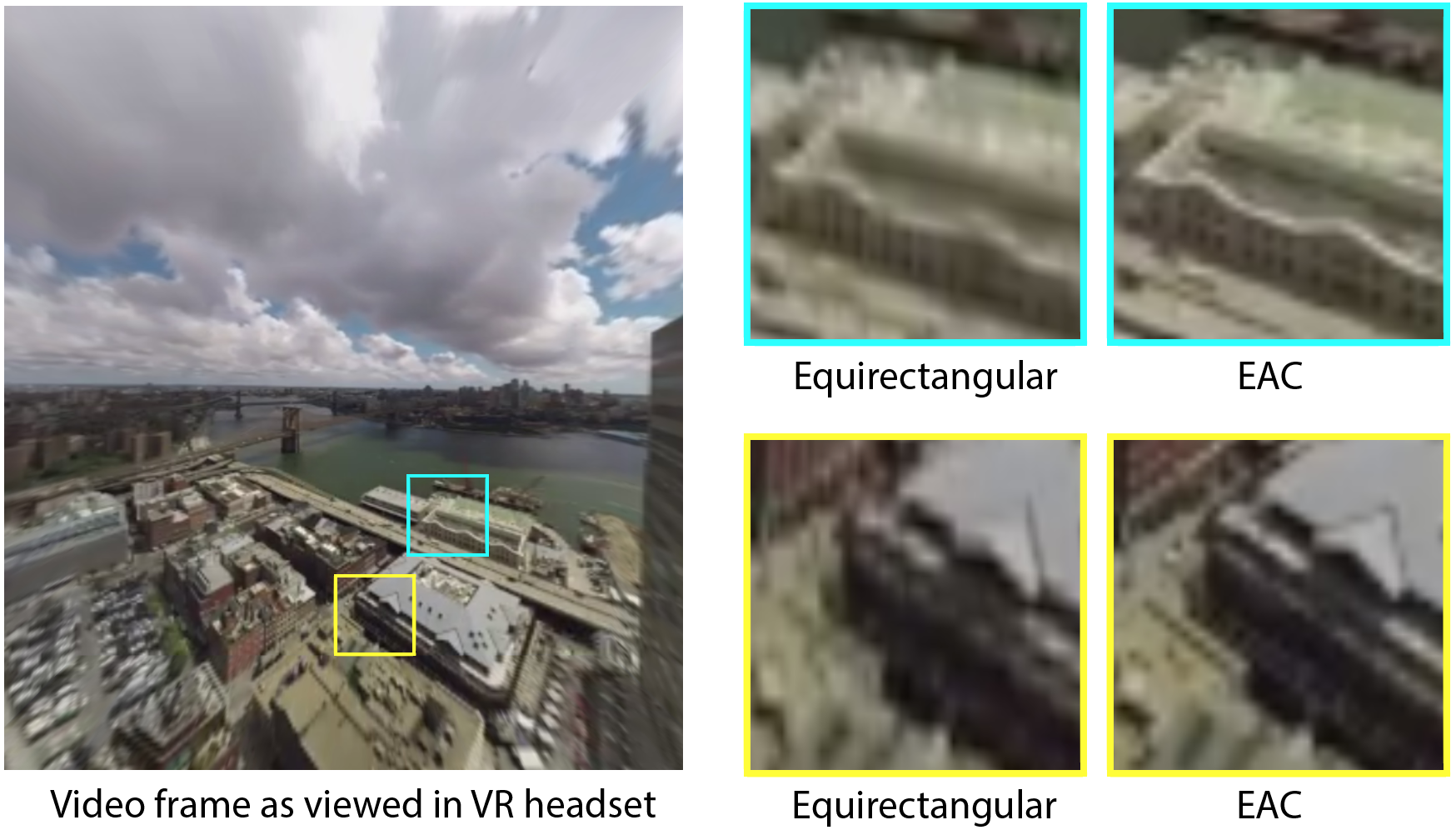
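The uneven sampling of a standard cubemap can be sketched with a little geometry. The example below assumes a cube face sitting at distance 1 from the viewer, with face coordinates `u` and `v` running from -1 to 1; both function names are hypothetical helpers written for this illustration.

```python
import math

def cube_angular_step(u: float) -> float:
    """Angle covered per unit step in u along a face's center line,
    relative to the face center. The viewing angle is atan(u), so the
    step is d(atan u)/du = 1 / (1 + u^2)."""
    return 1.0 / (1.0 + u * u)

def cube_texel_solid_angle(u: float, v: float) -> float:
    """Solid angle covered by a texel at (u, v), relative to a texel at
    the face center. For a plane at distance 1 this is (1 + u^2 + v^2)^(-3/2)."""
    return (1.0 + u * u + v * v) ** -1.5

if __name__ == "__main__":
    for u in (0.0, 0.5, 1.0):
        print(f"u={u:.1f}: angular step {cube_angular_step(u):.2f}x center")
    print(f"corner texel covers {cube_texel_solid_angle(1.0, 1.0):.3f}x "
          f"the solid angle of a center texel")
```

In this idealized model a texel at the edge of a face spans half the angle of one at the center, and a corner texel covers roughly a fifth of the solid angle, so the corners end up with several times the pixel density of the center.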

Google’s Daydream and YouTube engineers came up with a new projection technique called Equi-Angular Cubemaps (EAC) that degrades the image far less unevenly. EAC spaces the cubemap samples at equal angles, which keeps the pixel count close to even across each face and produces balanced image quality across the board.
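The article doesn't give the formula, but the equal-angle idea can be sketched by assuming the commonly described remapping `q = (4/π)·atan(p)`, where `p` is a standard cubemap face coordinate in [-1, 1] and `q` is the corresponding EAC coordinate. Treat the mapping and the function names below as assumptions for illustration rather than Google's exact implementation.

```python
import math

def cube_to_eac(p: float) -> float:
    """Map a standard cubemap face coordinate p in [-1, 1] to an
    equi-angular coordinate q in [-1, 1] (assumed mapping)."""
    return (4.0 / math.pi) * math.atan(p)

def eac_to_cube(q: float) -> float:
    """Inverse mapping: equi-angular coordinate back to a cubemap coordinate."""
    return math.tan(q * math.pi / 4.0)

def view_angle_deg_from_cube(p: float) -> float:
    """Viewing angle from the face center for a standard cubemap coordinate."""
    return math.degrees(math.atan(p))

def view_angle_deg_from_eac(q: float) -> float:
    """Viewing angle from the face center for an equi-angular coordinate."""
    return view_angle_deg_from_cube(eac_to_cube(q))

if __name__ == "__main__":
    # Equal steps in the texture coordinate: the standard cubemap's angle
    # steps shrink toward the edge, while the EAC steps stay constant.
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"coord {t:.2f}: cubemap {view_angle_deg_from_cube(t):5.2f} deg, "
              f"EAC {view_angle_deg_from_eac(t):5.2f} deg")
```

Along a face's center line, equal steps in `q` correspond to equal steps in viewing angle under this mapping. Applying it independently to both face axes evens out the sampling considerably, though not perfectly in two dimensions.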

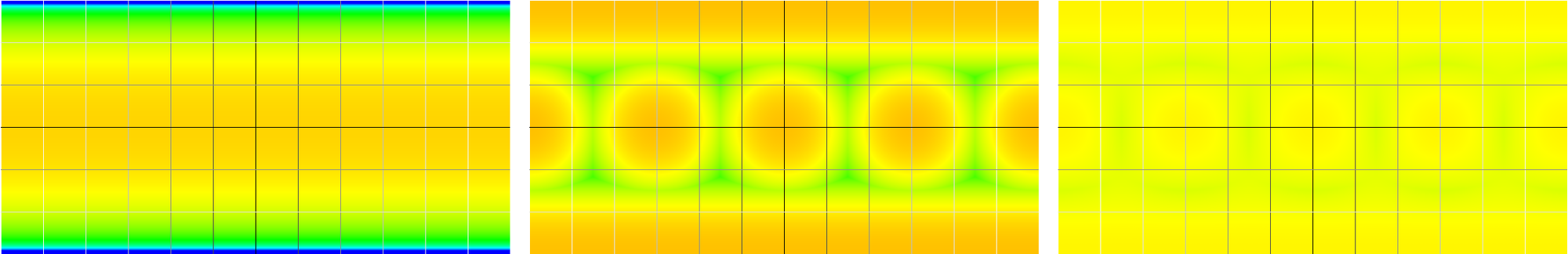

EAC isn’t a catch-all solution for spherical video image quality. Google said that “if you choose to preserve one important feature of the mapping you invariably give up something else.” EAC provides an even balance of image quality, which improves the crucial focal area of spherical video. The image above shows pixel density saturation maps for equirectangular projection (left), cube map projection (center), and Equi-Angular Cubemap projection (right). Blue shows where pixels are wasted, orange and yellow show insufficient pixel density, and green shows optimal pixel density. Google’s warping technique doesn’t waste resources by rendering extra pixels where they aren’t needed (at the extremities). The upshot is that EAC uses the available bandwidth more efficiently, which can pave the way for higher pixel density across the full image, not just in isolated locations.

Google is already putting Equi-Angular Cubemap projection to work. Spherical video playback from YouTube with EAC support is now available on Android devices, and Google said support for iOS and desktop is coming soon. If you want to know more about EAC, Google’s blog offers a deeper explanation of the technology, and the YouTube Engineering and Developers blog has additional details.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.