Deep Learning On A Stick: Movidius' 'Fathom' Neural Compute Stick (Updated)

Deep learning, a machine learning technique that allows a system to "learn" on its own, became mainstream when Google’s AlphaGo AI beat Lee Sedol, one of the world’s best Go players, in four out of five games. Deep learning is now widely considered the Next Big Thing that could significantly improve the lives of both consumers and enterprise customers in the coming years, and many companies are rushing to take advantage of it.

We recently saw Nvidia launch the Pascal-based P100 deep learning monster of a chip for large companies and research labs, and now we’re seeing Movidius launch its Fathom neural compute stick, which can give embedded devices a big deep learning boost, as well.

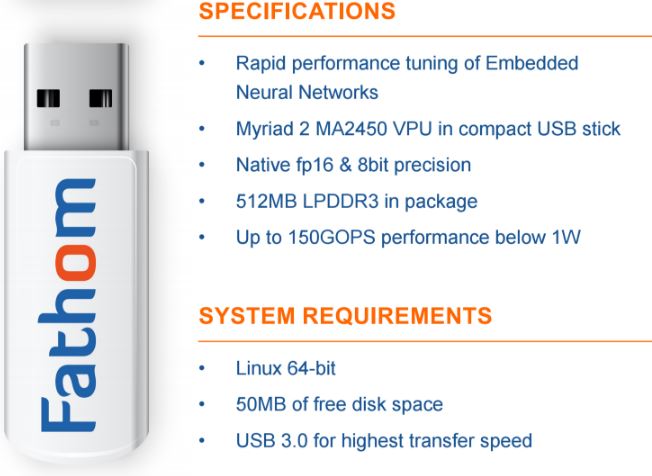

The Fathom stick comes with a Myriad 2 “vision processing unit” (VPU). The first-generation Myriad chip was used in Google’s Project Tango tablet to accelerate its computer vision capabilities. For the Myriad 2, the company moved from a 65nm process to a 28nm one as part of an effort to increase the chip’s efficiency by 20-30x.

The first 5x improvement in performance per watt over the Myriad 1 came from increasing the number of its Streaming Hybrid Architecture Vector Engine (SHAVE) vector processors from 8 to 12 and raising their clock speed from 180 MHz to 600 MHz. The other 15-25x improvement came from adding 20 hardware accelerators to the chip.
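The SHAVE count and clock figures are enough to reproduce the 5x on the throughput side. This is a back-of-envelope sketch that assumes peak vector throughput scales linearly with (number of SHAVE cores) x (clock speed); it ignores power entirely, so it checks only the performance half of the performance-per-watt claim.

```python
# Back-of-envelope check of the Myriad 1 -> Myriad 2 SHAVE upgrade.
# ASSUMPTION: peak vector throughput scales linearly with cores x clock.
MYRIAD1_SHAVES, MYRIAD1_MHZ = 8, 180
MYRIAD2_SHAVES, MYRIAD2_MHZ = 12, 600

def relative_throughput(cores_new, mhz_new, cores_old, mhz_old):
    """Ratio of aggregate core-cycles per second, new chip vs. old chip."""
    return (cores_new * mhz_new) / (cores_old * mhz_old)

speedup = relative_throughput(MYRIAD2_SHAVES, MYRIAD2_MHZ,
                              MYRIAD1_SHAVES, MYRIAD1_MHZ)
print(f"Myriad 2 vs Myriad 1 raw SHAVE throughput: {speedup:.1f}x")  # 7200 / 1440 = 5.0x
```

If power draw stayed roughly flat between the two designs (not something the article states), this throughput gain would translate directly into the quoted 5x in performance per watt.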

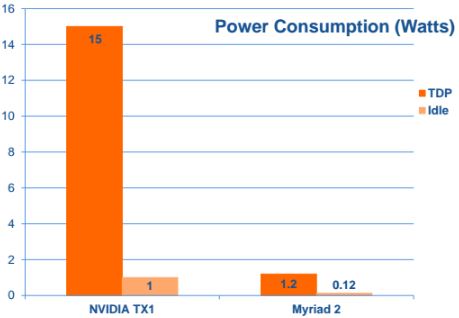

The Fathom’s performance ranges from 80 to 150 GFLOPS, depending on the neural network’s complexity and precision (8-bit and 16-bit precision are both supported). That performance requires less than 1.2W of power, roughly one-twelfth of what, for example, an Nvidia Jetson TX1 SoC needs (although Nvidia said its GPU needs less than 10W).
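To see where a figure like 12x comes from, a quick power-ratio calculation helps. This is a sketch: the 1.2W and 10W figures come from the article, while the roughly 15W draw for the whole Jetson TX1 chip is an assumption (it is the figure a reader uses in the comments), not a number the article states.

```python
# Power ratios behind the "lower power" comparison.
FATHOM_WATTS = 1.2     # Fathom's stated ceiling, from the article
TX1_GPU_WATTS = 10.0   # Nvidia's "less than 10W" GPU figure, from the article
TX1_SOC_WATTS = 15.0   # ASSUMPTION: whole-chip figure quoted by a reader, not by the article

def power_ratio(other_watts, fathom_watts=FATHOM_WATTS):
    """How many times more power the other device draws than the Fathom."""
    return other_watts / fathom_watts

print(f"Jetson TX1 SoC vs Fathom: {power_ratio(TX1_SOC_WATTS):.1f}x")  # about 12.5x
print(f"TX1 GPU alone vs Fathom:  {power_ratio(TX1_GPU_WATTS):.1f}x")  # about 8.3x
```

The second ratio lines up with the 8x cited in the update at the end of the article.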

The Fathom can be used to upgrade products that are already powered by ARM chips, such as robots, drones and surveillance cameras. For instance, the Fathom can drastically improve a surveillance camera’s analytics performance, making it easier to identify people or various objects.

All of these capabilities can now be put into a local device such as a surveillance camera, instead of relying on the cloud. This sort of feature could be especially useful for people who don’t trust a surveillance camera company to host their videos or to have access to their livestreams.


Cloud-backed machine intelligence will arrive first because it’s still much more capable at analyzing complex data. However, for simpler devices or services that don’t need to analyze especially complex data, local deep learning chips could suffice, and in time they’ll only get more powerful.

Movidius’ software framework comes with its own optimized vision algorithms designed to run at low power, as well as various solutions for 3D depth, object tracking, and natural user interfaces. Customers can also customize the software further to fit their needs. The Fathom compute stick supports two of the major deep learning frameworks, Caffe and TensorFlow, the latter of which Google released as open source last year.

“Deep learning has tremendous potential -- it's exciting to see this kind of intelligence working directly in the low-power mobile environment of consumer devices,” said Pete Warden, lead for Google's TensorFlow mobile team. “With TensorFlow supported from the outset, Fathom goes a long way towards helping tune and run these complex neural networks inside devices,” he added.

Movidius will offer 1,000 free Fathom neural compute sticks to developers who want to experiment with them and who contact the company directly. Afterwards, the stick is expected to cost "under $100 per unit," according to Movidius. Fathom will be shown in public for the first time at the Embedded Vision Summit in Santa Clara, California on May 2-4.

Updated, 5/13/2016, 8:40am PT: The post was updated to specify that the Myriad 2 VPU is 12x lower power than the Jetson TX1 SoC, not 12x more efficient as previously stated. The Myriad VPU is also only 8x lower power than Nvidia's embedded GPU, which can achieve up to 1 teraFLOPS performance inside the Jetson TX1.
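The difference between "lower power" and "more efficient" becomes concrete once throughput is divided by power. This is a rough sketch on peak figures only: it takes the Fathom's best case (150 GFLOPS at 1.2W), reads the TX1's "up to 1 teraFLOPS" as its 1024 GFLOPS FP16 peak (an assumption), and tries both Nvidia's 10W GPU figure and an assumed 15W for the whole chip.

```python
# Peak efficiency comparison in GFLOPS per watt.
FATHOM_GFLOPS, FATHOM_WATTS = 150.0, 1.2  # top of the article's range, power ceiling
TX1_GFLOPS = 1024.0                       # ASSUMPTION: "up to 1 teraFLOPS" taken as the FP16 peak

def gflops_per_watt(gflops, watts):
    """Peak throughput divided by power draw."""
    return gflops / watts

fathom_eff = gflops_per_watt(FATHOM_GFLOPS, FATHOM_WATTS)  # 125 GFLOPS/W

# 10W is Nvidia's GPU figure; 15W for the whole chip is an assumption.
for label, tx1_watts in [("TX1 GPU, 10W", 10.0), ("TX1 chip, 15W (assumed)", 15.0)]:
    tx1_eff = gflops_per_watt(TX1_GFLOPS, tx1_watts)
    print(f"{label}: {tx1_eff:.1f} GFLOPS/W, "
          f"Fathom advantage {fathom_eff / tx1_eff:.2f}x")
# Prints 102.4 GFLOPS/W (1.22x) for the 10W case and 68.3 GFLOPS/W (1.83x) for 15W.
```

Under either assumption the Fathom comes out ahead on peak GFLOPS per watt, but by roughly 1.2-1.8x rather than 12x; real workloads are unlikely to run either chip at its peak.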

Lucian Armasu is a Contributing Writer for Tom's Hardware. You can follow him at @lucian_armasu.



jakjawagon, quoting the article: “The Fathom’s performance ranges from 80 to 150 GFLOPS, depending on the neural network’s complexity and precision (8-bit and 16-bit precision is supported). That performance requires less than 1.2W of power, which is more than 12 times as efficient as, for example, an Nvidia Tegra X1 processor.”

OK, let's maths this out. The linked article says the Tegra X1 peaks at 1024 GFLOPS (FP16). At 15 watts, that's ~68.3 GFLOPS per watt. The Fathom gets 150 GFLOPS at 1.2 watts, making 125 GFLOPS per watt. Yes, quite a bit more efficient than the Tegra X1, but nowhere near the 12 times that the article says.

spladam: Well, I for one welcome our new overlord AI / App. Care to play a game of tic-tac-toe?

Co BIY, quoting spladam: “Care to play a game of tic-tac-toe?”

The good outcome depicted in WarGames depended on the "game" being unwinnable and the computer being ruthlessly rational. Once we give the AI some ability to bluff for advantage and to take even reasonable risks, along with a more normal scenario that includes some possibility of winning, the fictional future could be much more dangerous.