Nvidia's Deep Learning Updates Build Bigger Neural Nets Faster: DIGITS 2, cuDNN 3, CUDA 7.5

At a machine learning conference in France, Nvidia announced updates to its Deep Learning software.

If there's one company that puts a heap of effort into Deep Learning, it's Nvidia, and today in Lille, France, at ICML (International Conference on Machine Learning), the GPU maker announced three updates: the new Nvidia DIGITS 2 system, Nvidia cuDNN 3, and CUDA 7.5.

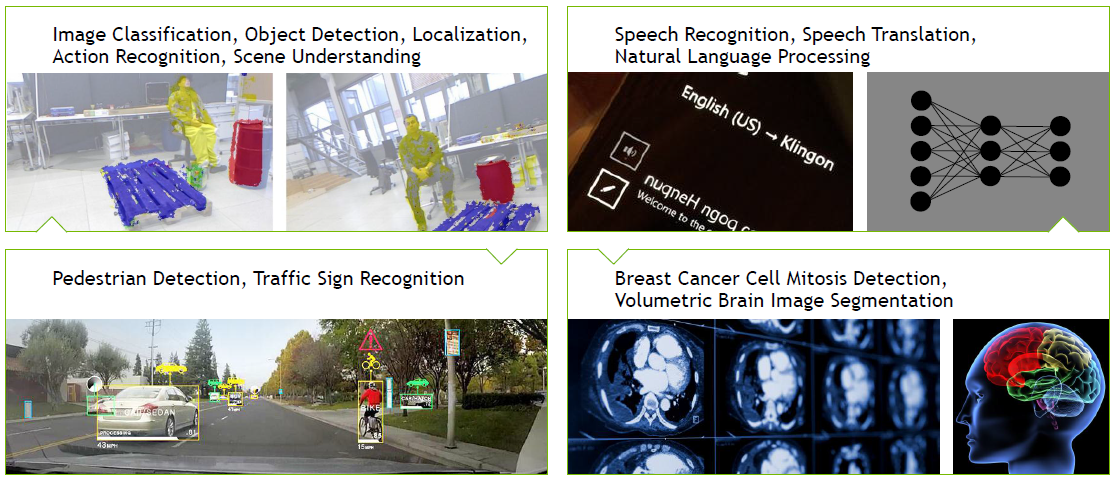

Deep Learning is a technique in which computers build deep neural networks from the data they are given. Those networks can then be used for complicated tasks such as image recognition, object detection and classification, speech recognition, translation, and various medical applications.

You may not realize it, but Deep Learning really is all around us already. Google uses it quite widely, Nvidia applied it in its Drive PX auto-pilot car computer, and medical institutions are starting to use it to detect tumors with much higher accuracy than doctors can.

Deep Learning has been able to explode the way it has because of the huge amounts of compute power available to us with GPUs. Building DNNs (Deep Neural Networks) is a massively parallel task that takes lots of compute power. For example, building a simple neural net to recognize everyday images (such as those in the ImageNet challenge) can take days, if not weeks, depending on the hardware used. Not every neural net ends up working, and rebuilding one with slightly different parameters to increase its accuracy is a lengthy process, so it is essential that the software be highly optimized to use the hardware as effectively as possible.

DIGITS 2

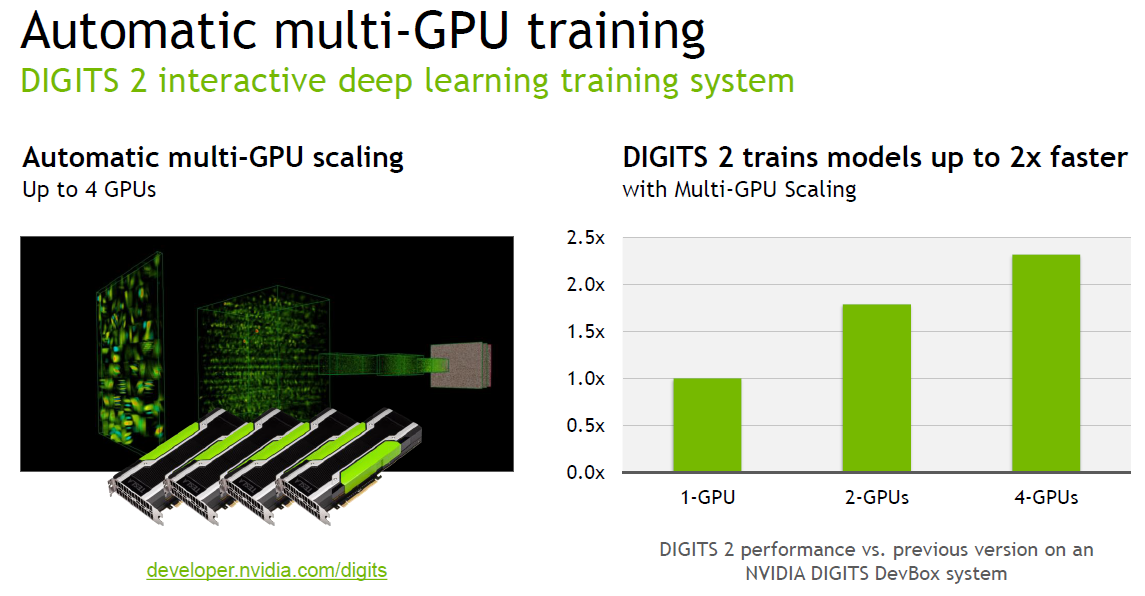

For that reason, DIGITS 2's biggest update is that it can now build a single neural net using up to four GPUs; in the past, you could only use one per neural net. When using four GPUs, the training process for a neural net is up to 2x faster than on a single GPU. Of course, you may then say: build four different neural nets and see which one is the best. But it's not quite that simple.

A researcher may begin by building four different neural nets with different parameters, all based on the same training data, and figure out which one is best. From there, the researcher refines the parameters until the ideal setup is found, at which point only a single neural net needs to be trained.

Nvidia's DIGITS is a software package with a web-based interface for Deep Learning, where scientists and researchers can start, monitor, and train DNNs. It comes with various Deep Learning libraries, making it a complete package. It allows researchers to focus on the results, rather than having to spend heaps of time figuring out how to install various libraries and how to use them.

cuDNN 3

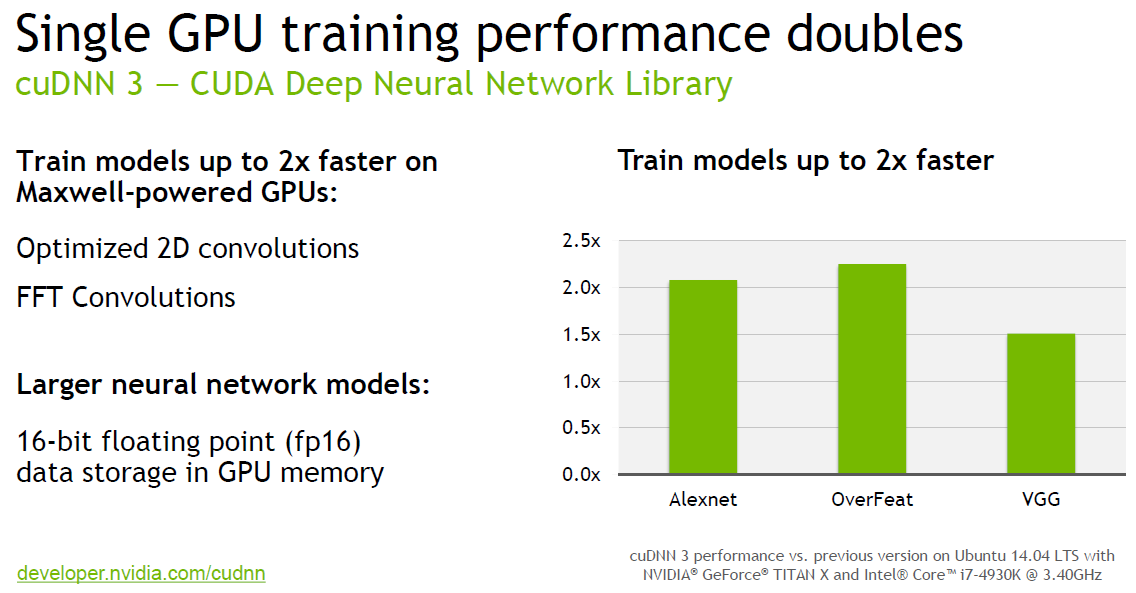

cuDNN 3 is Nvidia's Deep Learning library, and as you may have guessed, it is CUDA-based. Compared to the previous version, it can train DNNs up to 2x faster on identical hardware platforms with Maxwell GPUs. Nvidia achieved this improvement by optimizing the 2D convolution and FFT convolution processes.

cuDNN 3 also has support for 16-bit floating point data storage in the GPU memory, which enables larger neural networks. In the past, all data points were 32 bits in size, but not a lot of vector data needs the full accuracy of 32-bit data. Of course, some accuracy is lost in the process for each vector point, but the result of that tradeoff is that the GPU's memory has room for more vectors, which in turn can increase the accuracy of the entire model.
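To make that storage tradeoff concrete, here is a minimal Python sketch that uses only the standard library's `struct` module, whose `e` format is IEEE 754 half precision. It does not call cuDNN, and the weight values and the `roundtrip` helper are made up for illustration.

```python
import struct

def roundtrip(value, fmt):
    """Pack a Python float into the given IEEE 754 format and unpack it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

# Hypothetical weights, chosen only to show the effect.
weights = [0.1, -0.7312, 3.14159, 0.0004]

# Size of the same values stored as 32-bit and as 16-bit floats.
bytes_fp32 = len(struct.pack(f"<{len(weights)}f", *weights))
bytes_fp16 = len(struct.pack(f"<{len(weights)}e", *weights))
print(f"32-bit storage: {bytes_fp32} bytes, 16-bit storage: {bytes_fp16} bytes")

# Per-value rounding error introduced by the 16-bit format.
for w in weights:
    half = roundtrip(w, "<e")
    print(f"{w!r} stored as 16-bit -> {half!r} (error {abs(half - w):.2e})")
```

The 16-bit copy takes half the bytes, so the same amount of memory holds twice as many values, at the cost of a small rounding error on each one.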

CUDA 7.5

Both of the above pieces of software are based on the new CUDA 7.5 toolkit. cuDNN 3 supports 16-bit floating point data because CUDA 7.5 now supports it. Most notably, it offers support for mixed floating point data, meaning that 16-bit vector data can be used where accuracy is less essential, and 32-bit data points will be used when higher accuracy is required.
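One common way to mix precisions, assumed here for illustration rather than taken from Nvidia's announcement, is to store values in 16 bits but keep running sums in 32 bits. The sketch below emulates both cases on the CPU with Python's `struct` module; the helper names and the input data are hypothetical, and no CUDA API is involved.

```python
import struct

def to_half(x):
    """Round a Python float to the nearest IEEE 754 16-bit value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_single(x):
    """Round a Python float to the nearest IEEE 754 32-bit value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def accumulate(values, round_fn):
    """Sum values, rounding the running total after every addition."""
    total = 0.0
    for v in values:
        total = round_fn(total + v)
    return total

# Hypothetical data: 10,000 small values, all stored in 16 bits.
stored = [to_half(0.001)] * 10_000

sum_fp16 = accumulate(stored, to_half)    # 16-bit storage, 16-bit accumulator
sum_fp32 = accumulate(stored, to_single)  # 16-bit storage, 32-bit accumulator

print(f"16-bit accumulator: {sum_fp16}")
print(f"32-bit accumulator: {sum_fp32}")
```

On this data the 16-bit running sum stalls once the total is large enough that adding 0.001 no longer changes the nearest representable value, while the 32-bit sum stays close to the expected 10. That is the kind of step where the extra accuracy matters.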

Additional changes include new cuSPARSE GEMVI routines, along with instruction-level profiling, which can help you figure out which part of your code is limiting GPU performance.

The Preview Release version of the DIGITS 2 software is available for free to registered CUDA developers, with final versions coming soon. More information is available here.

Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and PC builds.

photonboy: Deep learning is going to be very significant in the future. For example, in the field of medicine there will be virtual testing done on virtually diseased cells to find cures.

This will be done in part using Evolutionary Algorithms (simple molecules are weighted according to the survival rate of the cell, then the most successful are weighted higher for processing time.)