Nvidia RTXGI: Ray Tracing Global Illumination At Any Frame Rate

You Could Run Ray Tracing On Your PS4 or Xbox One

Nvidia has opened the doors on its new RTXGI SDK, which allows ray-traced global illumination on all GPU platforms and lets developers tune it to whatever performance target they require.

Global illumination is how games light their scenes; it's what lets you see anything in a video game in a realistic way. Typically, developers "pre-bake" the lighting: they run the lighting calculations ahead of time and then "bake" the results into the textures. Ray tracing, by contrast, allows accurate lighting in real time, in game. The only problem is that it's usually reserved for cards with ray-tracing acceleration and runs very poorly on standard GPUs.

RTXGI's architecture allows it to run asynchronously to the game engine itself. What this means is it can update real time lighting calculations at a different frame rate than the game itself.

This allows game developers a ton of opportunities to tweak the game's performance numbers purely by changing how many times global illumination gets updated. (Say you want to run your video game at 60 FPS, but only update your ray traced GI at 30 FPS.)
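To make the 60 FPS / 30 FPS example concrete, here is a minimal Python sketch of the scheduling idea only. It is not the RTXGI SDK's API: the function name `gi_versions_per_frame` and its parameters are hypothetical, and it simply counts which lighting update each rendered frame would end up reusing when the two rates are decoupled.

```python
from fractions import Fraction


def gi_versions_per_frame(render_fps, gi_fps, frames):
    """Return, for each rendered frame, the index of the GI update it reuses.

    Hypothetical illustration of decoupled update rates; not RTXGI code.
    """
    frame_time = Fraction(1, render_fps)   # time between rendered frames
    gi_interval = Fraction(1, gi_fps)      # time between lighting updates
    next_gi_time = Fraction(0)             # when the next lighting update is due
    gi_version = -1                        # no lighting computed yet
    used = []
    for frame in range(frames):
        now = frame * frame_time
        # Refresh the lighting only when its own interval has elapsed
        # (at most one refresh per rendered frame in this sketch).
        if now >= next_gi_time:
            gi_version += 1
            next_gi_time += gi_interval
        # Every frame is still rendered, using the latest available lighting.
        used.append(gi_version)
    return used


print(gi_versions_per_frame(60, 30, 6))  # [0, 0, 1, 1, 2, 2]
```

With a 60 FPS game and a 30 FPS GI update, each lighting result is reused for two consecutive frames, so the lighting a frame sees can be up to 1/30 of a second old.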

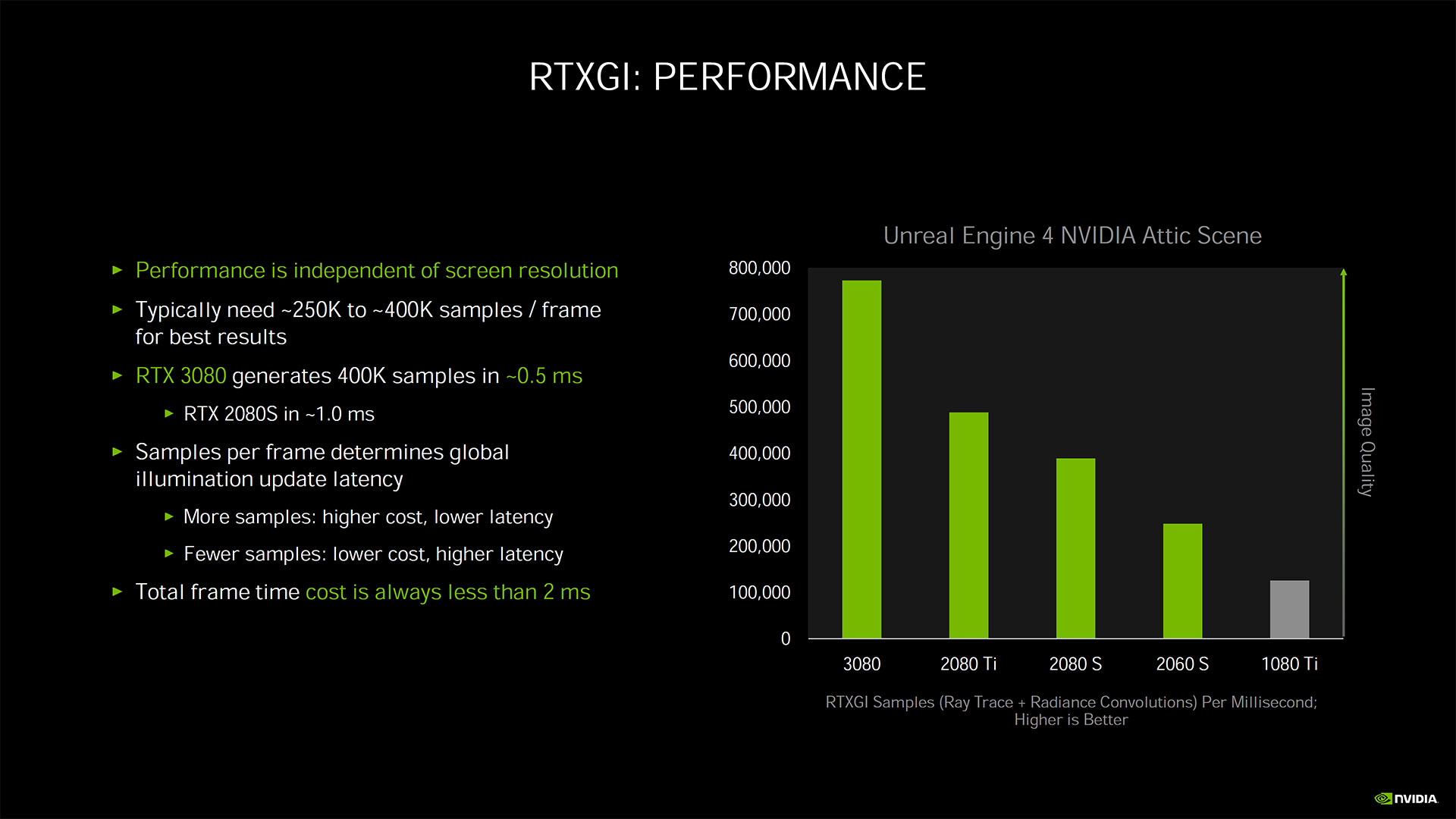

Nvidia showed an impressive RTX 3080 RTXGI performance chart with the 3080 almost doubling the RTX 2080 Ti's sample rate in RTXGI workloads. They even show the GTX 1080 Ti running RTXGI, demonstrating that the SDK indeed can run on any GPU platform.

Because of this, you could run RTXGI on anything from a Radeon GPU to an Intel IGP or even an Xbox One or PS4, if a game developer ever decided to use this tech in a console game. But don't expect it to look as good as on a Turing or Ampere GPU.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

derekullo

How would games look as the difference in actual fps and ray traced GI fps grows?

I'm picturing lazy shadows that don't quite match the movement of what made them.

spentshells

derekullo said: "How would games look as the difference in actual fps and ray traced GI fps grows? I'm picturing lazy shadows that don't quite match the movement of what made them."

I'm not so sure, this sounds more like removing the calculations not required, as shadows and/or rays don't have fluid movement in the first place.

rapidware

spentshells said: "I'm not so sure, this sounds more like removing the calculations not required, as shadows and/or rays don't have fluid movement in the first place."

I'm with derek on this. Shadows should be as fluid as the player. However, given that I hardly have time to notice these shadows, I'm not surprised that halving the update rate or limiting it to 30fps was considered an option.

Chung Leong

derekullo said: "How would games look as the difference in actual fps and ray traced GI fps grows? I'm picturing lazy shadows that don't quite match the movement of what made them."

If an object is moving so fast that it visibly outpaces its shadow in 1/30 of a second, it's moving too fast for you to see clearly ;). We need high FPS in games because our perspective of the game world can change rapidly, not because the game world itself changes rapidly.

TechyInAZ

Yeah, I'm not too sure on the exact details, but from what I gathered from the RTXGI info on Nvidia's site, it seems like the ray tracing calculations are "saved" into data that the game engine can use multiple times. Much like with pre-baked lighting, the game engine refers to pre-calculated lighting data. This could be the exact same thing with ray tracing.

KenkaOni

derekullo said: "How would games look as the difference in actual fps and ray traced GI fps grows? I'm picturing lazy shadows that don't quite match the movement of what made them."

Yes, in games with unlocked frame rates this could happen. For example, shadows and reflections at 30fps in a game that runs at 120fps or more could be noticeable. But in games with a locked frame rate, it could be a great addition if it's an option that the user can activate. More modest hardware could gain performance, especially first-generation RTX cards. For example, in fighting games or any other game at 60fps, a 30fps RTXGI could help a lot on modest graphics cards like the RTX 2060. One of every two frames shouldn't be that noticeable.

d0x360

Even if the game only ran at 60fps, a 30fps update rate on shadows would be highly noticeable, and it would only get worse the higher the frame rate gets. Hell, it wouldn't even look right in a 30fps game.

Also, since this is software, why would it look better on Turing or Ampere than anything else? Surely Big Navi would look better than Turing and close to Ampere.

KenkaOni

d0x360 said: "Even if the game only ran at 60fps, a 30fps update rate on shadows would be highly noticeable, and it would only get worse the higher the frame rate gets. Hell, it wouldn't even look right in a 30fps game. Also, since this is software, why would it look better on Turing or Ampere than anything else? Surely Big Navi would look better than Turing and close to Ampere."

It would look better on Turing and Ampere because those architectures have RT cores, which let them process ray tracing faster without having to use the base cores. They are a dedicated part of the hardware for those calculations, which until now not a single AMD card has. Ray tracing is possible on current hardware without RT cores, but the performance hit is too high to be viable.