The Evolution Of Mobile GPUs In Pictures

Adreno 500 (2015 - 2016)

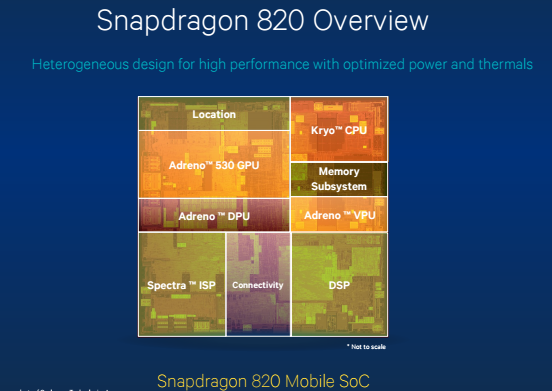

In 2015, Qualcomm announced several new Snapdragon SoCs, including the 430, 618, 620 and 820. Inside these SoCs is a new generation of Adreno GPUs: the 500 Series. Although this series is not yet available on the market, we already know its main characteristics. The Adreno 530 and 510 are compatible with OpenGL ES 3.1 + AEP, OpenCL 2.0, Vulkan and DirectX 11.2. Meanwhile, the Adreno 505 is limited to OpenGL ES 3.1 and DirectX 11.2. The Adreno 530 is also the first GPU from Qualcomm to be produced on 14 nm lithography (the Adreno 505 and 510 remain on 28 nm).

| Name | Adreno 505 | Adreno 510 | Adreno 530 |
|---|---|---|---|
| Fab | 28 nm | 28 nm | 14 nm |
| Clock Speed | ? | ? | 650 MHz |
| ALUs | ? | ? | 256 |
| API | OpenGL ES 3.1, OpenCL 2.0, DirectX 11.2 | OpenGL ES 3.2, OpenCL 2.0, DirectX 12 (feature level 12_1) | OpenGL ES 3.2, OpenCL 2.0, DirectX 12 (feature level 12_1) |
| Fillrate | ? | ? | 8/9.6 GPixels/s |
| GFLOPS | ? | ? | 550-600 |

The ARM Mali Series

In 2006, ARM acquired Norway-based GPU developer Falanx. The company became ARM's Norway branch, and develops ARM's GPU technology: Mali. ARM licenses the Mali GPU to other companies to be incorporated into an SoC alongside ARM's CPU cores. Mali GPUs have Nordic code names (Utgard, Midgard) and have been growing increasingly popular with SoC developers, especially manufacturers that offer entry-level SoCs. This is because the companies can license essentially complete, ready-to-use SoCs from ARM and rush into production.

Mali-400 & Mali-450 (2008)

The Mali-400 and Mali-450 (code-named Utgard) are ARM's oldest GPUs, and are still sold as entry-level products. Similar to the PowerVR 5XT series, they can be used in multicore configurations. The Mali-400 is typically found in the 400 MP, 400 MP2 or 400 MP4 designs. The Mali-450 can contain up to eight cores, twice as many as the Mali-400. The Mali-400 MP4 is used in some Samsung Exynos SoCs as well as devices like the Samsung Galaxy S3 and the Galaxy Note. The Mali-450 is rarer, and dedicated to the entry-level market. The Mali-400 and Mali-450 are designed for games, and are compatible with OpenGL ES 2.0.

| Name | Mali-400MP | Mali-450MP |
|---|---|---|
| Fab | 40/28 nm | 40/28 nm |
| Clock Speed | 500 MHz | 650 MHz |
| Cores | 1-4 | 1-8 |
| API | OpenGL ES 2.0, OpenVG 1.1 | OpenGL ES 2.0, OpenVG 1.1 |
| Fillrate | 500 MPixels/s | 650 MPixels/s |
| GFLOPS per core | 5 | 14.6 |
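The fillrate figures in the table line up with the clock speeds (500 MHz and 500 MPixels/s, 650 MHz and 650 MPixels/s), which is what you would expect if each core writes one pixel per clock. The sketch below works through that arithmetic. The one-pixel-per-clock rate and the linear scaling with core count are assumptions inferred from the table, not figures from ARM, and `fillrate_mpixels` is a hypothetical helper name.

```python
def fillrate_mpixels(clock_mhz: float, pixels_per_clock: int = 1, cores: int = 1) -> float:
    """Theoretical peak pixel fillrate in MPixels/s.

    Assumption: each core writes `pixels_per_clock` pixels every cycle and
    throughput scales linearly with the number of cores.
    """
    return clock_mhz * pixels_per_clock * cores


# Single-core figures, matching the table under the one-pixel-per-clock assumption.
mali_400 = fillrate_mpixels(500)
mali_450 = fillrate_mpixels(650)

# Hypothetical upper bound for a four-core Mali-400 MP4 if scaling were perfectly linear.
mali_400_mp4 = fillrate_mpixels(500, cores=4)

print(mali_400, mali_450, mali_400_mp4)
```

Real-world throughput will typically be lower, since memory bandwidth and workload mix limit how close a GPU gets to its theoretical peak.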

Mali-T600 (2012 - 2014)

In 2012, ARM unveiled the Mali-T600 GPU family. Unlike the 400 series, the Mali-T600 GPU family is intended for both games and computational work, and GPUs in this series feature support for OpenCL. They also include improved OpenGL ES and DirectX 9.0 support. Mali-T600 GPUs are quite rare, but they're used inside the Samsung Exynos 5, coupled with ARM Cortex-A15 CPUs. Samsung also equipped its first Chromebook with a GPU from the Mali-T600 family.

Mali-T700 (2013)

The next generation of Mali GPUs, the T700 series, was used inside the first 64-bit SoC. An evolution of the Midgard architecture inside the T600 series, it features extended API support for DirectX 11.1 (feature level 9_3), OpenGL ES 3.1 and OpenCL 1.1. It is used inside several SoCs, including the MediaTek MT6732 (Mali-T760), MT6752 (Mali-T760), MT6753 (Mali-T720MP3), Samsung Exynos Octa 7 (MP6 in some versions, but MP8 in others) and Rockchip RK3288 (Mali-T764).

Mali-T800 (2015 - 2016)

By the end of the year, ARM plans to launch its latest T800 Series of GPUs: the Mali-T820, T830 and T860. Built with 28-nm lithography and clocked at 650 MHz, these GPUs are DirectX 11.1-compatible (feature level 9_3, except for the T860, which is DirectX 11.2-compliant with feature level 11_1), and feature support for OpenGL ES 3.1 and OpenCL 1.2 Full Profile. They continue to use the Midgard architecture, which was introduced with the T600 series. ARM plans to launch an even more powerful GPU in the second quarter of 2016: the Mali-T880. The Mali-T880 will benefit from 16-nm lithography and operate at up to 850 MHz.

Nvidia Enters The Mobile Race

Nvidia entered the mobile GPU game with its Tegra SoCs, incorporating ARM CPUs with Nvidia's graphics technology. The original Tegra was used in some devices, like the Kin and the Zune HD, but did not gain widespread usage. Nvidia's second chip, the Tegra 2, has been much more successful.

Tegra 2 (2010)

The Tegra 2 was the first dual-core SoC, featuring two ARM Cortex-A9 CPUs and a decent GPU. It was compatible with OpenGL ES 2.0 but did not use a unified architecture. Instead, it incorporated four Vertex Shaders alongside four Pixel Shaders. The Tegra 2 had some flaws, such as the lack of support for the NEON instruction set and a video decoding engine issue, but the chip still had much success. Motorola, Samsung, LG, Sony and many other big names have devices that use the Tegra 2.

Tegra 3 (2011)

The Tegra 3, code-named Kal-El, was an evolution of the Tegra 2. Nvidia opted for a quad-core CPU design, and a GPU with a total of 12 cores (eight Pixel Shaders and four Vertex Shaders). The clock speed was also increased to improve performance, and the Tegra 3 was fairly successful. It was used inside the first Microsoft Surface RT, the HTC One X and the Google Nexus 7. The GPU, though highly efficient, was held back by its design: because it was not a unified architecture, it could not support versions of OpenGL ES newer than 2.0.

Tegra 4 & Tegra 4i (2013)

The Tegra 4 and Tegra 4i failed to gain the popularity of the Tegra 2 and Tegra 3. Nvidia upgraded the processor to Cortex-A15 CPU cores but did not significantly alter the GPU architecture. This proved to be a serious issue, as Nvidia's major competitors in the mobile graphics processor market had already advanced to using unified GPU architectures capable of supporting OpenGL ES 3.0. In an attempt to stay competitive, Nvidia drastically increased the core count inside the GPU to bolster performance. The Tegra 4i's GPU contained 60 processing units (48 Pixel Shaders and 12 Vertex Shaders), while the Tegra 4 possessed 72 cores (48 Pixel Shaders and 24 Vertex Shaders). Few devices were released using these SoCs. The most prominent devices to use them were Nvidia's own Shield and Tegra Note 7.

blackmagnum Today's mobile gpu render pictures so fast that the screen can't keep up and you see screen tearing. It's time for smartphone version of G-sync or Free-sync, or just force V-sync like old times.

owned66 (quoting blackmagnum): "Today's mobile gpu render pictures so fast that the screen can't keep up and you see screen tearing. It's time for smartphone version of G-sync or Free-sync, or just force V-sync like old times."

all android phones are now triple buffered and stuck at 60 Hz - stutter yes but NEVER tear

60 FPS*

MetzMan007 ATI / AMD probably kicking themselves now for selling off the Imageon line. Nvidia just needs 1 good device for their stuff and they will take off.

IInuyasha74 (quoting MetzMan007): "ATI / AMD probably kicking themselves now for selling off the Imageon line. Nvidia just needs 1 good device for their stuff and they will take off."

I'd say you are right. Not that AMD couldn't redesign a similar low-power GPU like the original Imageon series of products, but they could have continued upgrading it and probably had a more profitable licensing business similar to what ARM has now.

razor512 V-sync can be disabled on android at least, though I do not recommend it. A heavy GPU load can increase temperatures and drain the battery fast. On higher end SoCs it leads to extra throttling. You can test this using a program like 3c toolbox to monitor the clock speeds after a heavy load with v-sync on and off.

Even SoCs that don't normally throttle will throttle with a 100% GPU and CPU load. (I have never seen an android game be graphically intensive and also do a 100% CPU load).

g00ey Something that gave nVidia a performance advantage, according to this article, was when they gave up their Tegra line and started using their Kepler and Maxwell hardware in their phones.

So AMD could do something similar with their Radeon hardware if they wanted to and probably be as successful as nVidia. Now AMD's present GPU hardware is getting old so I guess they are focusing on the next generation hardware. Guess it would be better to first take care of that before considering pushing GPU hardware to mobile devices.

DbD2 (quoting g00ey): "Something that gave nVidia a performance advantage, according to this article, was when they gave up their Tegra line and started using their Kepler and Maxwell hardware in their phones.

So AMD could do something similar with their Radeon hardware if they wanted to and probably be as successful as nVidia. Now AMD's present GPU hardware is getting old so I guess they are focusing on the next generation hardware. Guess it would be better to first take care of that before considering pushing GPU hardware to mobile devices."

More that nvidia made their Kepler and Maxwell architectures from the ground up to be mobile friendly, something AMD haven't done. In a moment of stupidity AMD sold their whole mobile line to qualcomm for peanuts, and decided to concentrate on high powered desktop gpu's.

The unbelievable thing is AMD were ahead of nvidia years back - they had a mobile gpu line before nvidia and even their desktop gpu's were more efficient than nvidia (who at the time were more interested in gpu compute and super computers). Nvidia then recognised the importance of low power and mobile and made that a number 1 priority. In that same time period AMD sold their mobile gpu's and went high power with their gpu's becoming less efficient than nvidia's. Now nvidia have not only the mobile market but pretty well the entire discrete gpu laptop market too, while AMD are going bust.

Bottom line is AMD's management suck!