Curbing Your GPU's Power Use: Is It Worthwhile?

In many cases, the graphics card is the most power-hungry component in a PC. The enthusiast community is no stranger to CPU tweaking, so why hasn't GPU modification caught on? We're going to see just how much you stand to gain (or lose) from tweaking.

Conclusion: Doable, But No Walk In The Park

Pros and Cons

First things first: can you lower your GPU’s power consumption, particularly when you use it in games and applications? The answer is a resounding yes. You can pretty much use the same approach you’d employ if you were trying to cut the power consumption of your CPU. The difference is that graphics cards seem much more black-box in nature. Enthusiasts are less likely to tinker with them because granular settings are more difficult to access.

The methods range from very simple to not-so-easy, at least as they pertain to AMD’s graphics cards (an exploration of Nvidia’s solutions could be particularly interesting, given the company’s intention to protect its boards from overvolting with some sort of lock). The easiest strategy is running the card in UVD mode. You can use this trick on pretty much any Radeon card. And it’s great because those settings are already available by default. That means your card continues to operate within its specifications.

However, if you want more significant savings, you’ll have to get your hands a bit dirty. As the results show, the biggest savings are enabled when you drop clock speed and voltage. In that case, your choices are limited to cards which have voltage adjustment features or selectable core voltages in the BIOS.
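To get a feel for why dropping voltage along with clock speed pays off, here is a minimal sketch in Python. It relies on the common first-order approximation that dynamic power scales with clock frequency times voltage squared. That approximation is an assumption brought in for illustration, not something measured in this article; it ignores leakage and memory power, and the clock and voltage figures are hypothetical.

```python
def relative_dynamic_power(f_new, f_old, v_new, v_old):
    """Return the estimated new/old dynamic power ratio.

    Uses the first-order approximation P ~ f * V^2 (an assumption;
    leakage and memory power are ignored).
    """
    return (f_new / f_old) * (v_new / v_old) ** 2


# Hypothetical values, not taken from any specific card.
stock_clock_mhz, stock_voltage = 800.0, 1.0
reduced_clock_mhz, reduced_voltage = 600.0, 0.9

ratio = relative_dynamic_power(
    reduced_clock_mhz, stock_clock_mhz, reduced_voltage, stock_voltage
)
print(f"Estimated dynamic power: {ratio:.0%} of stock")
```

Because voltage enters as a square, even a small voltage drop compounds with the clock reduction in this model. Real cards will deviate from it, so treat the result as a rough guide only.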

There is also the matter of convenience. Although it is pretty easy to trigger UVD mode, having to do so every time you want to play a game or run an application is a hassle. Unfortunately, third-party apps don’t help much in this regard. For example, Asus’ SmartDoctor utility lacks an option to save profiles. Asus does offer another utility for tuning graphics cards called ITracker2, but it only works with the company’s Matrix- and ROG-series graphics cards.

Other utilities, like MSI’s Afterburner, can save clock and voltage presets, but Afterburner’s voltage adjustment only works with certain supported cards. If you have your heart set on trying this yourself, it would be wise to pick a card that provides everything you need. Check graphics card reviews for detailed descriptions of the bundled utilities.

Putting Things In Perspective

So, why would you do this? Saving power might be a great idea for the environmentally inclined, but does it make sense financially, particularly compared to simply buying a mainstream card right out of the gate, such as AMD’s Radeon HD 5770? We’re talking about power savings of 10% in desktop applications and between 15 and 25% in games, with about the same performance penalty (using default DXVA settings). With voltage adjustment, you could probably push that to around 30% (around 100 watts).

Just how big of a difference does 100 watts make, financially? Well, that’s 0.1 kWh for every hour of use, which works out to about 1 cent per hour at a rate of 10 cents per kWh (the exact figure depends on where you live and the rates your power company charges you). For most desktop users with a single PC, the savings are negligible at best.
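The arithmetic above can be sketched in a few lines of Python. The electricity rate and the hours of use per day are hypothetical placeholders, so substitute your own figures.

```python
def hourly_cost_saved(watts_saved, price_per_kwh):
    """Money saved per hour of use for a given power reduction in watts."""
    return watts_saved / 1000.0 * price_per_kwh


def yearly_cost_saved(watts_saved, price_per_kwh, hours_per_day):
    """Money saved per year, assuming the same daily usage every day."""
    return hourly_cost_saved(watts_saved, price_per_kwh) * hours_per_day * 365


# Assumed values: 100 W saved (from the article), a hypothetical rate
# of $0.10 per kWh, and a hypothetical 4 hours of gaming per day.
watts_saved = 100
price_per_kwh = 0.10
hours_per_day = 4

print(f"Per hour: ${hourly_cost_saved(watts_saved, price_per_kwh):.3f}")
print(f"Per year: ${yearly_cost_saved(watts_saved, price_per_kwh, hours_per_day):.2f}")
```

Even with generous usage assumptions, the yearly figure stays small next to the price of a high-end card, which is the point the paragraph above makes.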

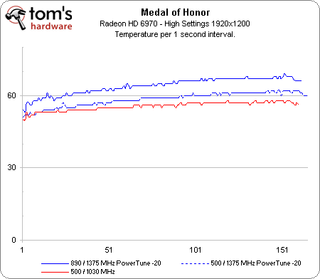

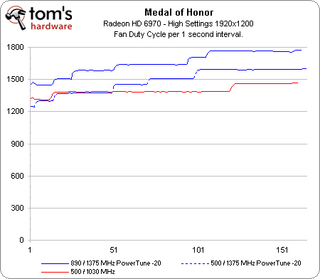

What about other benefits, such as temperature and fan speed/noise? Here’s a look at both. We’ve taken the numbers from the Radeon HD 6970’s GPU-Z log running Medal of Honor.

Obviously, lower clocks and voltages have other advantages, but mainstream cards offer these same benefits, and they cost a lot less than high-end cards. So, what's the point then? The one thing most mainstream cards don’t have is performance. They may offer simplicity, lower power consumption, lower operating temperatures, and less noise at a lower cost, but they don’t always deliver the speed you need. With a high-end card, you have more performance available when it's needed. From this experiment, we learned that high-end cards can also be power savers, with a little bit of effort and luck.

The focus of this article was to find out if it's possible to have performance when you need it and power savings when you want them with a high-end desktop graphics card. The answer is that yes, you can achieve both of those things, though swapping back and forth is hardly practical at this point in time. We have the hardware today, and that much is clear. Hopefully, we'll get more control in the future from companies like AMD and the third-party software developers writing tools for its cards.

I think, considering those people using SLi and crossfire and higher end videocards, they don't really give a gat about how much elec. they are using. They can afford to buy two expensive PCBs, why would they care about extra 5~10 bucks per month? If people are focused on lower power consumption, they would go for lower performance components, aren't they?

-

anttonij I guess the most important point of this review is that you can lower the cards voltage while running at stock speed. For example I'm running my GTX 460 (stock 675/1800@1.012V) at 777/2070@0.975V or if I wanted to use the stock speeds, I could lower the voltage to 0.875V. I've also lowered the fan speeds to allow the card to run almost silently even at full load.

-

Khimera2000 @.@ there is no apple @.@

This is neat though :) I wonder if this article might inspire someone to make an application. Come on open source dont fail me now >.

-

Could you do comparison of "the fastest VC" vs "entry level" and then show us how much money we might end up paying each month or day?

-

the_krasno Manufacturers should find a way to implement this automatically, imagine the possibilities!

-

wrxchris @OvaCer

I have 2 gfx cards pushing 3 displays, but I'm all for saving watts wherever I can. Our society has advanced to the point where sustainability is a very important buzzword that is widely ignored by mainstream media and many corporations, and this ignorance trickles down to the mainstream like Reaganomics. Minuscule reductions such as 30w savings across hundreds of thousands if not millions of users adds up to a significant reduction in carcinogenic emissions and saves valuable resources for future consumption.

-

neiroatopelcc So when playing video, you risk your amd card going into uvd mode? What models does that apply to?

I want to know, cause for instance in a raid, I'd sometimes watch video content on another screen while waiting around for whatever there is to wait for. I already lose the crossfire performance because of window mode. I don't want to lose even more.

Does my ancient 4870x2 support uvd?

-

jestersage so... for the dual bios HD6900s, I can RBE one bios with my desired settings and just choose which bios to use before I power up my PC? hmmm... interesting.
