Intel Core i7-975 Extreme And i7-950 Reviewed

Overclocking/Memory Scaling

We wanted to test Intel’s Core i7-975 Extreme in two different ways: processor overclocking and memory scaling.

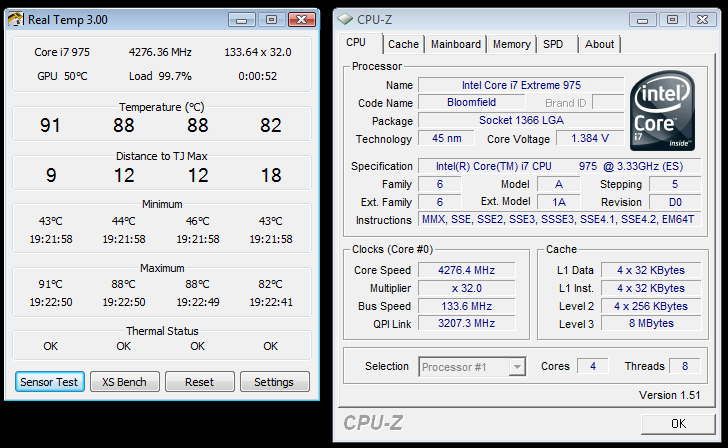

The first part was easy, especially with an Extreme CPU and its unlocked multiplier. We used quick multiplier adjustments and found that 31x (4.12 GHz) was as fast as we could go stably. With voltages up to 1.385 V, we were able to get into Windows at 4.25 GHz, but at that point it takes only a couple of minutes under Prime95 to push the chip into the 92-93 degree Celsius range, where blue screens are inevitable. (This is with Thermalright’s Ultra 120 Extreme; naturally, the situation changes as you pursue more aggressive cooling.)

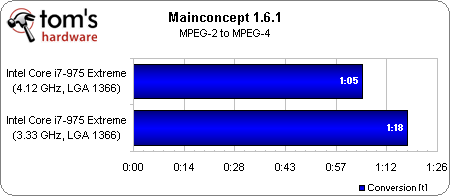

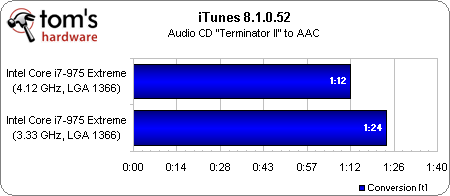

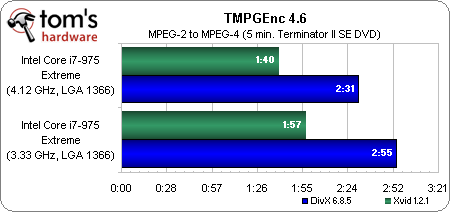

Once we backed off from 4.25 GHz, we were able to drop the voltage required for stability at 4.12 GHz all the way to 1.29 V. To switch things up a bit, we also dropped the multiplier to 23x and hit 4.14 GHz with a 180 MHz Bclk (a 20x multiplier with anything over a 200 MHz Bclk simply wasn’t cooperative). In either case, our encoding test finished in exactly the same time (1:05). Use the multiplier if you can, or the Bclk if you’re not running an Extreme chip; what matters is that you hit your target.
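Both routes to roughly 4.1 GHz come down to the same relationship: core clock = multiplier x Bclk. Here is a minimal sketch, assuming the Core i7's stock Bclk of about 133 MHz for the multiplier-only setting (the exact stock value is closer to 133.33 MHz). `core_clock_mhz` is a hypothetical helper, not part of any tool:

```python
def core_clock_mhz(multiplier: int, bclk_mhz: float) -> float:
    """Core frequency is the CPU multiplier times the base clock (Bclk)."""
    return multiplier * bclk_mhz

# Assumption: ~133 MHz stock Bclk for the unlocked-multiplier route.
via_multiplier = core_clock_mhz(31, 133.0)
# Lower multiplier, raised Bclk: the route open to non-Extreme chips.
via_bclk = core_clock_mhz(23, 180.0)

for label, mhz in (("31 x 133 MHz", via_multiplier), ("23 x 180 MHz", via_bclk)):
    print(f"{label}: {mhz / 1000:.2f} GHz")
```

The two settings land within about 20 MHz of each other, which fits the identical 1:05 encoding times.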

Although the Core i7-975 Extreme carries the same 130 W TDP as its predecessor, it's interesting that the new CPU runs a higher voltage by default, even though it's a D0 stepping. See the CPU-Z screenshots up top for more.

OCZ was also kind enough to send over a set of its DDR3 PC3-17000 Blade modules, specified for DDR3-2133 at 8-9-8. Given the wide price gap between mainstream and high-end DDR3 memory, we thought it’d be a good idea to quickly explore the benefits of loading up on memory running at such extreme speeds. In theory, low latencies help most in environments heavy on multitasking, where fast timings enable quick bursts. Conversely, you’d expect gobs of bandwidth to help most in data-intensive apps like video encoding.

It's worth noting, too, that achieving DDR3-2133 speeds required bumping our QPI voltage to 1.75V (a substantial increase), which was necessary to run the cache/uncore at 4,266 MHz (twice the memory speed). Even then, we were able to take a memory bandwidth reading but could not complete a full run of MainConcept without stability issues. We're sure this will get worked out over time. For now, OCZ considers the ICs that bin for these speeds to be the top 1% or so.
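The 2:1 relationship between uncore and memory speed described here is easy to tabulate for other memory speeds. Here is a minimal sketch that takes the 2x ratio straight from the text. `required_uncore_mhz` is a hypothetical name used only for illustration:

```python
def required_uncore_mhz(ddr3_data_rate: int, ratio: int = 2) -> int:
    """Uncore clock (MHz) implied by running it at `ratio` x the DDR3 data rate.

    The default 2x ratio mirrors the article's 4,266 MHz uncore for DDR3-2133.
    """
    return ddr3_data_rate * ratio

for speed in (1066, 1333, 1600, 2133):
    print(f"DDR3-{speed}: uncore at {required_uncore_mhz(speed):,} MHz")
```

The last row shows why DDR3-2133 is so demanding: the uncore has to run more than 4 GHz, right alongside the cores.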

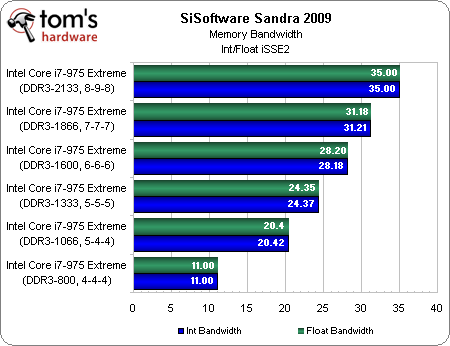

As you can see, there’s a huge difference in the bandwidth this triple-channel kit moves from one speed to the next. The only truly strange result came at DDR3-800, where the memory controller switched off one channel, which explains the lower throughput. Otherwise, the step-down is fairly even, so long as you maintain timings.
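The theoretical ceilings for these configurations follow from simple arithmetic: data rate (in MT/s) times 8 bytes per 64-bit channel, times the number of active channels. Here is a rough sketch under that assumption. It ignores controller overhead, which is why measured numbers land lower. `peak_bandwidth_gb_s` is a hypothetical helper:

```python
BYTES_PER_TRANSFER = 8  # each DDR3 channel is 64 bits (8 bytes) wide

def peak_bandwidth_gb_s(data_rate_mt_s: int, channels: int = 3) -> float:
    """Theoretical peak in GB/s (1 GB = 1,000 MB): transfers/s x bytes/transfer x channels."""
    return data_rate_mt_s * BYTES_PER_TRANSFER * channels / 1000

# Per-channel figure behind the PC3-17000 label on DDR3-2133 modules.
print(f"DDR3-2133, one channel: {2133 * BYTES_PER_TRANSFER} MB/s")

# Triple-channel ceilings, plus DDR3-800 with one channel switched off.
for rate, channels in ((2133, 3), (1066, 3), (800, 3), (800, 2)):
    print(f"DDR3-{rate} x {channels} channels: {peak_bandwidth_gb_s(rate, channels):.1f} GB/s")
```

For example, the article's 20.4 GB/s reading at DDR3-1066 5-4-4 works out to roughly 80% of the 25.6 GB/s triple-channel ceiling. Dropping to two channels at DDR3-800 cuts the ceiling from 19.2 GB/s to 12.8 GB/s.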


This is all well and good, of course, if you have modules able to do, say, DDR3-1066 at 5-4-4. But what if you want to compare an older DDR3-1066 kit running 9-9-9 to a lower-latency upgrade? We ran those numbers too, just to check, and came up with 19 GB/s (compared to 20.4 GB/s at 5-4-4).
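Timings are quoted in clock cycles, so what they cost in nanoseconds depends on the data rate. Here is a rough sketch that converts the CAS figure (the first number in 5-4-4 or 9-9-9) to nanoseconds. It assumes nominal data rates of 1066 and 2133 MT/s (the true rates are about 1066.67 and 2133.33) and uses the article's measured 20.4 GB/s and 19 GB/s for the bandwidth comparison. `cas_latency_ns` is a hypothetical helper:

```python
def cas_latency_ns(cas_cycles: int, data_rate_mt_s: float) -> float:
    """CAS latency in nanoseconds.

    DDR moves data twice per clock, so the clock runs at data_rate / 2 MHz
    and one clock period lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000 / data_rate_mt_s

configs = [
    ("DDR3-1066 CL5", 5, 1066),
    ("DDR3-1066 CL9", 9, 1066),
    ("DDR3-2133 CL8", 8, 2133),
]
for label, cl, rate in configs:
    print(f"{label}: {cas_latency_ns(cl, rate):.2f} ns")

# Measured bandwidth from the article: 20.4 GB/s at 5-4-4 vs. 19 GB/s at 9-9-9.
drop = (20.4 - 19.0) / 20.4
print(f"Bandwidth loss from looser timings: {drop:.1%}")
```

By this measure, loosening CAS from 5 to 9 at DDR3-1066 adds about 7.5 ns per access but costs under 7% of measured bandwidth.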

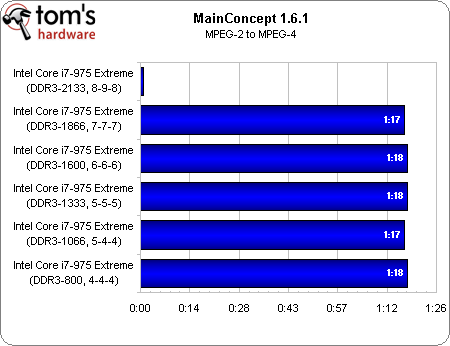

For the most part, our real-world encoding batch shows no measurable impact at any step. Even with the DDR3-1066/9-9-9 configuration, we come back with 1:19. At least in an average desktop environment, Intel’s Core i7 does not seem to be starved for throughput. In a couple of weeks, we’ll spend some time exploring exactly what it takes to tax the microarchitecture’s memory subsystem.


cangelini: The i7's disadvantage in Far Cry 2 is well-known. That it gets beat in HAWX is something we only discovered this time around. In everything else, it's the faster CPU.

Tindytim: Are we going to see a price reduction in the 940 or the 965 that gives me any reason to purchase them over the 920?

burnley14: Good thing I didn't shell out for the 965 yesterday.

Oh wait, I don't have unlimited cash, so I won't be shelling out for the 975 any time soon either...

Dustpuppy: Those game results look like you ran into serious GPU limits. As a result I think you may have been showing a difference in motherboards rather than processors on some of those tests. That does make it an interesting result in other ways though. It looks like the i7 boards have room to mature a little bit more relative to the older tech.

Summer Leigh Castle: Who said that AMD holds the crown in performance? I think any half-witted enthusiast who hasn't been hiding under a rock for the past year knows that the i7 (and even the Core 2 Duo in some tests) is king. I would hope that people who visit Tom's Hardware, or any tech website, know that in terms of high-end power, AMD doesn't come close to Intel at all.

cangelini (replying to Dustpuppy):

Likely, yes. If you look back at this doozy of a benchmark-fest, you'll see it isn't until you add a second or third GTX 280 that the i7 starts putting on some distance. Up until then, though, it's worth noting that the other two platforms (Core 2 and Phenom) are actually faster!

doomtomb: Really, any of the i7 processors besides the 920 seem like a waste because of the marginal performance increases for exponential price hikes. I was especially alarmed by the DDR3 memory results. There is the synthetic benchmark advantage of higher bandwidth at higher speeds, but absolutely no difference across the board from 1066 to 2133 in real-world encoding or whatnot.

Pretty absurd. I think I'd just stick with the 920 @ 3.8GHz and some affordable DDR3-1600 memory.