AMD Radeon HD 6990 4 GB Review: Antilles Makes (Too Much) Noise

Several months late and supposedly only a couple of weeks ahead of Nvidia's own dual-GPU flagship launch, AMD's Radeon HD 6990 has no trouble establishing performance superiority. But does speed at any cost sacrifice too much of the user experience?

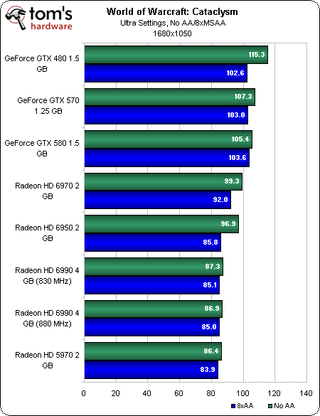

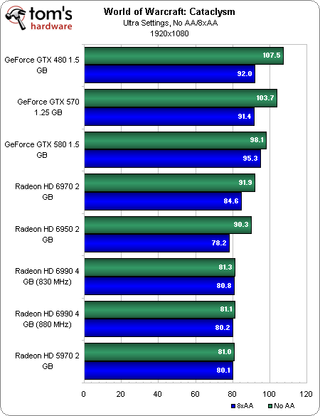

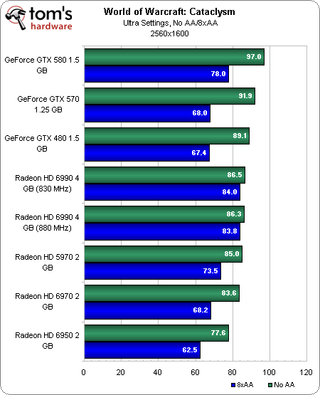

Benchmark Results: World Of Warcraft (DX9)

Platform potential seems to make a ton of difference in WoW. I ran my GeForce GTX 560 Ti review using an overclocked Sandy Bridge-based configuration, and that system demonstrated all kinds of separation between cards. Here, however, a Gulftown-based build running at the same 4 GHz suggests there’s a ton of congestion.

The Nvidia cards are the least constrained by processor performance, and they consistently best all of AMD’s cards. This matches what we saw in World Of Warcraft: Cataclysm--Tom's Performance Guide.

When it comes time to turn on anti-aliasing, though, the Radeon HD 6990s take the smallest performance hit. Even so, you can run Cataclysm at 2560x1600 with 8x MSAA on a Radeon HD 6950 and still average more than 60 frames per second. There’s really no reason to buy such a high-end card for this fairly mid-range title.

Incidentally, World of Warcraft is the other game where AMD’s beta driver exhibits a flickering/shimmering menu screen at 1680x1050.


CrazeEAdrian Great job AMD. You need to expect noise and heat when dealing with a card that beasts out that kind of performance, it's part of the territory.

jprahman This thing is a monster, 375W TDP, 4GB of VRAM! Some people don't even have 4GB of regular RAM in their systems, let alone on their video card.

one-shot Did I miss the load power draw? I just noticed the idle and noise ratings. It would be informative to see the power draw of Crossfire 6990s and overclocked i7. I see the graph, but a chart with CPU only and GPU only followed by a combination of both would be nice to see.

anacandor For the people that actually buy this card, i'm sure they'll be able to afford an aftermarket cooler for this thing once they come out...

cangelini (replying to one-shot) We don't have two cards here to test, unfortunately. The logged load results for a single card are on the same page, though!

bombat1994 things we need to see are this thing water cooled.

and tested at 7680 x 1600

that will see just how well it does.

That thing is an absolute monster of a card.

They really should have made it 32nm. then the power draw would have fallen below 300w and the thing would be cooler.

STILL NICE WORK AMD

Bigmac80 Pretty fast i wonder if this will be cheaper than 2 GTX 570's or 2 6950's?

But omg this thing is freakin loud. What's the point of having a quiet system now with Noctua fans :(
