AMD Radeon HD 6990 4 GB Review: Antilles Makes (Too Much) Noise

Several months late and supposedly only a couple of weeks ahead of Nvidia's own dual-GPU flagship launch, AMD's Radeon HD 6990 has no trouble establishing performance superiority. But does speed at any cost sacrifice too much of the user experience?

Display Outputs And AMD's Tessellation Coup

Eye See You

Given the Radeon HD 6990’s brute-force approach to performance and cooling, we’re happy to see that elegance wasn't completely ignored. Using a single slot's worth of the I/O bracket, AMD exposes an unprecedented five display outputs: one dual-link DVI and four mini-DisplayPort connectors. The retail Radeon HD 6990 will ship with a trio of adapters for added flexibility: one passive mini-DP-to-single-link DVI, one active mini-DP-to-single-link DVI, and one passive mini-DP-to-HDMI. AMD values the bundle at roughly $60.

I’m a big proponent of multi-display configurations for enhancing productivity, and I currently use a 3x1 landscape configuration. I consider five screens overkill for what I do. But AMD is now pushing a native 5x1 portrait mode that admittedly looks pretty interesting.

Beyond simply working more efficiently, using three or five screens is also a great way to tap the graphics horsepower available from a 375+ W dual-GPU card. As you’ll see in the benchmarks, 1680x1050 and 1920x1080 are often wasted on such a potent piece of hardware, even with anti-aliasing and anisotropic filtering enabled.
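To put the resolution argument in numbers, here is a quick pixel-count comparison. It is a sketch that assumes 1920x1080 panels for the multi-display layouts (a hypothetical choice; the review does not specify panel resolution), with the 5x1 portrait layout rotating each panel to 1080x1920. The `layout_pixels` helper is illustrative only.

```python
# Pixel counts for single- and multi-display layouts.
# Assumption: every panel in a group is 1920x1080; portrait mode rotates each to 1080x1920.

def layout_pixels(cols: int, rows: int, width: int, height: int) -> tuple[int, int, int]:
    """Return (total_width, total_height, total_pixels) for a cols x rows grid of identical panels."""
    total_w, total_h = cols * width, rows * height
    return total_w, total_h, total_w * total_h

layouts = {
    "1680x1050 single": layout_pixels(1, 1, 1680, 1050),
    "1920x1080 single": layout_pixels(1, 1, 1920, 1080),
    "3x1 landscape (1920x1080 panels)": layout_pixels(3, 1, 1920, 1080),
    "5x1 portrait (1080x1920 panels)": layout_pixels(5, 1, 1080, 1920),
}

# Express every layout relative to one 1080p screen.
baseline = layouts["1920x1080 single"][2]
for name, (w, h, px) in layouts.items():
    print(f"{name:34s} {w}x{h} = {px:>10,} pixels ({px / baseline:.2f}x 1080p)")
```

Under these assumptions, a 5x1 portrait wall (5400x1920) pushes five times the pixels of a single 1080p screen, while 1680x1050 is only about 0.85x of it.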

Just remember: with more than one screen attached to a card like AMD’s Radeon HD 6990, idle power consumption won’t match the figures we present toward the end of this piece. It rises substantially because the card has to keep its memory clock high. With just one screen attached to the 6990’s dual-link DVI output, we observe 148.5 W system power consumption at idle. With a trio attached to the mini-DisplayPort connectors, that figure jumps to 187.2 W.
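The two idle readings are enough to size the multi-monitor penalty. This is simple arithmetic on the review's own numbers; the eight-hours-a-day idle pattern used for the yearly estimate is a hypothetical assumption, not something we measured.

```python
# Idle system power from this review, in watts.
idle_one_display_w = 148.5     # one screen on the dual-link DVI output
idle_three_displays_w = 187.2  # three screens on the mini-DisplayPort outputs

delta_w = idle_three_displays_w - idle_one_display_w
increase_pct = delta_w / idle_one_display_w * 100

# Hypothetical usage pattern: the desktop sits idle 8 hours a day, every day.
IDLE_HOURS_PER_DAY = 8
yearly_kwh = delta_w * IDLE_HOURS_PER_DAY * 365 / 1000

print(f"Multi-monitor idle penalty: {delta_w:.1f} W ({increase_pct:.1f}% more)")
print(f"Extra energy at {IDLE_HOURS_PER_DAY} idle h/day: {yearly_kwh:.1f} kWh per year")
```

The penalty works out to 38.7 W, about 26% above the single-display idle figure; the yearly number scales linearly with whatever idle time you plug in.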

To be clear, you'll see higher power use from Nvidia cards as well in multi-monitor environments. AMD is the first to explain why this power increase is necessary, though:

"PowerPlay saves power via engine voltage, engine clock, and memory clock switching. Memory clock switching is timed to be done within an LCD VBLANK so that a flash isn't seen on the screen when the memory speed is changed. This can be done on a single display, but not with multiple displays because they can (and in 99% of the cases, will be) running different timings and virtually impossible to hit a VBLANK on both at the same time on all the panels connected (and when we say "timings" it’s not as simple as just the refresh rate of the panel, but the exact timings that the panel's receivers are running). So, to keep away from the end user seeing flashing all the time, the MCLK is kept at the high MCLK rate at all times.


"With regard to power savings under multiple monitors, we have to trade off between usability and power. Because we can't control what combinations of panels are connected to a desktop system, we have to choose usability. Other power saving features are still active (such as clock gating, etc.) so you are still saving more power than peak activities. Note that in a DisplayPort environment we have more control over the timing, and hence this issue could go away if all the panels connected were DP."
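AMD's explanation boils down to a timing-window problem. The sketch below is a toy model, not AMD's PowerPlay logic: each display is described by a hypothetical frame period, blanking length, and phase offset, and `find_switch_window` searches for a stretch long enough to change the memory clock while every panel is in VBLANK at once. The class and function names, the 500 µs blanking interval, and the 300 µs switch time are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Display:
    period_us: int  # frame time (about 16,667 us at 60 Hz)
    vblank_us: int  # length of the vertical blanking interval (hypothetical)
    offset_us: int  # where this panel's frame starts relative to t = 0

    def in_vblank(self, t_us: int) -> bool:
        # Model the last vblank_us of every frame as the blanking window.
        pos = (t_us - self.offset_us) % self.period_us
        return pos >= self.period_us - self.vblank_us


def find_switch_window(displays: list[Display], switch_us: int,
                       horizon_us: int, step_us: int = 10) -> Optional[int]:
    """Return the first time (us) at which every display stays in VBLANK for at
    least switch_us, or None if no such window occurs before horizon_us."""
    run_start = None
    for t in range(0, horizon_us, step_us):
        if all(d.in_vblank(t) for d in displays):
            if run_start is None:
                run_start = t
            # Samples from run_start..t cover (t - run_start + step_us) microseconds.
            if t - run_start + step_us >= switch_us:
                return run_start
        else:
            run_start = None  # some panel is scanning out; the window is broken
    return None


SWITCH_US = 300  # hypothetical time needed to change the memory clock

one_panel = [Display(16_667, 500, 0)]
two_panels_out_of_phase = [Display(16_667, 500, 0), Display(16_667, 500, 8_333)]

print(find_switch_window(one_panel, SWITCH_US, 100_000))                # prints 16170: safe to switch
print(find_switch_window(two_panels_out_of_phase, SWITCH_US, 100_000))  # prints None: keep MCLK high
```

Even with identical 60 Hz refresh rates, a half-frame phase difference between two panels is enough to eliminate every shared blanking window in this model, which mirrors AMD's point that "timings" means more than the refresh rate.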

PolyMorph What?

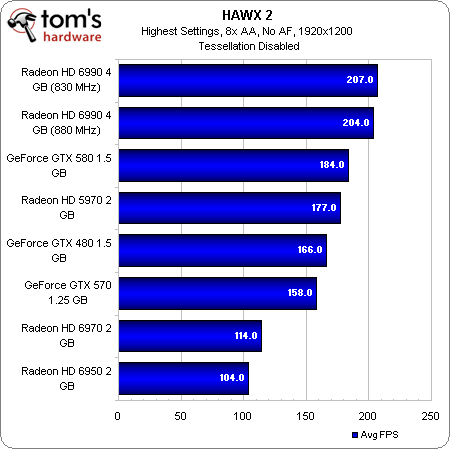

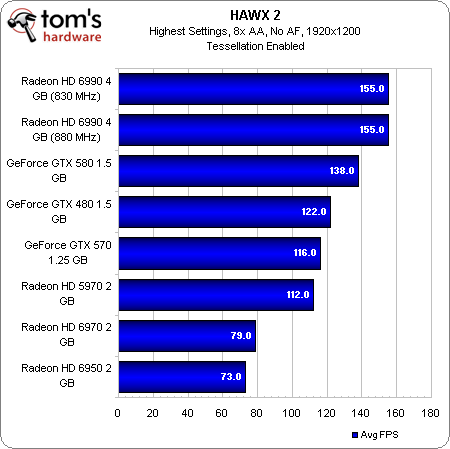

When Nvidia launched its GF100-based cards (covered in GeForce GTX 480 And 470: From Fermi And GF100 To Actual Cards!), it pushed geometry as the next logical step in making our games more realistic. We saw many compelling tech demos and game engine demonstrations that backed up the company's party line. But I wasn't prepared to give Nvidia a pat on the back until an actual game shipped with more than a superficial implementation of tessellation, one that genuinely enhanced realism. HAWX 2 was the first example of this. I immediately adopted HAWX 2 as my measure of tessellation performance in graphics card reviews, and I came away with some interesting conclusions.

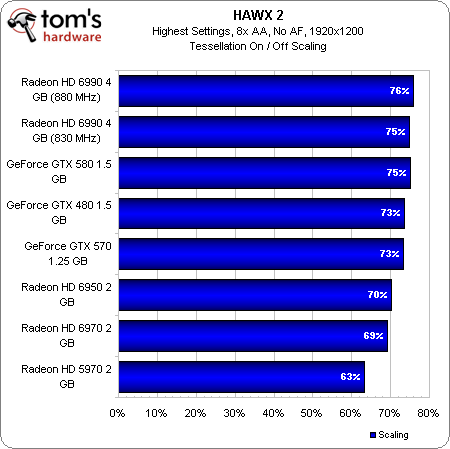

First, the PolyMorph engines resident in each of Nvidia's Streaming Multiprocessors didn't seem to scale very well. A GeForce GTX 560 Ti features eight SMs, and consequently eight PolyMorph geometry engines. A GeForce GTX 570, in comparison, employs 15 SMs. Yet we've already seen that the 570 retains 71% of its performance in HAWX 2 after turning tessellation on, while the 560 Ti serves up 70% of its original performance with the feature enabled. A one-point difference from nearly twice as many PolyMorph engines strongly suggests that adding engines only reduces tessellation's performance hit up to a certain point.

But at least Nvidia could still point out that AMD's cards shed nearly 40% of their performance with tessellation enabled. Well, it'd seem that a pair of Cayman GPUs, cumulatively able to crank out four primitives per clock, turns that story on its head. The Radeon HD 6990 doesn't impress us with its frankly modest lead over the GeForce GTX 580; it impresses us by retaining 76% of its original frame rate with tessellation turned on. That's better than the GeForce GTX 580's 75%. Never mind the GTX 580's 16 PolyMorph engines; four of AMD's tessellation units appear to do the trick here.
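The comparison rests on one ratio: frame rate with tessellation on divided by frame rate with it off. The sketch below tabulates the retention figures quoted in the text against each card's geometry-unit count; `retained_pct` is a hypothetical helper showing how such a figure would be derived from raw frame rates, and no raw HAWX 2 frame rates are assumed.

```python
def retained_pct(fps_tess_off: float, fps_tess_on: float) -> float:
    """Share of the tessellation-off frame rate kept once tessellation is enabled."""
    return fps_tess_on / fps_tess_off * 100

# (geometry units, % of frame rate retained in HAWX 2), as quoted in this review.
# Nvidia: PolyMorph engines (one per SM); AMD: tessellation units across both Cayman GPUs.
cards = {
    "GeForce GTX 560 Ti": (8, 70),
    "GeForce GTX 570": (15, 71),
    "GeForce GTX 580": (16, 75),
    "Radeon HD 6990": (4, 76),
}

# Sort by retention, best first.
for name, (units, kept) in sorted(cards.items(), key=lambda item: item[1][1], reverse=True):
    print(f"{name:20s} {units:2d} units  keeps {kept}%  loses {100 - kept}%")

# 560 Ti -> 570: nearly twice the PolyMorph engines for one extra point of retention.
engine_ratio = cards["GeForce GTX 570"][0] / cards["GeForce GTX 560 Ti"][0]
retention_gain = cards["GeForce GTX 570"][1] - cards["GeForce GTX 560 Ti"][1]
print(f"{engine_ratio:.3f}x the engines, +{retention_gain} point(s) retained")
```

Laid out this way, retention does not track geometry-unit count: the card with the fewest units listed keeps the largest share of its frame rate.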



CrazeEAdrian: Great job, AMD. You need to expect noise and heat when dealing with a card that beasts out that kind of performance; it's part of the territory.

jprahman: This thing is a monster: 375 W TDP, 4 GB of VRAM! Some people don't even have 4 GB of regular RAM in their systems, let alone on their video card.

one-shot: Did I miss the load power draw? I just noticed the idle and noise ratings. It would be informative to see the power draw of CrossFire 6990s and an overclocked i7. I see the graph, but a chart with CPU only and GPU only, followed by a combination of both, would be nice to see.

anacandor: For the people who actually buy this card, I'm sure they'll be able to afford an aftermarket cooler for this thing once those come out...

cangelini (replying to one-shot): We don't have two cards here to test, unfortunately. The logged load results for a single card are on the same page, though!

bombat1994: What we need to see is this thing water-cooled and tested at 7680x1600. That will show just how well it does.

That thing is an absolute monster of a card.

They really should have made it 32nm. Then the power draw would have fallen below 300 W and the thing would be cooler.

STILL NICE WORK AMD

Bigmac80: Pretty fast. I wonder if this will be cheaper than two GTX 570s or two 6950s?

But omg, this thing is freakin' loud. What's the point of having a quiet system now with Noctua fans? :(