2 ExaFLOPS Aurora Supercomputer Is Ready: Intel Max Series CPUs and GPUs Inside

Coming online later this year.

Argonne National Laboratory and Intel announced on Thursday that installation of all 10,624 blades for the Aurora supercomputer has been completed and that the system will come online later in 2023. The machine uses tens of thousands of Xeon Max 'Sapphire Rapids' processors with HBM2E memory as well as tens of thousands of Data Center GPU Max 'Ponte Vecchio' compute GPUs to reach a theoretical peak of over 2 FP64 ExaFLOPS.

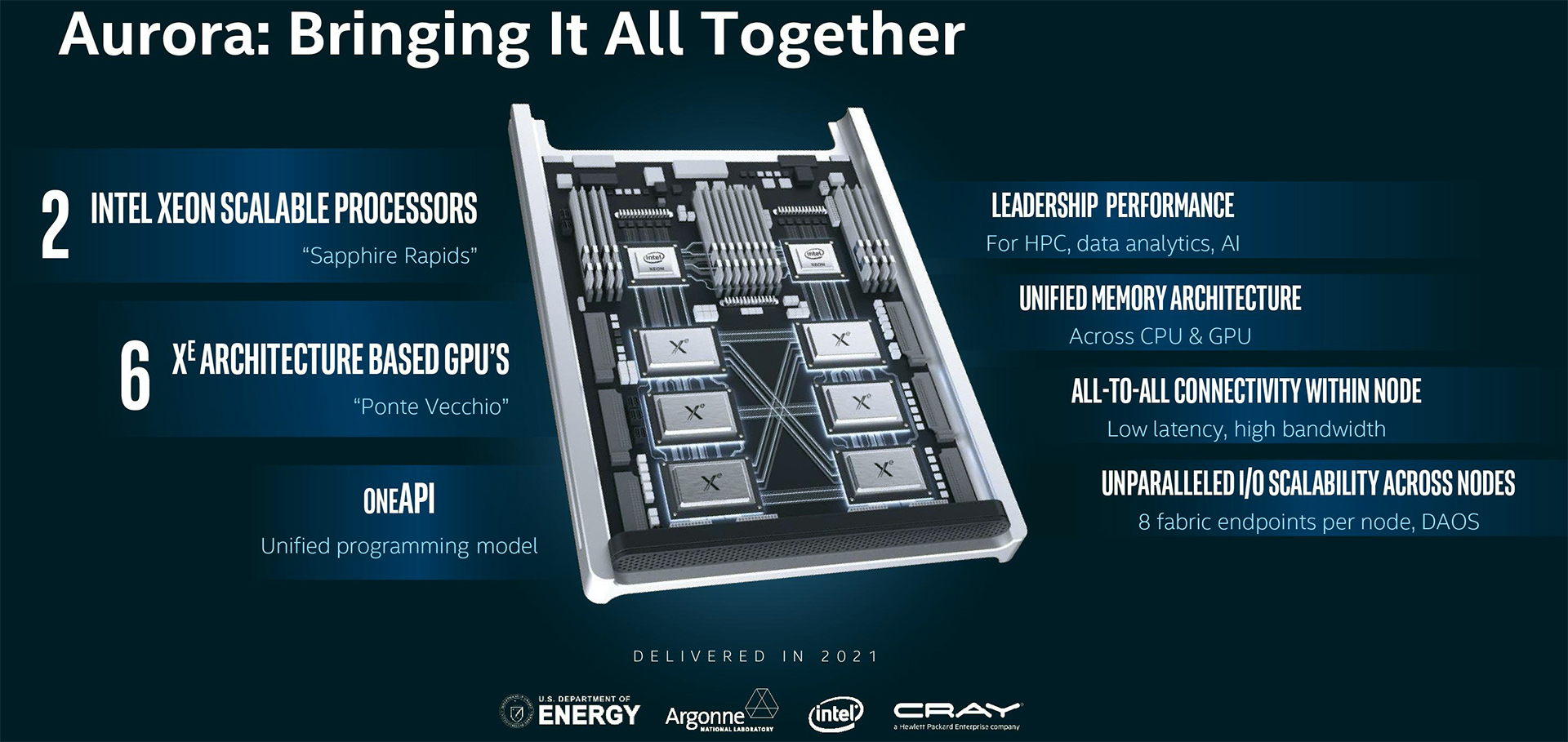

The HPE-built Aurora supercomputer consists of 166 racks with 64 blades per rack, for a total of 10,624 blades. Each Aurora blade carries two Xeon Max CPUs, each with 64 GB of on-package HBM2E memory, as well as six Intel Data Center GPU Max 'Ponte Vecchio' compute GPUs. These CPUs and GPUs are cooled by a custom liquid-cooling system.

In total, the Aurora supercomputer packs 21,248 general-purpose CPUs with over 1.1 million high-performance cores, 19.9 petabytes (PB) of DDR5 memory, 1.36 PB of HBM2E memory attached to the CPUs, and 63,744 compute GPUs designed for massively parallel AI and HPC workloads with 8.16 PB of HBM2E memory onboard. The blades are interconnected using HPE's Slingshot fabric, which is designed specifically for supercomputers.
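The headline totals follow directly from the per-rack and per-blade configuration. A quick sketch that recomputes them, assuming decimal units (1 PB = 1,000,000 GB); the per-GPU memory figure is derived here by dividing the stated total, not quoted by Intel or Argonne:

```python
# Recompute Aurora's headline totals from the per-rack and per-blade figures.
# Assumes decimal units: 1 PB = 1,000,000 GB.
RACKS = 166
BLADES_PER_RACK = 64
CPUS_PER_BLADE = 2
GPUS_PER_BLADE = 6
HBM_PER_CPU_GB = 64        # on-package HBM2E per Xeon Max CPU
GPU_HBM_TOTAL_PB = 8.16    # total GPU HBM2E, as stated in the article
GB_PER_PB = 1_000_000

blades = RACKS * BLADES_PER_RACK
cpus = blades * CPUS_PER_BLADE
gpus = blades * GPUS_PER_BLADE
cpu_hbm_pb = cpus * HBM_PER_CPU_GB / GB_PER_PB
# Derived, not quoted: average HBM2E capacity per GPU.
hbm_per_gpu_gb = GPU_HBM_TOTAL_PB * GB_PER_PB / gpus

print(f"blades: {blades:,}")                      # 10,624
print(f"CPUs: {cpus:,}")                          # 21,248
print(f"GPUs: {gpus:,}")                          # 63,744
print(f"CPU HBM2E: {cpu_hbm_pb:.2f} PB")          # 1.36 PB
print(f"HBM2E per GPU: {hbm_per_gpu_gb:.0f} GB")  # 128 GB
```

The numbers line up: 21,248 CPUs at 64 GB each gives the quoted 1.36 PB, and 8.16 PB spread over 63,744 GPUs works out to roughly 128 GB per GPU.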

"Aurora is the first deployment of Intel's Max Series GPU, the biggest Xeon Max CPU-based system, and the largest GPU cluster in the world," said Jeff McVeigh, Intel's corporate vice president and general manager of the Super Compute Group. "We are proud to be part of this historic system and excited for the ground-breaking AI, science, and engineering Aurora will enable."

The Aurora supercomputer uses an array of 1,024 solid-state storage nodes providing 220 PB of capacity and 31 TB/s of total bandwidth, which will help with workloads involving massive datasets, such as nuclear fusion research, scientific engineering, physical simulations, medical research, weather forecasting, and other tasks.
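To put the storage figures in perspective, here is a back-of-the-envelope sketch that divides them evenly across the 1,024 nodes. It assumes decimal units and a perfectly even split, which a real parallel file system will not achieve, so treat the results as rough averages rather than measured per-node numbers:

```python
# Average per-node capacity and bandwidth for Aurora's storage array.
# Assumes decimal units (1 PB = 1,000 TB, 1 TB = 1,000 GB) and an even split
# across nodes, which is a simplification.
STORAGE_NODES = 1_024
CAPACITY_PB = 220
BANDWIDTH_TB_S = 31

capacity_per_node_tb = CAPACITY_PB * 1_000 / STORAGE_NODES
bandwidth_per_node_gb_s = BANDWIDTH_TB_S * 1_000 / STORAGE_NODES
# Time to stream the entire capacity once at the full aggregate bandwidth.
full_scan_hours = (CAPACITY_PB * 1_000) / BANDWIDTH_TB_S / 3_600

print(f"{capacity_per_node_tb:.1f} TB per node")          # 214.8 TB per node
print(f"{bandwidth_per_node_gb_s:.1f} GB/s per node")     # 30.3 GB/s per node
print(f"{full_scan_hours:.1f} hours to read everything")  # 2.0 hours
```

In other words, even at 31 TB/s, reading the full 220 PB once would take roughly two hours under these idealized assumptions.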

While the installation of the Aurora blades has been completed, the supercomputer has yet to pass acceptance testing. When it does and comes online later this year, it promises to reach a theoretical peak performance beyond 2 ExaFLOPS, making it the first supercomputer to achieve this level of performance when it joins the ranks of the Top500 list.

"While we work toward acceptance testing, we are going to be using Aurora to train some large-scale open source generative AI models for science," said Rick Stevens, Argonne National Laboratory associate laboratory director. "Aurora, with over 60,000 Intel Max GPUs, a very fast I/O system, and an all solid-state mass storage system, is the perfect environment to train these models."


While the Aurora supercomputer has yet to pass acceptance testing and Argonne National Laboratory (ANL) has yet to submit its performance results to Top500.org, Intel took the opportunity to share the performance advantages it claims its hardware has over competing solutions from AMD and Nvidia.

According to Intel, preliminary tests show that the Max Series GPUs excel in 'real-world science and engineering workloads,' delivering twice the performance of AMD Instinct MI250X GPUs on OpenMC and scaling almost perfectly across hundreds of nodes. In addition, Intel says that its Xeon Max Series CPU offers a 40% performance advantage over its rivals in numerous real-world HPC applications, including HPCG, NEMO-GYRE, Anelastic Wave Propagation, BlackScholes, and OpenFOAM.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user

So, are you going to tell us how often it suffers hardware failures, or are we going to pretend this only happens to AMD machines?

https://www.tomshardware.com/news/worlds-fastest-supercomputer-cant-run-a-day-without-failure

Hint: I've heard Intel faced unprecedented challenges achieving good yields of fully assembled Ponte Vecchio GPUs. I'm genuinely curious how reliable such a complex device is under sustained loads.

JarredWaltonGPU

> bit_user said: So, are you going to tell us how often it suffers hardware failures, or are we going to pretend this only happens to AMD machines?

We won't actually know until/unless the Aurora people provide such information. Given it's not even fully online right now, or at least it hasn't passed acceptance testing, I suspect hardware errors will be pretty common. I mean, it was delayed at least a year or two (not counting the earlier iterations where it wasn't Sapphire Rapids and Ponte Vecchio).

jp7189

> bit_user said: So, are you going to tell us how often it suffers hardware failures, or are we going to pretend this only happens to AMD machines?

I guess it depends on how savvy (or not) this program director is about bashing their hardware partners.

bit_user

> The Aurora supercomputer uses an array of 1,024 storage nodes consisting of solid-state storage devices and providing 220TB of capacity

If they used Optane P5800X drives, that would cost $357.5k, going by the current market price of $2600 for a 1.6 TB model. On the other hand, if they used P5520 TLC drives, they could do it for a mere $17.5k.

However, I suspect it was meant to read "220 PB of capacity", in which case multiply each of those figures by 1,000. I expect the storage is tiered, with Optane making up just a portion of it.
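The Optane estimate in the comment is simple arithmetic: capacity divided by drive size, times the per-drive price. A sketch using only the figures given there ($2,600 per 1.6 TB P5800X), covering both the 220 TB reading and the 220 PB reading; it assumes raw capacity only, with no redundancy or spare drives, and allows a fractional drive count just as the $357.5k figure implies:

```python
# Reproduce the Optane P5800X cost estimate from the comment:
# raw capacity / drive capacity * drive price.
# Assumes no redundancy, no spares, and fractional drive counts allowed.
DRIVE_TB = 1.6
DRIVE_PRICE_USD = 2_600


def optane_cost_usd(capacity_tb: float) -> float:
    """Cost of raw capacity built purely from 1.6 TB P5800X drives."""
    return capacity_tb / DRIVE_TB * DRIVE_PRICE_USD


print(f"220 TB: ${optane_cost_usd(220):,.0f}")      # $357,500
print(f"220 PB: ${optane_cost_usd(220_000):,.0f}")  # $357,500,000
```

Scaling from TB to PB multiplies the drive count, and therefore the cost, by exactly 1,000.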

Indeed, this article claims the storage capacity is 230 PB and uses Intel's DAOS filesystem:

https://www.nextplatform.com/2023/05/23/aurora-rising-a-massive-machine-for-hpc-and-ai/

This whitepaper about DAOS shows nodes incorporating both Optane and NAND-based SSDs:

https://www.intel.com/content/dam/www/public/us/en/documents/solution-briefs/high-performance-storage-brief.pdf

domih

Given the comparison one can make between ENIAC (1945) and a PC today, we can wonder if, in 80 years, one will have Aurora's computing power multiplied by X in a chip implanted in the brain.

bit_user

> domih said: Given the comparison one can make between ENIAC (1945) and a PC today, we can wonder if, in 80 years, one will have Aurora's computing power multiplied by X in a chip implanted in the brain.

No. You can't just scale up compute power by an arbitrary amount.

More importantly, energy efficiency is becoming an increasing limitation on performance. Lisa Su had some good slides about this in a presentation earlier this year on achieving zetta-scale, but they were omitted from this site's article about it, and I didn't find them hosted anywhere I could embed them from in my posts here.

This is just about the only time I would ever recommend reading anything on WCCFTech, but their coverage of this presentation was surprisingly decent and a lot more thorough (and timely) than Tom's:

https://wccftech.com/amd-lays-the-path-to-zettascale-computing-talks-cpu-gpu-performance-plus-efficiency-trends-next-gen-chiplet-packaging-more/

domih

> bit_user said: No. You can't just scale up compute power by an arbitrary amount.

I gently and philosophically disagree. You're assuming scaling the same kind of technology, which I agree has limits in terms of density and energy. However, today's PCs are using technologies very different from ENIAC 80 years ago. So we should assume that tiny 'intelligent' devices in 80 years will also use very different technologies compared to the ones of today.

I stand by my prediction; unfortunately, the majority of us will be eating dandelions by the root when it happens.

bit_user

> domih said: You're assuming scaling the same kind of technology, which I agree has limits in terms of density and energy.

Yes, because physics.

> domih said: However, today's PCs are using technologies very different from ENIAC 80 years ago. So we should assume that tiny 'intelligent' devices in 80 years will also use very different technologies compared to the ones of today.

If you're going to appeal to some unfathomable technology, then you don't get to extrapolate. But you don't even need to! Absent the constraints of any known physics or theory of operation, it's just a childish fantasy.

> I stand by my prediction; unfortunately, the majority of us will be eating dandelions by the root when it happens.

There are other examples we can look at to see what happens when people blindly extrapolate trends. In the 1950s, sci-fi writers observed how quickly we were progressing toward the space race. They assumed this progress would continue unabated and that we'd all be taking pleasure trips to orbital resorts or lunar bases by the 2000s. Permanent human habitations on Mars were a veritable certainty.

Energy is another trend that got mis-extrapolated. For instance, they saw the nuclear energy revolution and its impact on energy prices, and predicted that energy would become so abundant it would be virtually free. This underpinned predictions of ubiquitous flying cars, jetpacks, etc. I think this also ties in with ideas about ubiquitous space travel.

TerryLaze

> bit_user said: If you're going to appeal to some unfathomable technology, then you don't get to extrapolate. But you don't even need to! Absent the constraints of any known physics or theory of operation, it's just a childish fantasy.

Nobody is going to implant a traditional CPU into a brain anyway; that's just crazy. The effort for upgrades and maintenance would be way too much. If it restored some lost functions (like sight or the use of your limbs), maybe, but for anybody else it would be too much to deal with.

Science is already working on turning brain cells into computers, so you would only need to implant a controller module, and even that could be an external device, like an advanced version of the brain-computer interfaces we already have.

I don't see any practical application for this, since you can just talk to your smartphone and your smartphone can cloud-compute any amount of data for you, but anyway.

I guess cyberpunk dream/memory transfers would be a huge seller.

https://en.wikipedia.org/wiki/Cerebral_organoid

dalek1234

"Aurora Supercomputer is ready"

"comes online later this year"

Let me correct the title for you: "Aurora Supercomputer is not ready, but might be if it passes acceptance testing later this year"