3DMark Gets a Fully Ray Traced Feature Test

How far are we from 100% ray tracing in games?

The best graphics cards need to compete not just on performance and price, but also on features. Now that the RX 6900 XT, RX 6800 XT, and RX 6800 are joining Nvidia in supporting ray tracing via hardware, we really want to know how they compare in performance. Who will take top honors in our GPU benchmarks hierarchy, and how will things change if we test with ray tracing enabled?

There have been various hot takes on ray tracing in games over the years. I remember when Quake 4 Enemy Territory got a prototype ray tracing mod back in 2008. It looked pretty cool, but performance was terrible. 720p at 16 fps on a 16-core CPU? But at least it was something!

Flash forward a decade and Nvidia's RTX hardware promised far superior quality and performance. Except, even with RTX cards, games are still using a hybrid rendering approach where most of the rendering is done using traditional methods, and ray tracing is only applied after the fact for a few specific effects like reflections or shadows.

What would it take to do full path tracing on a game, using today's modern GPUs that support ray tracing calculations? Quake II RTX and Minecraft RTX sort of already do this, but they're older and less complex games with path tracing tacked on. Now UL has added a feature test for 3DMark that does a fully ray traced rendering of assets from the Port Royal benchmark. (Note that you'll need the Advanced or Pro version to access the DirectX Raytracing Feature Test, as it's called.)

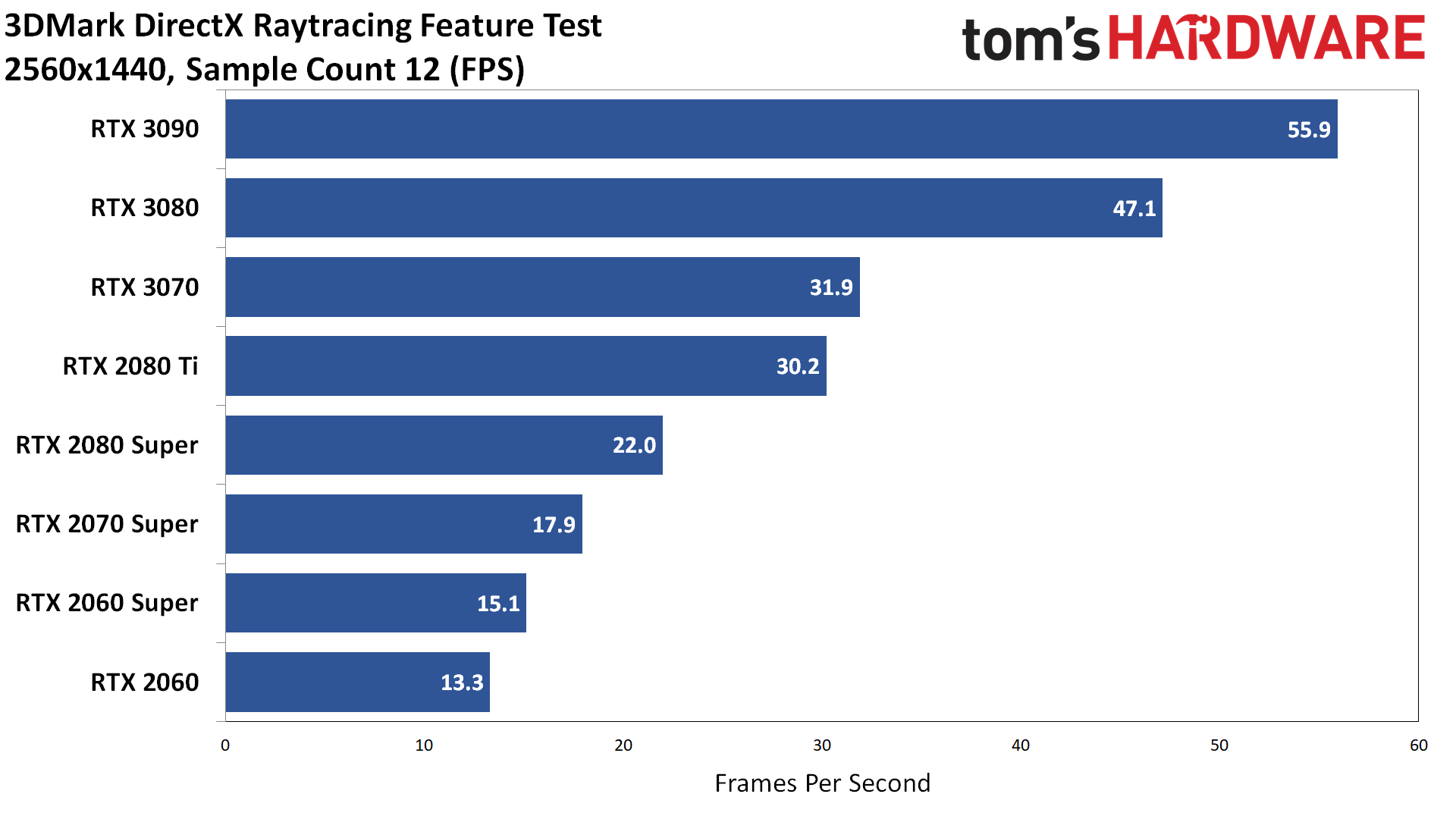

We like the idea of being able to compare 'pure' ray tracing performance on the various GPUs. The 3DMark DXR Feature Test now gives us another way to do that, and while it doesn't report the number of ray/triangle intersections per second (which is what we'd really like to see), it does provide an fps score that's directly correlated with the ray tracing hardware. We've rounded up the current RTX GPUs as a point of reference below. More important will be seeing how AMD's upcoming Big Navi stacks up, with the RX 6900 XT, RX 6800 XT, and RX 6800 all set to launch in the next month or so.

We ran all the RTX cards through the DXR Feature Test, with the exception of the RTX 2070 and 2080 (those should land about midway between the newer Super variants). The GeForce RTX 3090 is over 4X the performance of the RTX 2060, the RTX 2080 Ti is a bit more than twice as fast as the 2060, and the GeForce RTX 3070 is slightly faster than the 2080 Ti. The RTX 3090 is also 19 percent faster than the GeForce RTX 3080, which is a bigger gap than in most of the games we've tested. Basically, things are far more GPU limited here, so theoretical performance ends up pretty close to reality.

How close? We crunched some numbers, using the number of RT cores, the GPU clocks, and the RT core generation. Nvidia says the second gen 30-series RT cores are about 70 percent faster than the first gen 20-series RT cores. That means the 3070 for example should be about 7 percent faster than the 2080 Ti FE, while the 2080 Ti should be 150 percent faster than the RTX 2060.

The 30-series GPUs scale almost perfectly in line with expectations. 3070 is indeed 7 percent faster than 2080 Ti, 3080 is 48 percent faster than 3070 (47 percent expected), and 3090 is 19 percent faster than 3080 (vs 19.5 percent expected).
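As a rough illustration of that arithmetic, here is a minimal sketch that treats theoretical ray tracing throughput as RT cores times boost clock times a per-generation factor. The RT core counts and boost clocks are publicly listed reference specs and are an assumption here (the article does not list its inputs), and the function names are hypothetical. Only same-generation comparisons are shown, since the cross-generation estimates depend on exactly which clocks were used.

```python
# Toy model: throughput ~ RT cores x boost clock (MHz) x per-core generation factor.
# Spec values are assumed reference figures, not numbers taken from the article.
SPECS = {
    "RTX 3070": (46, 1725),
    "RTX 3080": (68, 1710),
    "RTX 3090": (82, 1695),
}
AMPERE_FACTOR = 1.7  # "about 70 percent faster" per RT core, per the Nvidia claim cited above


def rt_throughput(name, gen_factor=AMPERE_FACTOR):
    """Relative theoretical ray tracing throughput in arbitrary units."""
    cores, boost_mhz = SPECS[name]
    return cores * boost_mhz * gen_factor


def percent_faster(a, b):
    """How much faster card a should be than card b, in percent."""
    return (rt_throughput(a) / rt_throughput(b) - 1.0) * 100.0


for a, b in [("RTX 3080", "RTX 3070"), ("RTX 3090", "RTX 3080")]:
    print(f"{a} vs {b}: {percent_faster(a, b):.1f}% faster (theoretical)")
```

Under these assumed specs the model gives roughly 47 percent and 19.5 percent, in line with the expected figures quoted above. The generation factor cancels out when both cards are from the same family.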

The results on the 20-series parts end up being more varied, however. The 2060 Super is 14 percent faster than the 2060 (vs 11 percent expected). However, the 2070 Super is only 35 percent faster than the 2060 (41 percent theoretical), 2080 Super is 65 percent faster (73 percent theoretical), and the 2080 Ti is 127 percent faster (vs. 150 percent theoretical). Still, that's mostly close enough to the expected behavior and a good starting point.

We wanted to run the DXR Feature Test on some non-RTX GPUs as well, but it basically laughed at us and mocked our hardware. "Your puny GTX 1660 Super and GTX 1080 Ti can't handle the ray traced truth!" Actually, it told us that our hardware didn't support the required DXR Tier 1.1 feature set needed to run the test. So much for DXR on GTX via drivers and shader hacks: Ray tracing hardware acceleration is required.

The bigger question: How will AMD's RX 6800, RX 6800 XT, and RX 6900 XT fare against RTX 3070, RTX 3080, and RTX 3090? Based on theoretical estimates, the 6900 XT may land about midway between the 3070 and 3080, while the 6800 XT would be just a bit faster than the 3070, with the RX 6800 sitting between the 2080 Ti and 2080 Super. But theoretical performance estimates may not match reality, and we're definitely interested to see how things shape up later this month.

There's a lot more to discuss with the 3DMark DXR Feature Test. For example, the way it runs isn't quite what we expected. The default settings render with 12 randomized samples per pixel at 1440p, and like most path tracing algorithms, the inputs from those samples are combined to give a final pixel color result. Then things are sampled again and the quality of the result improves. Normally, this process would be repeated until some desired quality level is achieved.

The catch is that 3DMark DXR Feature Test does all of the sampling and accumulation in real-time. The sample count specifies the rate of accumulation, and directly impacts image quality when things are in motion. However, once the camera stops moving, you end up with a similar maximum quality result for each scene (after the same amount of total time).
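To make the accumulation idea concrete, here is a toy sketch of progressive sampling for a single pixel. It is not how 3DMark implements the test (that isn't described here); it simply assumes each sample is an independent random brightness value and that the displayed color is the running mean of every sample taken since the camera stopped. The function name and parameters are hypothetical.

```python
import random


def accumulate(samples_per_frame, frames, seed=0):
    """Return the running pixel estimate after each frame.

    Each sample is a random brightness in [0, 1); the running mean over all
    samples taken so far stands in for the accumulated pixel color.
    """
    rng = random.Random(seed)
    total, count, history = 0.0, 0, []
    for _ in range(frames):
        for _ in range(samples_per_frame):
            total += rng.random()
            count += 1
        history.append(total / count)
    return history


low = accumulate(samples_per_frame=2, frames=60)    # 120 samples in total
high = accumulate(samples_per_frame=12, frames=10)  # 120 samples in total

print("first frame:", low[0], high[0])    # few samples: estimates can differ a lot
print("final frame:", low[-1], high[-1])  # same total sample count: same estimate
```

With the same seed, both runs draw the same 120 samples, so they end on the same value. The per-frame sample count only changes how noisy the early frames are and how many frames it takes to get there.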

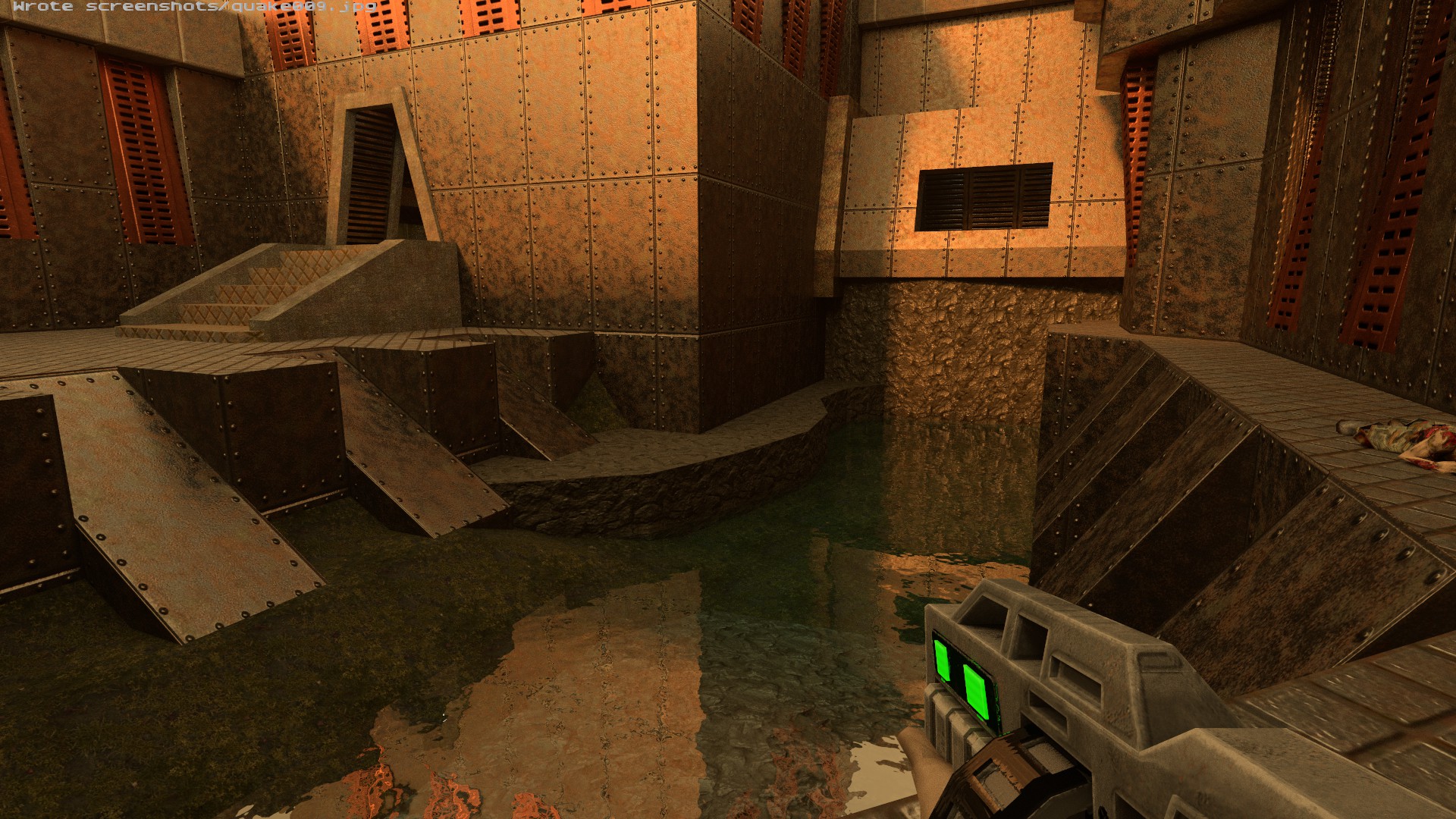

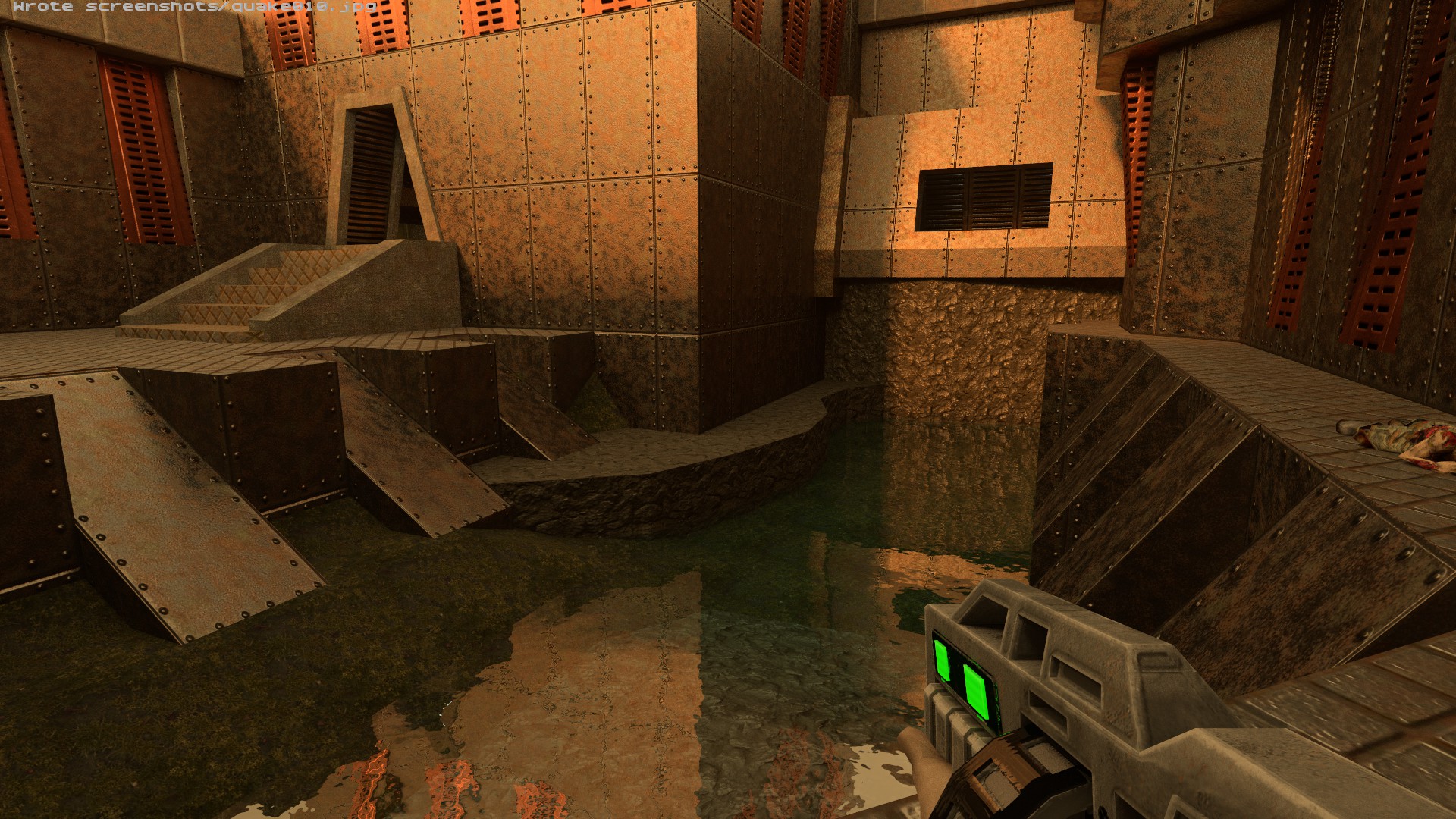

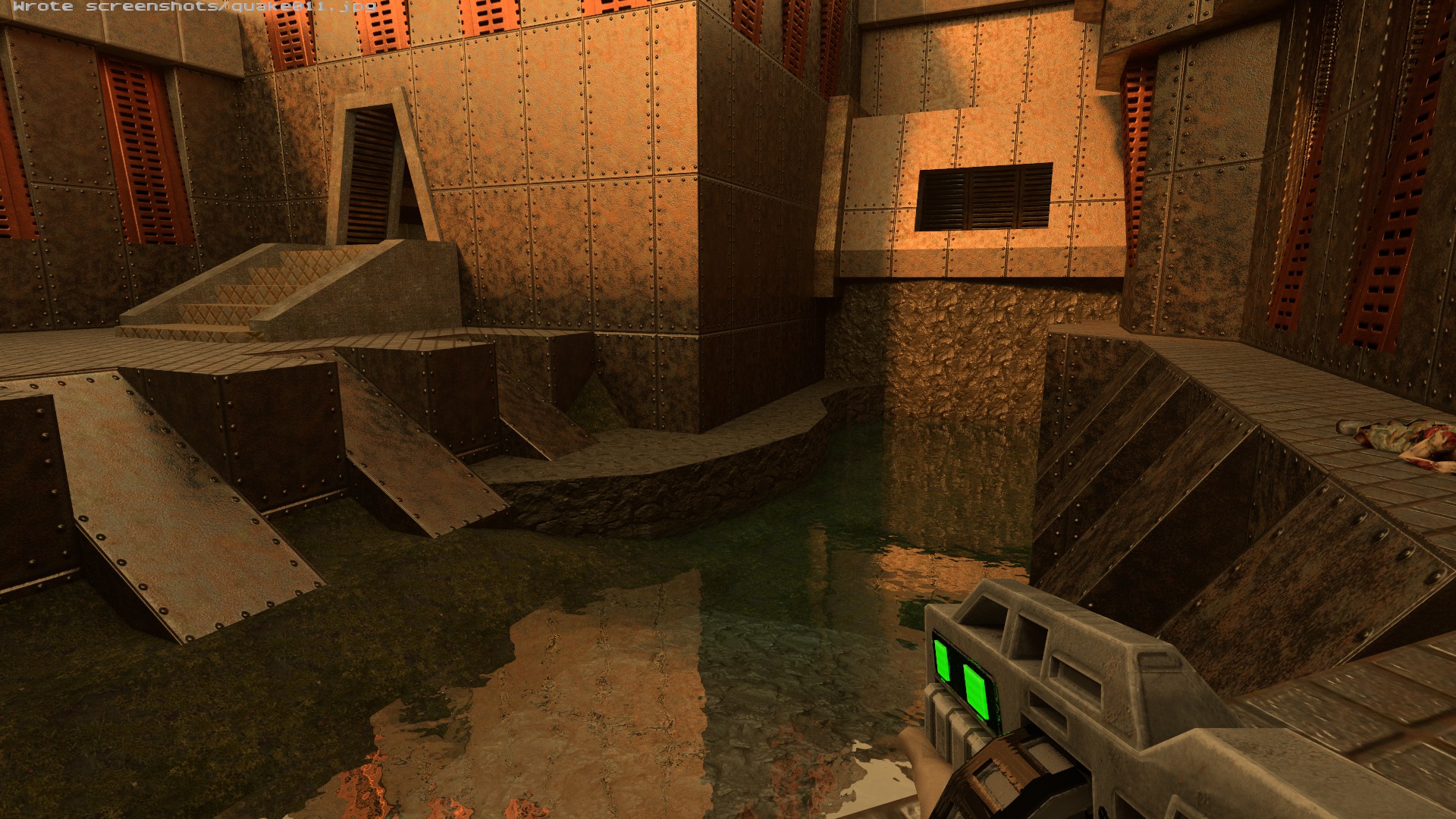

With a 2-sample setting, when the camera starts moving things look quite grainy compared to the 12-sample default, which in turn looks slightly worse than the maximum 20-sample setting. Once the camera pauses for a few seconds, however, the on-screen results start to converge quite quickly. Check out these image quality comparisons.

Gallery images, in order: 2-sample start, 12-sample start, 20-sample start, 2-sample end, 12-sample end, 20-sample end.

The above gallery shows images of the same scene right after the camera pauses, up until just before it starts moving again. The first three "start" images are thus the 'worst' quality, while the three "end" images are the 'best' quality. Note that the screengrabs are taken from video captures made with ShadowPlay, so there are some compression artifacts that you should ignore. This was to make capturing the same frame for each setting easier. (These results are from an RTX 2080 Ti.)

The video compression artifacts obfuscate a lot of the additional noise you'll see when a scene is in motion, particularly on the 2-sample setting. However, check out how similar the images look given even a small amount of time for an image to stabilize. The three 'end' results are all basically the same, with the only difference being how the randomized sampling plays out. The biggest factor by far, however, is performance.

Using an RTX 2080 Ti, the average performance for the default 12 samples is 30.2 fps. Dropping down to two samples per pixel, performance jumps to 165 fps, while increasing the sample count to 20 samples per pixel cuts performance to just 18.1 fps. In other words, the number of samples per pixel per frame directly impacts performance, almost linearly.
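A quick way to check the "almost linearly" claim is to multiply samples per pixel by frames per second for each setting. The sketch below uses only the three RTX 2080 Ti results quoted above; the helper names are hypothetical.

```python
# Samples per pixel -> average fps on an RTX 2080 Ti (figures from the article).
results = {2: 165.0, 12: 30.2, 20: 18.1}


def samples_per_second(spp, fps):
    """Samples accumulated per pixel each second of wall-clock time."""
    return spp * fps


def ms_per_sample(spp, fps):
    """Frame time in milliseconds divided by the samples in that frame."""
    return 1000.0 / fps / spp


for spp, fps in sorted(results.items()):
    print(
        f"{spp:>2} spp: {samples_per_second(spp, fps):6.1f} samples/pixel/s, "
        f"{ms_per_sample(spp, fps):.2f} ms per sample"
    )
```

The 12-sample and 20-sample settings both work out to about 362 samples per pixel per second (roughly 2.76 ms per sample), while the 2-sample setting manages 330 (about 3.03 ms per sample), which suggests a small fixed per-frame cost on top of the sampling work. It also fits the earlier observation that all three settings converge to similar quality after the same amount of time.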

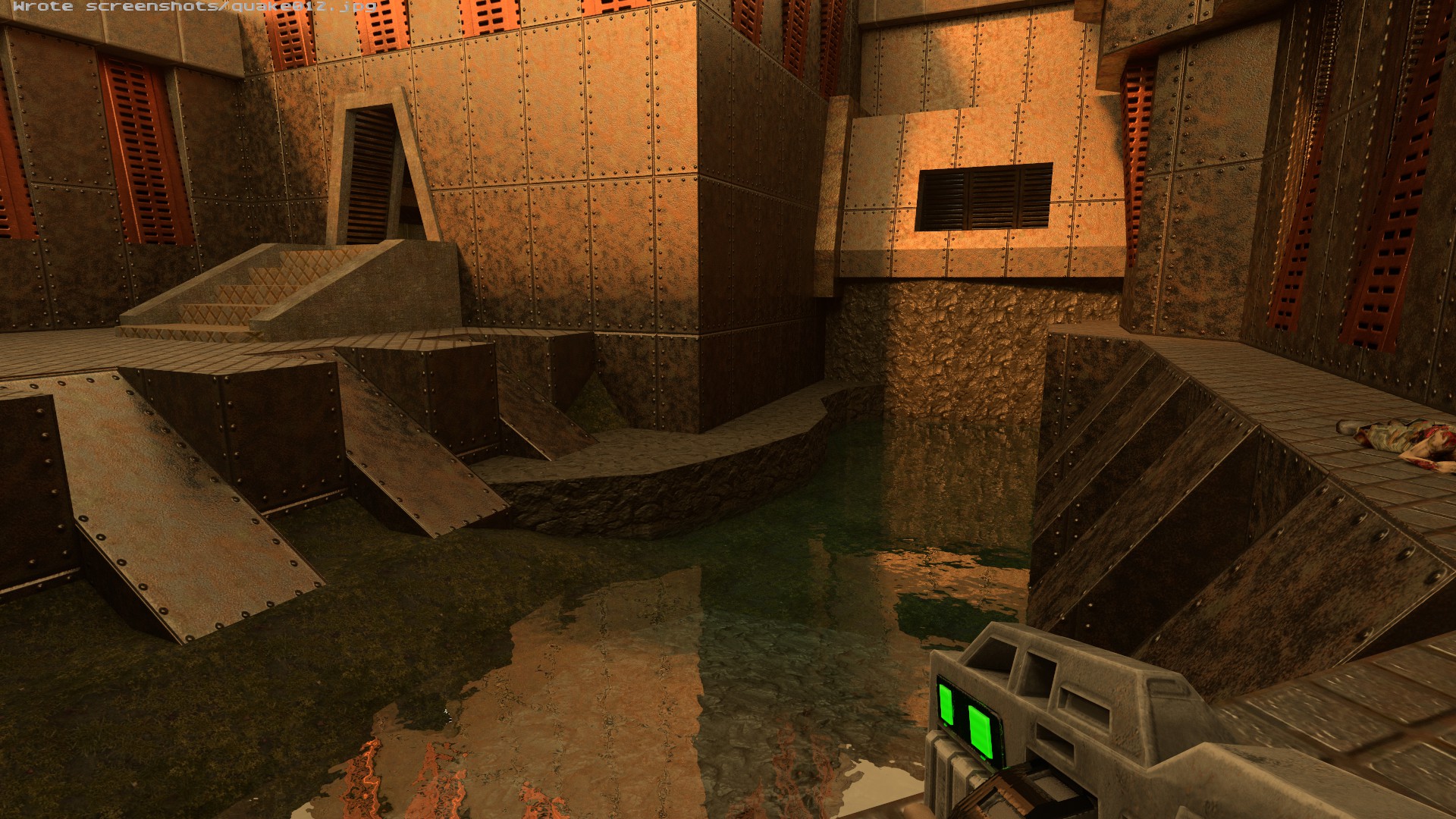

What I want to see is a deep learning derived denoising algorithm running on top of the various sample counts. Sure, the 2-sample image quality is quite noisy by default, but then so is Quake II RTX. Look at the following as an example:

Full size denoised image | Full size 'noisy' image

The 'noisy' image runs 15-25 percent faster, but the denoised version is far more useful if you're actually trying to make a playable game. The goal then is to do enough samples to get an image that can be converted into a nicer looking result. Think DLSS, but instead of upscaling it would remove noise and interpolate between the various pixels in a consistent fashion.

The number of randomized samples per pixel required to get a near-perfect path tracing result is typically in the hundreds. That's not going to happen any time soon for real-time gaming applications. However, Quake II RTX allows you to adjust the number of samples it uses for the photo mode, ranging from 100 up to 8000.

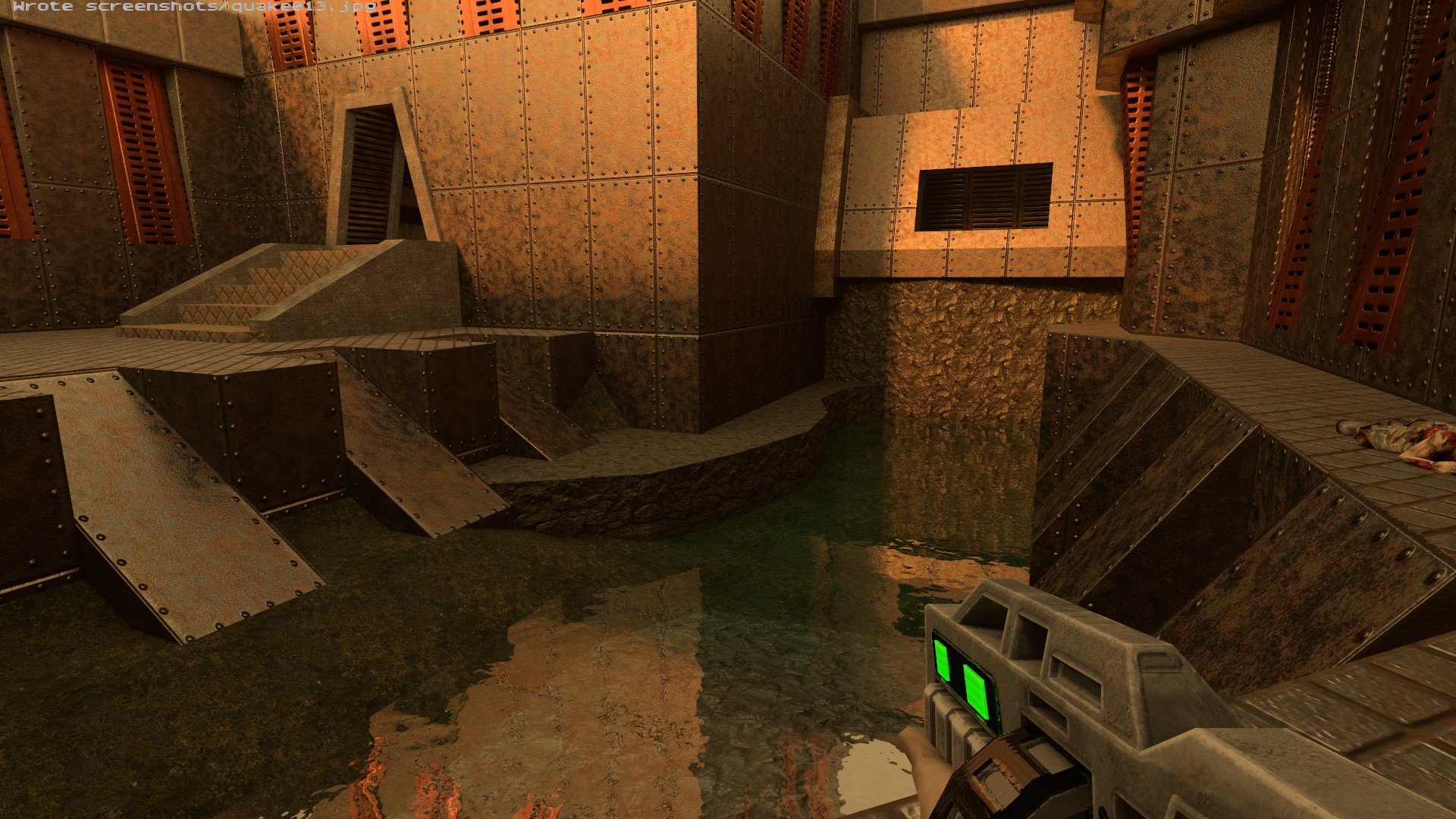

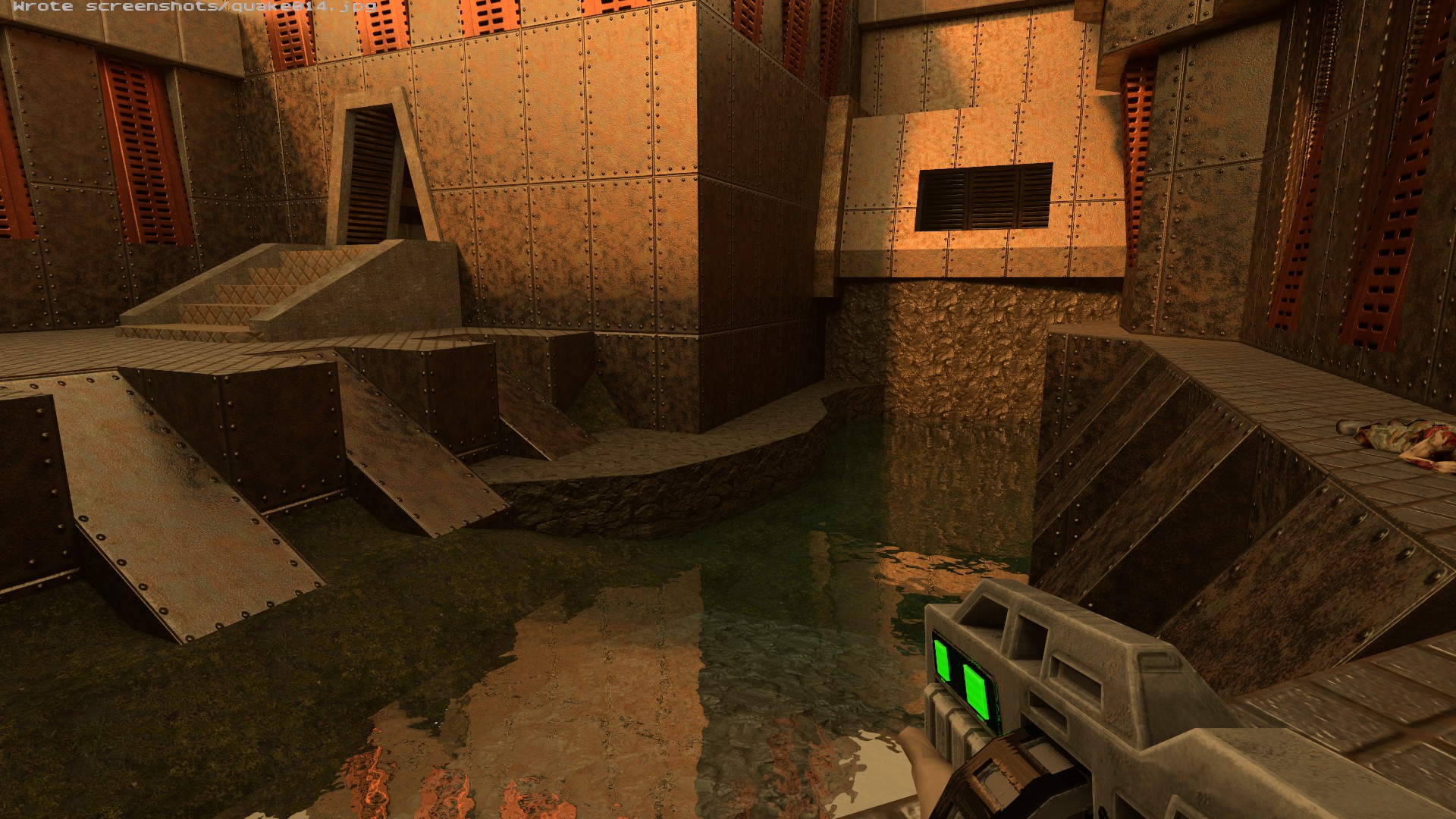

Gallery images, in order: noisy real-time, denoised real-time, and photo mode at 100, 200, 400, 800, 1600, and 8000 samples.

The maximum takes about a minute to render a single frame at 1080p, while 100 samples can be done quite quickly. The image quality meanwhile doesn't change much beyond the baseline 100 samples, though it would probably matter more if you had a more complex game to render than Quake II.
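One way to see why the extra samples buy so little: for an unbiased Monte Carlo estimate with independent samples, the noise falls off with the square root of the sample count. The sketch below applies that general rule to the photo mode settings. It is an idealized assumption, not a measurement of Quake II RTX, and the function name is hypothetical.

```python
import math

PHOTO_MODE_SAMPLES = [100, 200, 400, 800, 1600, 8000]


def relative_noise(samples, baseline=100):
    """Expected noise level relative to the baseline sample count.

    Assumes the standard Monte Carlo result that error scales as 1/sqrt(N).
    """
    return math.sqrt(baseline / samples)


for n in PHOTO_MODE_SAMPLES:
    print(f"{n:>5} samples: {relative_noise(n) * 100:5.1f}% of the 100-sample noise")
```

Under that assumption, going from 100 to 400 samples only halves the noise, and 8000 samples (80 times the work) still leaves about 11 percent of it.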

The choice is more samples, lower performance, and higher image quality; or fewer samples, better performance, and lower image quality. Finding a good balance between quality and performance is all we need for games to make use of higher quality ray traced rendering techniques. Outside of the photo mode, Quake II RTX uses only a few samples per pixel and can easily reach playable performance on RTX graphics cards.

Of course, there are several remaining questions. First, for denoising, would it require Nvidia's Tensor cores, or could it be done via FP16 on regular GPU shaders (making it compatible with AMD GPUs)? And how much would it impact performance?

More important is the question of whether fully ray traced games or graphics even matter. Current ray tracing games typically use a hybrid rendering approach, with rasterization handling most of the underlying graphics, and ray tracing effects only used for things like reflections, shadows, or global illumination. But if hybrid rendering can achieve the same visual result while running substantially faster, isn't that the best overall result?

One concern is that hybrid rendering requires all the code for traditional rasterization, plus code for ray tracing, and that means potentially more work for the game developers. Then again, with support for ray tracing built into Unreal Engine and Unity, that's less of a problem than you might think. Regardless, we're not likely to leave rasterization behind any time soon.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

hotaru.hino

"One concern is that hybrid rendering requires all the code for traditional rasterization, plus code for ray tracing, and that means potentially more work for the game developers. Then again, with support for ray tracing built into Unreal Engine and Unity, that's less of a problem than you might think. Regardless, we're not likely to leave rasterization behind any time soon."

GPU designers could also allocate more silicon for ray tracing hardware to improve performance there, but that would come at the cost of shaders, if we assume the same transistor count. But then comes a problem: if it's balanced it out too far towards ray tracing, we'll start regressing in rasterized only performance.

-

nicoff

Spent many many hours in the late 80s watching my Amiga of the day (020 then 030 upgraded) grind away ray tracing a single image. The holy grail of gaming was to ray trace a game at a playable frame rate. At those Amiga resolutions (SD PAL), extrapolating from the 1440P benchmarks, that we are there today!!! Obviously, our expectations have shifted to 1440P or 4k with HDR (and ultra wide for me) and there is a need to add a game engine on top of the ray tracing, but, it seems after waiting decades we are within a generation or two of GPUs being able to ray trace in real time, at modern refresh rates (100+fps), at consumer-level price points.

Bring it on!!!!

-

sizzling

nicoff said: Spent many many hours in the late 80s watching my Amiga of the day (020 then 030 upgraded) grind away ray tracing a single image. The holy grail of gaming was to ray trace a game at a playable frame rate. At those Amiga resolutions (SD PAL), extrapolating from the 1440P benchmarks, that we are there today!!! Obviously, our expectations have shifted to 1440P or 4k with HDR (and ultra wide for me) and there is a need to add a game engine on top of the ray tracing, but, it seems after waiting decades we are within a generation or two of GPUs being able to ray trace in real time, at modern refresh rates (100+fps), at consumer-level price points. Bring it on!!!!

We are already there. With my 3080 I can have RT on at 1440p and hit 100+ FPS. Even at 4K it stays over 60 in the games I tested.

-

nicoff

sizzling said: We are already there. With my 3080 I can have RT on at 1440p and hit 100+ FPS. Even at 4K it stays over 60 in the games I tested.

Sorry I should have been clearer. I meant pure ray tracing, not hybrid, as per the current crop of games.

-

digitalgriffin

Right now its akin to the early days of 3d engines and the early days of vr. Starting requirements are low as hardware catches up. But there's a logarithmic like curve on complexity that grows quickly in the beginning.

We used to think a voodoo 2 would keep us ready for years after quake. We thought a R290 which maxed out steam vr test would surfice long term.

Todays cards will become quickly outdated. But if you choose one, go for as much power as you can. I consider the 3080 the minimum for a couple years of rt use. (3 years). After that you'll be looking at rasterized gaming. (Generally speaking on a broad sample of games)

I dont like saying that as I prefer AMD, but if Im going to spend this kind of scratch, I dont want to look at my card in 2 years and realize I cant do rt at reasonable rates even at low res. The games are already there to push the best system to its limits like Control, cyber punk 2077, and watch dogs:legion.

-

cryoburner

hotaru.hino said: GPU designers could also allocate more silicon for ray tracing hardware to improve performance there, but that would come at the cost of shaders, if we assume the same transistor count. But then comes a problem: if it's balanced it out too far towards ray tracing, we'll start regressing in rasterized only performance.

Or more likely, rasterized performance would just stall, and not improve much from one generation to the next. A bit like Nvidia's 20-series at launch, for example. Only minor improvements in standard rendering performance over the 10-series at any given price point, but with some amount of raytracing hardware added. For that generation, the tradeoff did not feel particularly worthwhile, at least at launch, since there were no games utilizing the feature, and questionable performance for future games that eventually would. But if they were to do something similar for the 40 or 50 series, it might be taken better, assuming raytracing becomes a lot more common in games over the next couple years or so. If most newer big games support RT effects by that time, and improved RT hardware were to boost frame rates by 50% or more with those effects enabled, that might be viewed in a more positive light, even if the performance in games not utilizing the effects doesn't improve much.

And while RT will probably become the new standard for "ultra" graphics settings relatively soon, I suspect traditional rendering will still be supported for a number of years to come. Game publishers are not going to want to limit their sales to only those with higher-end RT hardware, and there's bound to be a large portion of systems without it for at least several years to come. And hybrid RT rendering will probably be the norm through at least this console generation. I suppose it's possible that PC releases could add the option for fully-raytraced rendering some years down the line, for newer, higher-end cards that might manage playable performance with the feature enabled, but I have some doubts that it would be worth the performance hit over a hybrid approach, for what would likely be very small improvements to visuals.

-

vinay2070

digitalgriffin said: We used to think a voodoo 2 would keep us ready for years after quake. We thought a R290 which maxed out steam vr test would surfice long term.

I remember telling my brother. Hey, I need to buy a GeForce 256. It has the capability to offload work from the CPU, that means we never have to upgrade our computers again for a long time to come. lol. What was I even thinking back then :tearsofjoy: Then there was GeForce 2, 3, 4. Once the Geforce 2/3 were out and how they enhanced gaming performance, I realised how wrong I was.

-

kyzarvs

sizzling said: We are already there. With my 3080 I can have RT on at 1440p and hit 100+ FPS. Even at 4K it stays over 60 in the games I tested.

Nice attempted flex - but as the article clearly states, there's no games 100% raytraced at the moment, only a fraction of the effects you see are 'traced.

-

spongiemaster

kyzarvs said: Nice attempted flex - but as the article clearly states, there's no games 100% raytraced at the moment, only a fraction of the effects you see are 'traced.

No new games. I'm pretty sure Quake 2 RTX is 100% path traced.

-

hotaru.hino

Now that I had a chance to run the feature test, I'm annoyed that the only prominent feature it's showing appears to be DoF. That or other light phenomena is too subtle to really notice.

spongiemaster said: No new games. I'm pretty sure Quake 2 RTX is 100% path traced.

It is, since it's based on the Quake 2 VKPT project. In addition, Minecraft RTX is also fully path traced.