AlphaGo Zero Learns To Play Go From Scratch With No Human Data

Recently, OpenAI talked about its new discovery, in which using “self-play” mechanisms to train AI agents resulted in significantly more advanced but also less complex neural networks. OpenAI noted that self-play will have a major role in the training of AI in the future, not only because the AI doesn’t need large data sets from which to learn anymore, but also because the AI can improve and achieve mastery for a given skill much more quickly.

No Human Intervention

DeepMind, an Alphabet company, has been experimenting with self-play, too. It used this mechanism to design a new version of AlphaGo, called AlphaGo Zero, which learned Go from scratch simply by playing against itself.

The previous version of AlphaGo had to learn Go by “watching” thousands of recorded amateur and professional human games. Eventually, it figured out which “winning moves” were best in most situations, and it would play those against any human opponent. For the most part, however, the older AlphaGo was still limited by human knowledge and experience. The new AlphaGo has no such limits.

Timeline For Mastery

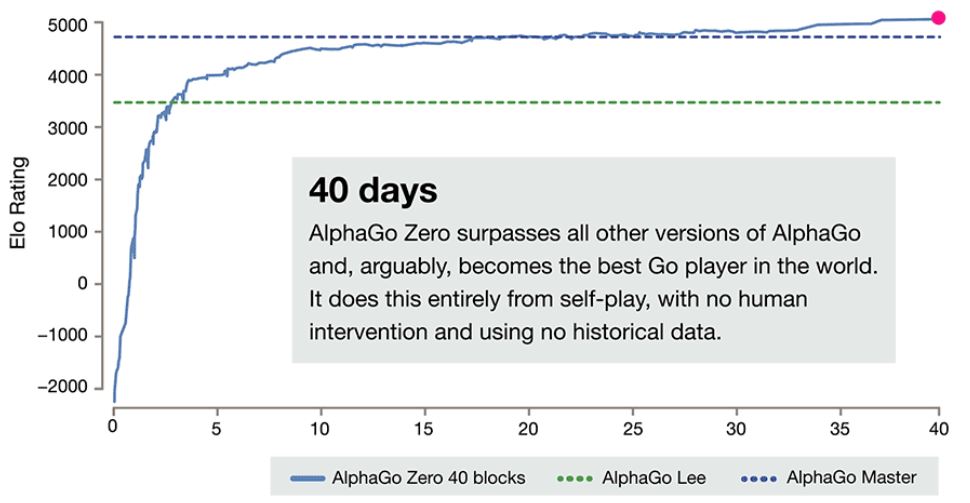

AlphaGo Zero started out making completely random moves while sparring with an equal-strength version of itself. After three hours, it played like a human beginner, greedily trying to capture as many stones as possible.

Within 20 hours, it had learned more advanced tactics that are typically used only by professional players. By the third day, AlphaGo Zero had surpassed the version of AlphaGo that defeated Lee Sedol four games to one last year, beating that version 100-0.

By day 21, it had reached the level of AlphaGo Master, the version that defeated Ke Jie 3-0 earlier this year. At the time, Ke Jie called AlphaGo a “god of Go.”

By the 40th day, AlphaGo Zero had become stronger than every earlier version of AlphaGo, including Master, which it beat 89 games to 11. Suffice it to say that it’s unlikely any human could ever hope to beat AlphaGo Zero at this point.


Differences From The Original AlphaGo

The first version of AlphaGo relied on a small number of hand-engineered features to play well. AlphaGo Zero, by contrast, takes only the black and white stones on the board as input and knows only the basic rules of where stones can be placed.

Additionally, the first AlphaGo used two neural networks: a “policy network” to select the next move to play, and a “value network,” which predicted the winner of the game from each position. The two are combined for AlphaGo Zero, allowing it to be trained more efficiently.
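To make the “one network, two outputs” idea concrete, here is a minimal Python sketch. It is not DeepMind’s code: it assumes a hypothetical toy 3x3 board encoded as +1 (black), -1 (white) and 0 (empty), and it uses a single small fully connected trunk with random weights, whereas the real AlphaGo Zero uses a deep residual convolutional network. The names `PolicyValueNet` and `combined_loss` are illustrative only. The point is that a shared trunk feeds both a policy head (move probabilities) and a value head (a win estimate in [-1, 1]), so a single training objective can update both at once.

```python
import math
import random

# Hypothetical toy sizes (assumptions, not AlphaGo Zero's real dimensions).
BOARD_CELLS = 9              # 3x3 board, flattened
HIDDEN = 16                  # width of the shared trunk
NUM_MOVES = BOARD_CELLS + 1  # one move per point, plus "pass"


def dense(inputs, weights, biases):
    """Fully connected layer: weights @ inputs + biases."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(xs):
    return [max(0.0, x) for x in xs]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class PolicyValueNet:
    """One network with two heads: move probabilities and a win estimate."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Shared trunk: both heads read the same learned features.
        self.w_trunk = random_matrix(HIDDEN, BOARD_CELLS, rng)
        self.b_trunk = [0.0] * HIDDEN
        # Policy head: one logit per possible move.
        self.w_policy = random_matrix(NUM_MOVES, HIDDEN, rng)
        self.b_policy = [0.0] * NUM_MOVES
        # Value head: one scalar squashed into [-1, 1] by tanh.
        self.w_value = random_matrix(1, HIDDEN, rng)
        self.b_value = [0.0]

    def __call__(self, board):
        features = relu(dense(board, self.w_trunk, self.b_trunk))
        policy = softmax(dense(features, self.w_policy, self.b_policy))
        value = math.tanh(dense(features, self.w_value, self.b_value)[0])
        return policy, value


def combined_loss(policy, value, target_policy, outcome):
    """Joint objective: squared value error plus policy cross-entropy.
    (The published loss also adds weight regularization, omitted here.)"""
    value_loss = (outcome - value) ** 2
    policy_loss = -sum(t * math.log(p)
                       for t, p in zip(target_policy, policy) if t > 0)
    return value_loss + policy_loss
```

For example, `PolicyValueNet(seed=1)([1, 0, -1, 0, 1, 0, 0, -1, 0])` returns ten move probabilities that sum to 1 and a single value between -1 and 1, both computed in one forward pass.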

AlphaGo Zero also does away with “rollouts.” These were fast, random games played out from an existing position so that the agent could estimate which move was best at that point. AlphaGo Zero instead relies on its own neural network to evaluate positions and guide its search for the best move in any scenario.
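The difference between the two evaluation styles can be sketched in Python on a stand-in game. This is a hedged illustration, not Go and not AlphaGo’s code: it assumes a toy take-away game (remove 1 to 3 stones, whoever takes the last stone wins), and `value_net` is a hypothetical stand-in for a trained value network that simply hard-codes the known answer for this toy game. It also omits the Monte Carlo tree search that AlphaGo Zero still runs on top of its network.

```python
import random

# Toy stand-in game (an assumption for illustration, not Go): each turn a
# player removes 1-3 stones; whoever takes the last stone wins.


def legal_moves(stones):
    return [k for k in (1, 2, 3) if k <= stones]


def random_rollout(stones, rng):
    """Play random moves to the end. Returns +1 if the player to move at the
    start wins, -1 otherwise."""
    player = 0  # 0 = the player to move at the starting position
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return 1 if player == 0 else -1
        player ^= 1
    # Empty pile at the start: the opponent already took the last stone.
    return -1


def rollout_value(stones, num_rollouts, seed=0):
    """Rollout-style estimate: average the results of many random playouts."""
    rng = random.Random(seed)
    results = [random_rollout(stones, rng) for _ in range(num_rollouts)]
    return sum(results) / num_rollouts


def value_net(stones):
    """Hypothetical stand-in for a trained value network: one call, no
    playouts. For this toy game the exact answer is known (the player to
    move loses when the pile is a multiple of 4), so it is hard-coded."""
    return -1.0 if stones % 4 == 0 else 1.0


def best_move(stones, evaluate):
    """Pick the move that leaves the opponent in the worst position."""
    return max(legal_moves(stones), key=lambda k: -evaluate(stones - k))
```

`best_move(5, value_net)` returns 1, leaving the opponent a losing pile of 4, after a single evaluation per candidate move. Passing `lambda s: rollout_value(s, 500)` instead gives a rollout-based player that needs hundreds of simulated games per candidate and still only gets a noisy estimate.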

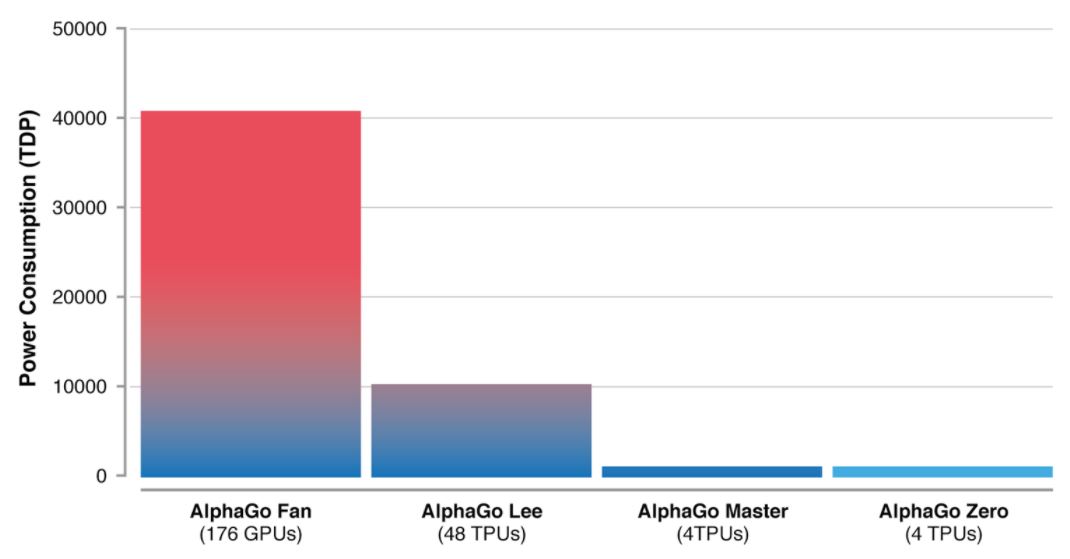

The first version of AlphaGo ran on 176 GPUs, with a combined TDP of about 40,000W (40 kW). The newest versions needed only four Tensor Processing Units (TPUs) to play. This large increase in efficiency is due both to algorithmic changes in how AlphaGo works and to the latest TPUs being much more efficient than older Nvidia GPUs.

Thousands Of Years Of Knowledge Achieved In Days

AlphaGo Zero showed that AI has gotten to the point where it could learn skills in a matter of days or weeks that took humans hundreds or thousands of years to master. DeepMind believes that this sort of technology could be a multiplier for human ingenuity.

Similar techniques could be applied to protein folding, reducing energy consumption, or searching for revolutionary new drugs and materials. All of these could ultimately have a large positive impact on society (especially if DeepMind can keep its AI under control).

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


velocityg4 One more step to Skynet or HAL. Perhaps they should institute Isaac Asimov's Three Laws.

chicofehr "All of these could ultimately have a large positive impact on society"

More accurately, everyone gets laid off and the big executives get their big bonuses for cutting costs. Everyone wins.

Kelavarus (quoting an earlier comment): "Stop that, AlphaGo, you'll go blind!"

Haha, dang it! I was going to say something similar.

"DeepMind, an Alphabet company, has been experimenting with self-play, too."

I've been doing this for years!

DerekA_C When are we going to start to think that Elon Musk is right about AI? Even in a benign scenario, it will not be too beneficial for the average person. Like someone said above, a lot of people in almost every field of work will be let go due to the fast logical thought process and design ability these AIs are going to be capable of. I don't feel too safe with the thought of a machine that can't be controlled and can easily create its own language, which already happened with Facebook's and Microsoft's AI. It's only a matter of time before we lose control over every network.

woodsen The human mind is based on, built on, dualism...'to be or not to be', idealism, where the thought is dividing itself, creating a contradictory state between 'what is and what is not'...the abstract and the real. AlphaGo Zero seems to approach questions with absolute logic, thus eliminating everything but the factual.