AMD Says Some Gamers Don't Care About GPU Power Consumption, Fixes Underway (Updated)

Fixes are in the works, but some gamers don't mind.

Edit 8/31/2023 2:20pm PT: Clarified Herkelman's statements and added quote.

Amended article:

In an interview with Club386, AMD's Scott Herkelman shared insight into the company's perception of GPU power consumption and how it believes gamers prioritize power consumption in their graphics cards. According to Herkelman, AMD believes GPU power consumption is important in the laptop segment. He goes on to note that on desktop, some gamers care about power efficiency while others don't care as much.

"We have a company initiative in driving good performance per watt across our portfolio of products. We look at perf per watt on every chart when bringing all chips to market. In notebooks, it matters greatly. In desktop, however, it matters, but not to everyone. There are some people who are really concerned about power, others don’t care as much. We definitely want to make a better perf-per-watt chip, [...]" said Herkelman.

"Power is definitely a prime initiative. You’ll see us over time get better and better at it. We need to catch up. There are still bugs that we need to fix. Idle power is one of them. We believe there is a driver fix for it. It’s a wonky one, actually. We do see some inconsistencies with idle power that shouldn’t be there, and we’re working with our partners to maybe help with that. It will be cleaned up, but it might take a bit more time," he continued.

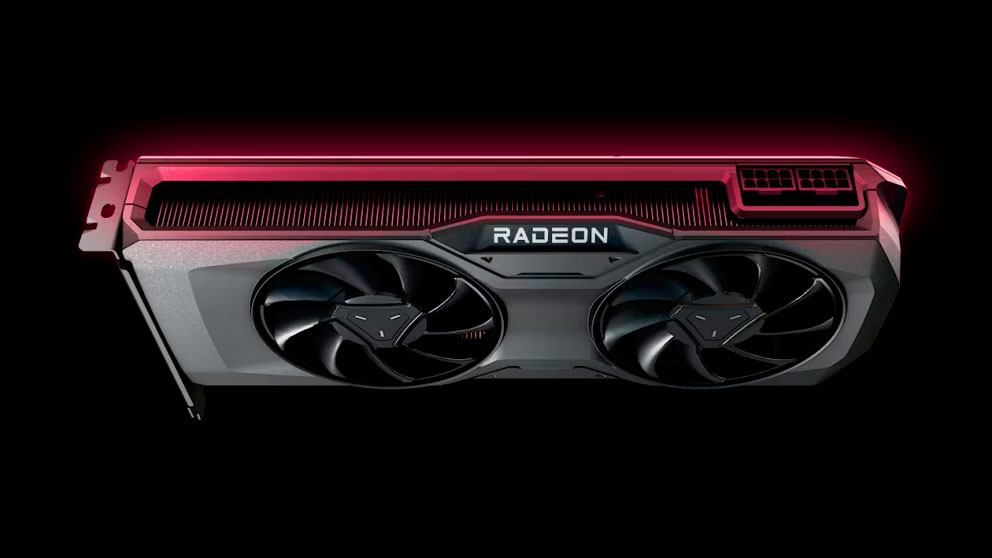

Herkelman's answer came in response to a question from Club386 about how concerned AMD is about RDNA3's weaker energy efficiency compared to Nvidia's RTX 40 series GPUs and the Ada Lovelace GPU architecture. Club386 specifically gave the example of the RX 7700 XT compared to the RTX 4070. The former is rated at 245W of power consumption versus the latter's 200W TGP. The RX 7700 XT also reportedly performs worse, targeting the RTX 4060 Ti.

Herkelman also discussed AMD's thought process behind energy efficiency and how it plays out in GPU development. Despite his claim that some gamers don't care much about power consumption, Herkelman says that AMD strives for good performance per watt across all its products, noting that AMD looks at perf per watt on every chart when it brings chips to market. He continues by saying that making a more power-efficient chip also leads to a more affordable board design, enabling AMD to reduce graphics card prices or increase graphics card performance.


Herkelman's assessment has some merit; some gamers don't care about power consumption and would rather have as much performance as possible, no matter the cost. But we wonder how much of that assessment is due to AMD lagging behind Nvidia in the efficiency race.

For some perspective, Nvidia's RTX 40-series graphics cards generally consume far less energy than AMD's RX 7000 series competitors. Nvidia's RTX 4080, for instance, has a 320W power rating but typically only uses around 300W in gaming, according to our tests. On the other hand, AMD's RX 7900 XTX has a higher 355W power rating and uses around 351W in gaming workloads in our testing. You'll see this trend across Nvidia's entire RTX 40-series product stack, down to the RTX 4060 versus the RX 7600.
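To put the measured gap in context, here is a minimal sketch of the arithmetic. The two wattage figures come from our testing above; the daily gaming hours and the electricity price are assumptions picked purely for illustration, and the function names are hypothetical.

```python
# Measured gaming power draw quoted above, in watts.
RTX_4080_GAMING_W = 300
RX_7900_XTX_GAMING_W = 351

# Assumptions for illustration only; neither value comes from the article.
HOURS_PER_DAY = 3.0    # assumed daily gaming time
PRICE_PER_KWH = 0.15   # assumed electricity price in USD


def annual_energy_kwh(watts, hours_per_day, days=365):
    """Energy used over a year, in kilowatt-hours, at a constant power draw."""
    return watts * hours_per_day * days / 1000.0


def annual_cost(watts, hours_per_day, price_per_kwh, days=365):
    """Yearly cost of that energy at a flat price per kilowatt-hour."""
    return annual_energy_kwh(watts, hours_per_day, days) * price_per_kwh


# Difference between the two cards under the assumed usage pattern.
delta_w = RX_7900_XTX_GAMING_W - RTX_4080_GAMING_W
extra_kwh = annual_energy_kwh(delta_w, HOURS_PER_DAY)
extra_cost = annual_cost(delta_w, HOURS_PER_DAY, PRICE_PER_KWH)

print(f"Extra draw while gaming: {delta_w} W")
print(f"Extra energy per year:   {extra_kwh:.1f} kWh")
print(f"Extra cost per year:     ${extra_cost:.2f}")
```

Under these assumed values, the 51W difference works out to roughly 56 kWh and a bit over $8 per year. Heavier use or pricier electricity scales the result proportionally.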

Thankfully, AMD's competitive prices offset most of RDNA3's power disadvantages. Still, we can't help but wonder what AMD's opinion would be if it led the power efficiency race like it once did with the RX 6000 series.

While it's true that desktop gamers generally don't care a lot about GPU power consumption, opinions on the subject are not as cut and dried as they once were. Since the introduction of 350W and 450W GPUs a few years ago, power consumption has had a much bigger impact on gamers and DIY builders. If you want to build a system with one of these power-hungry GPUs, you'll need to ensure your power supply has enough headroom to run the card, or you'll run into problems.

You must also ensure that your case can handle a 350W or 450W graphics card from a physical and thermal perspective. Graphics cards with these very high power ratings put out substantially more heat and need more physical space inside a case due to their extra-large triple-slot and quadruple-slot coolers. This is even more problematic in mini-ITX systems, which are much smaller than standard ATX and micro-ATX machines.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

-Fran- They're not wrong though...

Even when* they've been more efficient, the overwhelming majority of gamers will still choose what they want first as long as they can power it.

Personally, I do like more efficient designs and nVidia has always had an advantage there, but as long as the TBP is under 300W, it's ok for me as long as it hits the performance target I want.

EDIT: Please put the relevant quote as well: "In desktop, however, it matters, but not to everyone. There are some people who are really concerned about power, others don’t care as much. We definitely want to make a better perf-per-watt chip."

It feels disingenuous to not do so. Specially when the title is an absolute stretch to what he really says.

EDIT2: Thanks for the update :)

Regards. -

kjfatl Personally I care more about the average power, particularly when the PC is on, but a game is not being played.

Peak power doesn't mean a lot if it is only happening 5% of the time. -

PBme Reviewers and a few folks who are dealing with existing power supply actually care. Many care in theory. But my guess is almost all who are buying a card mainly for gaming put most weight into performance for what they are willing to spend and the least weight on power consumption. I wager that aesthetics of different cards makes much more of a difference in buying decisions than their relative watts to fps does. -

hotaru251 i mean...they arent wrong in states where power is cheap.

the dif between nvidia and amd is gonna be dollars over a yr.

we generally already expect "high" power as we have gaming PC's & a/c to keep room cool. -

PBme

hotaru251 said: i mean...they arent wrong in states where power is cheap. the dif between nvidia and amd is gonna be dollars over a yr. we generally already expect "high" power as we have gaming PC's & a/c to keep room cool.

And almost none are actually going to take the time to try to estimate what the annual cost difference is going to be for them (if they are even paying the power bill). -

punkncat Just being honest. I didn't care. Then I built a "powerful" system that produced loads of heat with that power. It made the office/game area too hot to sit in during summertime, particularly while playing or running the powerful PC as a work box while another PC (and person) were in that room with me.

We mitigated parts of it with AC adjustments and a well placed fan or two, but one of my major concerns now has to do with heat generation due to capability. -

-Fran- Also, people. Don't confuse "efficient" and "max power". They're related, but not the same thing.

If you target a certain performance level, two cards can hit it at different power levels, but your choice will be driven by price. If you need to upgrade your PSU, it'll add to the cost of upgrade and it'll probably be a no-go. If it adds too much to the power bill and you mind, etc...

If you already own a PSU that can power a 300W card, then you can buy whatever GPU that is under that, no? The difference will come down to price and performance.

Gotta be practical and not cynical.

Regards. -

vanadiel007 You buy a video card based on performance, not power consumption unless you are concerned about the power draw and have to include a PSU upgrade to power your new card.

Even then, most just get a new PSU rather than downgrading performance to a lesser power consuming model. -

King_V

-Fran- said: Personally, I do like more efficient designs and nVidia has always had an advantage there,

Ok, nitpick on this, they've actually traded blows over the years. Nvidia most certainly has not always had the advantage.

That aside, while my preferences generally run AMD, and they're likely not wrong when it comes to gamer sentiment, my own view is "ehh, come on, guys, this is NOT a good look for you."

Or:

-

InvalidError

vanadiel007 said: You buy a video card based on performance, not power consumption unless you are concerned about the power draw and have to include a PSU upgrade to power your new card.

For people who have to rely on window ACs in summer, higher power may also require an AC upgrade that isn't necessarily possible - I cannot fit anything much bigger than what I already have in my narrow windows. I doubt a bigger AC would fit within the circuit breaker budget either.