AMD to Expand ROCm Support to Pro and Consumer RDNA 3 GPUs This Fall

Currently ROCm support doesn’t go beyond a few RDNA 2 workstation GPUs.

AMD has announced the release of Radeon Open Compute platform (ROCm) 5.6, the latest version of its open source platform for GPU Compute. If you are looking to buy a contemporary GPU, ROCm only officially supports AMD Instinct accelerators and a few RDNA 2 workstation graphics cards. However, AMD is responding to feedback about the challenges of using ROCm on other AMD GPUs and has decided to widen its support. Sometime this fall, AMD will expand ROCm support to more RDNA 2 GPUs and select AMD RDNA 3 workstation and consumer GPUs.

Consumer interest in GPU Compute has grown considerably due to breakthroughs in artificial intelligence and machine learning. AI acceleration has transformed from ‘why would I want that’ into a highly desirable feature. Perhaps this change in the computing universe has taken some by surprise. AMD, for example, includes AI acceleration solely in its Ryzen 7040 Phoenix chips for consumers. In contrast, mobile processor makers have been adding and tuning AI acceleration for several generations now.

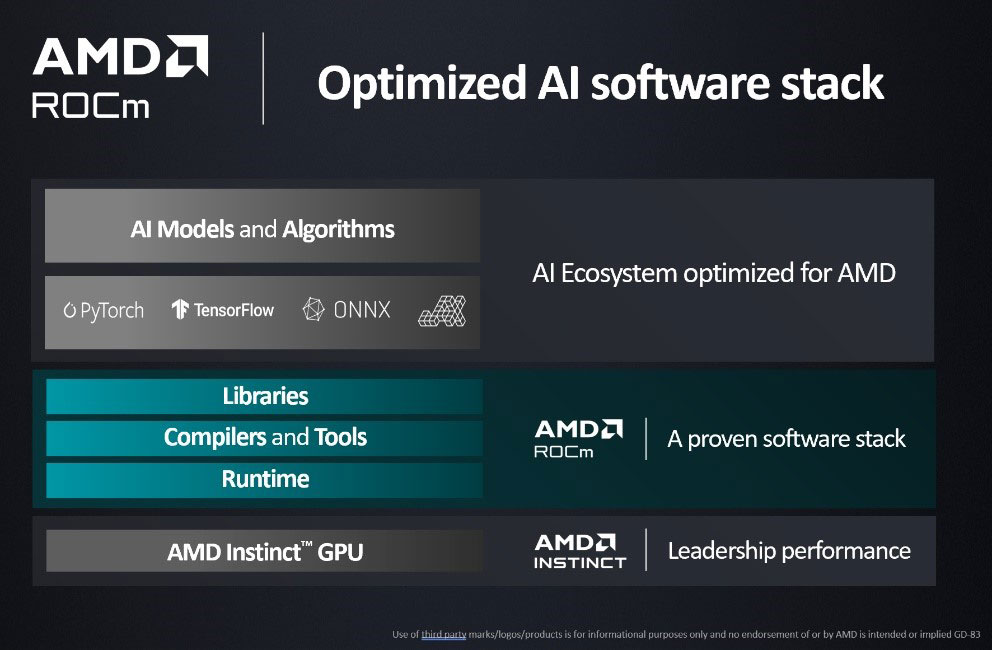

AMD ROCm is said to deliver a comprehensive suite of optimizations for AI and HPC workloads. It boasts “fine-tuned kernels for large language models, support for new data types, and support for new technologies like the OpenAI Triton programming language.”

Initial support for ROCm on RDNA 3 GPUs is due in the fall. The first such graphics cards to get official support will be the 48GB Radeon PRO W7900 and the 24GB Radeon RX 7900 XTX, according to the AMD Community blog. Support for additional RDNA 3 cards and expanded capabilities will be added over time.

In the meantime, AMD’s newest ROCm 5.6 release sounds like it will be less fussy about which GPUs it will run on. AMD says that it has heard of community members grumbling about driver issues on unsupported GPUs, but that it has “fixed the reported issues in ROCm 5.6 and we are committed to expanding our support going forward.”


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.


digitalgriffin

I made this response a couple months back. The fact CUDA couldn't be easily mapped on consumer AMD GPUs was a nightmare. And AI uses CUDA.

Will this create another GPU shortage? Only if CUDA ports to consumer hardware are successful.

bit_user

digitalgriffin said: I made this response a couple months back. The fact CUDA couldn't be easily mapped on consumer AMD GPUs was a nightmare. And AI uses CUDA.

Not necessarily. AI developers are using frameworks like PyTorch and TensorFlow. Sure, some use custom layers written using CUDA, but the more popular models will tend to have all their needs catered for by the layers that already ship with these frameworks.

Also, AMD has their CUDA clone, called HIP. They have tools which help you convert your CUDA code to HIP. So, existing CUDA users do have a migration path. Intel did something similar, except they're using SYCL (an open standard - more credit to them!).

bit_user

What bothers me about this is all the APU users with GCN that are being left out in the cold.

And I get that AMD needs to focus on its new products, but if they really wanted to help sell off their backlog of RDNA2 inventory, they'd also add official support for all of those cards.

digitalgriffin

bit_user said: Not necessarily. AI developers are using frameworks like PyTorch and TensorFlow. Sure, some use custom layers written using CUDA, but the more popular models will tend to have all their needs catered for by the layers that already ship with these frameworks. Also, AMD has their CUDA clone, called HIP. They have tools which help you convert your CUDA code to HIP. So, existing CUDA users do have a migration path. Intel did something similar, except they're using SYCL (an open standard - more credit to them!).

CUDA has a stranglehold on AI. Trust me on this. A number of the biggest libraries use its underpinnings.

It is possible to port over with some mods using HIP (as you said), but it was a pain in the duckass. I'm hoping more will work on it now that AMD is opening ROCm up to the consumer GPU space.

bit_user

digitalgriffin said: CUDA has a stranglehold on AI. Trust me on this. A number of the biggest libraries use its underpinnings.

Yes, the popular deep learning frameworks all have cuDNN backends, but they also have backends for other hardware.

Nvidia's biggest advantage is its performance, followed by their level of software support. Given how expensive they are and how scarce they've become, even those advantages are no longer enough to keep their competitors shut out of the market.

digitalgriffin said: It is possible to port over with some mods using HIP (as you said), but it was a pain in the duckass. I'm hoping more will work on it now that AMD is opening ROCm up to the consumer GPU space.

It's not only a 2-horse race!