AMD Debuts FidelityFX Super Resolution to Take on DLSS at Computex

It's been a long wait for AMD's DLSS competitor.

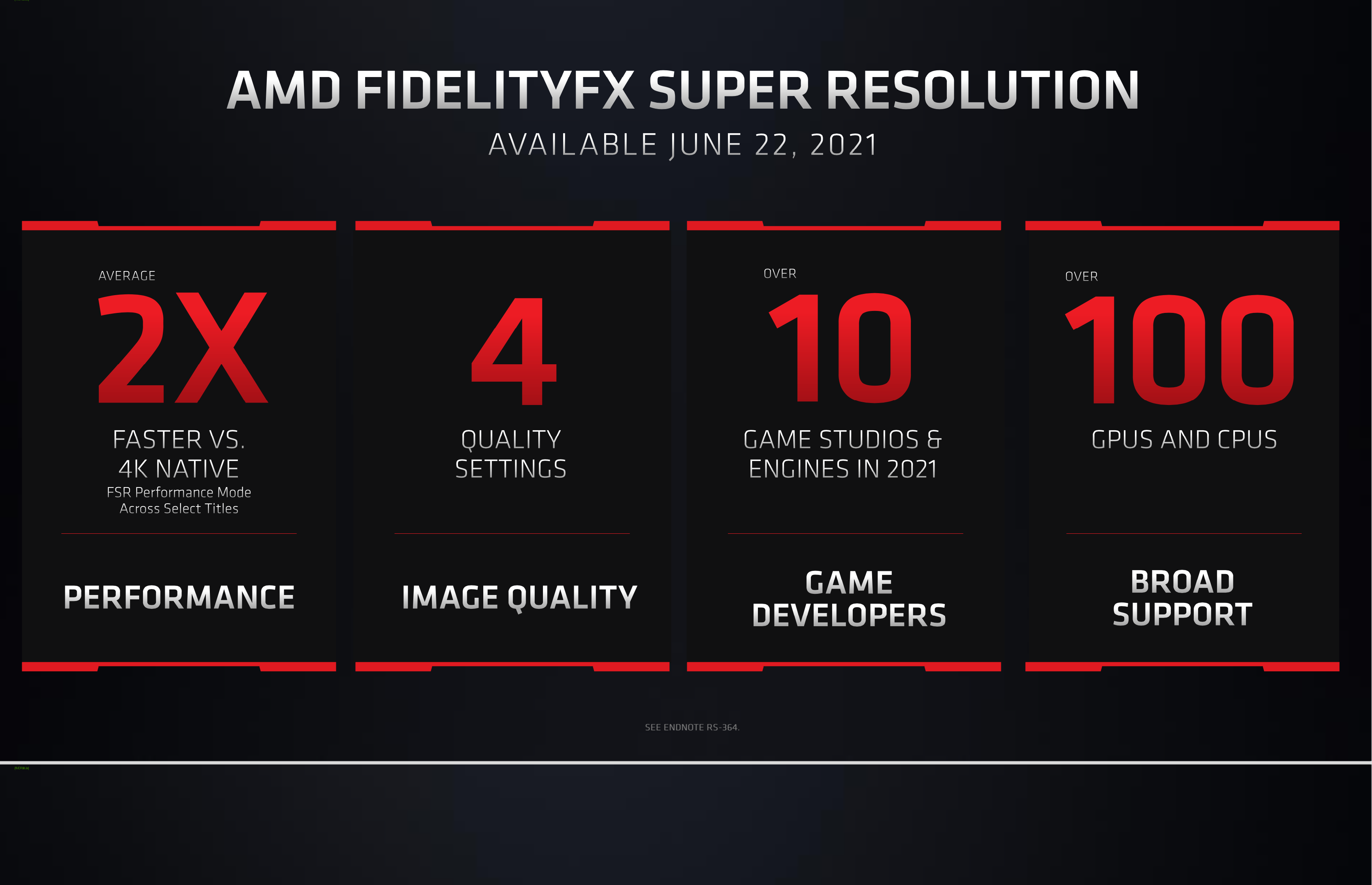

After a long wait, AMD has finally introduced FidelityFX Super Resolution (AMD FSR), the company's upscaling technology to rival Nvidia's machine learning-powered DLSS. It was introduced during AMD chief executive Dr. Lisa Su's virtual keynote address at Computex, which is being held online this year. The new feature will launch on June 22.

AMD promises that FSR will deliver up to 2.5 times higher performance in "select titles" when using the dedicated performance mode. At least ten game studios will integrate FSR into their games and engines this year, and the first titles should show up this month. The company also detailed FSR's roots in open source: the feature is part of AMD's GPUOpen suite.

FSR has four presets: ultra quality, quality, balanced and performance. The first two focus on higher quality by rendering at closer to native resolution, while the latter two trade image quality for as many frames as possible. FSR works on both desktops and laptops, as well as both integrated and discrete graphics.
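To make the trade-off concrete, here is a rough sketch of the internal render resolution each preset would imply for a 4K output. The per-axis scale factors (1.3, 1.5, 1.7 and 2.0) are an assumption taken from AMD's later FSR 1.0 documentation rather than from the keynote, and `render_resolution` is a hypothetical helper, not part of any AMD API.

```python
# Per-axis scale factors: assumed from AMD's FSR 1.0 documentation,
# not stated in the keynote coverage above.
PRESET_SCALE = {
    "ultra quality": 1.3,
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
}


def render_resolution(out_w, out_h, preset):
    """Return the (width, height) the game would render at before upscaling."""
    scale = PRESET_SCALE[preset]
    return round(out_w / scale), round(out_h / scale)


if __name__ == "__main__":
    for preset in PRESET_SCALE:
        w, h = render_resolution(3840, 2160, preset)
        print(f"{preset}: {w}x{h}")
```

Under these assumptions, quality mode at 4K renders internally at 2560x1440 and performance mode at 1920x1080. The rounding here is a simplification, so other presets may differ by a pixel from AMD's published tables.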

In its own tests using Gearbox Software's Godfall (AMD used the Radeon RX 6900 XT, RX 6800 XT and RX 6700 XT on the game's epic preset at 4K with ray tracing on), the company claimed 49 frames per second at native rendering, but 78 fps using ultra quality FSR, 99 fps using quality, 124 fps on balanced and 150 fps on performance.

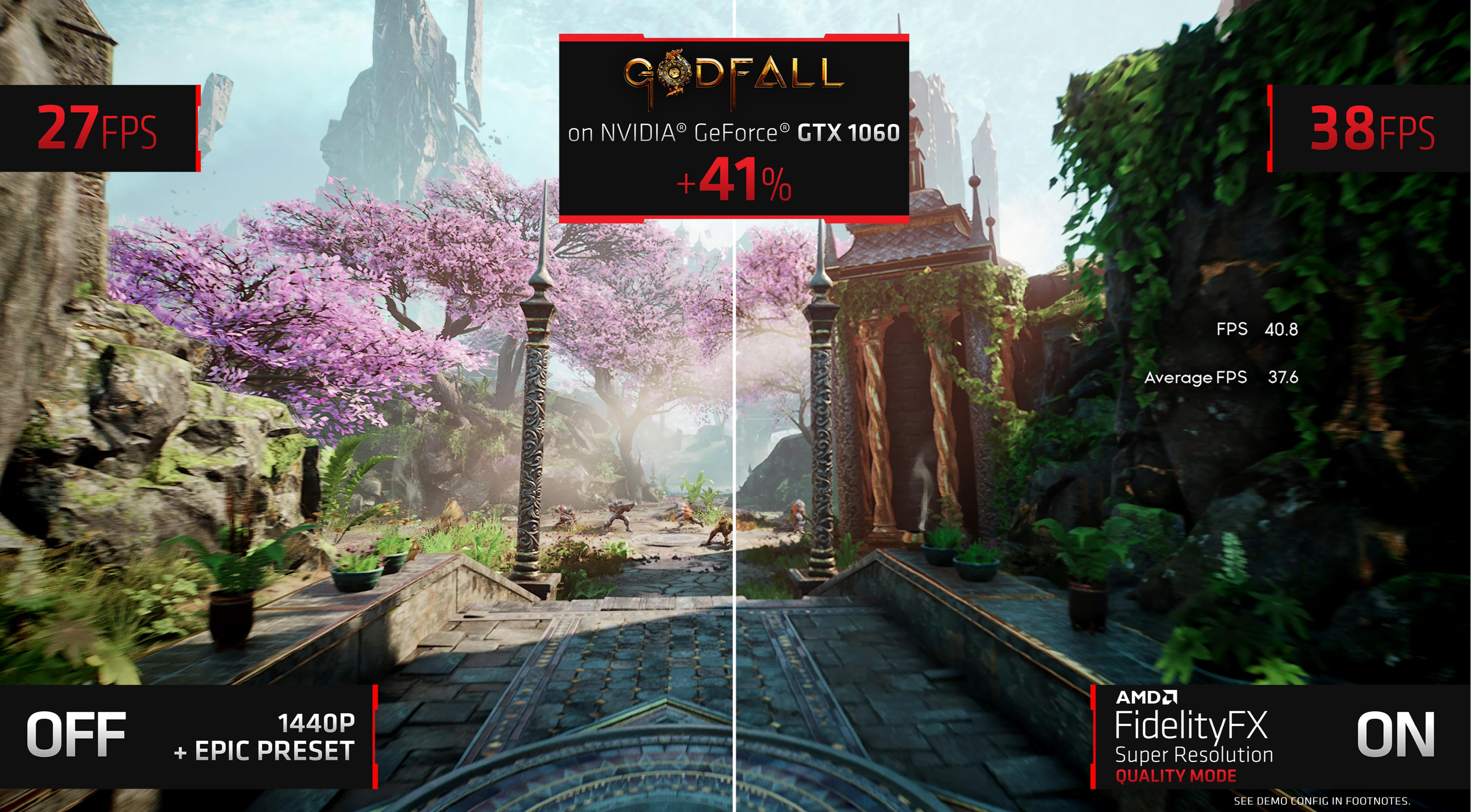

But FSR works on other hardware, including Nvidia's graphics cards. AMD tested one of Nvidia's older (but still very popular) mainstream GPUs, the GTX 1060, with Godfall at 1440p on the epic preset. It ran natively at 27 fps, but at 38 fps with quality mode on, a 41% boost. In fact, AMD says that FSR, which needs to be implemented by game developers to suit their titles, will work with over 100 CPUs and GPUs, including its own and competitors'.
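For readers who want to check the math, the short sketch below recomputes the gains from AMD's own quoted frame rates. The helper name `uplift_percent` is ours, and the inputs are only the figures cited in this article, not independent measurements.

```python
def uplift_percent(native_fps, fsr_fps):
    """Percentage gain in frame rate over native rendering."""
    return (fsr_fps - native_fps) / native_fps * 100


# AMD's claimed Godfall results at 4K, epic preset, ray tracing on.
NATIVE_4K_FPS = 49
GODFALL_4K_FPS = {
    "ultra quality": 78,
    "quality": 99,
    "balanced": 124,
    "performance": 150,
}

if __name__ == "__main__":
    for preset, fps in GODFALL_4K_FPS.items():
        print(f"{preset}: {fps / NATIVE_4K_FPS:.2f}x native")

    # GTX 1060 at 1440p: 27 fps native vs. 38 fps in quality mode.
    print(f"GTX 1060, quality mode: +{uplift_percent(27, 38):.0f}%")  # +41%
```

By these numbers, the 4K presets range from roughly 1.6 times native frame rate on ultra quality to about 3.1 times on performance.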

We'll be able to test FidelityFX Super Resolution when it launches, starting with Godfall on June 22, so keep an eye out for our thoughts. While the performance gains sound impressive, we're also keen to check out image quality. We've been fairly impressed by Nvidia's DLSS 2.0, but the original DLSS implementation was far less compelling. It seems as though AMD aims to provide similar upscaling but without all the fancy machine learning.

Su's keynote included other graphics announcements, such as the launch of the Radeon RX 6800M, RX 6700M and RX 6600M mobile GPUs based on RDNA 2, as well as a handful of new APUs.


Andrew E. Freedman is a senior editor at Tom's Hardware focusing on laptops, desktops and gaming. He also keeps up with the latest news. A lover of all things gaming and tech, his previous work has shown up in Tom's Guide, Laptop Mag, Kotaku, PCMag and Complex, among others. Follow him on Threads @FreedmanAE and BlueSky @andrewfreedman.net. You can send him tips on Signal: andrewfreedman.01

-

VforV This is the best news this year, so far! GJ AMD!

As a (still forced by stock and prices) GTX 1080 user, I'm very excited about this. I also can't wait to get a new AMD GPU when prices come to more sane levels.

Nvidia has disappointed me enough times. With this tech working on GTX cards I can hold on till I can buy new. This is so exciting :) -

hotaru.hino According to AnandTech, their method only works on just the current frame, so it's about the same as DLSS 1.0. When they integrate it with temporal anti-aliasing, then it might have a shot at DLSS 2.0. -

VforV

> hotaru.hino said: According to AnandTech, their method only works on just the current frame, so it's about the same as DLSS 1.0. When they integrate it with temporal anti-aliasing, then it might have a shot at DLSS 2.0.

That's the only thing I have to see in (high rez) benchmarks / tests: how good or bad those presets look. But AMD said there is not a big difference on Ultra and frankly, even Ultra gives enough performance for me.

Also, all the leaks so far said it's like a DLSS 1.5, not 1.0. So yeah, I'll wait for those confirmations, but even if they are behind Nvidia at this point, the rate of adoption and advancement this tech will have, since it's open source, means it will surpass DLSS 2.1 fast. I think Nvidia will soon have to just embrace this tech and forget about DLSS (just my opinion).

edit: AMD uploaded the 4k video of FSR

https://www.youtube.com/watch?v=eHPmkJzwOFc

Looks like it's indeed good enough and better than DLSS 1.0. -

m4ko

> VforV said: That's the only thing I have to see in (high rez) benchmarks / tests, how good or bad do those presets look, but AMD said is not a big difference on Ultra and frankly, even Ultra gives enough performance for me.
>
> Also all the leaks so far said it's like a DLSS 1.5, not 1.0. So yeah I'll wait for those confirmations, but even if they are behind nvidia at this point, the rate of adoption and advancement this tech will have since it's open source will surpass DLSS 2.1 fast. I think nvidia soon will have to just embrace this tech and forget about DLSS (just my opinion).

AMD's solution is FAR inferior:

- AMD uses only the current frame
- AMD uses no advanced information like motion vectors or depth masks
- AMD occupies the normal rasterization hardware (so you have LESS raw rasterization power when it is turned on)

With this knowledge it should be clear that AMD's solution will look much worse than current DLSS implementations. And because it occupies the normal rendering hardware instead of dedicated hardware, it will most probably run worse than DLSS at the same internal rendering resolution.

And don't get me wrong: I would love for this to be a good alternative. But AMD has always been really bad with software - and knowing how their implementation seems to work ... I have little hope that it is anything more than a simple image-upscaler. -

VforV

> m4ko said: AMD's solution is FAR inferior:
>
> - AMD uses only the current frame
> - AMD uses no advanced information like motion vectors or depth masks
> - AMD occupies the normal rasterization hardware (so you have LESS raw rasterization power when it is turned on)
>
> With this knowledge it should be clear that AMD's solution will look much worse than current DLSS implementations. And because it occupies the normal rendering hardware instead of dedicated hardware, it will most probably run worse than DLSS at the same internal rendering resolution.
>
> And don't get me wrong: I would love for this to be a good alternative. But AMD has always been really bad with software - and knowing how their implementation seems to work ... I have little hope that it is anything more than a simple image-upscaler.

Did you look at the 4k video? Only lower resolutions look worse, which look worse on DLSS too, no exception or difference there.

Also, this is just the start: the tech will progress much faster and the adoption will be much wider than DLSS. 3rd party reviews will confirm that it's good enough for what it brings and does, and how widely it works, even now at its inception.

The future for gaming looks a little better now thanks to FSR. Nvidia needs to figure out what to do next: invest even more money in its black box DLSS or adopt FSR, like it did with FreeSync... history repeats.

Tom is right, it's a chess move by AMD and nvidia is in check:

https://www.youtube.com/watch?v=rMzjs85pUh4 -

TheJoker2020

> m4ko said: AMD's solution is FAR inferior:
>
> - AMD uses only the current frame
> - AMD uses no advanced information like motion vectors or depth masks
> - AMD occupies the normal rasterization hardware (so you have LESS raw rasterization power when it is turned on)
>
> With this knowledge it should be clear that AMD's solution will look much worse than current DLSS implementations. And because it occupies the normal rendering hardware instead of dedicated hardware, it will most probably run worse than DLSS at the same internal rendering resolution.
>
> And don't get me wrong: I would love for this to be a good alternative. But AMD has always been really bad with software - and knowing how their implementation seems to work ... I have little hope that it is anything more than a simple image-upscaler.

I will wait and see what this is like in reality on my 5700XT, on the various settings, and come to my own conclusions, thank you.

As for "how good this is compared to the Nvidia solution", I will wait for reviews as I don't have an Nvidia card to do direct comparisons on myself. Speculation at this point is largely moot, as sometimes (not always) "inferior tech" can deliver good quality and performance even though it is inferior in theory.

Remember: In theory there is no difference between theory and practice, in practice there is. -

Aaron44126

> m4ko said: AMD's solution is FAR inferior:
>
> - AMD uses only the current frame
> - AMD uses no advanced information like motion vectors or depth masks
> - AMD occupies the normal rasterization hardware (so you have LESS raw rasterization power when it is turned on)
>
> With this knowledge it should be clear that AMD's solution will look much worse than current DLSS implementations. And because it occupies the normal rendering hardware instead of dedicated hardware, it will most probably run worse than DLSS at the same internal rendering resolution.
>
> And don't get me wrong: I would love for this to be a good alternative. But AMD has always been really bad with software - and knowing how their implementation seems to work ... I have little hope that it is anything more than a simple image-upscaler.

Um, some of what you say is true, but...

Outright claims that it is "inferior" (compared to DLSS 1.0 in particular) can't be made until we have the tech in-hand for people to do independent tests.

Even if it "occupies the normal rasterization hardware", it clearly boosts the framerate so you can't really claim that the performance hit is important. What matters is the quality hit.

In the case of both FSR and DLSS, obviously rendering at native resolution would be ideal. These products exist to boost performance in the case where you can't achieve your performance goal running at native resolution without "tricks". Both involve a tradeoff (higher performance at lower quality). We don't yet have a good understanding of what kind of quality hit is imposed by FSR. We will in a few weeks when this launches for real. It may well turn out that FSR is "good enough" for many use cases even though it uses a different approach than DLSS. And the fact that it runs on NVIDIA GPUs is pretty cool: NVIDIA users will be able to take advantage of this in games that support FSR but not DLSS. -

renz496

> VforV said: That's the only thing I have to see in (high rez) benchmarks / tests, how good or bad do those presets look, but AMD said is not a big difference on Ultra and frankly, even Ultra gives enough performance for me.
>
> Also all the leaks so far said it's like a DLSS 1.5, not 1.0. So yeah I'll wait for those confirmations, but even if they are behind nvidia at this point, the rate of adoption and advancement this tech will have since it's open source will surpass DLSS 2.1 fast. I think nvidia soon will have to just embrace this tech and forget about DLSS (just my opinion).
>
> edit: AMD uploaded the 4k video of FSR
>
> https://www.youtube.com/watch?v=eHPmkJzwOFc
>
> Looks like it's indeed good enough and better than DLSS 1.0.

Some tech outlets said something similar about DLSS before, when AMD came up with RIS. And when Nvidia highlighted Freestyle and added the option to use a sharpening filter directly from its own control panel, some even said Nvidia was finally giving up on DLSS. Then Nvidia came out with DLSS 2. Nvidia is not the type to abandon its tech immediately, not even after years of bad reception (just look how long Nvidia kept TXAA around, even though it was viewed unfavorably from the very beginning). Nvidia will most likely keep improving DLSS and working with major game engines to integrate its easy-to-use plugin. -

dorsai

> Aaron44126 said: ....It may well turn out that FSR is "good enough" for many use cases even though they are using a different approach than DLSS. And the fact that it runs on NVIDIA GPUs is pretty cool, NVIDIA users will be able to take advantage of this in games that support FSR but not DLSS.

Great point...I'm not going to look the gift horse in the mouth without even seeing the gift. Let's hope AMD bats it out of the park with this one and everyone benefits. -

VforV

> renz496 said: some tech outlet also said something similar about DLSS before when AMD coming up with RIS. and when nvidia highlighting their freestyle and add the option to use sharpening filter directly from their own control panel some even said nvidia are finally giving up on DLSS. then nvidia come out with DLSS2. nvidia is not the type to abandon their tech immediately. not even after years of bad reception (just look how long nvidia keep TXAA around even when there is not favorable view of it since the very beginning). nvidia most likely keep improving their DLSS and working with major game engine to integrate their easy to use plugin.

Ok, I can agree on that. Maybe they won't abandon DLSS and that's not a problem, it's maybe even better for us customers to have more options. But for me one thing is sure: either Nvidia is forced to abandon DLSS (at some point in the future) or it has to make it even better and accelerate its progress even more than if it were alone with this tech. So the competition just got more fierce. Win-win for gamers.

P.S. What if because of FSR, nvidia decides to make DLSS open too? That's a crazy hilarious thought. I don't think it will happen at all, but funny nonetheless.