D-Wave’s ‘Quadrant’ Machine Learning Does More With Less Data

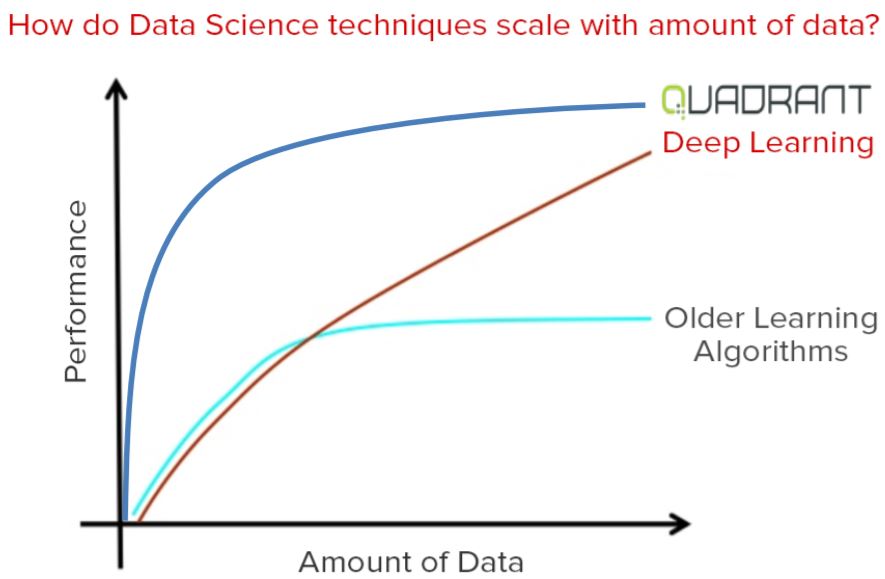

D-Wave announced “Quadrant,” a new business unit that will provide machine learning services powered by both CPUs/GPUs and its quantum annealing computer. D-Wave says Quadrant’s algorithms can deliver accurate results with less training data than classical deep learning solutions, which require large amounts of labeled data.

Taking Machine Learning To The Next Level

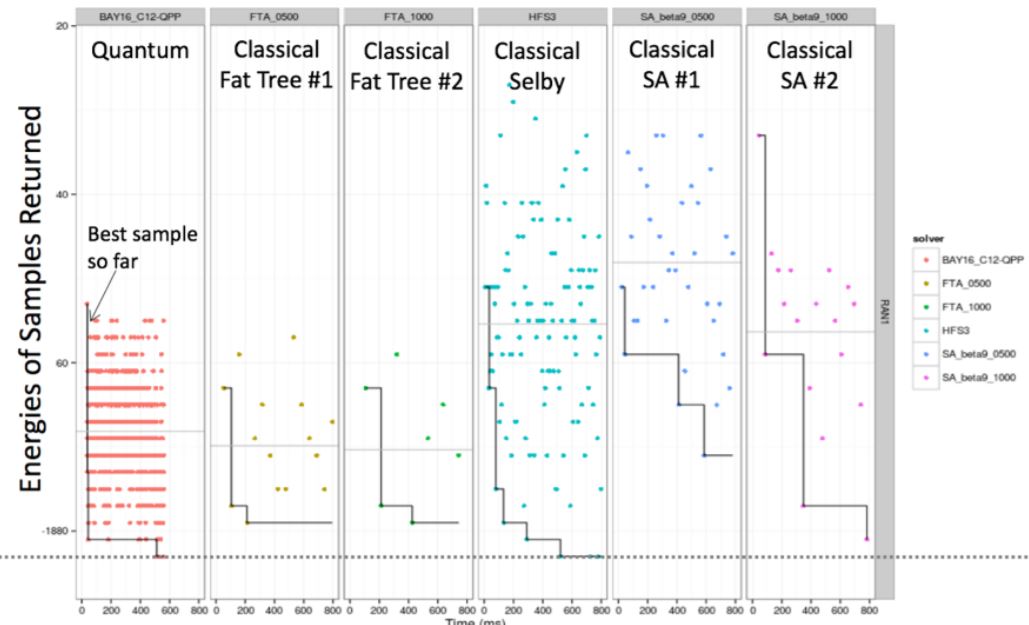

One of the promises of quantum computers is that they can evaluate many possibilities at once and find the “optimum” result for a variety of problems. D-Wave’s quantum annealing computer (a more specialized kind of quantum computer) has already been used in real-world applications such as optimizing traffic flow.
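D-Wave’s annealers are programmed by casting a problem as an Ising model or, equivalently, a QUBO (quadratic unconstrained binary optimization): find the binary vector x that minimizes the energy xᵀQx. As a rough, classical illustration of what “finding the optimum” means here, the sketch below runs simulated annealing on a tiny QUBO. Simulated annealing is a software technique that only loosely mirrors quantum annealing and is not D-Wave’s solver; the function names, cooling schedule, and toy matrix Q are all hypothetical.

```python
import math
import random


def qubo_energy(x, Q):
    """Energy x^T Q x for a binary list x and an upper-triangular QUBO stored as {(i, j): coeff}."""
    return sum(coeff * x[i] * x[j] for (i, j), coeff in Q.items())


def simulated_annealing(Q, n, steps=5000, t_start=2.0, t_end=0.01, seed=0):
    """Classical simulated annealing (not quantum annealing) over n binary variables."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    energy = qubo_energy(x, Q)
    best_x, best_energy = x[:], energy
    for step in range(steps):
        # Hypothetical geometric cooling schedule from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        x[i] ^= 1  # propose flipping a single bit
        new_energy = qubo_energy(x, Q)
        delta = new_energy - energy
        # Always accept improvements; accept uphill moves with Boltzmann probability.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            energy = new_energy
            if energy < best_energy:
                best_x, best_energy = x[:], energy
        else:
            x[i] ^= 1  # reject the move: undo the flip
    return best_x, best_energy


# Hypothetical toy problem: pick exactly one of three options, with option 1 slightly preferred.
Q = {(0, 0): -1.0, (1, 1): -1.5, (2, 2): -1.0, (0, 1): 2.0, (0, 2): 2.0, (1, 2): 2.0}
best_x, best_energy = simulated_annealing(Q, n=3)
print(best_x, best_energy)
```

For this toy Q the true minimum is x = [0, 1, 0] with energy -1.5; with only 8 possible assignments, that is easy to confirm by brute force. Real annealing workloads involve far more variables, where exhaustive search is no longer practical.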

Handol Kim, Senior Director, Quadrant Machine Learning at D-Wave, said:

Machine learning has the potential to accelerate efficiency and innovation across virtually every industry. Quadrant’s models are able to perform deep learning using smaller amounts of labeled data, and our experts can help to choose and implement the best models, enabling more companies to tap into this powerful technology.

Most machine learning (ML) and artificial intelligence (AI) solutions today need millions of data points to produce a model accurate enough to be used effectively in the real world. For instance, if you want to build an ML model that can recognize cats, you need to train it on millions of pictures of cats.

This is not a problem when the algorithm can be shown a million different examples of the objects it has to recognize. However, things get more difficult when a million data points are not easy to come by.

For instance, if you want to train a model to recognize an extremely rare skin disease with high accuracy, you will not have a million examples of how that skin disease looks on people. And if you only have a handful of such images, you will not be able to build an accurate and reliable model, which means it wouldn’t be useful in practice.


How The New Quadrant Solution Works

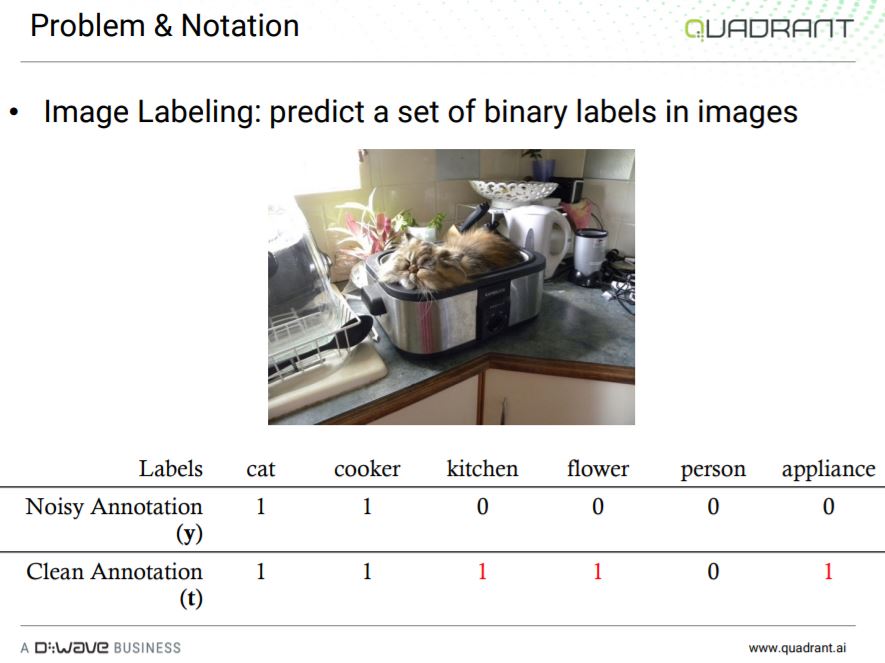

D-Wave said that right now deep learning neural networks require not just large amounts of data but also annotated data, which can get quite expensive, too. That means you don’t just have to feed the algorithm millions of pictures of cats but actually tell it that those objects in the images are cats. Training on labeled data is also called supervised learning.

D-Wave’s semi-supervised learning algorithms can make use of images with “noisy” labels, such as the images typically found in search engines and social media, which are also cheaper to obtain. They can also use unlabeled data or small amounts of labeled data in order to train an accurate model.
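The announcement does not describe how Quadrant’s algorithms actually work. To illustrate the general idea of training on a few labeled examples plus many unlabeled ones, the sketch below uses self-training (pseudo-labeling), a common generic semi-supervised technique and not D-Wave’s method: a simple nearest-centroid model labels the unlabeled points it is confident about, adds them to the training set, and refits. The 2-D points stand in for image features; the data, function names, and the `max_dist` confidence threshold are hypothetical, and the sketch does not address noisy labels.

```python
import math
from statistics import mean


def fit_centroids(points, labels):
    """Nearest-centroid 'model': the mean point of each class."""
    centroids = {}
    for label in sorted(set(labels)):
        members = [p for p, lab in zip(points, labels) if lab == label]
        centroids[label] = tuple(mean(coord) for coord in zip(*members))
    return centroids


def predict(centroids, point):
    """Return (label, distance) for the closest class centroid."""
    label = min(centroids, key=lambda lab: math.dist(point, centroids[lab]))
    return label, math.dist(point, centroids[label])


def self_train(labeled, unlabeled, rounds=3, max_dist=1.5):
    """Pseudo-label confident unlabeled points, add them to the training set, refit."""
    points = [p for p, _ in labeled]
    labels = [lab for _, lab in labeled]
    remaining = list(unlabeled)
    for _ in range(rounds):
        centroids = fit_centroids(points, labels)
        confident, unsure = [], []
        for p in remaining:
            label, d = predict(centroids, p)
            # Only trust predictions that land close to a class centroid.
            (confident if d <= max_dist else unsure).append((p, label))
        if not confident:
            break  # nothing new to learn from this round
        for p, label in confident:
            points.append(p)
            labels.append(label)
        remaining = [p for p, _ in unsure]
    return fit_centroids(points, labels)


# Hypothetical toy data: two labeled points and six unlabeled ones.
labeled = [((0.0, 0.0), "cat"), ((5.0, 5.0), "dog")]
unlabeled = [(0.5, 0.2), (1.0, 1.2), (2.0, 1.8), (4.6, 5.1), (4.0, 3.9), (3.2, 3.0)]
model = self_train(labeled, unlabeled)
print(predict(model, (2.0, 1.8)))
```

Each round lets the model grow its training set from its own confident guesses, which is why only two labeled points are needed here; the obvious risk is that early mistakes get reinforced, so the confidence threshold matters.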

D-Wave said that applications for its algorithms include bioinformatics, medical imaging, financial services, and telecommunications. The company has already partnered with Siemens Healthineers, a global medical technology provider. The two companies took first place in the CATARACTS grand challenge with an ML solution that could accurately identify medical instruments. This solution could be used for computer-assisted interventions.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


TJ Hooker: The first figure in this article could really use some explanation, or at the very least a caption. As it is now, I have no idea what I'm looking at.

bit_user: This sort of goes without saying. When you can find the optimal solution to a multivariate problem and express machine learning in such a form, then you can use fewer variables to achieve a similar-quality result. In machine learning, the number of training samples required is largely a function of the size of the model. So, the smaller number of required training samples follows naturally from enabling smaller models.

What they're not saying is the downside: that they don't scale well to larger models. Otherwise, perhaps they'd put Nvidia's cloud business out of business virtually overnight. It will probably be a while before Nvidia or anyone building training-oriented machine learning chips has to worry about these guys.