AMD Drops FSR 2.0 Source Code, Takes Shots at DLSS and XeSS

Machine learning isn't a prerequisite, says AMD


AMD has released FSR 2.0's source code on GPUOpen, available for anyone to download and use — part of its commitment to making FSR fully open-source. The download contains all the necessary APIs and libraries for integrating the upscaling algorithm into DirectX 12 and Vulkan-based titles, along with a quick start checklist. AMD says DirectX 11 support needs to be discussed with AMD representatives, which suggests DirectX 11 is either not officially supported or is more difficult to implement.

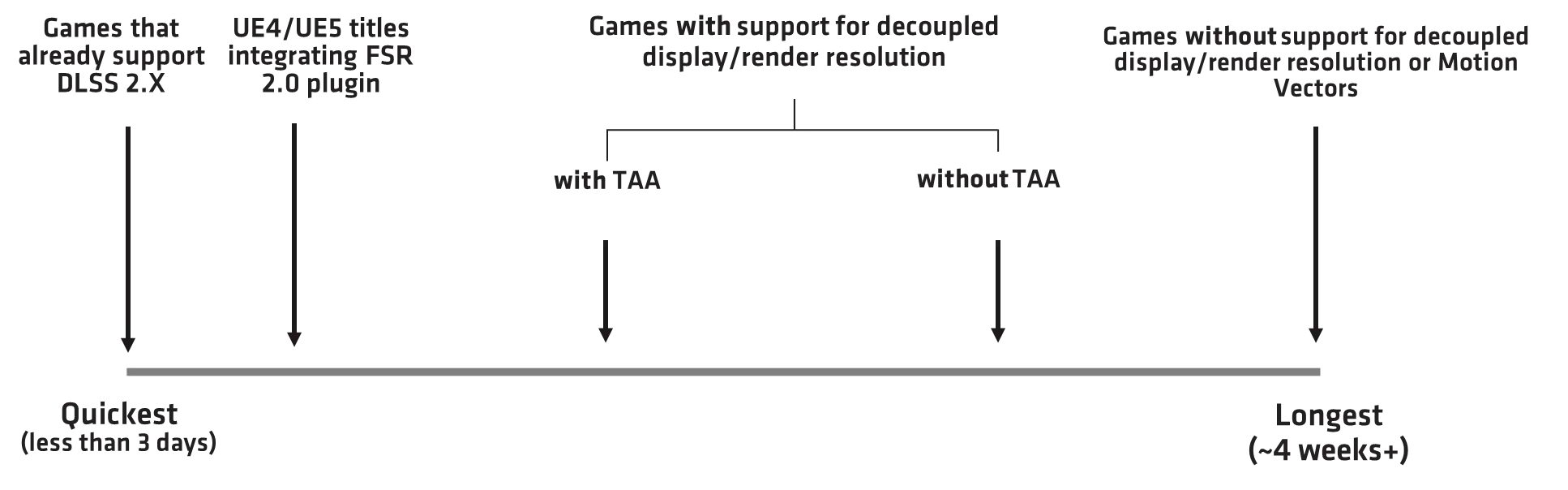

Implementing FSR 2.0 will apparently take developers anywhere from under three days to four weeks (or more), depending on features supported within the game engine. FSR 2.0 uses temporal upscaling, which requires additional data inputs from motion vectors, depth buffers, and color buffers to generate a quality image. Games will need to add these structures to their engine if they're not already available.
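To make the role of those inputs concrete, here is a minimal Python sketch of temporal accumulation on a one-dimensional "image." It is not AMD's algorithm: the `FrameInputs` class, the `accumulate` function, the blend factor, and the depth tolerance are all hypothetical names and values chosen for illustration. Motion vectors locate where each pixel sat in the previous frame, depth is used to reject history that no longer matches, and color supplies the new sample.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FrameInputs:
    """Hypothetical per-frame inputs for a toy temporal accumulator."""
    color: List[float]   # current frame's color samples
    depth: List[float]   # current frame's per-pixel depth
    motion: List[int]    # per-pixel offset (in pixels) to its previous-frame position


def accumulate(history: List[float],
               prev_depth: List[float],
               frame: FrameInputs,
               blend: float = 0.1,
               depth_tolerance: float = 0.05) -> List[float]:
    """Blend reprojected history with the current samples (illustrative only)."""
    out = []
    for x, (c, d, m) in enumerate(zip(frame.color, frame.depth, frame.motion)):
        src = x + m  # where this pixel was located in the previous frame
        on_screen = 0 <= src < len(history)
        if on_screen and abs(prev_depth[src] - d) <= depth_tolerance:
            # History is usable: keep most of it, add a little new information.
            out.append((1.0 - blend) * history[src] + blend * c)
        else:
            # Off-screen or depth mismatch (disocclusion): use the new sample only.
            out.append(c)
    return out


history = [1.0, 0.0, 0.0]
prev_depth = [0.5, 0.5, 0.5]
frame = FrameInputs(color=[0.0, 1.0, 0.0],
                    depth=[0.5, 0.5, 0.9],
                    motion=[0, -1, 0])
result = accumulate(history, prev_depth, frame)
```

In a real upscaler the history lives at display resolution and the current frame at render resolution; the sketch keeps both the same size to stay short.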

Games that already support DLSS 2.x will be the easiest to integrate with, typically requiring less than three days of development, according to AMD. Next up are UE4 and UE5 titles, which can use the new FSR 2.0 plugin. Games with support for decoupled display and render resolution, which includes most games with temporal anti-aliasing (TAA) support, sit in the middle of AMD's "development timeline." Finally, games with none of FSR 2.0's required inputs will take four weeks or longer.

Game developers will need to implement FSR 2.0 right in the middle of the frame rendering pipeline, because it fully replaces the duties of temporal anti-aliasing. This will require any post-processing effects that need anti-aliasing to be handled later in the pipeline after FSR 2.0 upscaling takes effect.

At the beginning of the pipeline come scene rendering and any pre-upscale post-processing effects that don't require anti-aliasing. FSR 2.0 upscaling takes place directly in the middle, followed by the post-upscale, anti-aliased post-processing effects. Finally, HUD rendering takes place after everything else is completed.
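The ordering described above can be sketched as a small check. This is only an illustration of the stage order, not part of AMD's SDK: the `Phase` enum, the `is_valid_order` function, and the pass names are hypothetical.

```python
from enum import IntEnum
from typing import List, Tuple


class Phase(IntEnum):
    """Frame phases in the order described in the article (names are hypothetical)."""
    SCENE_RENDER = 0
    PRE_UPSCALE_POST = 1   # post-processing that does not need anti-aliased input
    UPSCALE = 2            # FSR 2.0 takes over the role of TAA here
    POST_UPSCALE_POST = 3  # post-processing that needs the anti-aliased image
    HUD = 4                # drawn last, at display resolution


def is_valid_order(passes: List[Tuple[str, Phase]]) -> bool:
    """True if phases never go backwards and there is exactly one upscale pass."""
    phases = [phase for _, phase in passes]
    return phases == sorted(phases) and phases.count(Phase.UPSCALE) == 1


good_frame = [
    ("scene", Phase.SCENE_RENDER),
    ("post_fx_without_aa", Phase.PRE_UPSCALE_POST),
    ("fsr2", Phase.UPSCALE),
    ("post_fx_needing_aa", Phase.POST_UPSCALE_POST),
    ("hud", Phase.HUD),
]

# Drawing the HUD before upscaling would feed UI pixels into the upscaler.
bad_frame = [
    ("scene", Phase.SCENE_RENDER),
    ("hud", Phase.HUD),
    ("fsr2", Phase.UPSCALE),
]
```

A check like this could run once at startup in a debug build to catch a pass that was registered in the wrong place.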

AMD Says Machine Learning Is Overrated

Perhaps the most controversial aspect of AMD's GPUOpen article is its view on machine learning. AMD says machine learning is not a prerequisite for achieving good image quality, and that it is oftentimes used only to decide how to combine previous frames into the upscaled image, nothing more. This means there's no AI algorithm for actually recognizing shapes or objects within a scene, which is what we would expect from an "AI upscaler."

This statement is a direct attack on Nvidia's Deep Learning Super Sampling (DLSS) technology, as well as Intel's upcoming XeSS upscaling algorithm, both of which are AI-based upscalers. Nvidia in particular has boasted about DLSS' reliance on AI, suggesting it's a necessity for generating native-like image quality.

However, we can't back up AMD's statement that machine learning is only used for combining previous frame data and not for objects in the actual scene. Nvidia has stated that the AI training for DLSS takes lower and higher resolution images, and then all of that gets combined with the depth buffers and motion vectors with DLSS 2.0 and later. Stating exactly what the weighted AI network does and doesn't do isn't really possible with most machine learning algorithms.

Regardless, AMD has demonstrated with FSR 2.0 that you do not need machine learning hardware (i.e. Nvidia's Tensor cores or Intel's upcoming Matrix Engines) to generate native-like image quality. FSR 2.0 has proven itself to be nearly as good as DLSS 2.x in tests we have conducted in both God of War and Deathloop, and more importantly it's able to run on everything from current generation RX 6000- and RTX 30-series GPUs down to cards like the GTX 970 that launched clear back in 2014.

Even if we give Nvidia's DLSS a slight edge in image quality, restricting it to RTX cards makes it potentially far less useful for gamers. Going forward, any game that supports DLSS 2.x or FSR 2.0 will hopefully also support the other upscaling solution, providing all users access to one or the other feature.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


KananX

While machine learning is a thing, I want to remind everyone that “AI” is just a buzzword, as there is no real artificial intelligence and we are in fact nowhere near it. The only difference here is the approach, and that it’s calculated in specialized cores rather than the usual shading cores. XeSS, if Intel didn’t lie about its performance, could be way better than DLSS since it can run on both specialized and non-specialized cores, making it far more versatile. DLSS will lose this game in the long run against FSR; it’s FreeSync vs. G-Sync again, and we all know what happened. Proprietary solutions will always lose unless they are way better, which isn’t the case here anymore.

hotaru.hino

KananX said: While machine learning is a thing, I want to remind everyone that “AI” is just a buzzword, as there is no real artificial intelligence and we are in fact nowhere near it.

So are you some kind of renowned academic in computer science who has the authority to define an industry-wide term? Or some kind of philosophy scholar who has clout over the idea of what "intelligence" even is?

KananX said: The only difference here is the approach, and that it’s calculated in specialized cores rather than the usual shading cores. XeSS, if Intel didn’t lie about its performance, could be way better than DLSS since it can run on both specialized and non-specialized cores, making it far more versatile.

While XeSS can run on non-specialized hardware, its performance suffers when doing so.

KananX said: DLSS will lose this game in the long run against FSR; it’s FreeSync vs. G-Sync again, and we all know what happened. Proprietary solutions will always lose unless they are way better, which isn’t the case here anymore.

Considering G-Sync's been around since 2013 and it's still being used in high-end monitors, I don't think DLSS will die any time soon. Plus CUDA is still widely used despite OpenCL also being a thing.

KananX

hotaru.hino said: So are you some kind of renowned academic in computer science that has the authority to define an industry wide term? Or some kind of philosophy scholar that has clout over the idea of what "intelligence" even is?

It’s widely known, if you read a bit about “AI” and what it really means, that it has not been achieved yet. Just a few days ago there was an article here about an ousted Google engineer who said they had a real AI; he was fired shortly after, and Google rebutted everything he said by stating it was in fact not a real sentient AI. Achieving a real AI needs something that can resemble a human’s brain; we don’t yet fully understand how the brain works, much less are we able to build one of our own. Go and inform yourself a bit instead of trying to pick my posts apart, which won’t succeed anyway.

hotaru.hino said: While XeSS can run on non-specialized hardware, its performance suffers from it.

I wouldn’t call a few percent “suffering”; you’re not well informed here either.

hotaru.hino said: Considering G-Sync's been around since 2013 and it's still being used in high-end monitors, I don't think DLSS will die any time soon. Plus CUDA is still widely used despite OpenCL also being a thing.

G-Sync is barely used in monitors, and even most high-end monitors don’t use it anymore. G-Sync had a short period of relevance; now it’s largely been replaced by FreeSync, which is also a well-known fact. Do you inform yourself on tech, or are you just in the forum to pick apart things others posted and start useless discussions? I don’t see much sense in your post, and I’m not doing your homework for you either.

Comparing the CUDA and OpenCL situation with these makes no sense, as CUDA is preferred because it works really well, while the competition’s solutions don’t. This could change in the coming years as well. I see you have a biased Nvidia agenda here, not good.

Good move, AMD. If FSR 2.0 is pretty much as good as DLSS and it's royalty-free, it's the same case we had with other formats in the past. As long as something can be easily and widely adopted, it doesn't matter if there are better solutions. Those better things are usually tied to one manufacturer or need expensive fees. Just like Betamax lost to VHS: it was better, but it was tied to Sony and its products and never got traction.


tennis2

Dear AMD,

Since you're moving to IGPs with the upcoming Ryzen CPU launch, please resurrect the dGPU+IGP teamwork of Lucid's Virtu MVP Hyperformance tech.

twotwotwo

tennis2 said: 3 days? 4 weeks? How many programmers for that duration?

The README (or the presentations) breaks down what has to be done. The reason it might be a quick project is that if you used another flavor of temporal AA or the engine already supports it, you mostly plug the same inputs/outputs into FSR. The different types of TAA mostly do the same sort of thing.

If you're not so lucky, you need to change your renderer to output motion vectors and a depth buffer, which allow mapping pixels in previous frames to the current frame. You also need to separate out some post-upscale steps (drawing the HUD, some effects) and jitter the camera position so a series of frames captures sub-pixel-level detail. You can provide some "bonus" stuff too, like a mask that communicates "this part of the image may not be well predicted by past frames."
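As a rough illustration of the camera jitter step, here is a minimal Python sketch that generates sub-pixel offsets from a Halton sequence, a common choice for temporal anti-aliasing. The post above does not say which sequence to use, so treat the sequence, the `jitter_offset` helper, and the `phase_count` of 8 as assumptions made for this example.

```python
from typing import Tuple


def halton(index: int, base: int) -> float:
    """Return element `index` (1-based) of the Halton sequence in `base`, in [0, 1)."""
    result = 0.0
    fraction = 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def jitter_offset(frame_index: int, phase_count: int = 8) -> Tuple[float, float]:
    """Sub-pixel camera offset for a frame, in pixels, each axis in [-0.5, 0.5).

    `phase_count` (hypothetical default) is how many frames pass before the
    pattern repeats.
    """
    i = (frame_index % phase_count) + 1  # Halton indices start at 1
    return halton(i, 2) - 0.5, halton(i, 3) - 0.5


offsets = [jitter_offset(n) for n in range(8)]
```

Each frame's projection would be shifted by its offset, so that over several frames the samples cover different positions inside every pixel.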

This is a neat visual breakdown of what TAA does: https://www.elopezr.com/temporal-aa-and-the-quest-for-the-holy-trail/

The actual README isn't so bad at breaking down the parts: https://github.com/GPUOpen-Effects/FidelityFX-FSR2

Unrelated to the quote, but a relatively simple addition that seems like it could have real payoff is a smart/dynamic quality level: render at a higher quality level (or native) when possible, and switch to lower ones as needed to hit some minimum framerate.
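The dynamic quality idea can be sketched in a few lines of Python. This is a hypothetical controller, not something FSR 2.0 ships: `pick_mode`, `render_resolution`, and the `headroom` threshold are invented for illustration, and the per-axis scale factors are the ones AMD has published for FSR 2.0's quality modes as best I recall, so verify them against the README before relying on them.

```python
from typing import List, Tuple

# (mode name, per-axis scale factor); values assumed from AMD's published modes.
QUALITY_MODES: List[Tuple[str, float]] = [
    ("Quality", 1.5),
    ("Balanced", 1.7),
    ("Performance", 2.0),
    ("Ultra Performance", 3.0),
]


def render_resolution(display_w: int, display_h: int, mode: int) -> Tuple[int, int]:
    """Render-target size for a display size and a mode index."""
    _, scale = QUALITY_MODES[mode]
    return round(display_w / scale), round(display_h / scale)


def pick_mode(current: int, frame_ms: float, target_ms: float,
              headroom: float = 0.85) -> int:
    """Step one mode down in quality when over budget, up when well under it."""
    if frame_ms > target_ms and current < len(QUALITY_MODES) - 1:
        return current + 1  # too slow: render fewer pixels
    if frame_ms < target_ms * headroom and current > 0:
        return current - 1  # comfortably fast: render more pixels
    return current          # inside the dead band: avoid flip-flopping


mode = pick_mode(current=0, frame_ms=20.0, target_ms=16.7)
size = render_resolution(3840, 2160, mode)
```

The dead band between `target_ms * headroom` and `target_ms` is there so the mode does not oscillate every frame; a real implementation would likely also average frame times over a short window.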

-Fran-

I have a serious problem with what AMD showed here...

It's either "~4 weeks" or "4 weeks+" (which is analogous to ">4 weeks"). Using both at the same time is stupid and redundant (to a degree). How do you even read that? "It's around over 4 weeks" or "it's around 4 weeks plus"? XD

Oof, that was so much anger. Sorry.

As for what this means, well, I'm not entirely sure, but it is nice when Companies just open the door for the world to improve on what they have started.

As for the debacle of "AI" and "machine learning": they're basically buzzwords that are annoying, just like any other term that is overused and, sometimes, incorrectly used. "Cloud", anyone? "Synergy", anyone?

Machine learning is not useless; far from it. It allows narrowing down algorithms based on heuristics, which would otherwise take humans a long time to do by hand. Whenever you have a problem where the exact result is too costly (usually non-polynomial in cost, as with NP-hard problems), you want heuristics to help you get a close/good enough result and yadda yadda. So, the "AI" side of things on Consumer is just saying "hey, we have some algorithms based on running heuristics quite a lot and they'll improve things over generic algorithms or less refined heuristics", and most of those are math calculations that run at quarter/half precision (FP8 or FP16), since you are not looking for accurate results (lots of decimals) but fast operations.

Is this truly useful? Flip a coin? It is truly "case by case". Sometimes a properly trained algorithm can help a lot, but that takes a lot of iterations and also requires that the complexity of the problem be rather high. So, that brings us to the "temporal" solution based upscale. Is it a hard problem to solve from the algorithmic point of view? No, not really, I'd say. The proof is in the pudding. How much better is DLSS 2.x than FSR 2.0? Within striking distance of the "generic" heuristics used by FSR, no? And even then, FSR has some image quality wins, I'd say (subjective, so I won't dispute that I could be wrong here). Maybe Nvidia needs to train the AI more per game? Maybe they need to improve the backbone of the Tensor cores so the heuristics can be more accurate or process more data per pass? Ugh, so much "whatifism", so I'll just stop here.

Anyway, again, good to see more (F?)OSS stuff.

Regards.

KananX

-Fran- said: I have a serious problem with what AMD showed here...

First of all, AI is a buzzword in my opinion; machine learning is just the correct usage of language compared to that. It’s just facts.

Secondly, as far as I know, DLSS 1.0 used “AI” trained via supercomputer and since 2.0 it’s just trained via tensor cores in the GPUs, so it’s very comparable to what FSR 2.0 does, if you ask me. Both calculate imagery to upscale lower res to higher res; it’s not that complicated. Tensor cores are also just specialized cores that can calculate certain data more efficiently than the regular shaders.

-Fran-

KananX said: First of all AI is a buzzword in my opinion, machine learning is just the correct usage of language compared to that, it’s just facts. Secondly, as far as I know, DLSS 1.0 used trained “AI” via supercomputer and since 2.0 it’s just trained via tensor cores in the GPUs, so it’s very comparable to what FSR2.0 does, if you ask me. Both calculate imagery to upscale lower res to higher res, it’s not that complicated. Tensor cores are also just specialized cores, that can calculate certain data more efficient than the regular shaders.

I mean, if we want to be pedantic, both are kind of wrong. Unless you believe a machine can have "intelligence" or it can "learn". The act of learning is interesting by itself, as it requires a certain level of introspection and acknowledgement that, to be honest, machines do not have and, maybe, can't have. They could emulate it (exact results vs approximation can be defined) and start from there, I guess, but never at human capacity? As for "intelligence", well, it depends on how you define it. Capacity to resolve problems? Capability of analysis? Calculations per second? Heh. I'm not sure, as there are plenty of definitions out there that can be valid from a psychological point of view. Same-ish with learning, but I'm inclined to use the "introspective" one as it makes the most sense to me: there can only be learning when you can look back and notice a change in knowledge.

Interesting topic for sure. Worthy of having a BBQ to discuss it over, haha.

Regards.