AMD FSR vs Nvidia DLSS: Which Upscaler Reigns Supreme?

FSR vs DLSS performance and image quality


Nvidia DLSS (Deep Learning Super Sampling) and AMD FSR (FidelityFX Super Resolution) are two similar-seeming technologies that figure into the debate over who makes the best graphics cards. With several months' worth of FSR integration into games, and over three years of increasing DLSS use among games and game engines, we're now equipped to shed a bit more light on how the two technologies compare.

We've selected four games that each support both technologies, so we can provide direct comparisons between them. We've also tested on one AMD and one Nvidia GPU for each game. This will allow us to compare performance scaling between the different rendering modes, as well as comparing image quality.

There's more to life than pure performance, though we do track that in our GPU benchmarks hierarchy. Image quality, and perhaps more importantly, image quality while reaching high frame rates, is what we really want. DLSS and FSR tackle this problem in very different ways, which we'll briefly cover here before getting into the results of our comparisons.

Nvidia DLSS Overview

Nvidia created DLSS to improve performance without drastically reducing image quality. Just as important, Nvidia wanted a good way for modern games to render at lower resolutions and then upscale, so that things like real-time ray tracing would be more viable on mainstream hardware. To that end, Nvidia added tensor core hardware to its RTX 20-series GPUs, and basically doubled down on tensor core performance with the RTX 30-series.

Tensor operations aren't something Nvidia invented, as they've been used and discussed in machine learning circles for a while now. The basic idea is to create special-purpose hardware that has enough precision combined with tons of compute to make the training and inference processes associated with artificial intelligence run better.

The RTX GPUs use FP16 (16-bit floating point) operations, and each tensor core on the RTX 20-series can work with 64 separate pieces of data, while the RTX 30-series GPUs can work on 128 chunks of data. The RTX 30-series also supports sparsity, which basically means that the hardware can skip multiplication by zero and effectively double its performance. The net result is that an RTX 2070 has a potential 59.7 TFLOPS of FP16 compute, while the RTX 3070 has a potential 163 TFLOPS of FP16 compute.
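Those headline figures can be roughly reproduced from public spec sheets. The sketch below treats the 64 and 128 "pieces of data" as FP16 fused multiply-adds per tensor core per clock. The tensor core counts and reference boost clocks it plugs in (288 cores at 1,620 MHz for the RTX 2070, 184 cores at 1,725 MHz for the RTX 3070) are not stated in this article, so take them as assumptions rather than measured values.

```python
def tensor_tflops(cores: int, fma_per_clock: int, boost_mhz: float,
                  sparsity: bool = False) -> float:
    """Theoretical peak FP16 tensor throughput in TFLOPS (not a measurement)."""
    # Each fused multiply-add counts as two floating-point operations.
    flops_per_clock = cores * fma_per_clock * 2
    tflops = flops_per_clock * boost_mhz * 1e6 / 1e12
    # Sparsity lets the hardware skip zeroed weights, doubling the peak rate.
    return tflops * 2 if sparsity else tflops


# Assumed spec-sheet inputs (core counts and boost clocks are not from the article).
rtx_2070 = tensor_tflops(cores=288, fma_per_clock=64, boost_mhz=1620)
rtx_3070 = tensor_tflops(cores=184, fma_per_clock=128, boost_mhz=1725, sparsity=True)

print(f"RTX 2070: {rtx_2070:.1f} TFLOPS")
print(f"RTX 3070: {rtx_3070:.1f} TFLOPS")
```

With those inputs the math lands at about 59.7 and 162.5 TFLOPS, the latter rounding to the 163 TFLOPS quoted above. Note that the RTX 3070 figure only holds with sparsity; the dense rate is half that.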

All of that computational power gets used to upscale and enhance frames using inputs from previous frames, the lower resolution current frame, and an AI network trained on how to make lower resolution images look like they were rendered at a higher resolution.

Nvidia offers four DLSS modes, nominally defined by how many pixels get rendered: quality mode renders about half the pixels of the target resolution, balanced about one third, performance one fourth, and ultra performance one ninth. Or, at least, that's the theory. In practice, it seems games have a bit of leeway in their rendered resolution.
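To make those fractions concrete, here's a minimal sketch that treats them as fractions of the target pixel count and works out the nominal render resolution for a 4K output. The function and table names are made up for illustration, and as noted, real games may not hit these numbers exactly.

```python
import math

# Nominal fraction of the target pixel count rendered in each mode,
# as described in the text (actual games may deviate).
DLSS_PIXEL_FRACTION = {
    "quality": 1 / 2,
    "balanced": 1 / 3,
    "performance": 1 / 4,
    "ultra performance": 1 / 9,
}


def dlss_render_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Return the nominal internal render resolution for a target output size."""
    # A pixel-count fraction f corresponds to a per-axis scale of sqrt(f).
    axis_scale = math.sqrt(DLSS_PIXEL_FRACTION[mode])
    return round(width * axis_scale), round(height * axis_scale)


for mode in DLSS_PIXEL_FRACTION:
    print(mode, dlss_render_resolution(3840, 2160, mode))
```

Performance mode at 4K works out to 1920x1080, and ultra performance to 1280x720.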

AMD FSR Overview

AMD's FidelityFX Super Resolution involves far less computational work. It's a post-processing algorithm that has been designed to upscale and enhance frames, but without all of the deep learning stuff, tensor cores, and massive amounts of compute. Basically, it upscales an image using a spatial algorithm (it only looks at the current frame), and then has some edge detection and sharpening filters to improve the overall look — or if you want specifics, it's Lanczos upscaling with edge detection.
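For a feel of what "spatial" Lanczos upscaling means, here's a minimal one-dimensional sketch of a plain Lanczos-2 resample. This is not AMD's shader code: FSR works on 2D images with its own edge-adaptive approximation plus a sharpening pass, neither of which is reproduced here. The function names and the edge-clamping choice are assumptions made for the example.

```python
import math


def lanczos_kernel(x: float, a: int = 2) -> float:
    """Lanczos window: sinc(x) * sinc(x / a) for |x| < a, else 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def upscale_1d(samples: list[float], factor: float, a: int = 2) -> list[float]:
    """Resample a row of pixel values using only the current frame's data."""
    n_out = int(round(len(samples) * factor))
    out = []
    for i in range(n_out):
        # Map the center of output pixel i back into input coordinates.
        src = (i + 0.5) / factor - 0.5
        first_tap = math.floor(src) - a + 1
        total = 0.0
        weight_sum = 0.0
        for j in range(first_tap, first_tap + 2 * a):
            w = lanczos_kernel(src - j, a)
            k = min(max(j, 0), len(samples) - 1)  # clamp taps at the edges
            total += w * samples[k]
            weight_sum += w
        out.append(total / weight_sum)  # normalize so flat areas stay flat
    return out


print(upscale_1d([0.0, 0.0, 1.0, 1.0], 2.0))
```

Every output value depends only on nearby pixels in the same frame, which is why a spatial upscaler can't recover detail that was never rendered, while a temporal approach like DLSS can pull it from earlier frames.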

While that may not sound as impressive as DLSS, FSR does have some pretty major advantages. The biggest is that FSR can run on pretty much any relatively modern GPU. We've tested it on Intel's UHD 630, AMD GPUs going back as far as the RX 500-series, and Nvidia GPUs from the GTX 900-series, and it worked on all of them. Also, FSR is open-source, meaning others are free to adopt it, modify it, etc.

The FSR algorithm basically uses the shaders that are part of all modern GPUs to do the heavy lifting. As with DLSS, there are four modes commonly available: ultra quality, quality, balanced, and performance. AMD uses per-axis scaling factors of 1.3x for ultra quality, 1.5x for quality, 1.7x for balanced, and 2.0x for performance. Those scaling factors apply in both horizontal and vertical dimensions, so performance mode ends up being 4x upscaling (the same as Nvidia's performance mode).
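Because FSR's factors are per-axis, the pixel-count ratio is the square of the factor. A short sketch of that arithmetic for a 4K target follows; the names are hypothetical and the rounding is an assumption, since the text doesn't say how games round the render size.

```python
# Per-axis scaling factors for each FSR mode, as listed in the text.
FSR_AXIS_SCALE = {
    "ultra quality": 1.3,
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
}


def fsr_render_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Internal render resolution for a given output size (rounding is assumed)."""
    scale = FSR_AXIS_SCALE[mode]
    return round(width / scale), round(height / scale)


def fsr_pixel_ratio(mode: str) -> float:
    """How many output pixels are produced per rendered pixel."""
    return FSR_AXIS_SCALE[mode] ** 2


for mode in FSR_AXIS_SCALE:
    w, h = fsr_render_resolution(3840, 2160, mode)
    print(f"{mode}: {w}x{h}, {fsr_pixel_ratio(mode):.2f}x pixels")
```

At 4K, quality mode renders at 2560x1440 and performance mode at 1920x1080, the latter being the 4x pixel ratio mentioned above.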

DLSS vs. FSR Test Setup

We've selected four games that support both FSR and DLSS for this article. The latest release is Back 4 Blood, which came out earlier in October; you can read our full Back 4 Blood benchmarks article for more details on how that game runs and what settings are available. The Myst remake came out in August 2021, and it uses Unreal Engine 4 to let you walk around a fully rendered world, unlike the original point-and-click adventure of the '90s. Next is Deathloop (and I refuse to capitalize the whole name, silly Arkane), which was released in September. Last (and perhaps least), Marvel's Avengers originally launched without DLSS support, but it was added a month later, in October 2020, with FSR support following in July 2021.

That's three relatively recent games plus an older game, but there are a few others as well. Baldur's Gate III (RPG) supports both DLSS and FSR in the latest early access branch. Other games with FSR and DLSS include Chernobylite (sci-fi survival), Edge of Eternity (a JRPG), Enlisted (multiplayer war shooter), F1 2021 (race car sim), The Medium (adventure), and Necromunda: Hired Gun (shooter). Chernobylite and Necromunda both use Unreal Engine for their DLSS and FSR support, just like Myst, and we'll probably see quite a few Unreal Engine games in the future with both DLSS and FSR.

Test System for GPU Benchmarks

Intel Core i9-9900K

Corsair H150i Pro RGB

MSI MEG Z390 Ace

Corsair 32GB DDR4-3600 (2x 16GB)

XPG SX8200 Pro 2TB

Windows 10 Pro (21H1)

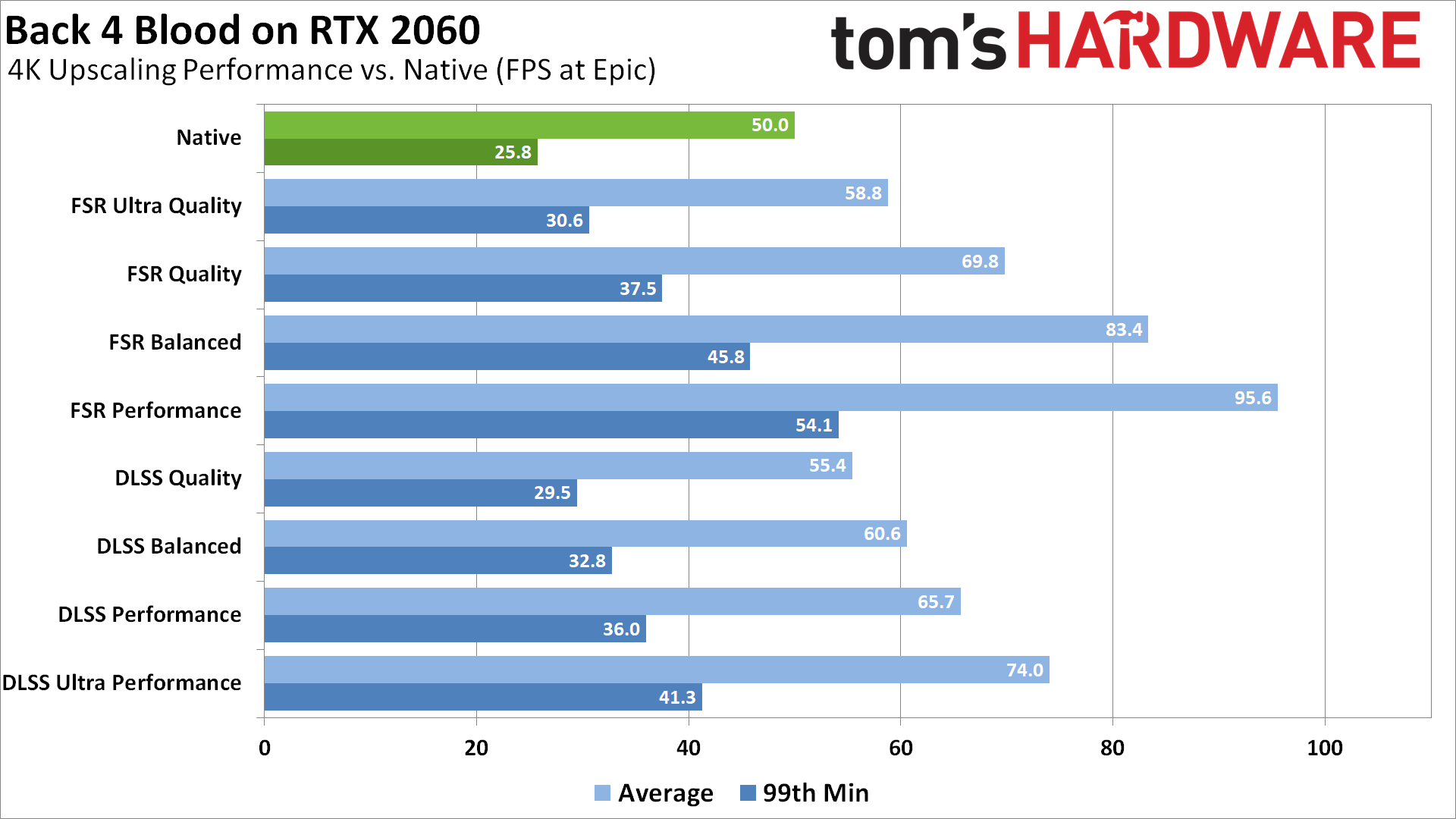

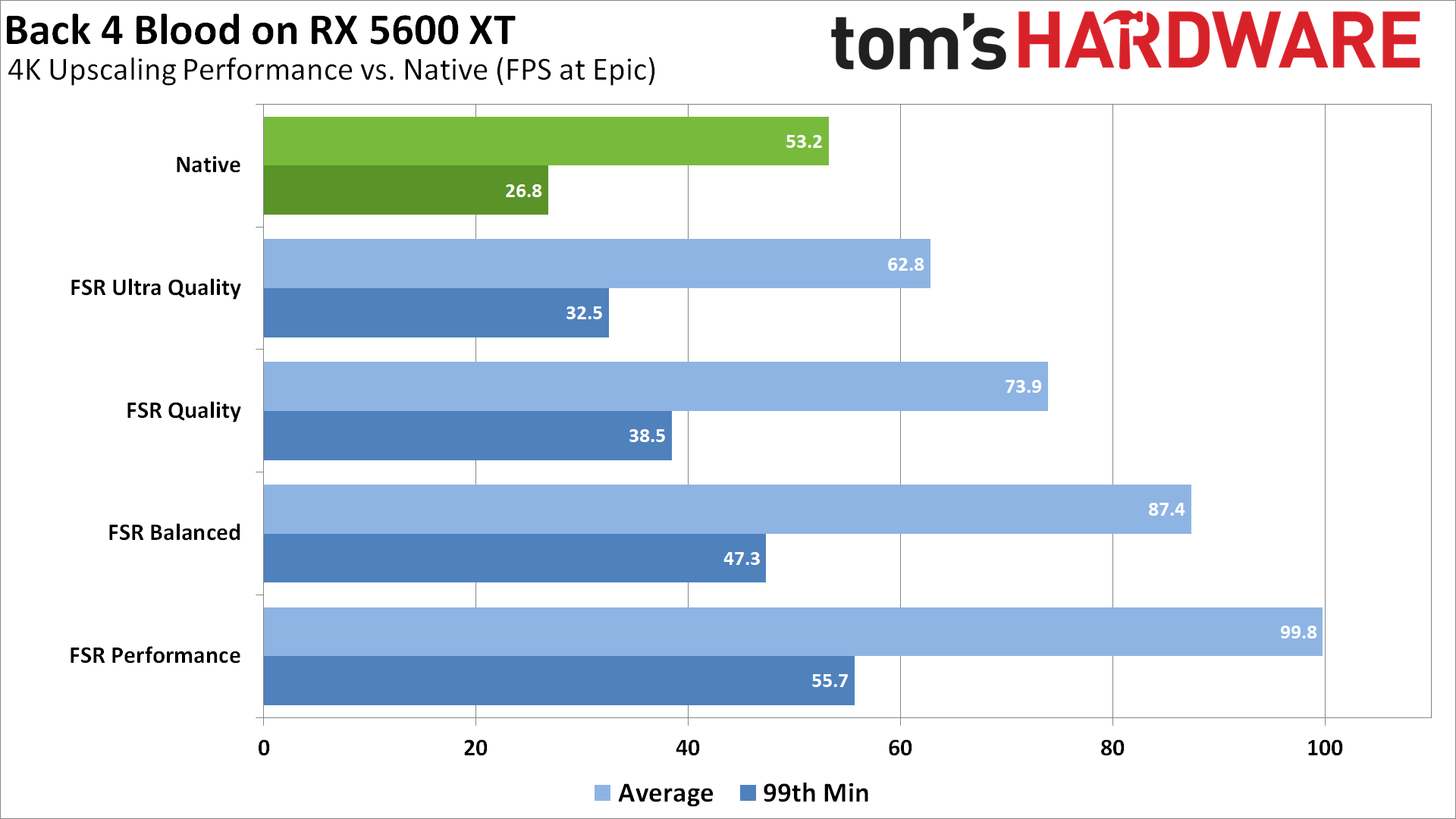

For testing, we're using our standard GPU testbed with a Core i9-9900K CPU, 32GB RAM, and a 2TB M.2 SSD. We used the GeForce RTX 2060 and Radeon RX 5600 XT in Back 4 Blood, since it's the least demanding of the four games and we wanted to look at performance scaling on slightly older and less capable GPUs in that game. The other games we tested with the GeForce RTX 3060 and the Radeon RX 6700 XT. Both have 12GB of VRAM, which generally ensured we didn't run into VRAM limitations. The other three games also support ray tracing, which we enabled for all of our testing.

The RX 6700 XT normally outperforms the RTX 3060 by a decent amount (by 34% in our GPU benchmarks hierarchy), so we're not really comparing AMD vs. Nvidia performance but rather looking at how DLSS and FSR affect performance. However, the use of ray tracing does narrow the gap some, as Nvidia's GPUs generally offer better RT performance. And with that out of the way, let's look at the results from the four games.

DLSS vs. FSR: Back 4 Blood

We have separate AMD and Nvidia charts, since only the Nvidia GPUs support DLSS. Starting with the RTX 2060, even in ultra performance mode, DLSS only improved performance by a bit less than 50%, while FSR in performance mode boosted framerates by over 90%. Each higher upscaling factor in DLSS improved performance by about 10%, with a slightly larger jump going from performance to ultra performance mode.

For FSR, each level of upscaling improved performance by closer to 20% on the RTX 2060. It's possible our use of previous generation hardware was a factor in how much performance improved, but in general Back 4 Blood isn't a super demanding game, so CPU bottlenecks can start to be a factor.

Somewhat surprisingly, the RX 5600 XT showed slightly lower overall scaling compared to the RTX 2060. Framerates improved by up to 87% using performance mode, just a bit lower than the 91% we measured on the RTX 2060 — and most of the other levels are also 1–2% less of an improvement, with the exception being ultra quality mode where both GPUs improved by the same 18%.

At least when looking at Turing and RDNA GPUs, then, FSR doesn't appear to favor AMD's architecture at all. But performance, as we said in the beginning, is only part of the story. Let's take a look at image quality.


[Image gallery: two Back 4 Blood scenes, each captured at Native, FSR Ultra Quality, FSR Quality, FSR Balanced, FSR Performance, DLSS Quality, DLSS Balanced, DLSS Performance, and DLSS Ultra Performance]

You'll want to open up those 4K images and view them at full magnification to see the finer details. We saved them as maximum-quality JPG files, so there shouldn't be any noticeable loss in quality compared to the source PNG files (which were about 14MB each).

There's a minimal loss in visual fidelity going from no upscaling to FSR ultra quality — you can probably spot a few small details that get blurred out, but nothing particularly noteworthy, especially when the game's in motion. FSR quality also looks quite good, while FSR balanced starts to look a bit blurry and finally there's a clear loss in crispness using FSR performance mode. The branches and needles, grass, fences, ground, corrugated metal roof panels, and other elements all look a bit fuzzy using the balanced and performance FSR modes. However, in motion — this is a fast-paced zombie shooter, after all — a lot of those differences become far less noticeable.

Move on to DLSS upscaling, and again there's a minimal loss in detail using quality mode, a bit more fuzziness with balanced mode, and more noticeable blurring and lack of fine details with the performance and ultra performance modes. In motion, you might register the fuzziness during less intense parts of the game, but when you're fighting zombies it's all good. Which is surprising when you consider the 1280x720 source resolution with DLSS ultra performance mode.

If all of the DLSS and FSR games we tested looked and performed like Back 4 Blood, we'd be quite happy. Unfortunately, this is one of the better results for image quality using FSR. It's not clear why some of the other games look noticeably worse, but let's see for ourselves.

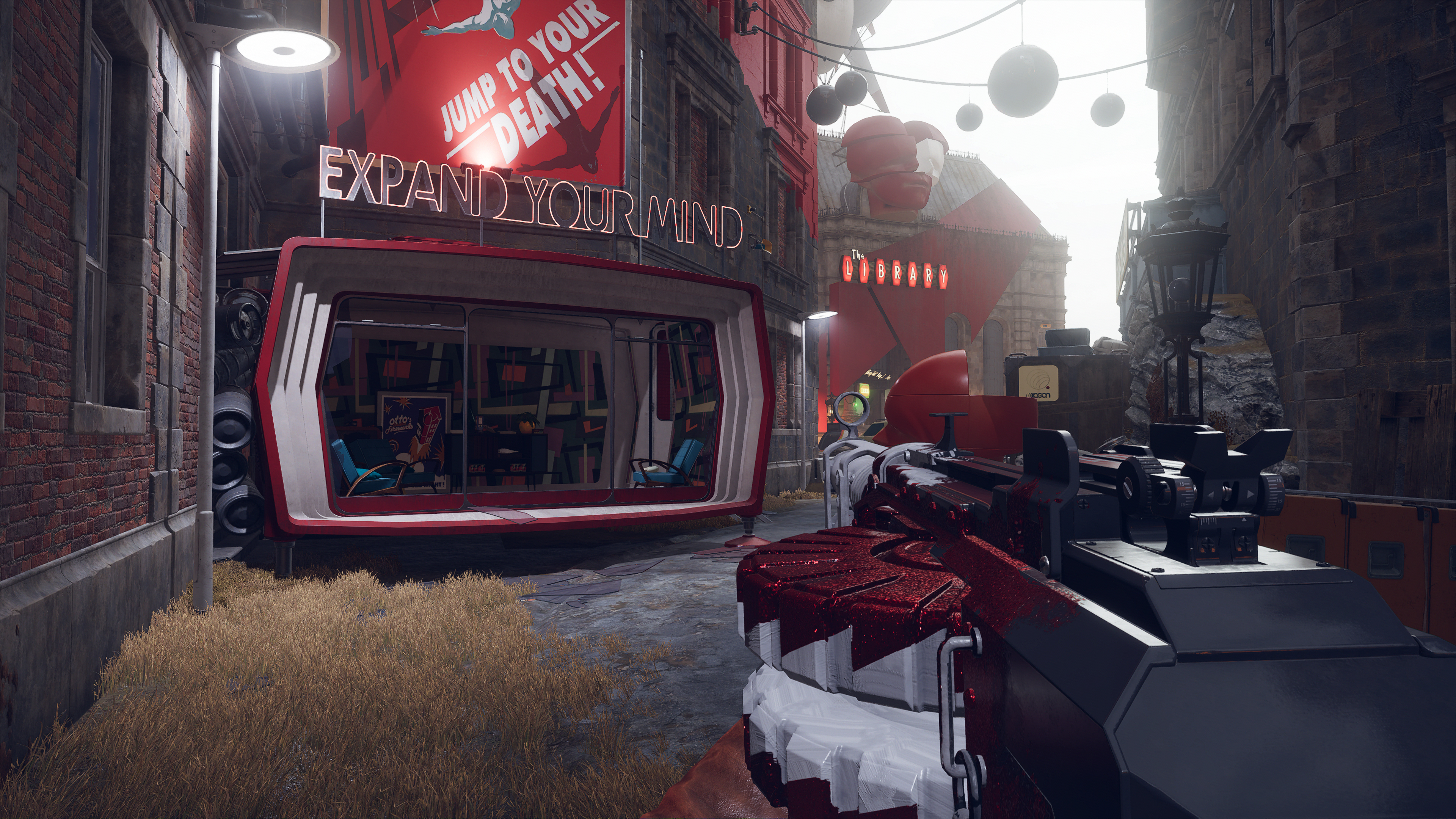

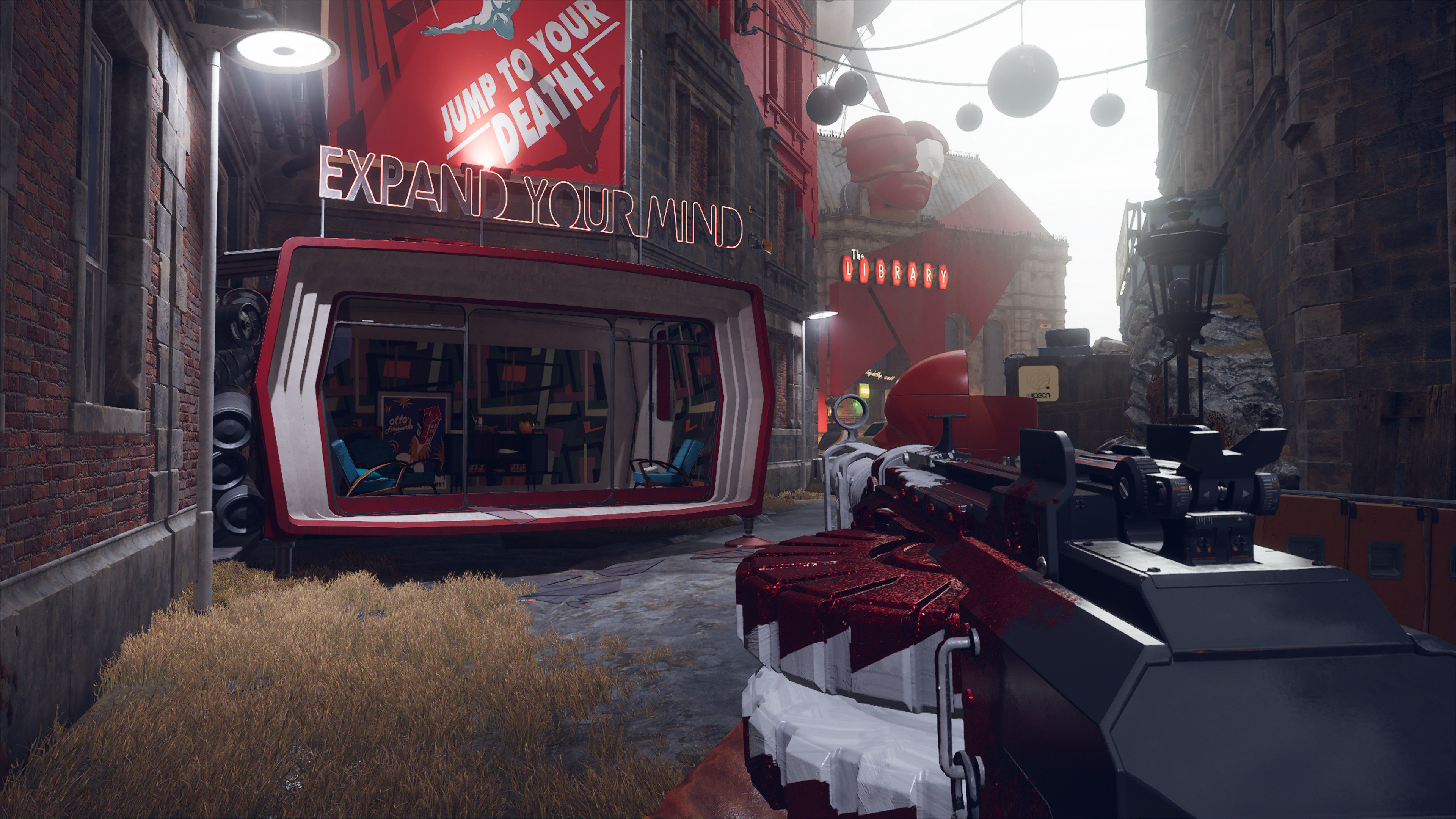

DLSS vs. FSR: Deathloop

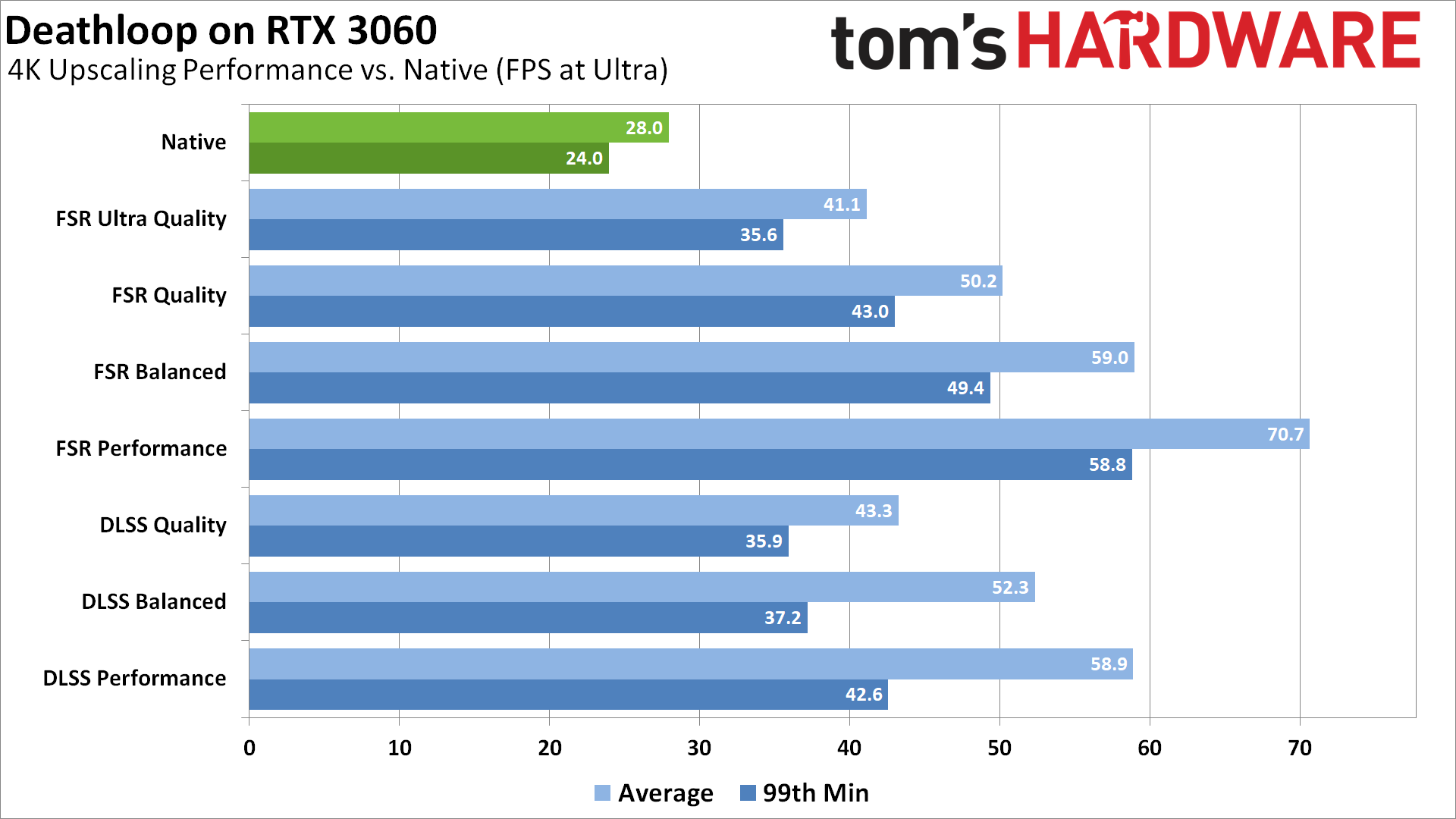

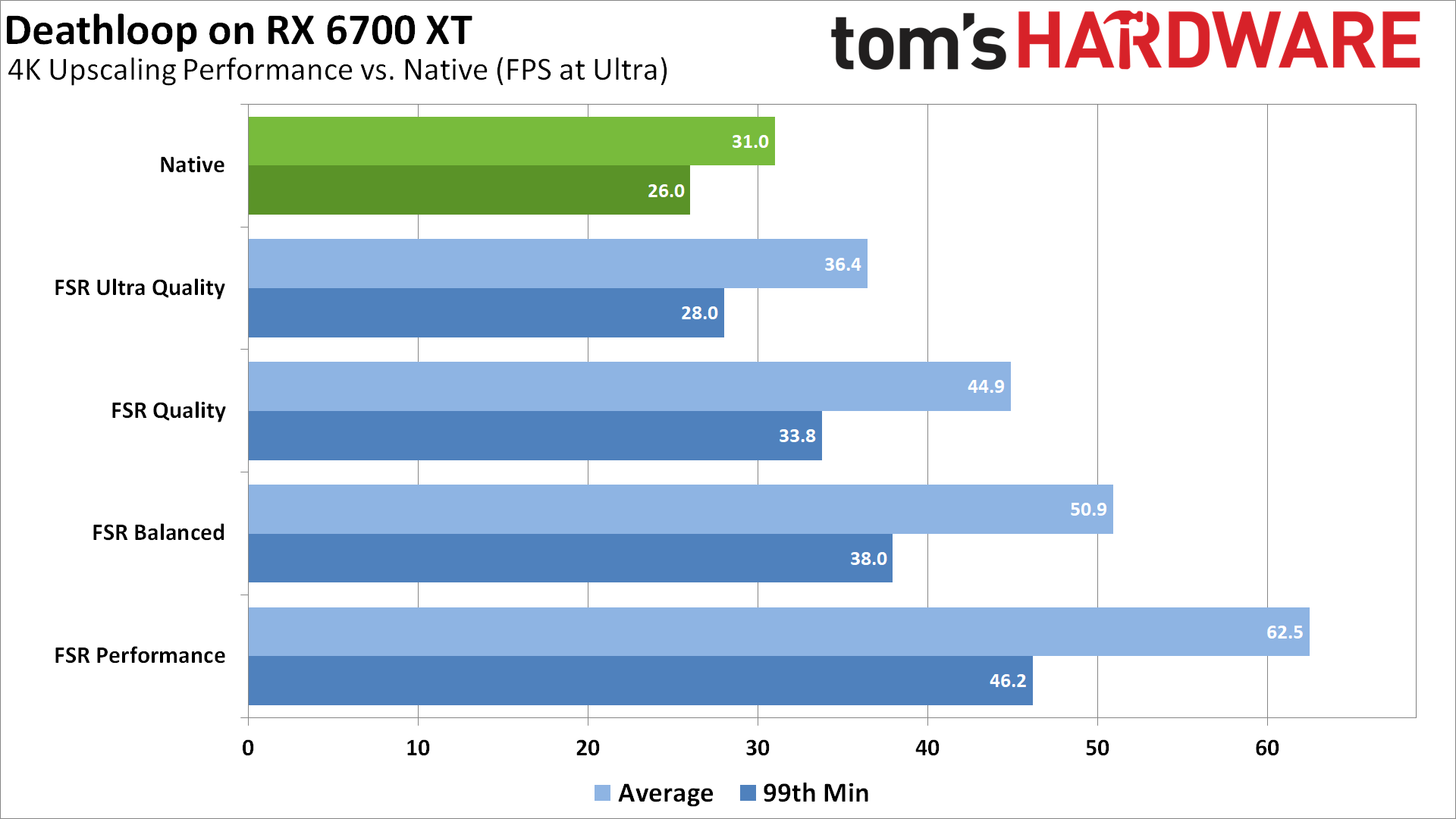

Deathloop was promoted by AMD, but it added DLSS with an update and also changed the 120 fps cap to 240 fps — both welcome changes in our book. DLSS ultra performance mode isn't supported, so that's one less scaling option to choose from this time.

We're using faster GPUs this time, but the performance scaling from the various modes ends up favoring Nvidia quite a bit for some reason. Perhaps it's because we're also using ray tracing, but we still would have expected similar gains from the FSR modes. DLSS performance scaling was also markedly better in Deathloop.

For the RTX 3060, DLSS quality mode boosted framerates by 55%, balanced improved performance by 87%, and performance mode was over twice as fast — 111% faster, to be precise. Sticking with the 3060, FSR ultra quality improved performance by 47%, quality mode gave an 80% increase, balanced was 111% (the same as DLSS performance mode), and FSR performance mode yielded a 153% improvement in fps. Note that the starting point was just 28 fps, however, and only FSR performance mode got the RTX 3060 to a (mostly) steady 60+ fps.

On the RX 6700 XT, the gains were generally quite a bit lower. FSR ultra quality only improved performance by 18%, quality mode bumped that to 45%, balanced mode gave a 64% increase, and performance mode slightly more than doubled performance with a 102% gain. Interestingly, performance at native resolution without FSR was 31 fps, while FSR performance mode ended up at 62 fps, so the RX 6700 XT started out faster than the 3060 but ended up slower. (Note: I may need to retest, just to verify these numbers, because they're a bit odd.)
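The percentages and frame rates above are easy to cross-check. This small sketch uses the rounded fps values quoted in the text, so results can differ by a point or two from the article's figures, which were presumably computed from unrounded measurements (that's an assumption on our part).

```python
def fps_after_gain(native_fps: float, gain_percent: float) -> float:
    """Frame rate after applying a percentage improvement."""
    return native_fps * (1 + gain_percent / 100)


def gain_percent(native_fps: float, upscaled_fps: float) -> float:
    """Percentage improvement going from native to upscaled rendering."""
    return (upscaled_fps / native_fps - 1) * 100


# RTX 3060: 28 fps native, +153% with FSR performance mode.
rtx_3060_fsr_perf = fps_after_gain(28, 153)

# RX 6700 XT: 31 fps native, 62 fps with FSR performance mode.
rx_6700_xt_gain = gain_percent(31, 62)

print(f"RTX 3060 FSR performance: {rtx_3060_fsr_perf:.1f} fps")
print(f"RX 6700 XT FSR performance gain: {rx_6700_xt_gain:.0f}%")
```

The RTX 3060 ends up at roughly 71 fps against 62 fps on the RX 6700 XT, which is the crossover described above: the AMD card starts 3 fps ahead and finishes about 9 fps behind.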

[Image gallery: two Deathloop scenes, each captured at Native, FSR Ultra Quality, FSR Quality, FSR Balanced, FSR Performance, DLSS Quality, DLSS Balanced, and DLSS Performance]

Again, you need to click the enlarge button and access the full-size images to see the details better. This time, unfortunately, the image fidelity comparisons don't do FSR any favors. We've got two sets of images, and there's some clear aliasing present at higher FSR levels on the first set (right below the "Expand Your Mind" sign). But the ultra quality and quality modes can be used pretty much without complaint.

DLSS image quality is interesting because this time there are things that look better with DLSS quality mode than at native — look at the railing above the roof, for example. In fact, DLSS performance mode still looks almost the same as native mode, which is quite impressive.

In the second set of images, look at the rock face of the arch, the writing on signs, and other fine details like the lights on the red arrow sign and you see a very noticeable drop in details even with FSR quality mode, and it only gets worse at the higher upscaling settings. DLSS doesn't look better than native this time, but all three modes look very close to the same.

I do wonder if standing still for a screenshot perhaps benefits DLSS too much. It uses temporal data — meaning, data from previous frames — to help recover lost detail, and it could be that standing still presents a perfect opportunity for it to look nearly perfect. Still, it's when you're not really moving around that the fine details are actually noticeable, and DLSS works wonders in that case.

Based on performance gains, this time DLSS gets the nod. You can play using performance mode at 4K and still get a very good overall experience visually, while FSR boosts performance but causes a noticeable loss in fine details for some areas of the game. FSR ultra quality is still worth a shot, but I wouldn't want to use the balanced or performance modes if I could avoid it.

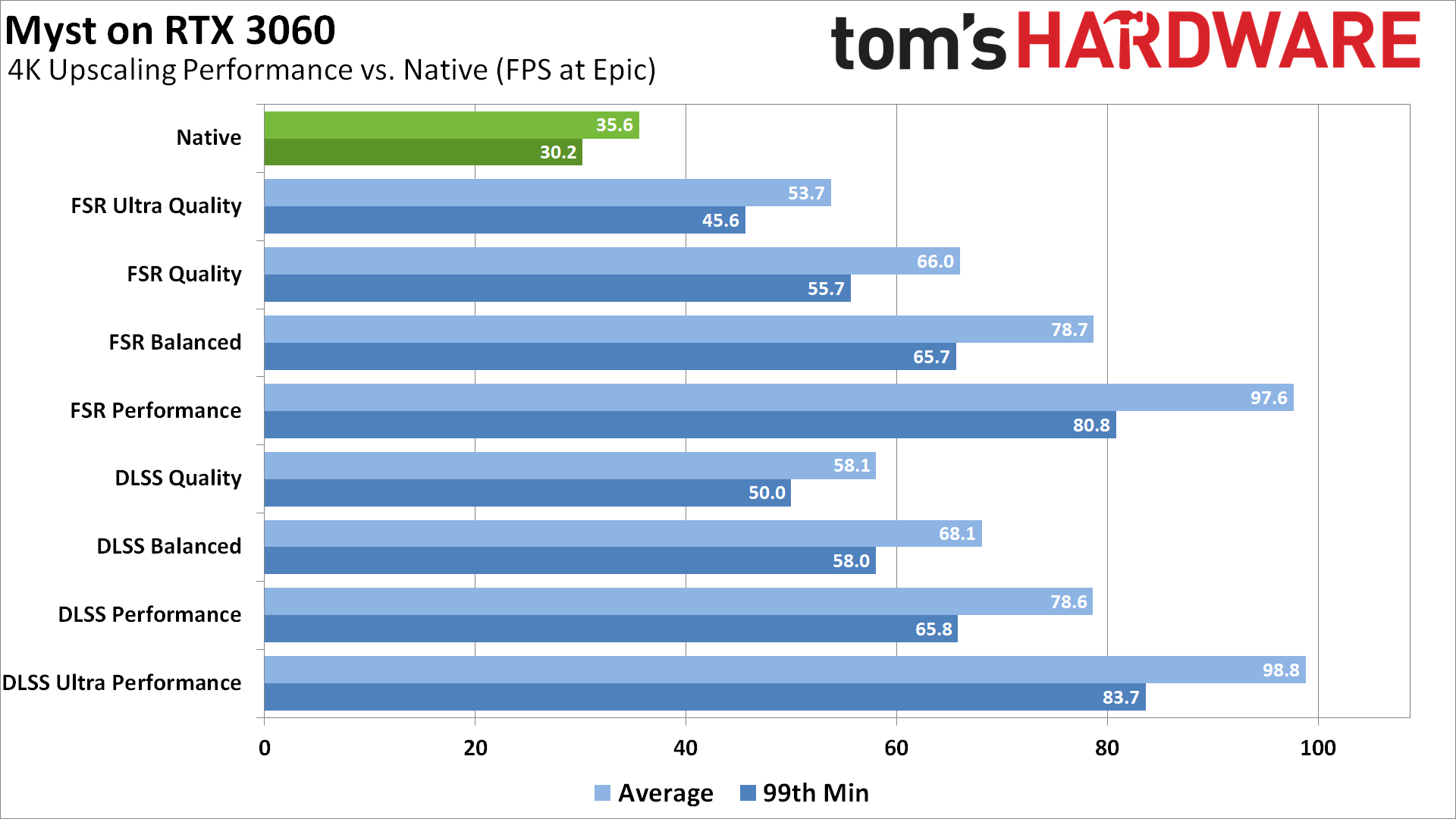

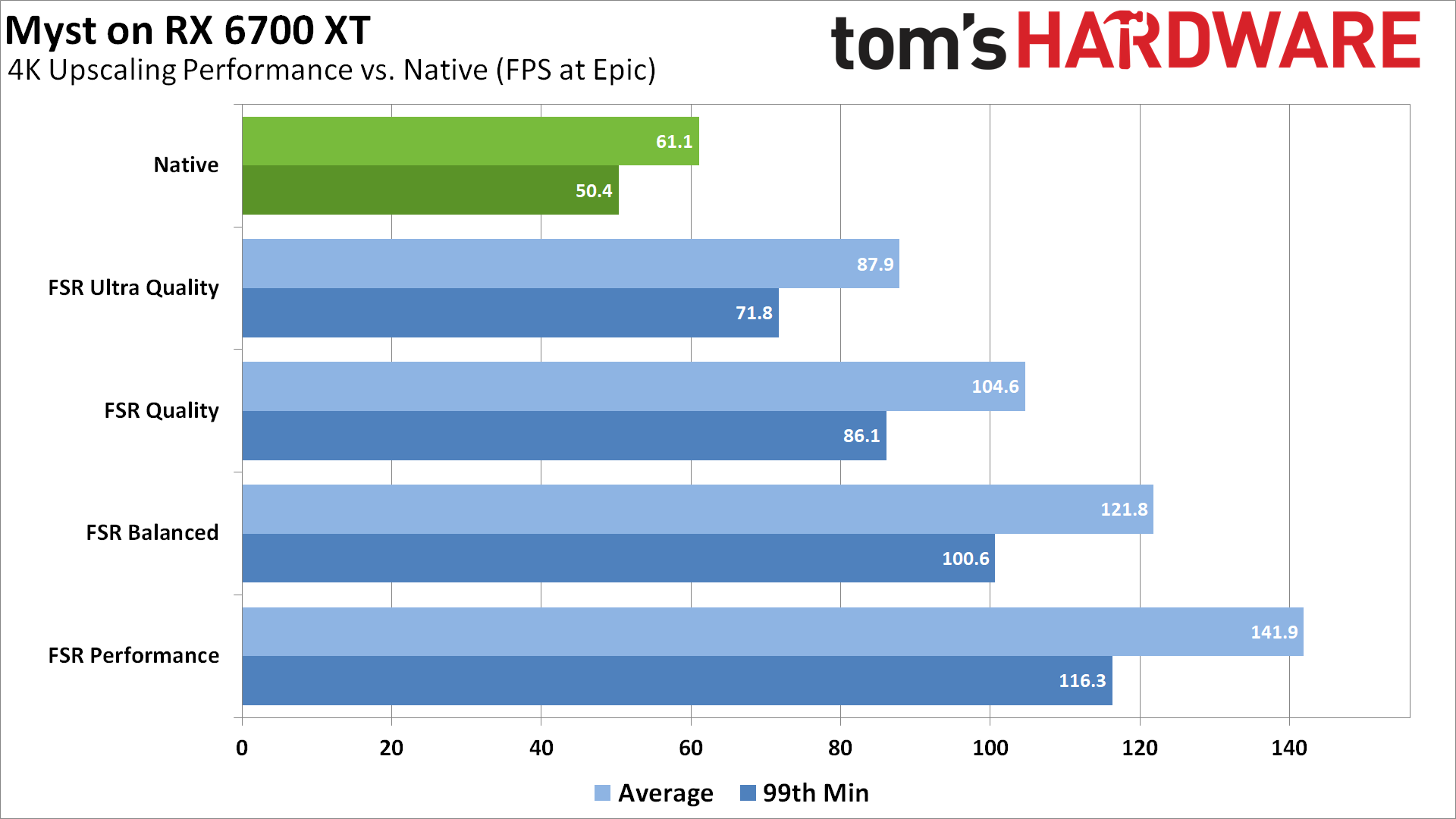

DLSS vs. FSR: Myst 2021

Myst performance improvements are a bit better than what we saw in Deathloop, but still tended to favor the RTX 3060. Ultra quality FSR boosts fps by 51% on the 3060 and 44% on the 6700 XT, quality mode yields an 86% gain on the 3060 vs. 71% on the 6700 XT, balanced was 121% compared to 100%, and performance increased by 175% versus 132%. These are both the latest GPU architectures, so it's interesting that FSR actually appears to run better on Ampere than on RDNA2, at least in some cases.

As for DLSS, ultra performance mode basically matched FSR performance mode with a 178% increase in fps. DLSS performance mode likewise matched FSR balanced mode at 121% higher fps, while DLSS balanced mode gave a 91% improvement and even quality mode boosted framerates by 63%. Myst isn't a fast-paced action game, so high fps isn't really required, but both GPUs easily managed more than 60 fps at 4K with the right upscaling settings — DLSS balanced or FSR quality or higher for the RTX 3060, and the RX 6700 XT got slightly more than 60 fps even with native rendering.

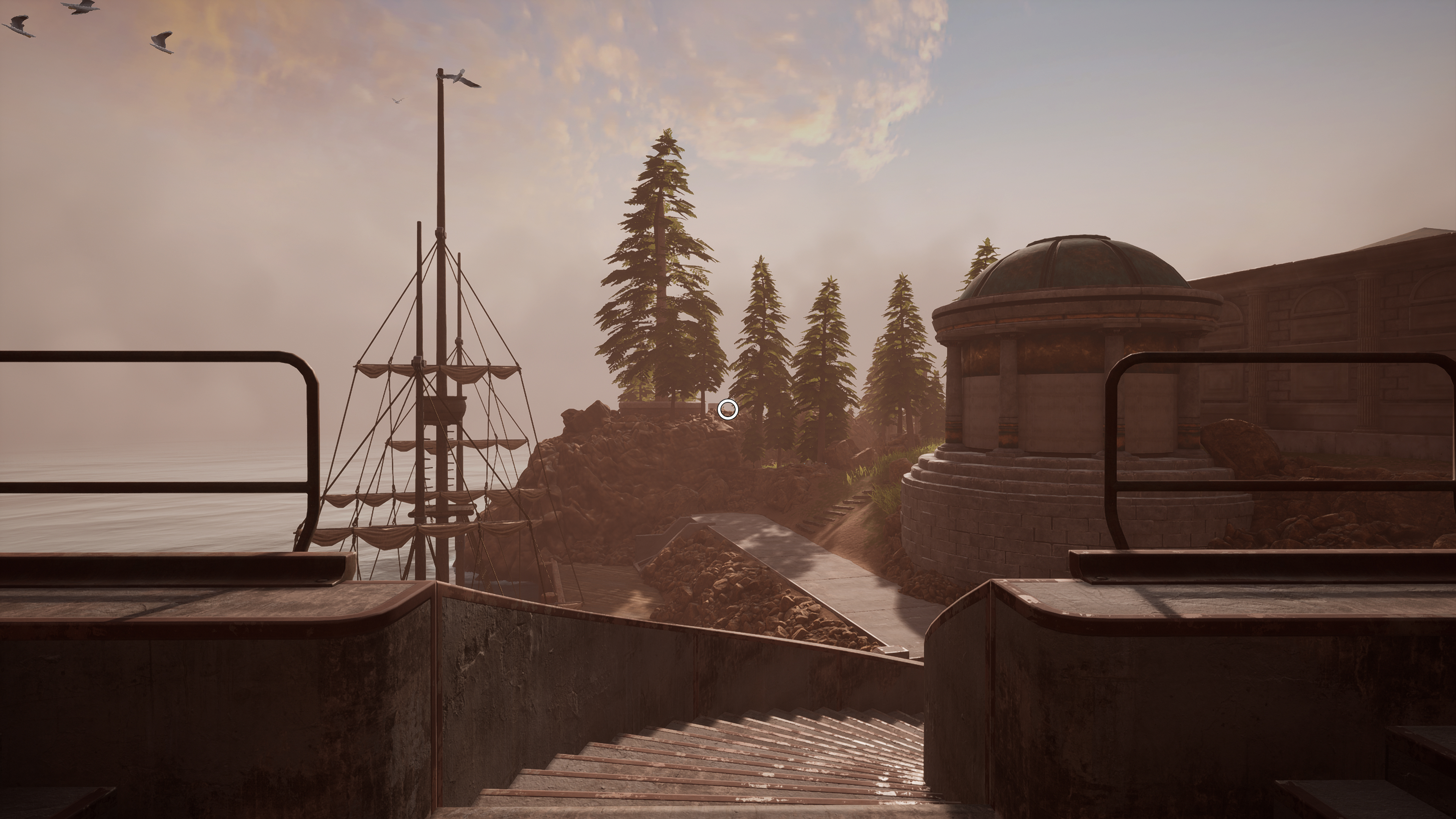

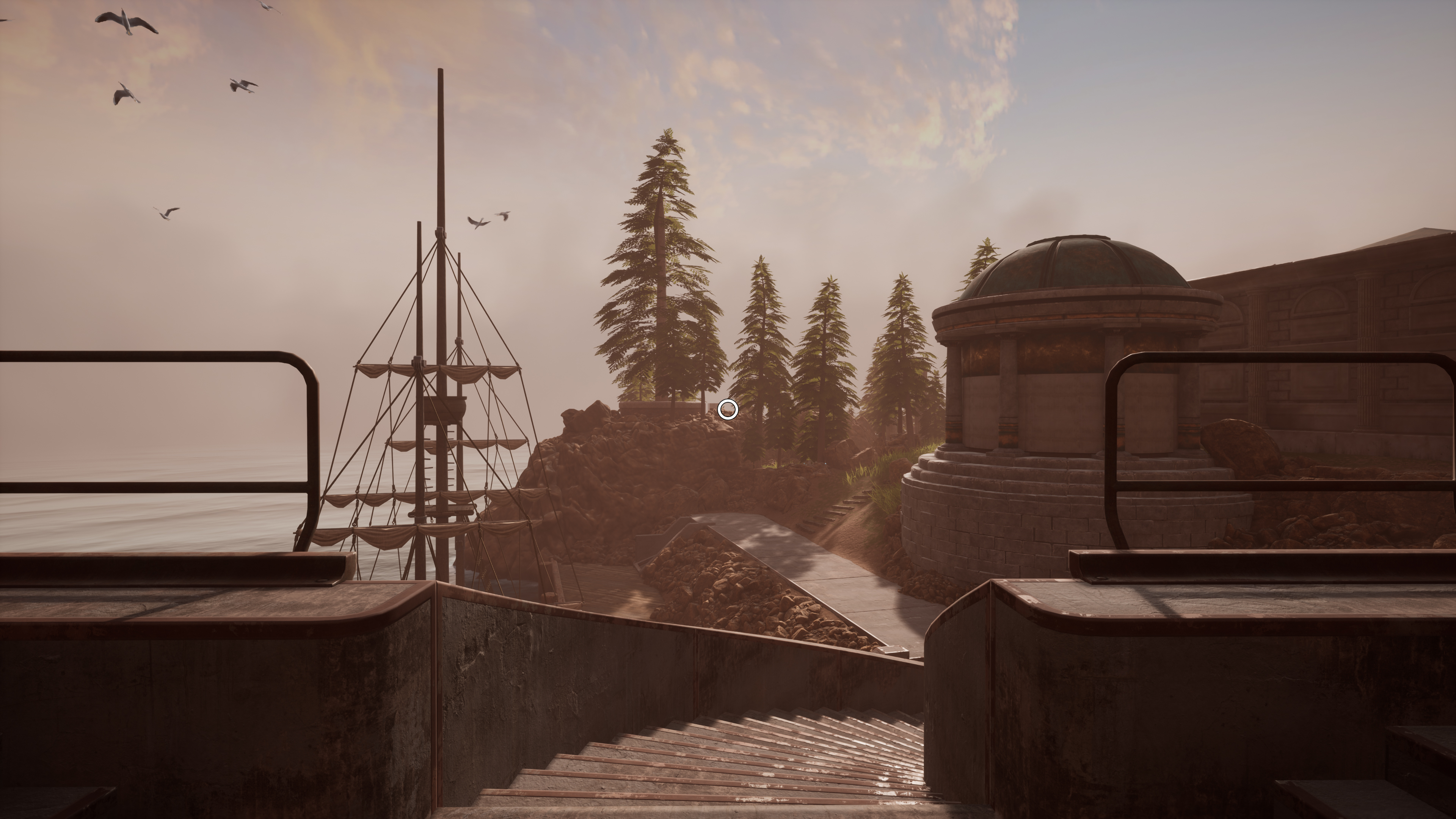

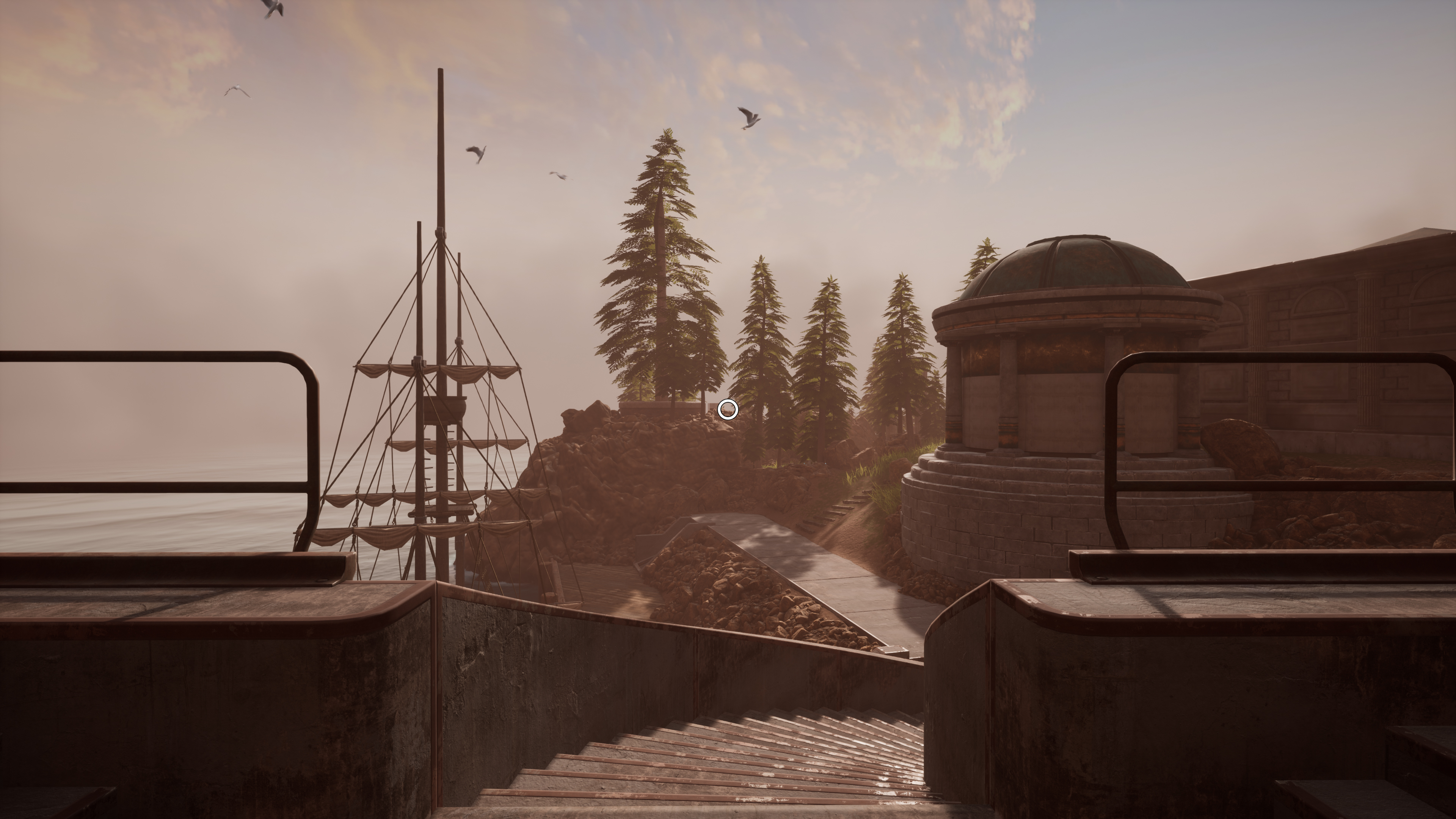

[Image gallery: two Myst scenes, each captured at Native, FSR Ultra Quality, FSR Quality, FSR Balanced, FSR Performance, DLSS Quality, DLSS Balanced, DLSS Performance, and DLSS Ultra Performance]

Image quality again ends up a bit of a blurry mess with FSR at higher scaling factors. It's not awful, but the trees and other details all start to look noticeably worse with the balanced and performance modes. DLSS looks better, at least up until the ultra performance mode.

Our second image test scene ended up being worthless, as you can see from the screen captures. All the swirling fog makes it hard to tell what's changing from upscaling and what looks different from the fog, which is a good illustration of times where you can get away with even FSR performance mode and not really notice the loss of fidelity.

Overall, DLSS looked better and performed well in Myst, but non-RTX users can certainly run FSR in the ultra quality or quality modes and get higher performance for a relatively unobtrusive drop in crispness and detail.

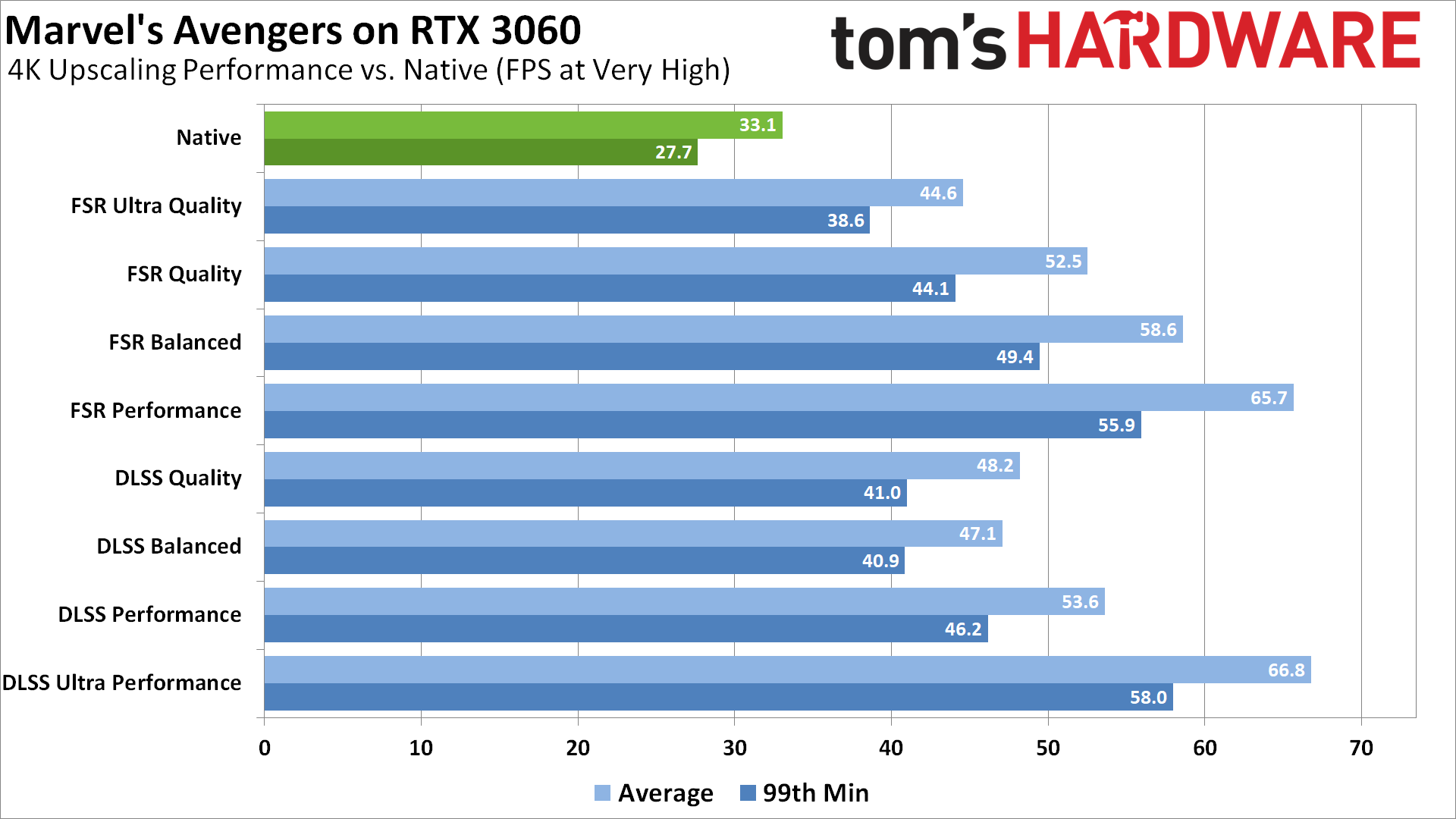

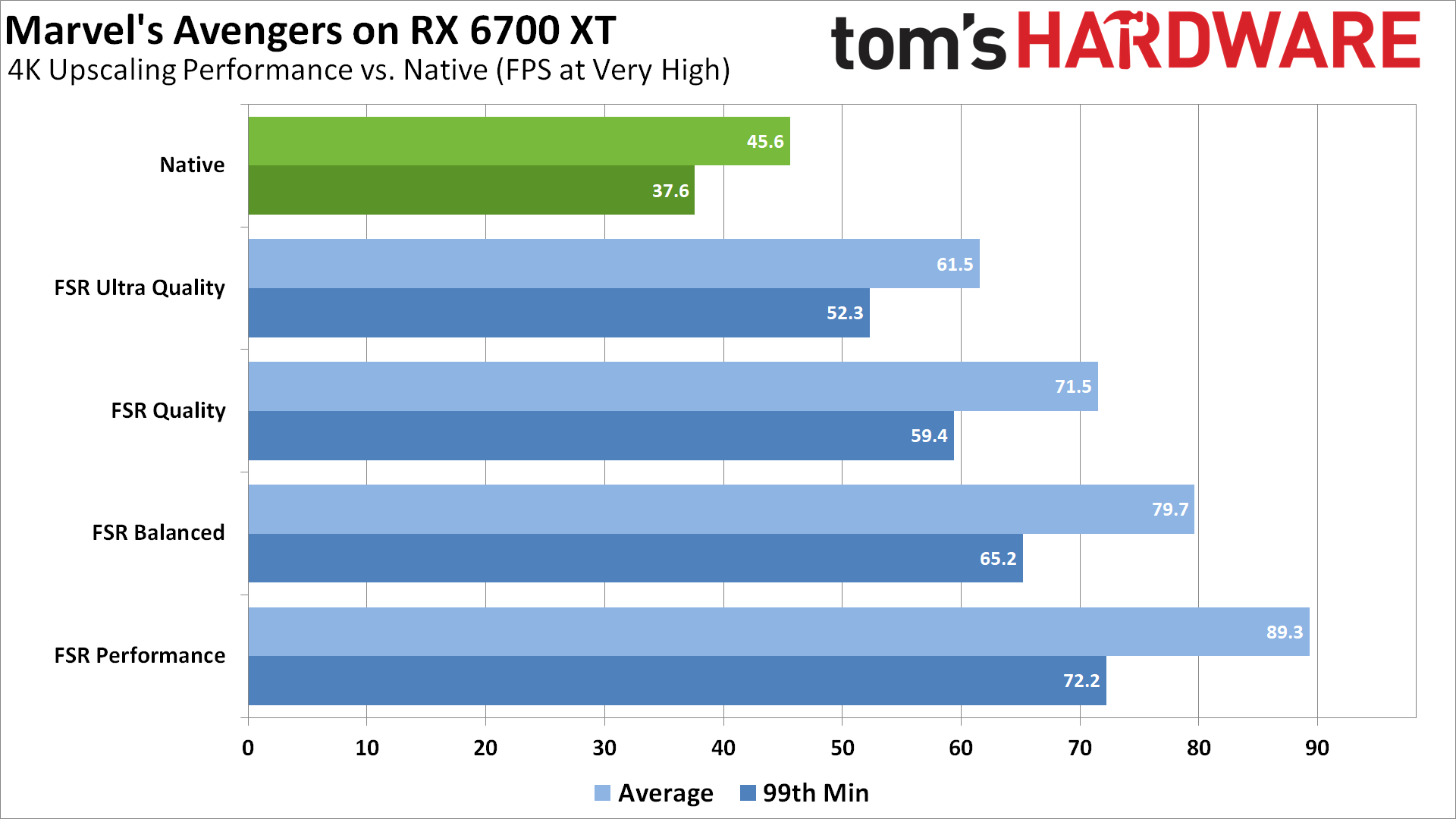

DLSS vs. FSR: Marvel's Avengers

Last, we have the oldest game of the bunch, Marvel's Avengers. It has some oddities that immediately stand out with DLSS, specifically that the balanced and quality modes perform basically the same — actually, quality mode performed slightly better. Other than that one oddity, though, things mostly make sense.

The AMD and Nvidia GPUs we tested were a lot closer in scaling this time, with the biggest difference being just 3% — the RTX 3060 ran 59% faster with FSR quality mode vs. native, while the RX 6700 XT improved by 57% for example.

DLSS ultra performance mode ended up providing the highest performance on the 3060, though again the 9x resolution upscaling was definitely noticeable. Either way, you can potentially double your performance in Avengers with the highest upscaling modes from FSR and DLSS.

[Image gallery: two Marvel's Avengers scenes, each captured at Native, FSR Ultra Quality, FSR Quality, FSR Balanced, FSR Performance, DLSS Quality, DLSS Balanced, DLSS Performance, and DLSS Ultra Performance]

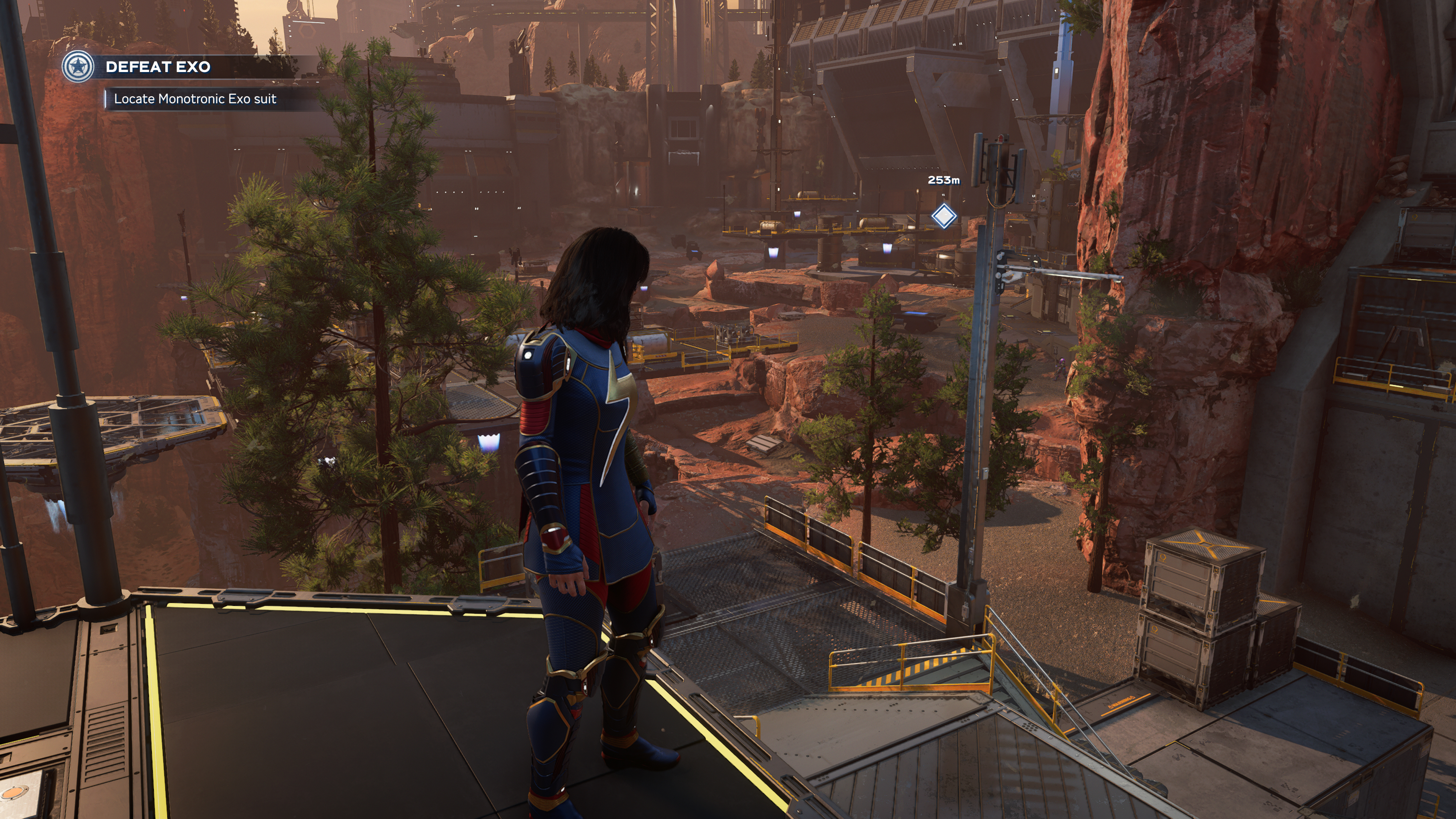

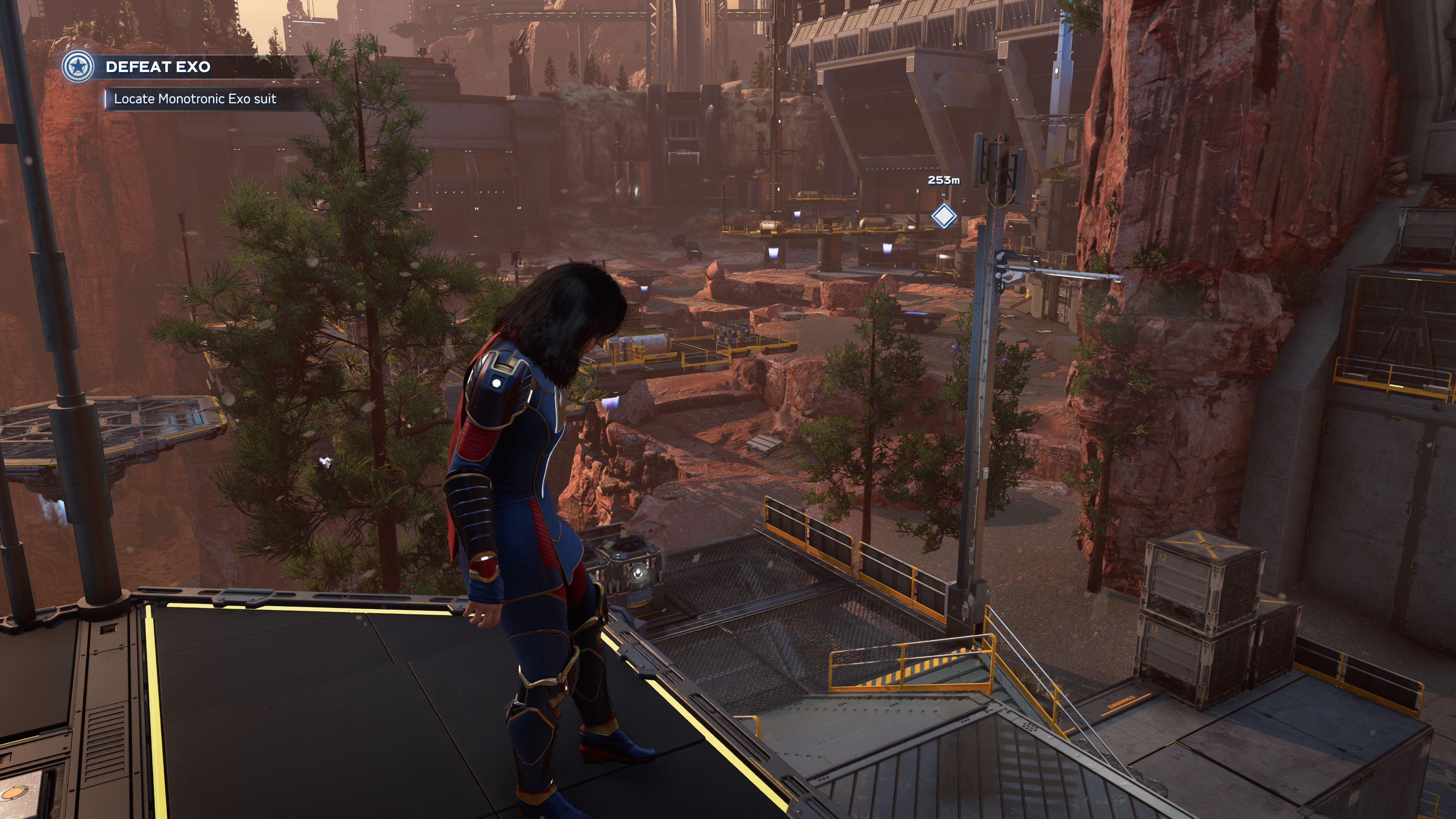

As with the second image set in Myst, there's a lot of vapor and clouds and such swirling around in Avengers, which makes it a bit more difficult to see what changed due to FSR and DLSS, and what changed just because of the weather. DLSS quality mode is visually indistinguishable from native rendering, though, and it's equally difficult to spot any differences between native and FSR ultra quality. FSR performance, on the other hand, certainly looks blurrier. The trees and shrubberies also show more blockiness with FSR than with DLSS.

But when you're in the middle of a fight? Yeah, it all sort of blends together. Which is why so many console games have used upscaling in various forms for years without too many complaints from the gamers.

DLSS vs. FSR, the Bottom Line

If you were hoping to see a clear winner, there are far too many factors in play. DLSS certainly seems to be more capable of producing near-native quality images, especially at higher upscaling factors, but it requires far more computational horsepower and, at times, doesn't improve performance as much as we'd like. More importantly, it's an Nvidia-only technology, and not just that, an RTX-only tech. All the Nvidia users with GTX cards are out of luck, as are AMD card owners and Intel GPU users.

FSR doesn't win the image quality matchup, particularly in higher upscaling modes, but if you're running at 4K and use the ultra quality or quality mode, you'll get a boost in performance and probably won't miss the few details that got lost in the shuffle. FSR performance mode, however, can look pretty bad. In fact, I'd prefer running a game at 1080p and letting my monitor stretch that to 4K rather than using FSR performance mode. Balanced mode likewise should only be used if you're in dire need of improved performance.

The wildcard in this discussion is Intel's upcoming XeSS. It sounds a lot like DLSS, and one of the people who helped design DLSS left Nvidia to go work for Intel. It will supposedly work on most modern GPUs (Intel, AMD, or Nvidia), but how well it works is very much unknown. It will use Intel's version of tensor cores for Arc, and fall back to DP4a calculations for other GPUs. Plus, making a good upscaling algorithm isn't the same as getting it adopted by game developers.

We also didn't get into more traditional forms of upscaling, which quite a few games have been using for years. Temporal or spatial upscaling techniques that only drop performance a few percent exist, and many games even have dynamic scaling where you target your monitor refresh rate and the game adjusts the resolution on the fly. It may not look quite the same, but there are a lot of times where you won't even notice or care.

In terms of adoption rates, Nvidia DLSS still leads AMD FSR, thanks to its lengthy head start. AMD FSR has been announced or released in at least 27 games at present, while Nvidia DLSS is now available in at least 119 games (though many on Nvidia's DLSS list are lesser known indie games). With Unreal Engine and Unity both supporting DLSS and FSR now, though, we expect we'll see plenty more games incorporating both technologies going forward — and maybe even XeSS as well.

Ultimately, games and game developers now have several choices available, and that's a good thing. As shown here, adopting one of the technologies doesn't preclude supporting the other. Over time, we hope to see continued improvements in both algorithms, and it's something we'll continue to monitor going forward.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

-Fran- This is not only the most important conclusion that needs to be drawn from this, but hopefully the only one: "Ultimately, games and game developers now have several choices available, and that's a good thing".

For all everyone can argue or fanboi or pit one tech against another, the truth of the matter is that if these technologies are pushed to compete with one another and they can be used widely (i.e: no vendor lock), then everyone wins; maybe even AMD, Intel and nVidia.

Personally, I still find them stupid as up-scaling means you just need a better card or lower details. But as with everything, this is just another voluntary toggle that's there for you to use if you find it works for you.

Regards. -

JTCPingas Tom's Hardware failed to mention that FSR is open source. That alone is a big win over DLSS. -

Alvar "Miles" Udell Something I can't wait to see is how Intel's XeSS compares with DLSS and FSR in terms of performance and quality, as well as game compatibility. Three different approaches mean the potential for a large performance delta between three cards of on paper equal power. -

Blacksad999 (replying to hotaru251, who said: "wasnt that just a modified version of FSR?")

It's a similar upscaling method to DLSS, using AI to "intelligently" fill in the gaps, rather than the FSR method, which is Lanczos with edge detection. -

Alvar "Miles" Udell (replying to hotaru251, who said: "wasnt that just a modified version of FSR?")

Nope, and Intel said it will eventually be open sourced as well, but it will have two modes, one for cards with AI processors and one without. If it all works out it's possible AMD FSR will be quickly buried and forgotten, leaving AMD to be a follower yet again instead of a leader.

Here is Intel’s Spectacular Xe SuperSampling (XeSS) Demo In 4K Quality (wccftech.com)

XeSS Is Intel’s Answer to DLSS, and It Has One Big Advantage | Digital Trends -

JarredWaltonGPU (replying to JTCPingas)

No, it's in there! (Or at least, it is now. :sneaky:) Note that the linked DLSS and FSR articles definitely go into more detail about how each works, as the focus here was mostly on performance and image quality comparisons. -

Squidkid I don't know if my eyes are just still in good condition but fsr is sooo blurry it just looks like youve lowered the resolution and then used reshade.

It's not even a competition between the two dlss is far superior with almost sometimes better than native image quality.

Fsr was just a gimmick to compete with Nvidia as amd knew they had lost with ray tracing it's like the no frills version.

I don't mind using dlss in games but I don't think I'd ever use and play a game with fsr just loses way to much quality would rather spend more money and buy a faster card. -

JarredWaltonGPU (replying to Squidkid)

DLSS definitely looks better in most of the games I've tried as well. The thing is, FSR works on pretty much everything. If you're on an old GTX 1060 and struggling to get above 60 fps, FSR can probably get you there. And for games where you only need an extra 5-10 fps, you can use the ultra quality mode and likely never really notice. But the problem is FSR (and DLSS) aren't generally used on older games, and only about a third maybe of new games. Which means you'll have to use lower resolutions and just do it the old fashioned way. I'm still hopeful FSR can improve in quality over time, or maybe XeSS will end up as a universal upscaling solution with quality matching DLSS while working on all modern GPUs. One can dream, right?

Also a fun aside: Serious image upscaling and enhancement that doesn't result in more noticeable image quality losses is certainly possible. The AMD and Nvidia images used in the article were upscaled using BigJPG.com, and the results are quite good. It also took about a minute to get the final result, but the source images were only 720p for AMD and 510p for Nvidia. And if you go look now, you'll see the denoising results of the upscaling, but it's still better (IMO) than the upscaling you get with Photoshop. I really hope the next iteration of Photoshop adds a high-end AI trained upscaling algorithm! Deep Image is the one to beat right now. Give me that level of quality at 60 fps and I'd be super impressed. 🙃 -

Sleepy_Hollowed Like others have mentioned, the AMD version has an edge just because it's Open Source and not only will it get better over time, but it's free to use for developers, which will help many smaller budget games use it instead of DLSS unless there's no tools to implement it easily.