Tested: Nvidia GPU Hardware Scheduling with AMD and Intel CPUs

Putting new Nvidia drivers to the test


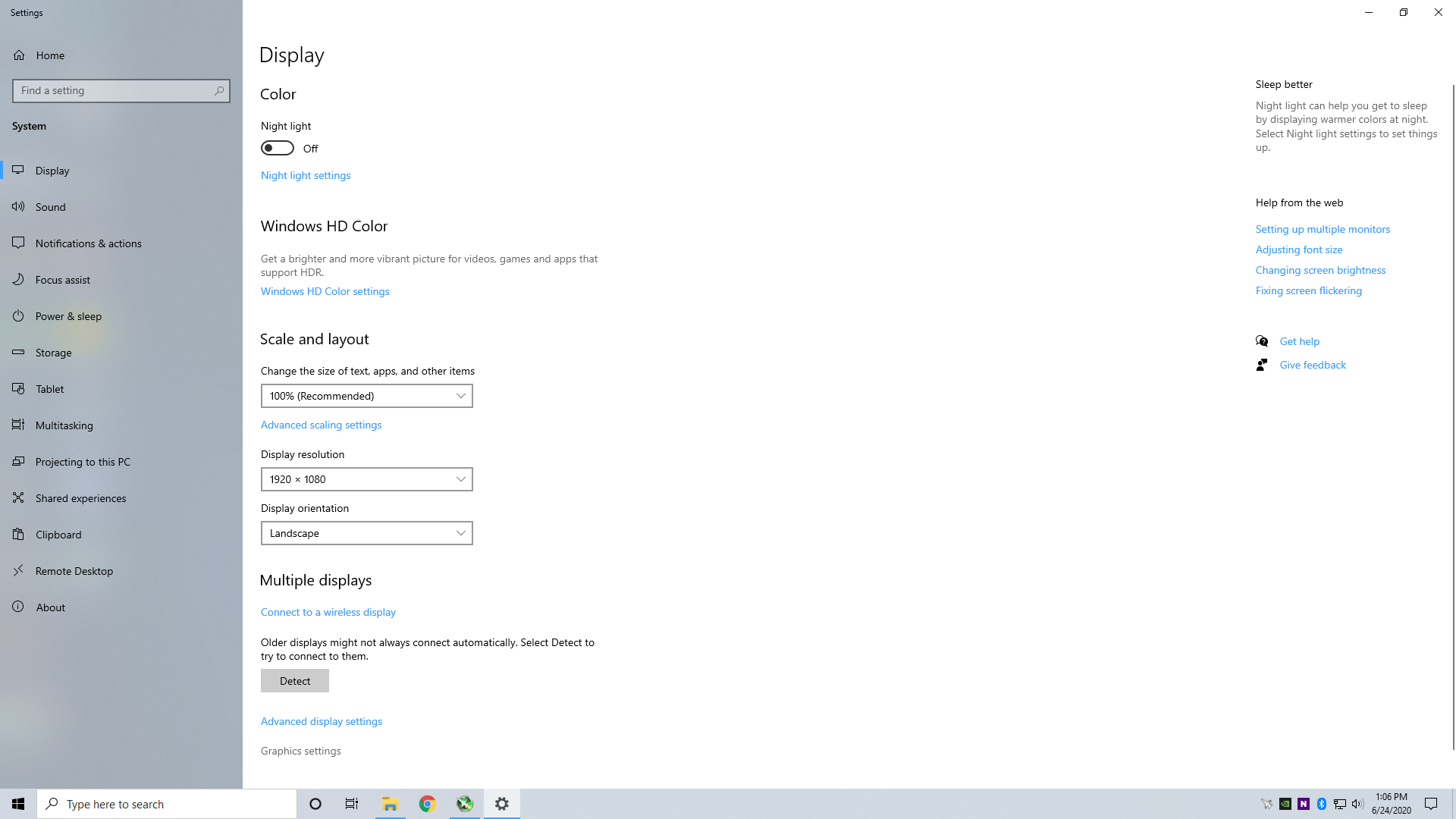

Nvidia just released its latest 451.48 drivers for Windows PCs. These are the first fully-certified DirectX 12 Ultimate drivers, but they also add support for WDDM 2.7—that's Windows Display Driver Model 2.7. New to Windows 10 with the May 2020 update, and now supported with Nvidia's drivers, is hardware scheduling. This new feature shows up in the Windows display settings, at the bottom under the Graphics Settings, provided you have a Pascal or later generation Nvidia GPU. Could this help the best graphics cards perform even better and maybe shake up the GPU hierarchy? Probably not, but we decided to find out with empirical testing.

First, it's important to note that this is not a new hardware feature but rather a new API feature. GPUs, at least as far as we understand things, have been able to support GPU hardware scheduling for some time. The description from Microsoft is vague as well.

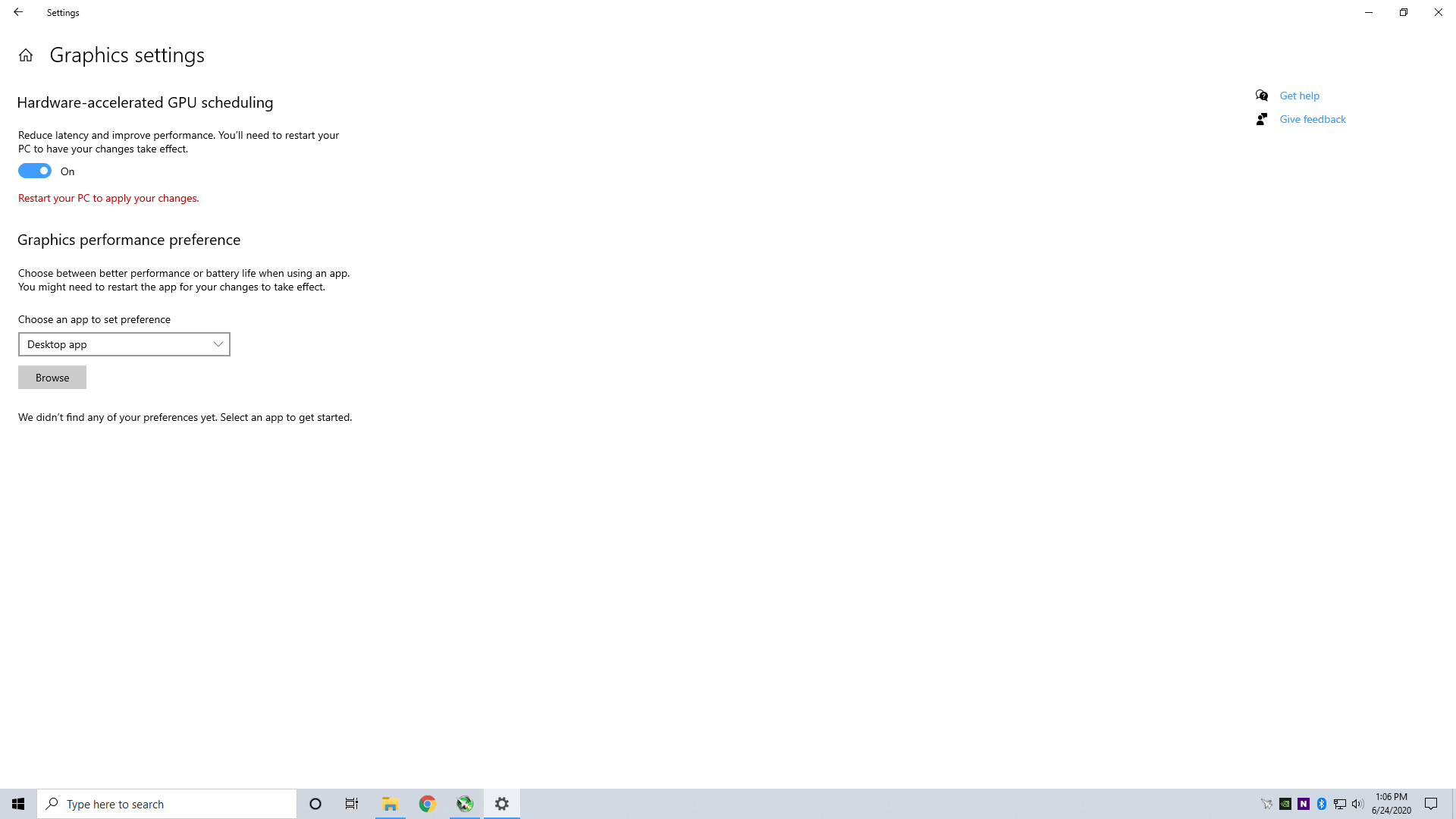

Enabling the feature is simple. In the Windows Settings section, under Display, you can click "Graphics settings" to find the toggle. It says, "Reduce latency and improve performance. You'll need to restart your PC to have your changes take effect."

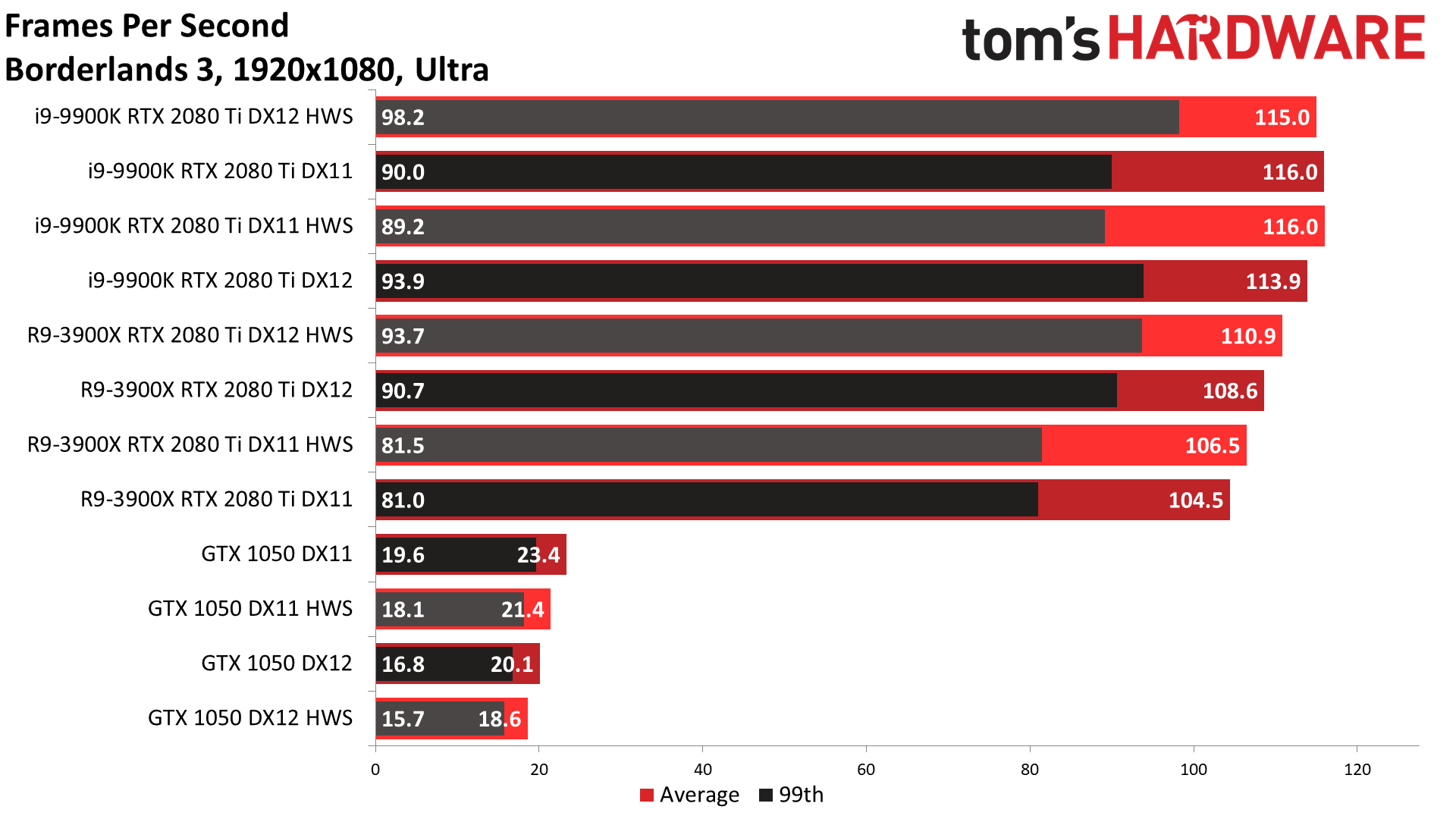

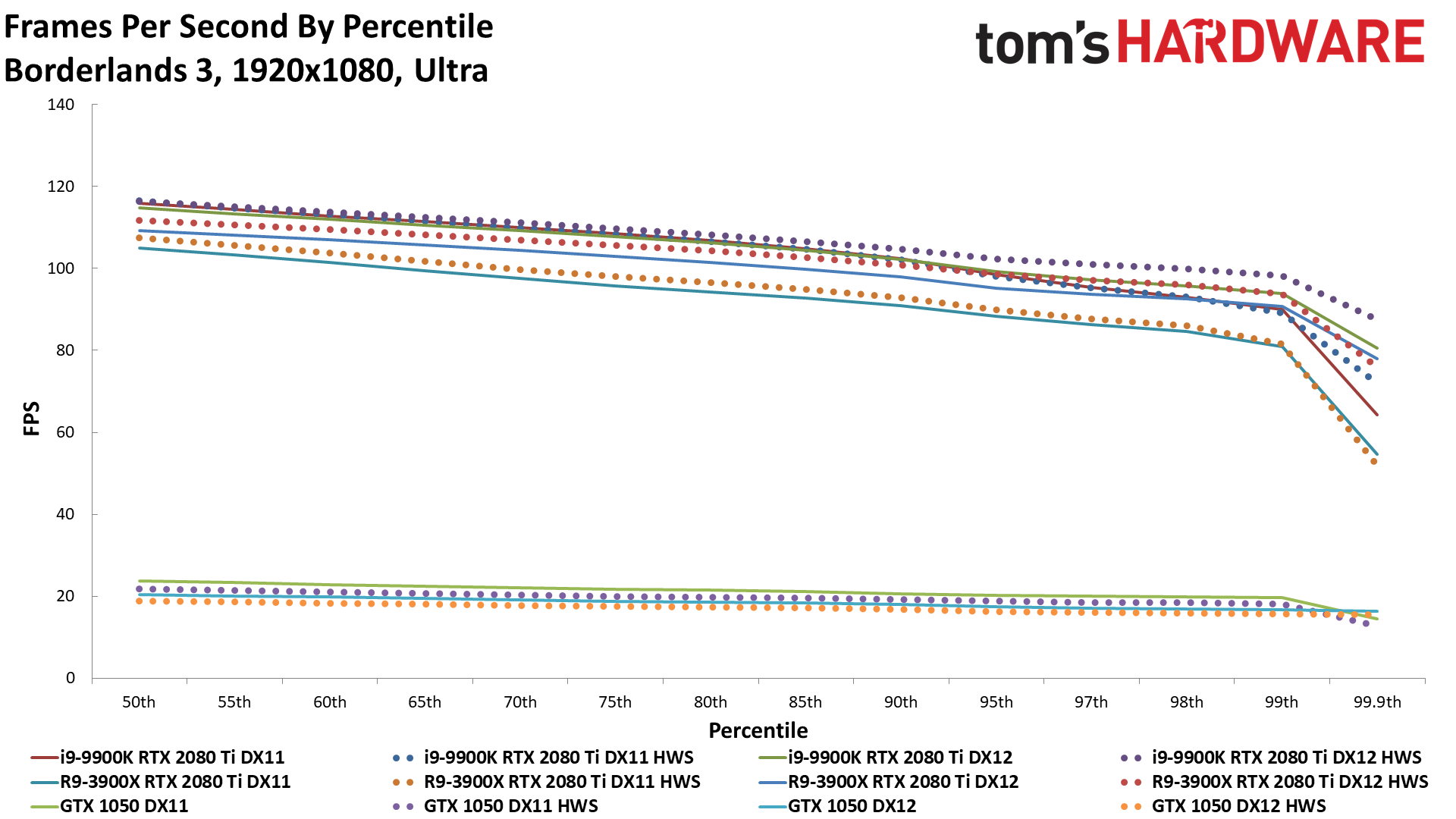

Information circulating on the web suggests GPU hardware scheduling could be quite useful, but we decided to investigate further. We selected five games out of our current GPU test suite, then ran benchmarks with and without hardware-accelerated GPU scheduling enabled on a few test configurations. We used an RTX 2080 Ti as the main test GPU, and ran the benchmarks with both Core i9-9900K and Ryzen 9 3900X. Thinking perhaps a low-end GPU might benefit more, we also went to the other extreme and tested a GTX 1050 card with the 9900K.

Obviously there are a ton of potential combinations, but this should be enough to at least get us started. We also tested every API that each game supports, just for good measure. All testing was conducted at 1920x1080 with ultra settings, except for Red Dead Redemption 2 on the GTX 1050, which had to use 'medium' settings to run on the 2GB card. We ran each test three times, discarding the first run and then selecting the best result of the remaining two runs (after confirming that those two runs performed similarly, which they did).
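As a rough illustration of that run-selection rule, here is a minimal Python sketch. The function name `pick_result`, the 3% consistency tolerance, and the sample fps values are all assumptions made for the example; the article does not state how "similar" was judged or which tooling was used.

```python
def pick_result(runs, tolerance=0.03):
    """Discard the first run, then return the best remaining result.

    `runs` is a list of average-fps values in the order they were recorded.
    `tolerance` (hypothetical, 3% by default) is the largest relative gap
    allowed between the kept runs before they are flagged as inconsistent.
    Returns a tuple: (best_fps, runs_are_consistent).
    """
    if len(runs) < 2:
        raise ValueError("need at least two runs: one to discard, one to keep")
    kept = runs[1:]                      # the first run is dropped
    best, worst = max(kept), min(kept)
    consistent = (best - worst) / best <= tolerance
    return best, consistent


# Hypothetical fps values for three passes of one benchmark.
best, consistent = pick_result([101.0, 97.5, 98.2])
print(best, consistent)
```

If the consistency flag comes back false, the sensible response is to rerun the benchmark rather than report the best number.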

Spoiler alert: the results of GPU hardware scheduling are mixed and mostly much ado about nothing, at least in our tests. Here are the results, with charts, because we all love pretty graphs.

Borderlands 3 showed a modest increase in minimum fps on the RTX 2080 Ti using the DirectX 12 (DX12) API, while performance under DX11 was basically unchanged. The Ryzen 9 3900X showed improved performance with both APIs, of around 2%—measurable, but not really noticeable. The GTX 1050 meanwhile performed worse with hardware scheduling (HWS) enabled, regardless of API. The lack of a clear pattern is going to be the only 'pattern' it seems.


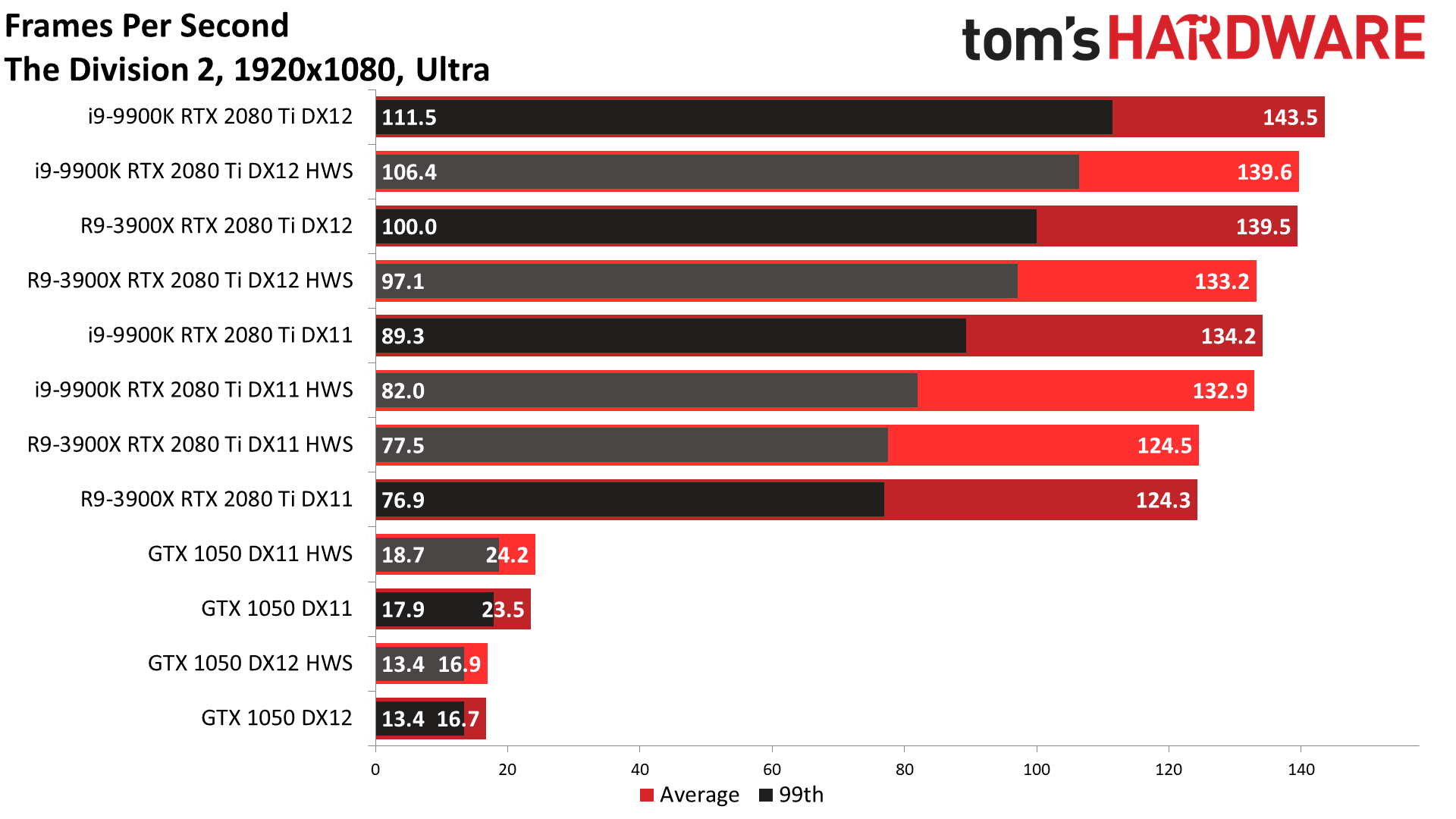

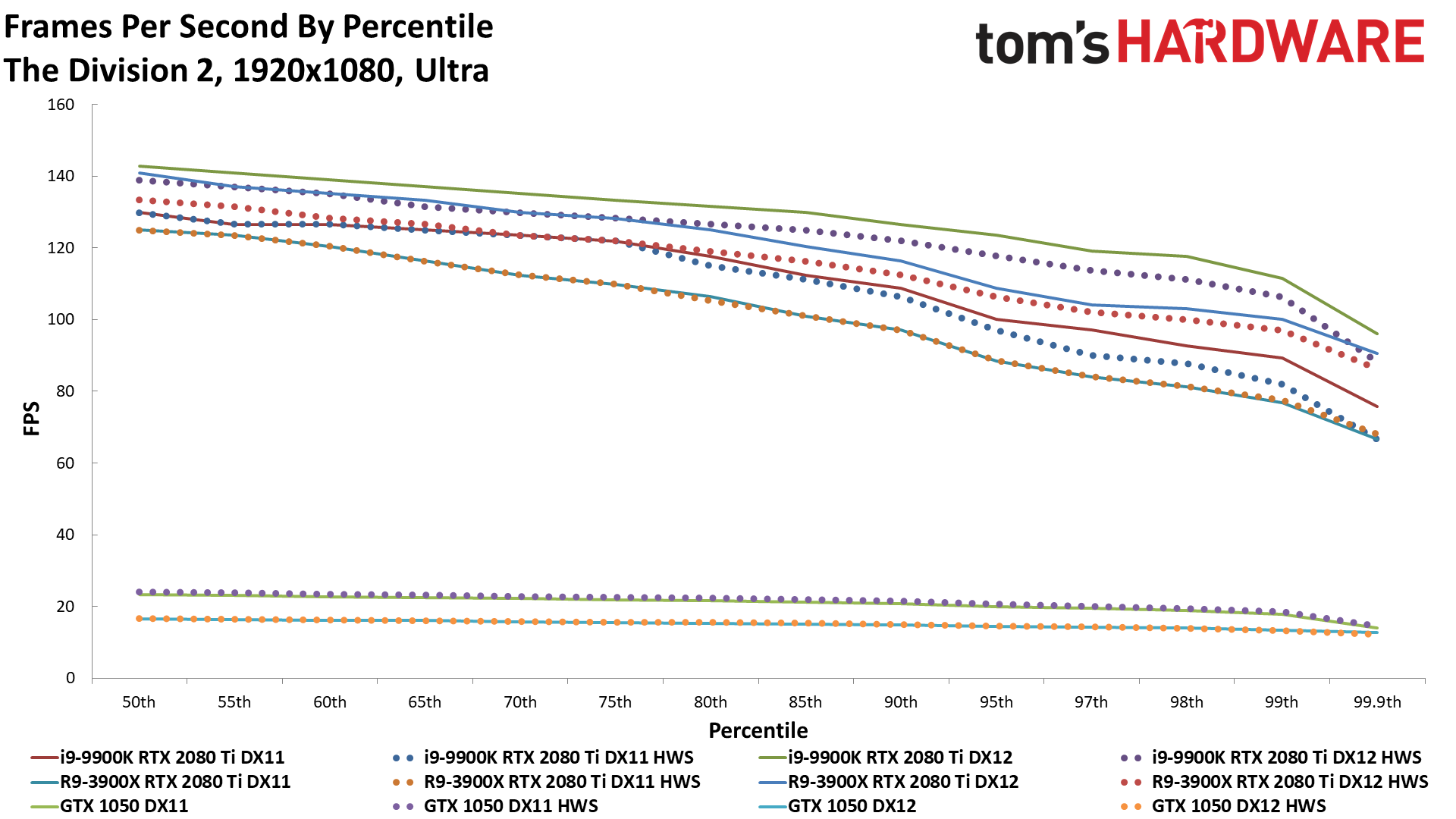

The Division 2 basically flips things around from Borderlands 3. Hardware scheduling resulted in reduced performance for both the 9900K and 3900X under DX12, and made little to no difference with DX11. Meanwhile, the GTX 1050 shows a very slight improvement with hardware scheduling, but not enough to really matter—it's about 3% faster, but at sub-30 fps.

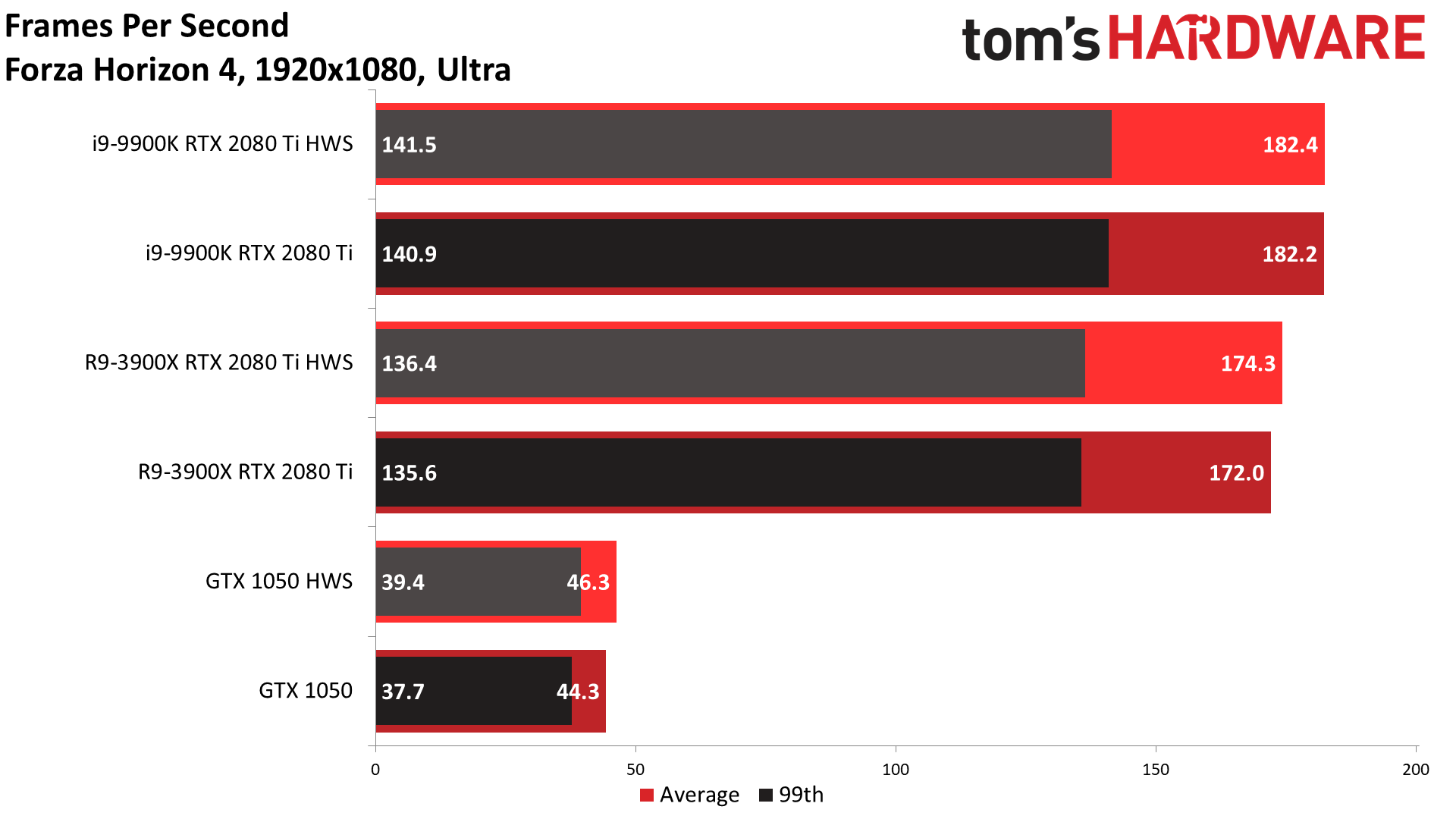

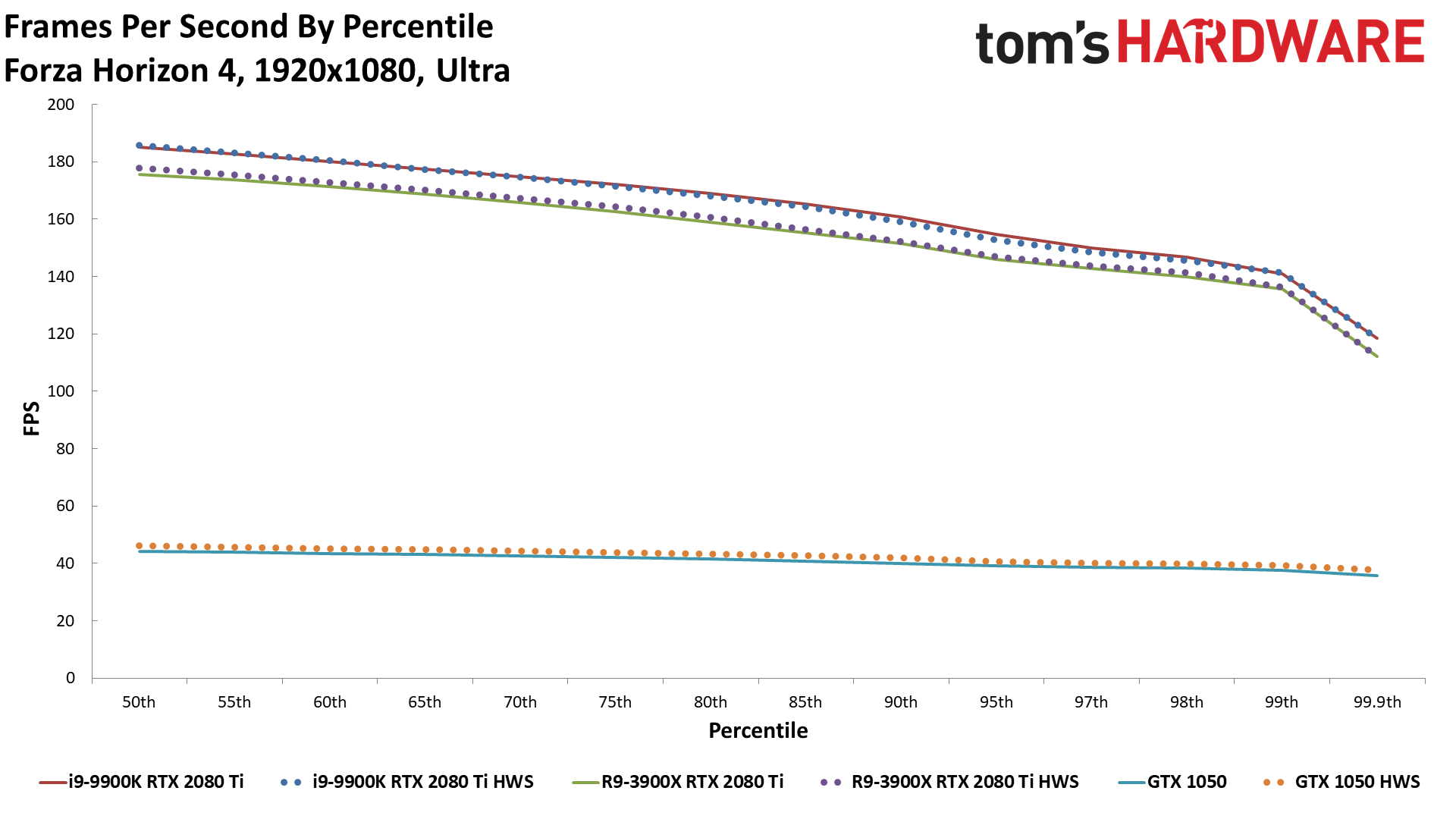

Forza Horizon 4 simplifies things a bit, since it only supports the DirectX 12 API. This time, we measured very slight improvements with the 3900X and a slightly larger boost with the GTX 1050, while the 9900K with the 2080 Ti had basically the same performance.

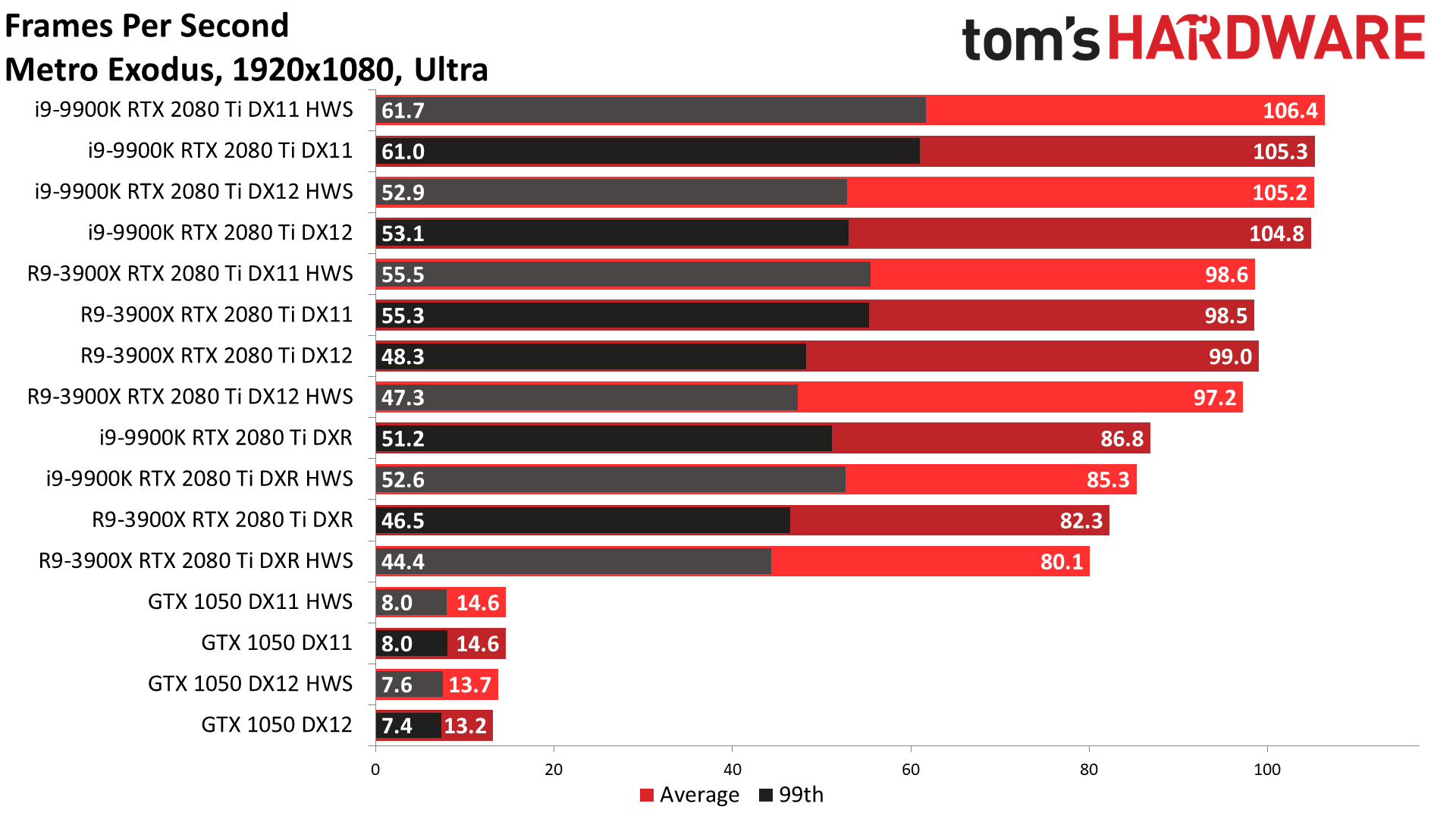

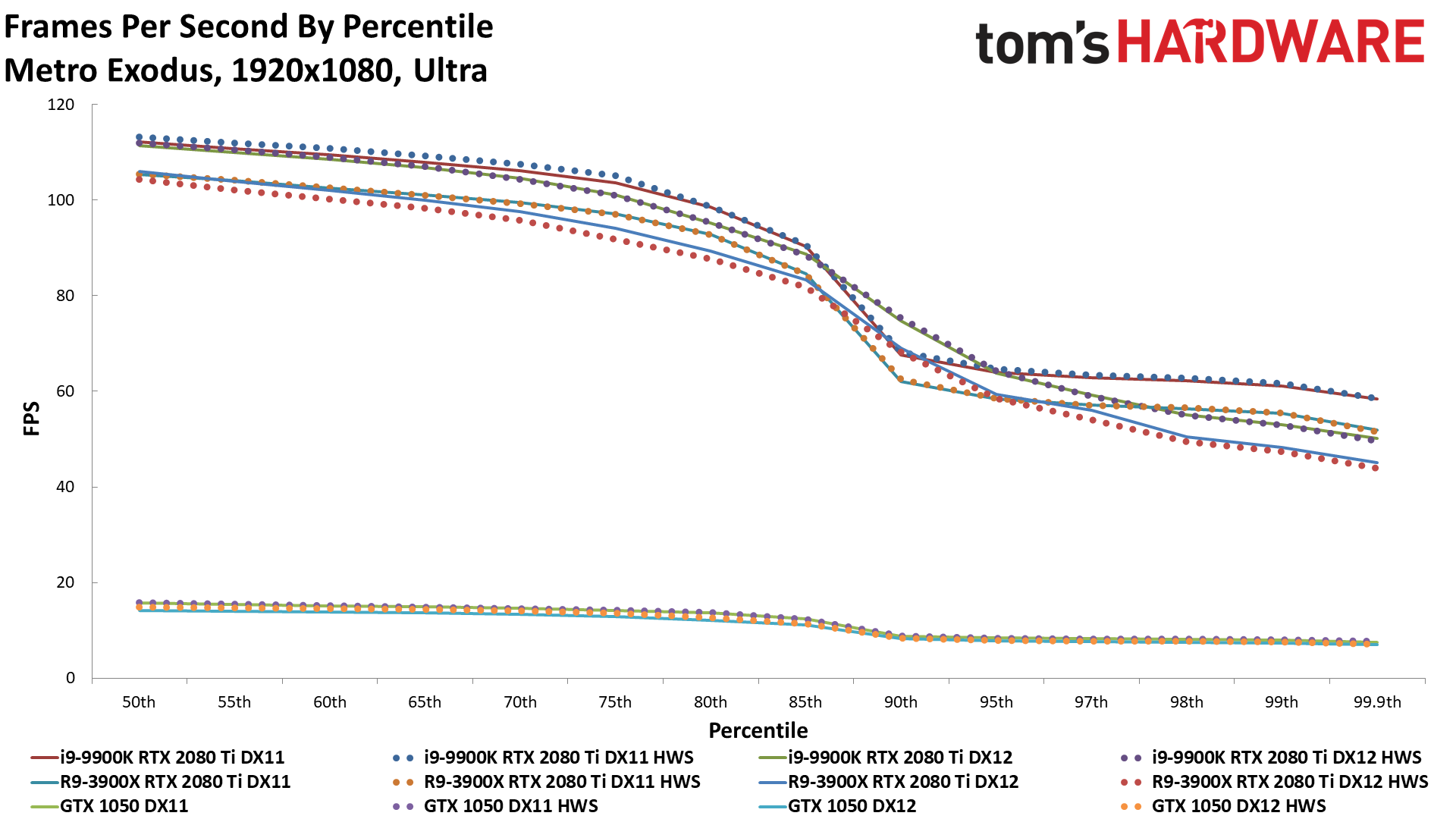

Metro Exodus kicks things up a notch with DirectX Raytracing (DXR) support on RTX cards, giving us yet another comparison point. Hardware scheduling gave a negligible performance increase on the 9900K, with DX11 performing slightly better than DX12. DX11 also performed better with the 3900X, and this time hardware scheduling reduced performance just a hair under DX12. For DXR, however, the results are mixed: higher minimum fps on the 9900K with lower average fps, and lower performance overall with the 3900X. As noted earlier, there's no real rhyme or reason here. The GTX 1050 meanwhile showed no change in DX11 performance, while it got a 4% boost (but at less than 14 fps) under DX12.

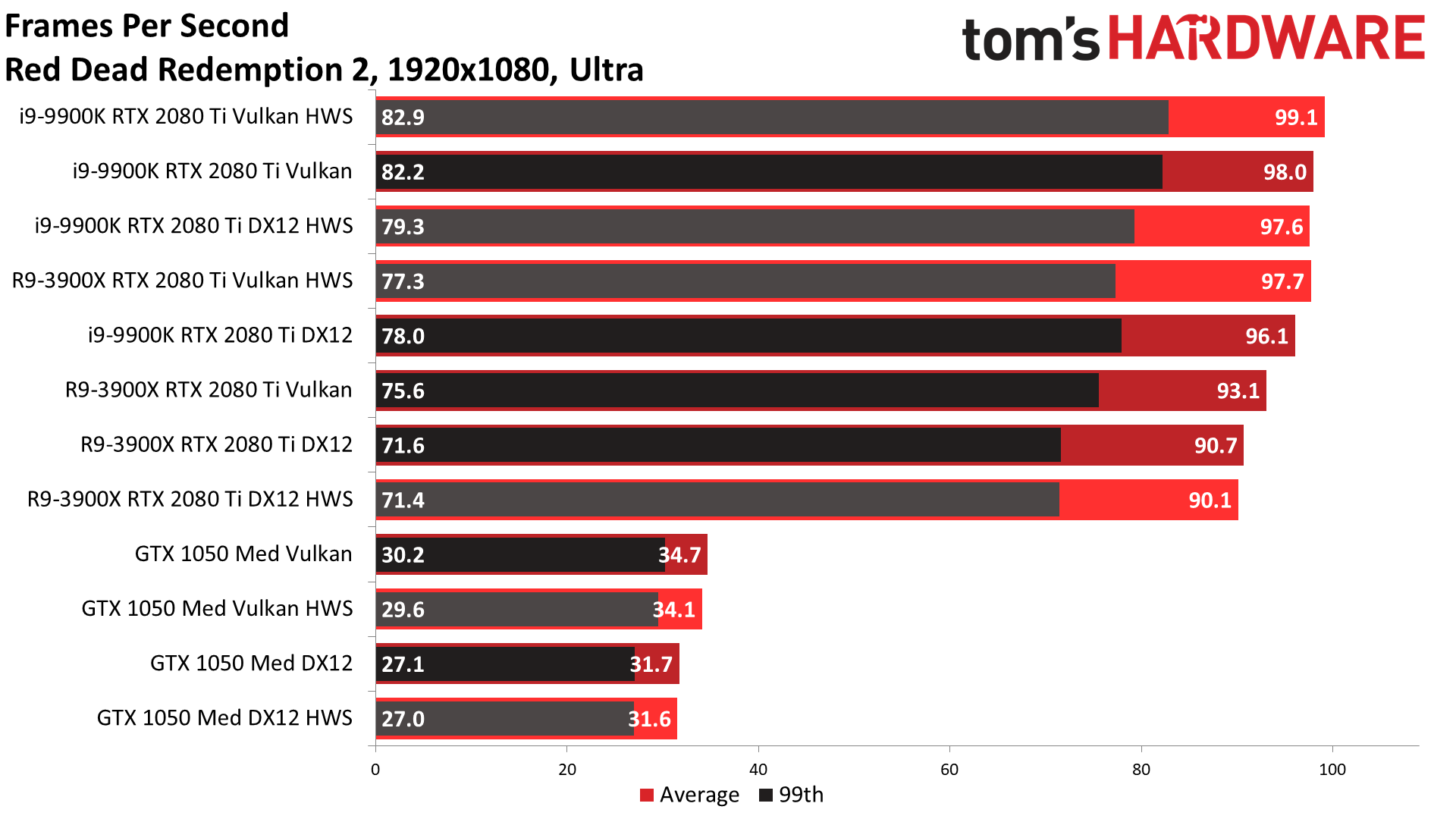

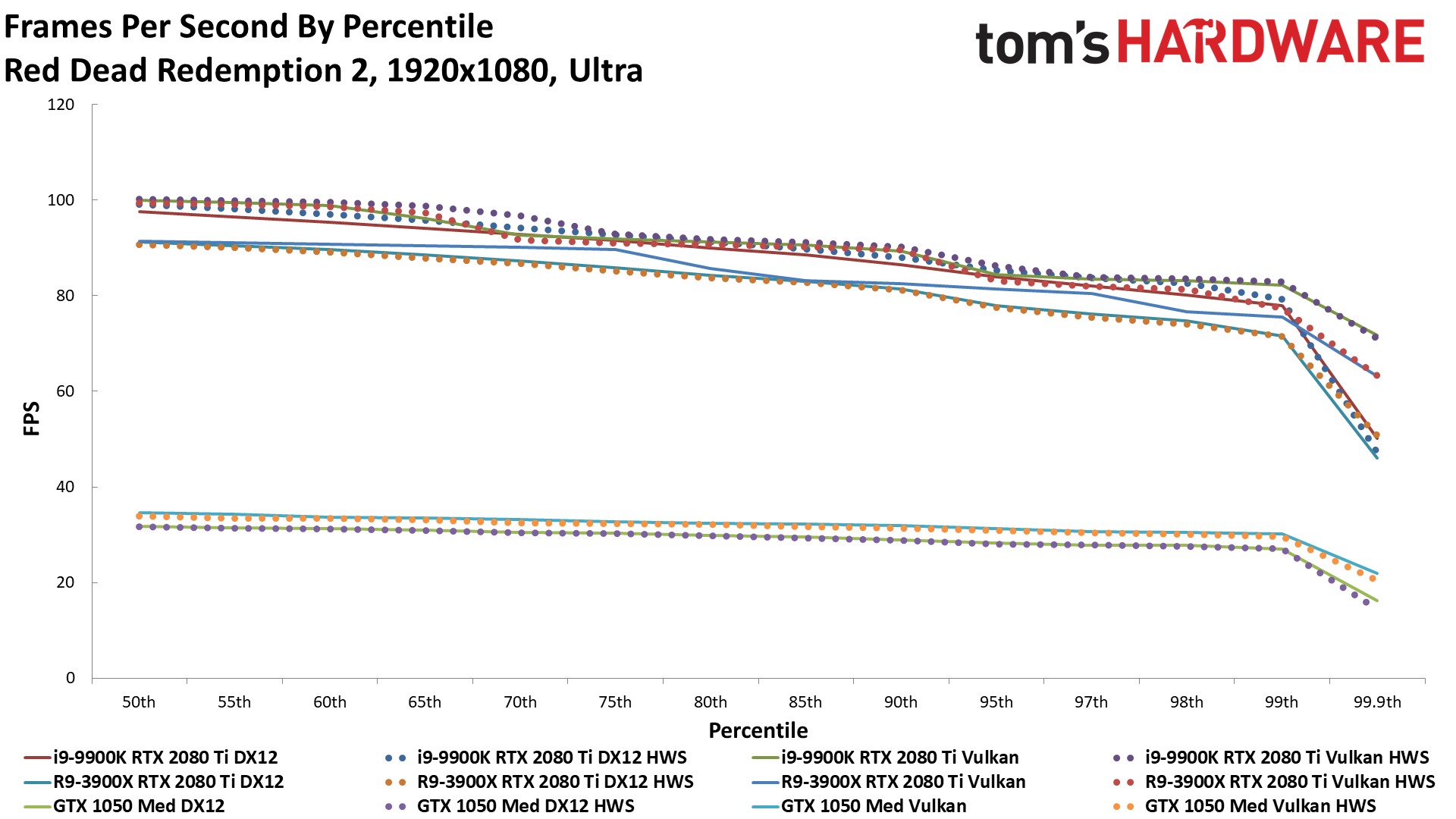

Finally, we have Red Dead Redemption 2, running under the Vulkan and DX12 APIs. Performance was universally better with Vulkan, as shown in the above chart. Hardware scheduling meanwhile gave a slight boost in performance on the 9900K, and a larger 5% boost to average framerates with the 3900X. 99th percentile fps on the 3900X only improved by 2%, however, so while hardware scheduling helped, it's not quite in the expected fashion—i.e. better minimum fps. The GTX 1050 showed slightly worse performance with hardware scheduling this time.

Initial Thoughts on Hardware Scheduling

What to make of all of this, then? Nvidia now supports a feature that can potentially improve performance in some games. Except it seems just as likely to hurt performance. This is a new API and driver feature, however, so perhaps it will prove more beneficial over time. Or perhaps I should have dug out a slower CPU or disabled some cores and threads. I'll leave that testing for someone else for now.

At present, across five tested games using multiple APIs, on average (looking at all nine or ten tests), the change in performance is basically nothing. The 9900K with RTX 2080 Ti performance is 0.03% slower, and the GTX 1050 with the 9900K performed 0.73% slower. The 3900X with RTX 2080 Ti did benefit, but only to the tune of 0.06%. In other words, the one or two cases where performance did improve are cancelled out by performance losses in other games.
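For readers who want to run the same kind of summary on their own numbers, the sketch below averages the per-test percent change between scheduling off and on. It assumes a plain arithmetic mean of per-test percentages, which the article does not confirm, and the fps pairs are invented purely for illustration.

```python
def percent_change(off_fps, on_fps):
    """Percent change in fps when going from scheduling off to scheduling on."""
    return (on_fps - off_fps) / off_fps * 100.0


def mean_percent_change(results):
    """Arithmetic mean of per-test percent changes.

    `results` is a list of (off_fps, on_fps) pairs, one pair per game/API test.
    """
    changes = [percent_change(off, on) for off, on in results]
    return sum(changes) / len(changes)


# Hypothetical results: one small gain, one small loss, one unchanged test.
sample = [(100.0, 102.0), (80.0, 78.4), (60.0, 60.0)]
print(f"{mean_percent_change(sample):+.2f}%")
```

A gain in one test and an equal loss in another cancel out in the average, which is the effect described above.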

If you're serious about squeezing out every last bit of performance possible, maybe for a benchmark record, you can try enabling or disabling the feature to see which performs best for the specific test(s) you're running. For most people, however, it appears to be a wash. Your time will be better spent playing games than trying to figure out when you should enable or disable hardware scheduling—and rebooting your PC between changes.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Schlachtwolf

I ran Cinebench R15 with it on and off, same settings otherwise...... system specs below

With it OFF: 125.24 fps
With it ON: 119.17 fps

Just for the laugh I then ran UserBenchmark........ settings as above

On the final Globe with it OFF: 392 fps
On the final Globe with it ON: 403 fps

Looks like another gimmick that changes little or nought....

JarredWaltonGPU

> Schlachtwolf said: I ran Cinebench R15 with it on and off, same settings otherwise...... system specs below
>
> With it OFF: 125.24 fps
> With it ON: 119.17 fps
>
> Just for the laugh I then ran UserBenchmark........ settings as above
>
> On the final Globe with it OFF: 392 fps
> On the final Globe with it ON: 403 fps
>
> Looks like another gimmick that changes little or nought....

I don't know about 'gimmick' so much as it's a setting that may or may not affect performance, depending on how the game or application has been coded. Best-case, maybe a few percent higher performance.

FWIW, testing with the Cinebench graphics test and UserBenchmark are both pretty meaningless as far as real graphics performance goes. Not that you can't use them, but they don't correlate at all with most real-world gaming / graphics workloads. UserBenchmark's GPU test is way too simplistic, while Cinebench doesn't scale much beyond a certain level of GPU.

escksu

> Schlachtwolf said: I ran Cinebench R15 with it on and off, same settings otherwise...... system specs below
>
> With it OFF: 125.24 fps
> With it ON: 119.17 fps
>
> Just for the laugh I then ran UserBenchmark........ settings as above
>
> On the final Globe with it OFF: 392 fps
> On the final Globe with it ON: 403 fps
>
> Looks like another gimmick that changes little or nought....

It's nice to see a 9 fps increase for the final Globe. But since it's already at almost 400 fps, we are looking at a little over 2%. It's good if it's consistently 2% faster. Too bad some results went south instead.... As you said, it changes little....

Schlachtwolf

Look, mine was not a scientifically based test, just a quick run of something that many may have on their PC, which is quite reasonably what many might use to see if it helps them on their everyday normal setups. I played Shadow of War, Witcher 3, Chess Ultra and TheHunter Classic (an 11-year-old game with still passable graphics) and I saw no difference in FPS or FPS stability. So for me it is another questionable add-on resource eater, or it was just released before any hardware/software can really use it.

razor512

Can you test a worst-case scenario to see if the scheduling helps? Benchmark it on a GTX 970, where you have 2 memory pools (3.5GB and 512MB). Ignore the age of the card and focus on how the scheduling can react to cases where there is naturally a ton of overhead in scheduling.

JarredWaltonGPU

> razor512 said: Can you test a worst case scenario to see if the scheduling helps. Benchmark it on a GTX 970 where you have 2 memory pools (3.5GB and 512MB). Ignore the age of the card and focus on the how the scheduling can react to cases where there is naturally a ton of overhead in scheduling.

I actually already did that with GTX 1050, though not on the CPU side. Per Nvidia, you must have at least a Pascal GPU, which means GTX 1050 or above (because I do not count GT 1030 -- it's a junk card no one should buy!). GTX 970 unfortunately is Maxwell, so it will have to rely on non-HW scheduling.

cryoburner

> Or perhaps I should have dug out a slower CPU or disabled some cores and threads.

That was my first thought when you mentioned testing with the 1050, but nothing about testing another processor. It could be that both of these processors are too fast, or have too many threads to show any difference. Perhaps there's more of a performance difference when the game is starved for CPU resources, such as can be seen in some titles with a four-threaded processor, like Battlefield V. After all, it sounds like this feature is moving VRAM management to be handled by the GPU, so there could possibly be gains on the CPU side of things. It could be worth testing a few games that are demanding on the CPU after swapping in something like a Ryzen 1200 or an Athlon, or maybe just disabling some cores and SMT and cutting the clock rate back to achieve similar results.

Another thought is that if the benefits are entirely on the VRAM management side of things, a 2080 Ti wouldn't likely see much benefit due to it having more VRAM than current games require, especially at 1080p. The performance benefits may appear in situations where the VRAM is getting filled, and data is getting swapped out to system memory. If the card can make better decisions about what data to keep in VRAM and what to offload, that might be where the performance benefits are. You did test a 1050 with just 4GB of VRAM, but perhaps a 1050 isn't fast enough for this to make much of a difference, especially considering it's not even managing 30fps at the settings used in most of these tests. Another site I just checked only tested a couple of games, including Forza Horizon 4, but showed around an 8% performance gain in both when using a 1650 SUPER paired with a 9900K, but no tangible difference in performance with a 2080 Ti, so that might be the case. Of course, that wasn't a site I would actually trust for the accuracy of benchmark results. : P

JarredWaltonGPU

> cryoburner said: Another site I just checked only tested a couple games including in Forza Horizon 4, but showed around an 8% performance gain in both when using a 1650 SUPER paired with a 9900K, but no tangible difference to performance with a 2080 Ti, so that might be the case. Of course, that wasn't a site I would actually trust for the accuracy of benchmark results. : P

Yeah, that's the thing: if you only run the game benchmark ONCE and then move on, you're going to get a lot more noise. I specifically ran each test three times to verify consistency of results (because the first run is almost always higher, thanks to the GPU being cooler and boost clocks kicking in more). Given the results so far, however, I don't want to put a bunch more time into testing, which is why the article says "I'll leave that to others."

TerryLaze

> cryoburner said: After all, it sounds like this feature is moving VRAM management to be handled by the GPU, so there could possibly be gains on the CPU side of things.

The thing here would be that game benchmarks are already perfectly RAM managed by the developers of that benchmark themselves (at least that's how I imagine it) while the probably pretty generic hardware accelerated scheduling won't be able to beat that.

The question is if it does anything outside of the canned benchmark where devs can't optimize each and every scene to perfection.

Also running a 2GB video card at ultra and expecting anything other than a huge VRAM bottleneck is pretty funny.