Intel is Working on Optical Chips for More Efficient AI

Intel has reported progress toward making optical neural networks (ONNs) a reality. Because they compute with light instead of electrons, such chips could be up to 100 times more power efficient and cut latency by many orders of magnitude. As described in a recent paper, Intel simulated two ONN architectures with an eye on scalability and manufacturability.

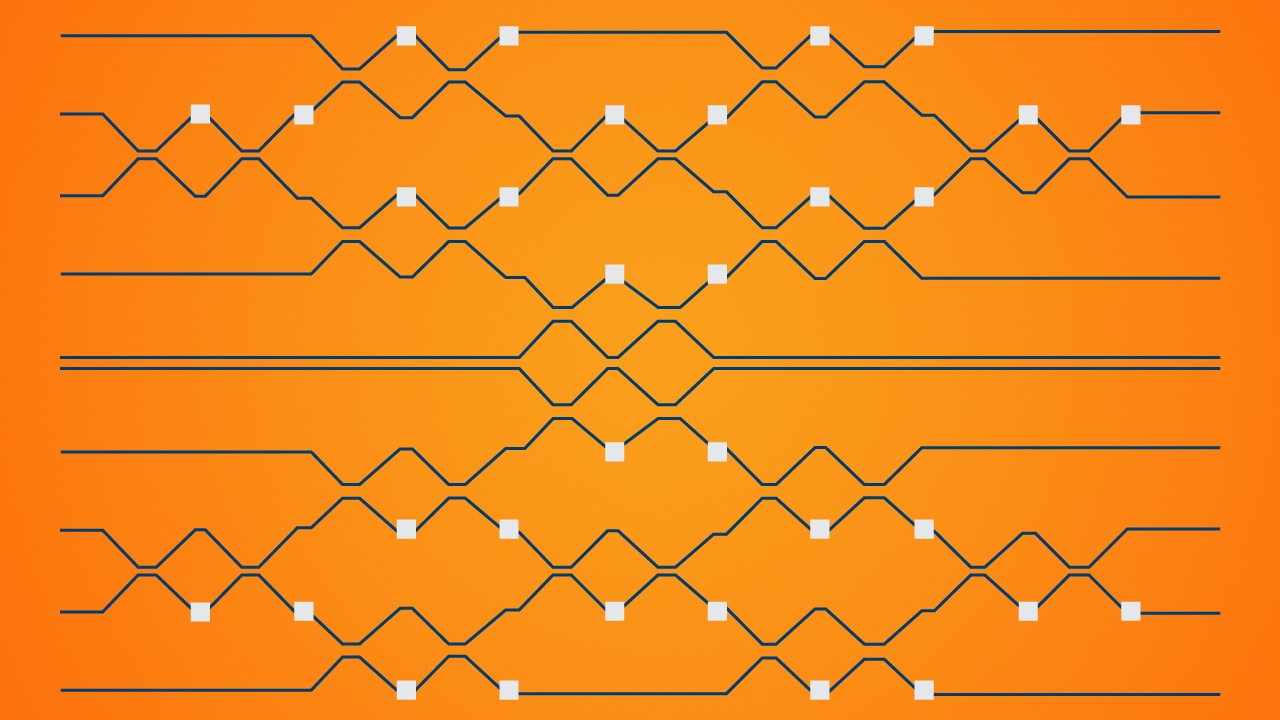

Using photons (light) instead of electrons as the medium for computing, on the same silicon used for ordinary chips, is known as silicon photonics. So far, however, the commercial use of silicon photonics, which Intel launched in 2016, has been limited to data movement. Multiple designs for optical neural networks (ONNs) have been proposed. As early as 1994, it was shown that a common photonic circuit component, the Mach-Zehnder interferometer (MZI), could be configured to perform a form of 2x2 matrix multiplication, and that larger matrices could be built by arranging MZIs in a triangular mesh. This showed that matrix-vector multiplication with photonic circuits is possible. Just two years ago, researchers from MIT proposed ONNs based on MZIs, and Intel has now shown interest in the topic as well.
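To make the MZI idea concrete, here is a minimal NumPy sketch; it is not code from Intel or MIT. It assumes one common idealized MZI model (two lossless 50:50 couplers around an internal phase shifter θ, preceded by an external phase shifter φ) and an illustrative triangular ordering of N(N-1)/2 MZIs on neighboring waveguides. The function names are hypothetical, and the phases are random rather than fitted to a target matrix, so the sketch only shows that the mesh performs a (unitary) matrix-vector multiplication on the light entering it.

```python
import numpy as np

# Idealized lossless 50:50 coupler (assumption: real couplers deviate from 50:50).
BS = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)


def mzi(theta: float, phi: float) -> np.ndarray:
    """Hypothetical idealized MZI: external phase phi, coupler, internal phase theta, coupler.

    theta = 0 sends all light to the other waveguide ("cross"), theta = pi keeps it
    in place ("bar"); values in between split it, which is the tunable 2x2 matrix.
    """
    external = np.diag([np.exp(1j * phi), 1.0])
    internal = np.diag([np.exp(1j * theta), 1.0])
    return BS @ internal @ BS @ external


def embed(t2: np.ndarray, n: int, i: int) -> np.ndarray:
    """Place a 2x2 block acting on waveguides i and i+1 inside an n x n identity."""
    u = np.eye(n, dtype=complex)
    u[i:i + 2, i:i + 2] = t2
    return u


def triangular_mesh(phases: np.ndarray, n: int) -> np.ndarray:
    """Compose n(n-1)/2 MZIs in a triangular layout (illustrative ordering).

    phases has one (theta, phi) row per MZI.
    """
    if len(phases) != n * (n - 1) // 2:
        raise ValueError("need n(n-1)/2 (theta, phi) pairs")
    u = np.eye(n, dtype=complex)
    k = 0
    for d in range(n - 1):            # diagonal d of the triangle
        for i in range(d, -1, -1):    # MZIs along that diagonal
            theta, phi = phases[k]
            u = embed(mzi(theta, phi), n, i) @ u
            k += 1
    return u


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 4
    phases = rng.uniform(0, 2 * np.pi, size=(n * (n - 1) // 2, 2))
    U = triangular_mesh(phases, n)
    x = rng.normal(size=n)   # input amplitudes on the n waveguides
    y = U @ x                # the matrix-vector product the light "computes"
    print(np.allclose(U.conj().T @ U, np.eye(n)))  # True: a lossless mesh is unitary
```

Because every MZI is lossless in this model, the whole mesh is a unitary matrix: it redistributes the input light among the waveguides without changing its total power.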

Intel’s research focused on what happens when fabrication errors are introduced. Because computational photonics is analog in nature, ONNs are susceptible to such errors, which cause variations within and across chips. The problem has been studied before, but previous work focused mostly on post-fabrication optimization to compensate for these imprecisions. That approach scales poorly: as networks grow larger, configuring each fabricated optical network requires more and more computational power.

Instead of post-fabrication optimization, Intel considered pre-fabrication training: an ideal ONN was trained once, and the trained parameters were then distributed to multiple fabricated instances of the network with imprecise components. Intel considered two ONN architectures that differ in how the MZIs are arranged: a more tunable design called GridNet and a more fault-tolerant design called FFTNet. It then simulated the effects of imperfections on both. (Only the matrix operations were performed by the ONNs.)

Without imperfections, the more tunable GridNet achieved higher accuracy (98%) than FFTNet (95%) on the MNIST handwritten digit recognition benchmark. However, when noise was introduced into the photonic components, FFTNet proved significantly more robust: its accuracy remained almost constant, while GridNet’s accuracy dropped below 50%.
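The pre-fabrication approach can be illustrated with a small simulation. The sketch below is hypothetical and rests on its own assumptions, not on Intel's code: GridNet is modeled as a rectangular grid of MZIs on neighboring waveguides, FFTNet as an FFT-style butterfly, imprecision is modeled only as Gaussian noise on the phase shifters, and random phases stand in for trained parameters. One set of "trained" phases is copied to many noisy instances, and the mean relative deviation of each instance's matrix from the ideal one is reported. It does not reproduce Intel's MNIST results.

```python
import numpy as np

# Idealized lossless 50:50 coupler (assumption: real couplers deviate from 50:50).
BS = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)


def mzi(theta: float, phi: float) -> np.ndarray:
    """Idealized MZI: external phase phi, coupler, internal phase theta, coupler."""
    return (BS @ np.diag([np.exp(1j * theta), 1.0])
            @ BS @ np.diag([np.exp(1j * phi), 1.0]))


def grid_layout(n: int) -> list[list[tuple[int, int]]]:
    """Hypothetical GridNet-like layout: n columns of MZIs on neighboring waveguides."""
    return [[(i, i + 1) for i in range(col % 2, n - 1, 2)] for col in range(n)]


def butterfly_layout(n: int) -> list[list[tuple[int, int]]]:
    """Hypothetical FFTNet-like layout: log2(n) butterfly stages (n a power of 2)."""
    stages = []
    step = 1
    while step < n:
        stages.append([(i, i + step) for i in range(n) if (i // step) % 2 == 0])
        step *= 2
    return stages


def mesh_matrix(layout, phases: np.ndarray, n: int) -> np.ndarray:
    """Multiply the MZI columns together; phases has one (theta, phi) row per MZI."""
    u = np.eye(n, dtype=complex)
    k = 0
    for column in layout:
        stage = np.eye(n, dtype=complex)
        for i, j in column:  # MZIs in one column act on disjoint waveguide pairs
            stage[np.ix_([i, j], [i, j])] = mzi(*phases[k])
            k += 1
        u = stage @ u
    return u


def mean_instance_error(layout, n, trained, sigma, rng, instances=20) -> float:
    """Copy one set of trained phases to several simulated chips whose phase
    shifters have Gaussian errors (std sigma, in radians) and return the mean
    relative Frobenius-norm deviation from the ideal matrix."""
    ideal = mesh_matrix(layout, trained, n)
    errors = []
    for _ in range(instances):
        noisy = trained + rng.normal(0.0, sigma, size=trained.shape)
        diff = mesh_matrix(layout, noisy, n) - ideal
        errors.append(np.linalg.norm(diff) / np.linalg.norm(ideal))
    return float(np.mean(errors))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 8
    for name, layout in [("grid", grid_layout(n)), ("butterfly", butterfly_layout(n))]:
        m = sum(len(column) for column in layout)
        trained = rng.uniform(0, 2 * np.pi, size=(m, 2))  # stand-in for trained phases
        print(name, m, "MZIs, mean error:", mean_instance_error(layout, n, trained, 0.05, rng))
```

For n = 8, the grid uses 28 MZIs (56 tunable phases) spread over 8 columns, while the butterfly uses 12 MZIs (24 phases) over 3 stages. Fewer phases means less tunability, and fewer columns means each signal crosses fewer imprecise components; whether that alone explains the gap Intel reported depends on details this sketch does not model.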

While Intel’s research was based on simulations, a start-up from MIT recently demonstrated a prototype photonic integrated circuit that reduced latency by a factor of 10,000 and improved energy efficiency by “orders of magnitude,” suggesting that such designs could become a viable alternative to today’s digital electronic neural networks. Naveen Rao, general manager of Intel’s AI Products Group, noted in a tweet about Intel’s research: “Still some tough problems to solve, but [optical neural network processors] could change the fundamental computing substrate.”

ONNs are not the only alternative approach to AI that Intel is investigating: the company is also researching neuromorphic chips and probabilistic computing. The paper can be found here.
