Intel: Sapphire Rapids with HBM Is 2X Faster than AMD's Milan-X

In memory-bound workloads.

Intel's fourth-generation Xeon Scalable 'Sapphire Rapids' processors can get a massive performance uplift from on-package HBM2E memory in memory-bound workloads, the company revealed on Thursday. The Sapphire Rapids CPUs with on-package HBM2E are about 2.8 times as fast as existing AMD EPYC 'Milan' and Intel Xeon Scalable 'Ice Lake' processors. More importantly, Intel is confident enough to say that its forthcoming part is two times faster than AMD's upcoming EPYC 'Milan-X.'

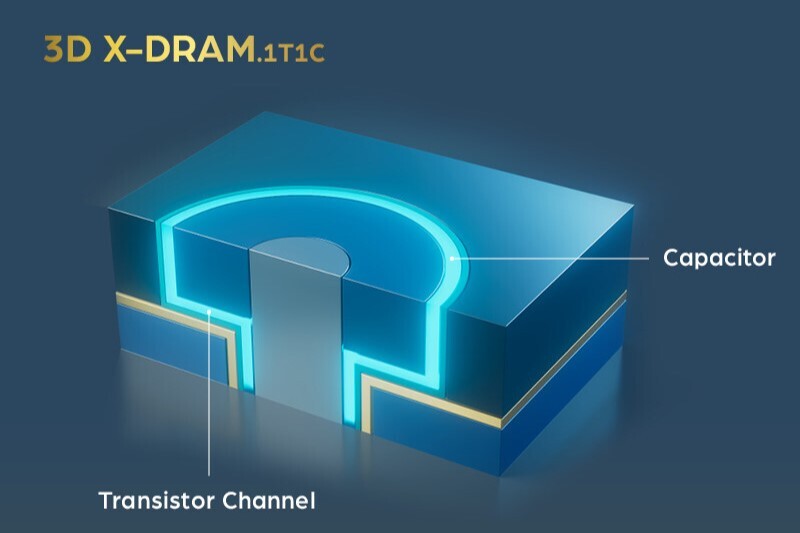

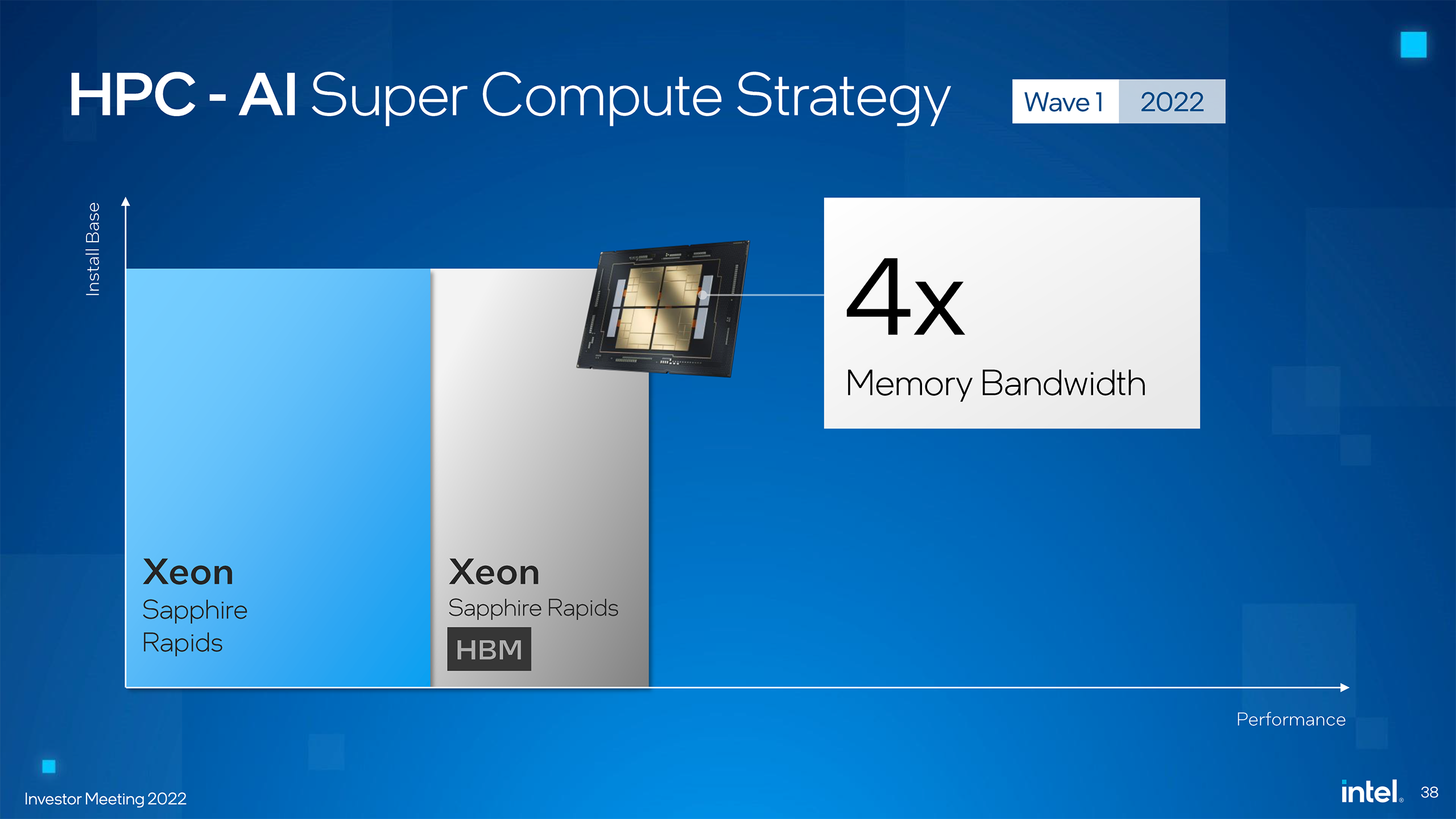

"Bringing [HBM2E memory into Xeon package] gives GPU-like memory bandwidth to CPU workloads," said Raja Koduri, the head of Intel's Intel's Accelerated Computing Systems and Graphics Group. "This offers many CPU applications, as much as four times more memory bandwidth. And they do not need to make any code changes to get benefit from this."

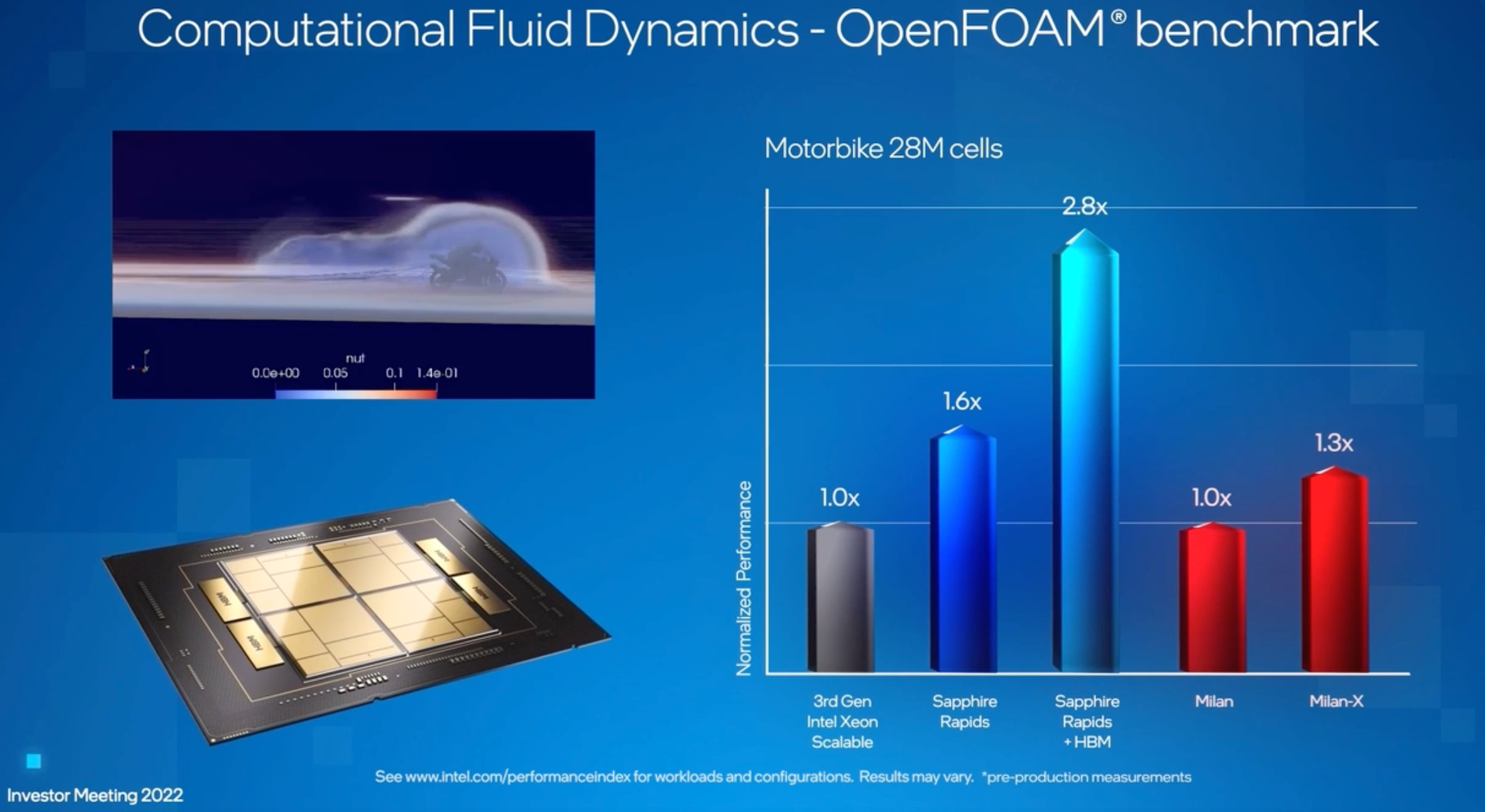

To prove its point, Intel took the OpenFOAM computational fluid dynamics (CFD) benchmark (28M_cell_motorbiketest) and ran it on its existing Xeon Scalable 'Ice Lake-SP' CPU, a sample of its regular Xeon Scalable 'Sapphire Rapids' processor, and a pre-production version of its Xeon Scalable 'Sapphire Rapids with HBM' CPU, revealing the rather massive advantage that the upcoming CPUs will have over current platforms.

The difference that on-package HBM2E brings is indeed very significant: while a regular Sapphire Rapids is around 60% faster than an Ice Lake-SP, an HBM2E-equipped Sapphire Rapids brings in a whopping 180% performance boost.

What is perhaps more interesting is that Intel also compared the performance of its future processors to an unspecified AMD EPYC 'Milan' CPU (which performs just like Intel's Xeon 'Ice Lake', according to Intel and OpenBenchmarking.org) as well as the yet-to-be-released EPYC 'Milan-X' processor that carries 256MB of L3 and 512MB of 3D V-Cache. Based on results from Intel, AMD's 3D V-Cache only improves performance by about 30%, which means that even a regular Sapphire Rapids will be faster than this part. By contrast, Intel's Sapphire Rapids with HBM2E will offer more than twice the performance of Milan-X (about 115% higher) in the OpenFOAM CFD benchmark.
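The "about 115%" figure follows from the percentages quoted above. Here is a minimal sketch of that arithmetic in Python, assuming Ice Lake-SP is normalized to 1.0 and that the approximate uplifts (30%, 60%, 180%) are taken at face value; the dictionary and function names are purely illustrative, and none of these numbers are independent measurements.

```python
# Approximate relative OpenFOAM performance, normalized to Ice Lake-SP = 1.0.
# Assumption: values are derived from the rounded percentages quoted in the
# article (Intel's own claims), not from measured benchmark data.
relative_perf = {
    "Ice Lake-SP": 1.0,
    "Milan": 1.0,                # said to perform like Ice Lake
    "Milan-X": 1.3,              # about 30% over Milan
    "Sapphire Rapids": 1.6,      # about 60% over Ice Lake-SP
    "Sapphire Rapids HBM": 2.8,  # about 180% over Ice Lake-SP
}


def speedup(part: str, baseline: str) -> float:
    """Return how many times faster `part` is than `baseline`."""
    return relative_perf[part] / relative_perf[baseline]


hbm_vs_milan_x = speedup("Sapphire Rapids HBM", "Milan-X")
spr_vs_milan_x = speedup("Sapphire Rapids", "Milan-X")

print(f"SPR HBM vs Milan-X: {hbm_vs_milan_x:.2f}x "
      f"({(hbm_vs_milan_x - 1) * 100:.0f}% higher)")
print(f"SPR vs Milan-X:     {spr_vs_milan_x:.2f}x")
```

Because the inputs are rounded percentages, the resulting ratios should be read as ballpark figures only.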

Performance claims like these made by companies must be verified by independent testers (especially given the fact that some other benchmark results show a different picture), but Intel seems to be very optimistic about its Sapphire Rapids processors equipped with HBM2E memory.

The addition of 64GB of on-package HBM2E memory increases the bandwidth available to an Intel Xeon 'Sapphire Rapids' processor to approximately 1.22 TB/s, roughly four times that of a standard Xeon 'Sapphire Rapids' CPU with eight DDR5-4800 channels. This kind of uplift is very significant for memory-bandwidth-dependent workloads, such as computational fluid dynamics. What is even more attractive is that developers do not need to change their code to take advantage of that bandwidth, assuming that the Sapphire Rapids HBM2E system is configured properly and HBM2E memory is operating in the right mode.
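As a rough sanity check on the "four times" claim, the theoretical peak bandwidth of the DDR5 configuration can be worked out by hand. The sketch below assumes a 64-bit (8-byte) data path per DDR5 channel and uses theoretical peak rates, not sustained throughput; the constant names are illustrative.

```python
# Theoretical peak bandwidth of eight DDR5-4800 channels.
CHANNELS = 8
MEGATRANSFERS_PER_SEC = 4800  # DDR5-4800
BYTES_PER_TRANSFER = 8        # assumption: 64-bit data path per channel

# MT/s * bytes per transfer = MB/s per channel; divide by 1000 for GB/s.
ddr5_gb_per_s = CHANNELS * MEGATRANSFERS_PER_SEC * BYTES_PER_TRANSFER / 1000

# Intel's claim: HBM2E offers roughly four times the DDR5 bandwidth.
hbm_gb_per_s = 4 * ddr5_gb_per_s

print(f"8x DDR5-4800 peak: {ddr5_gb_per_s:.1f} GB/s")
print(f"4x that figure:    {hbm_gb_per_s / 1000:.2f} TB/s")
```

Four times the DDR5 peak lands in the same range as the approximately 1.22 TB/s quoted for the HBM2E part, so the two figures are at least consistent with each other.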


"Computational fluid dynamics is one of the applications that benefits from memory bandwidth performance," explained Koduri. "CFD is routinely used today inside a variety of HPC disciplines and industries significantly reducing product development, time and cost. We tested OpenFOAM, a leading open source HPC workload for CFD on a pre-production Xeon HBM2E system. As you can see it performs significantly faster than our current generation Xeon processor."

Intel will ship its Xeon Scalable 'Sapphire Rapids' processors with on-package HBM2E memory in the second half of the year.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.