Intel Xeon Phi Knights Landing Now Shipping; Omni Path Update, Too

Intel announced at the ISC 2016 High Performance Computing event that its Xeon Phi Knights Landing products, which it originally announced back in November at the Supercomputing conference, are now shipping. The Knights Landing products include Intel's Omni-Path Fabric, and the company also unveiled its HPC Orchestrator.

Intel recently restructured to focus on three pillars outside the desktop PC: the datacenter, IoT and memory markets. Intel has the advantage of its much-ballyhooed 99.2 percent market share of datacenter CPU sockets, but that dominance actually restricts its ability to grow datacenter revenue. With almost no sockets left to win, Intel is stuck in a never-ending upgrade cycle in which it replaces its own processors on a regular cadence.

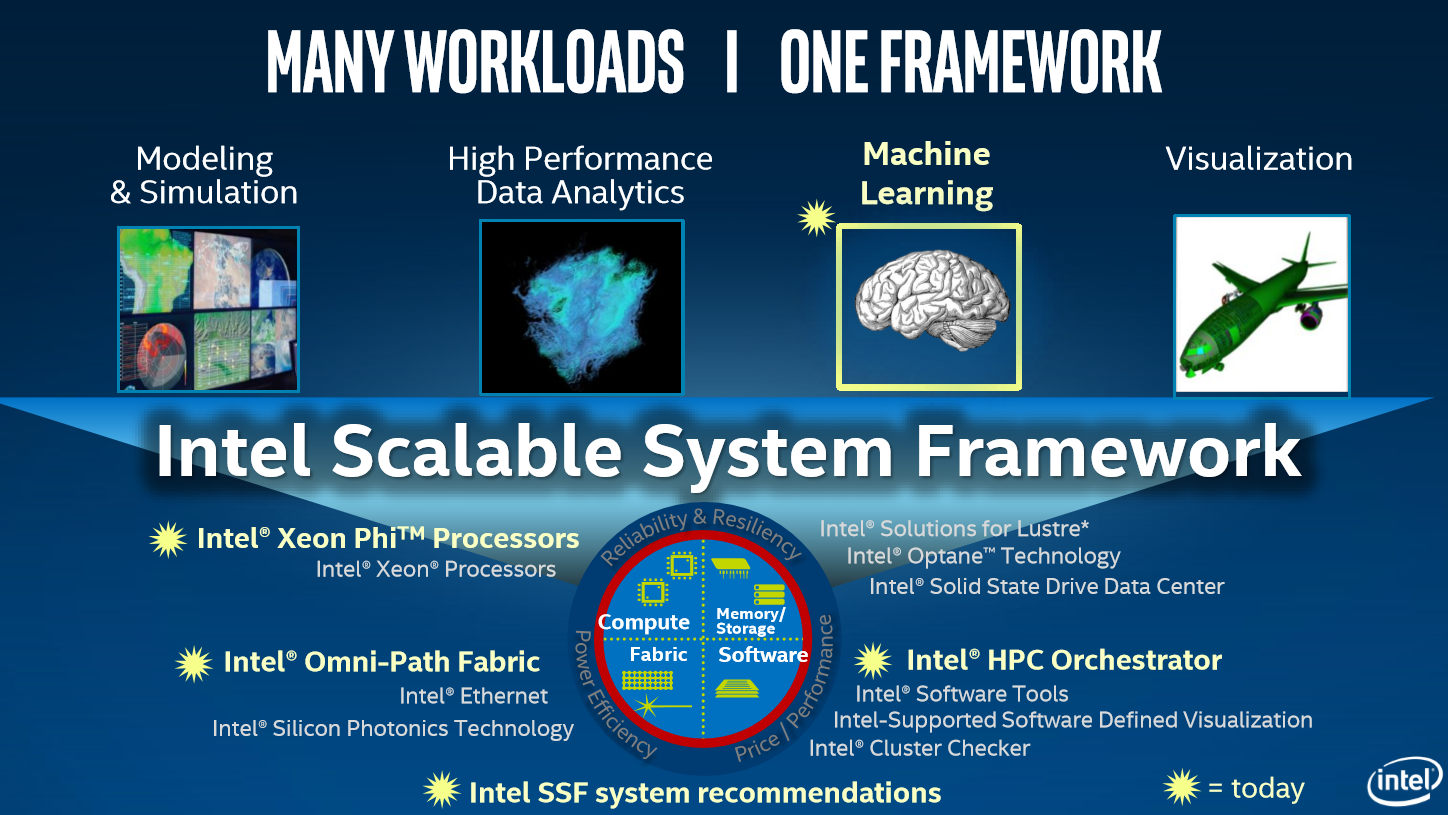

Surprisingly, overall server hardware spending has not grown appreciably in over twenty years, so the tail-chasing upgrade cycle is not conducive to revenue growth. Intel is attempting to expand its datacenter penetration by targeting high-growth segments, such as parallel processing with the Xeon Phi family (ostensibly displacing GPUs), Omni-Path (networking) and 3D NAND/XPoint (memory). Intel's strategy is to use its CPU dominance as a beachhead to attack with its Scalable System Framework (SSF), which is a holistic approach to rack-scale architectures.

Xeon Phi Knights Landing

The Xeon Phi family, which Intel derived from the Larrabee project, is designed for the highly parallel workloads found in HPC, machine learning, financial and engineering applications.

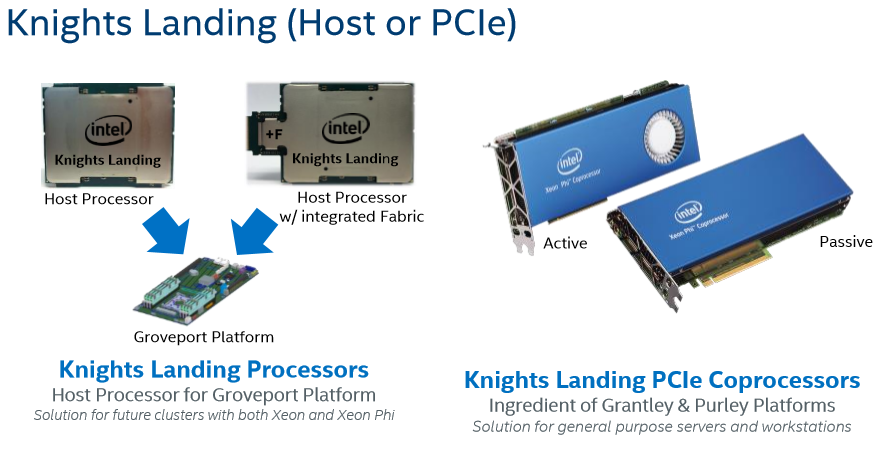

The Knights Landing (KNL) products are the second generation (x200) of the Xeon Phi family, and expand upon the previous-generation Knights Corner products by adding support for bootable socketed processors, whereas the first-generation products were available only as PCIe coprocessors. KNL processors come with or without an integrated Omni-Path fabric, denoted by the protruding connector on the processor. The KNL processors snap into the LGA 3647 "Socket P," which has an opening to accommodate the Omni-Path fabric connector. Omni-Path is a key component of the Scalable System Framework, so the tight integration into the KNL platform will help Intel further its rack-scale objectives.

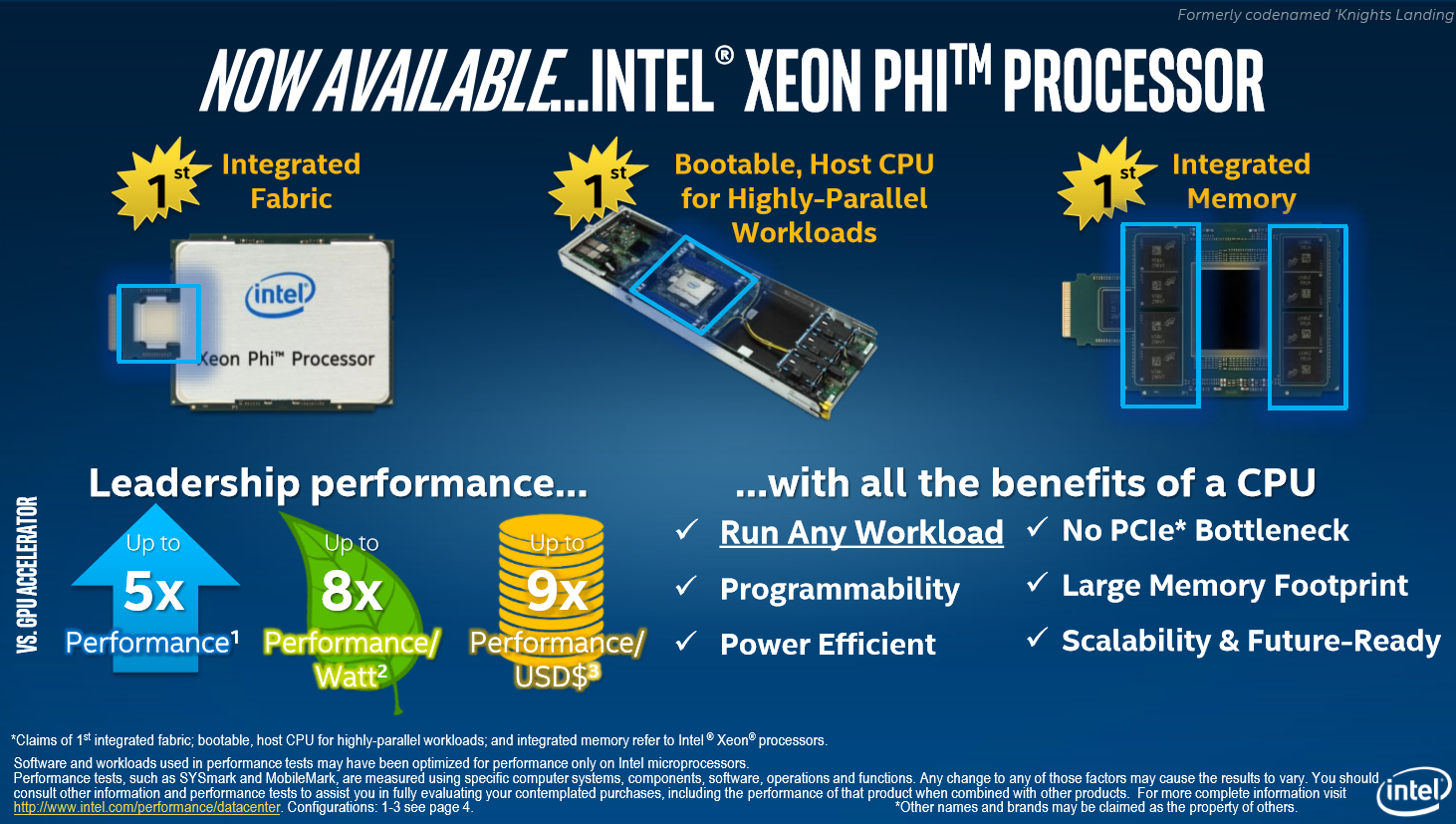

The KNL generation is the first bootable CPU designed specifically for parallel workloads and is the first processor with integrated Omni-Path fabric (note the additional control chip on the connector), HBM (High Bandwidth Memory) and AVX-512 support.

Intel claimed that KNL processors eliminate PCIe bottlenecks and provide up to 5x the performance, 8x the performance per watt, and 9x the performance per dollar of competing GPU solutions. Notably, these results come from internal Intel testing, some of which the company ran against previous-generation GPUs. Intel indicated this is due to limited sample availability.


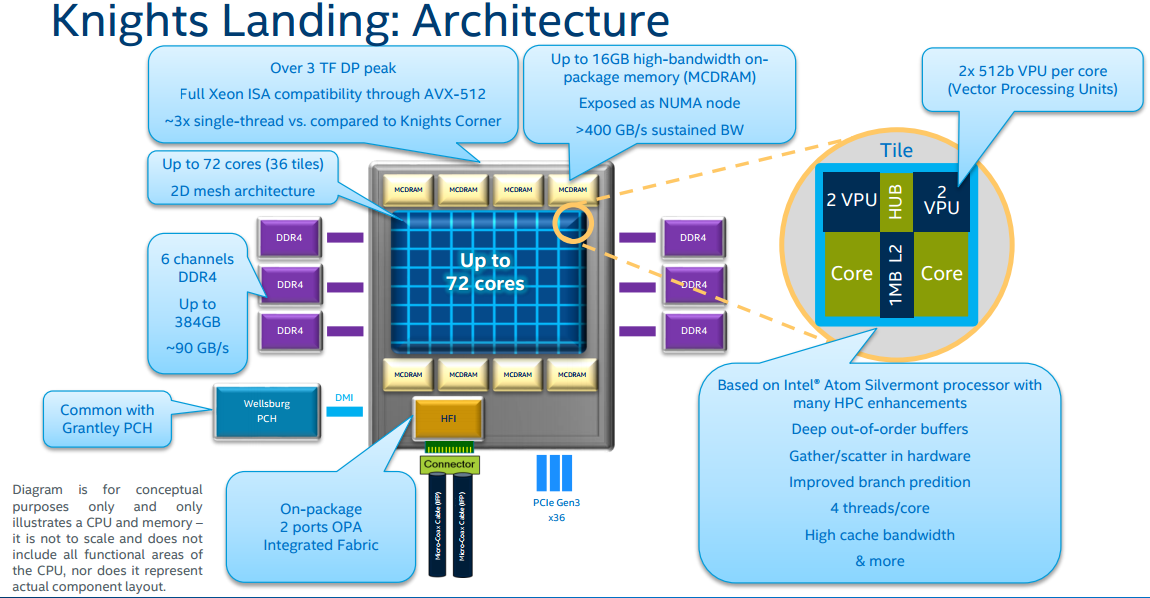

The 14nm KNL processor (more than 8 billion transistors) features an innovative 72-core architecture, which is split into 36 dual-core "Tiles" arranged in a mesh configuration. Each quad-threaded Silvermont core features two AVX-512 VPUs (Vector Processing Units), for a total of 144 VPUs. Each tile houses 1 MB of shared L2 cache, which equates to 36 MB of total L2 cache. The KNL processors feature up to 3x the single-threaded performance of the previous-generation Knights Corner products.
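The totals in this paragraph follow directly from the per-tile and per-core figures. As a quick sanity check, here is a minimal Python sketch that derives them; the constant and function names are illustrative and not anything Intel publishes.

```python
# Per-tile and per-core figures as stated in the article.
# All names here are illustrative, chosen only for this sketch.
TILES = 36
CORES_PER_TILE = 2
THREADS_PER_CORE = 4
VPUS_PER_CORE = 2
L2_PER_TILE_MB = 1


def knl_totals(tiles=TILES):
    """Derive chip-level totals from the per-tile/per-core figures."""
    cores = tiles * CORES_PER_TILE
    return {
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        "vpus": cores * VPUS_PER_CORE,
        "l2_mb": tiles * L2_PER_TILE_MB,
    }


totals = knl_totals()
print(totals)
```

Under these figures the sketch arrives at 72 cores, 288 threads, 144 VPUs and 36 MB of L2, matching the numbers quoted for the top-end part.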

KNL features 16 GB of on-package MCDRAM (Multi-Channel DRAM) Micron HBM, which provides up to 500 GB/s of throughput (100 GB/s more than indicated on the slide due to updated information provided by Intel). The eight Micron HBM packages serve as a fast memory tier, and the KNL platform provides three modes of operation (cache, hybrid and flat). The processor supports up to 384 GB of DDR4 memory spread over six channels (~90 GB/s) and connects to the Wellsburg PCH through a four-lane DMI connection.
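For context on the DDR4 figure, the following Python sketch computes the theoretical peak bandwidth of six DDR4 channels. It assumes a standard 64-bit (8-byte) data path per channel, which the article does not state, and it treats the quoted ~90 GB/s as a sustained figure rather than a theoretical one; that interpretation is also an assumption.

```python
# Assumption: each DDR4 channel has a standard 64-bit (8-byte) data path.
DDR4_CHANNELS = 6
BYTES_PER_TRANSFER = 8

# Figures quoted in the article (GB/s).
QUOTED_DDR4_GBS = 90
QUOTED_MCDRAM_GBS = 500


def ddr4_peak_gbs(mega_transfers_per_s, channels=DDR4_CHANNELS):
    """Theoretical peak bandwidth in GB/s for the given transfer rate (MT/s)."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER * channels / 1000


peak_2400 = ddr4_peak_gbs(2400)  # three higher-end SKUs
peak_2133 = ddr4_peak_gbs(2133)  # KNL 7210
mcdram_advantage = QUOTED_MCDRAM_GBS / QUOTED_DDR4_GBS

print(f"DDR4-2400 x6 peak: {peak_2400:.1f} GB/s")
print(f"DDR4-2133 x6 peak: {peak_2133:.1f} GB/s")
print(f"Quoted MCDRAM vs. quoted DDR4: {mcdram_advantage:.1f}x")
```

On these assumptions the theoretical DDR4-2400 peak is about 115 GB/s, so the quoted ~90 GB/s would sit at roughly 78 percent of peak, and the on-package memory offers a bit more than five times the quoted DDR4 throughput.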

The aforementioned integrated Omni-Path fabric is connected to the die via dual PCIe x16 ports and provides a dual-ported 100 Gbps pipe for networking traffic. The processor also features 36 lanes of PCIe 3.0, but it does not feature a QPI connection for multi-socket applications (it does support various internal Clustering/NUMA operating modes). The socketed processors are bootable, whereas the PCIe cards are not.
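A rough check shows why two x16 ports are a sensible match for a dual-port 100 Gbps fabric. The Python sketch below assumes the on-package links run at PCIe 3.0 rates (8 GT/s per lane with 128b/130b encoding); the article only says the processor's general-purpose lanes are PCIe 3.0, so that is an assumption. It also counts one direction only and ignores protocol overhead.

```python
# Assumption: the two on-package x16 links run at PCIe 3.0 rates.
PCIE3_GT_PER_S = 8.0          # giga-transfers per second, per lane
PCIE3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency


def pcie3_gbytes_per_s(lanes):
    """Raw one-direction PCIe 3.0 bandwidth in GB/s, before protocol overhead."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING * lanes / 8


def link_gbytes_per_s(gbps):
    """Convert a network line rate in Gbps to GB/s."""
    return gbps / 8


host_side = 2 * pcie3_gbytes_per_s(16)   # dual PCIe x16 ports
fabric_side = 2 * link_gbytes_per_s(100)  # dual-port 100 Gbps Omni-Path

print(f"Host side:   {host_side:.1f} GB/s")
print(f"Fabric side: {fabric_side:.1f} GB/s")
```

With these inputs the host side works out to roughly 31.5 GB/s against 25 GB/s on the fabric side, so the PCIe links should not be the limiting factor under the stated assumptions.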

| Xeon Phi Knights Landing | KNL 7290 | KNL 7250 | KNL 7230 | KNL 7210 |
|---|---|---|---|---|
| Process | 14nm | 14nm | 14nm | 14nm |
| Architecture | Silvermont | Silvermont | Silvermont | Silvermont |
| Cores/Threads | 72 / 288 | 68 / 272 | 64 / 256 | 64 / 256 |
| Clock (GHz) | 1.5 | 1.4 | 1.3 | 1.3 |
| HBM / Speed (GT/s) | 16 GB / 7.2 | 16 GB / 7.2 | 16 GB / 7.2 | 16 GB / 6.4 |
| DDR4 / Speed (MHz) | 384 GB / 2400 | 384 GB / 2400 | 384 GB / 2400 | 384 GB / 2133 |
| TDP | 245W | 215W | 215W | 215W |
| Omni-Path Fabric | Yes | Yes | Yes | Yes |
| MSRP | $6,254 | $4,876 | $3,710 | $2,438 |

Intel spread the Knights Landing family across four primary SKUs, though it might introduce more in the future. The primary differentiators among the SKUs boil down to clock speed and core count, though the low-end 7210 also suffers bandwidth restrictions with both standard DRAM and HBM. Intel predicts the 7210 will be the most popular product, as it offers 80-85 percent of the performance of the high-end 7290 model at less than half the cost ($2,438 versus $6,254). Intel has already sold (or has orders for) 100,000 units, and cultivated an ecosystem of 32 OEM/channel systems and 30 ISVs prior to the official launch.
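To put Intel's value argument for the 7210 in numbers, this small Python sketch combines the MSRPs from the table with Intel's 80-85 percent relative-performance estimate. The function name and the notion of "performance per dollar relative to the 7290" are illustrative framing, not Intel's own metric.

```python
# MSRPs in US dollars, taken from the table above.
MSRP = {"7290": 6254, "7250": 4876, "7230": 3710, "7210": 2438}


def relative_value(sku, rel_perf, baseline="7290"):
    """Return (cost ratio, performance per dollar) relative to the baseline SKU.

    rel_perf is the SKU's performance as a fraction of the baseline's.
    """
    cost_ratio = MSRP[sku] / MSRP[baseline]
    return cost_ratio, rel_perf / cost_ratio


# Intel's estimate: the 7210 delivers 80-85 percent of 7290 performance.
for rel_perf in (0.80, 0.85):
    cost_ratio, value = relative_value("7210", rel_perf)
    print(f"{rel_perf:.0%} perf at {cost_ratio:.0%} of the price "
          f"-> {value:.2f}x performance per dollar")
```

On list prices alone, the 7210 costs about 39 percent as much as the 7290, which works out to roughly twice the performance per dollar if Intel's estimate holds.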

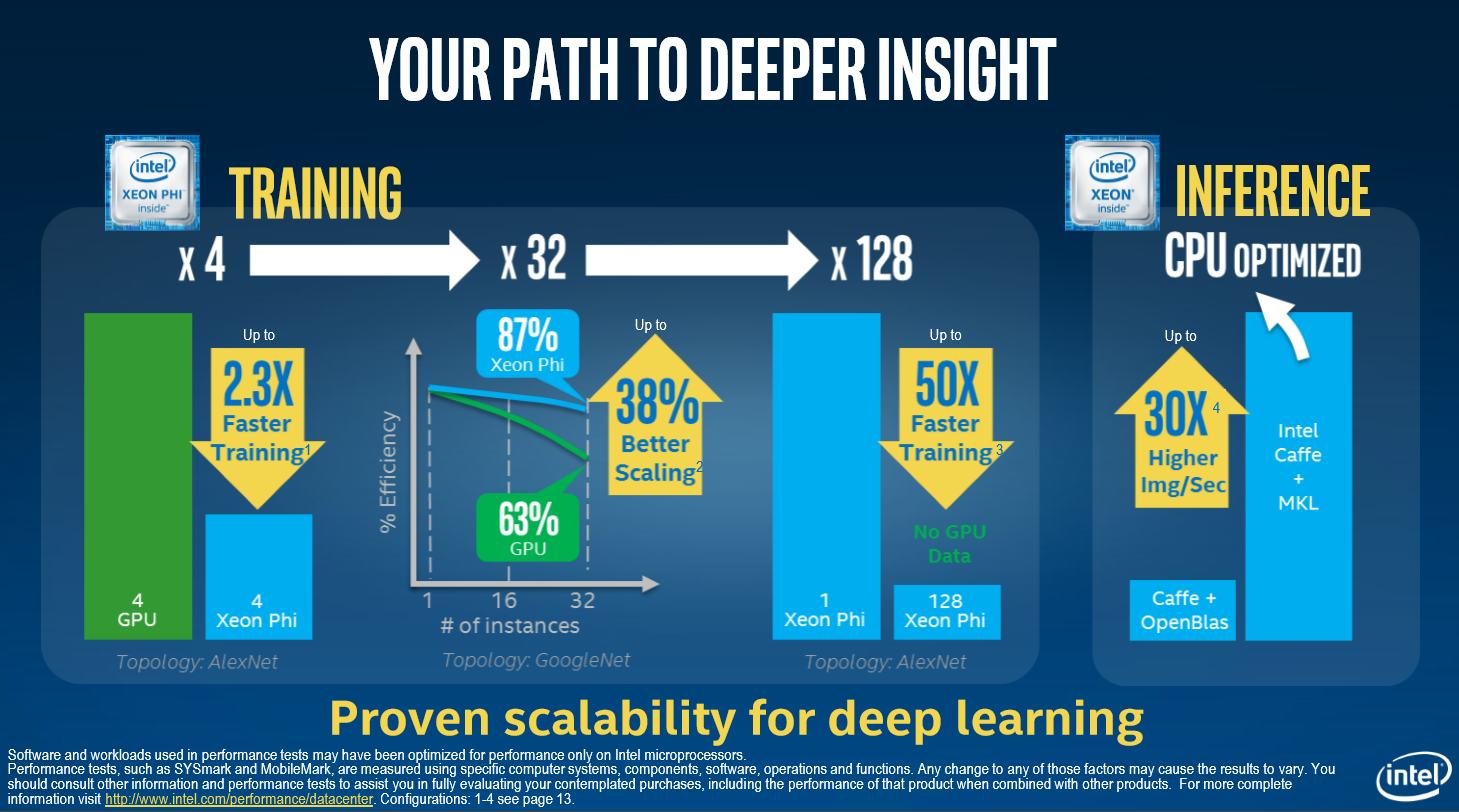

Intel claims the KNL platform will deliver more than 3 TFLOPS of double-precision performance per single-socket node (more than 6 TFLOPS of single-precision). Intel also provided a specific breakout of KNL performance relative to GPUs for machine learning applications.
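The headline figure can be approximated from the core counts and clocks in the SKU table. The Python sketch below is a back-of-the-envelope estimate, not Intel's methodology: it assumes each VPU retires one 512-bit fused multiply-add per cycle (two floating-point operations per lane) and that the chip sustains its listed clock under vector load, neither of which the article states.

```python
VECTOR_BITS = 512
VPUS_PER_CORE = 2
# Assumption: one fused multiply-add per lane per cycle = 2 FLOPs.
FLOPS_PER_FMA = 2

# (cores, clock in GHz) per SKU, from the table above.
SKUS = {
    "7290": (72, 1.5),
    "7250": (68, 1.4),
    "7230": (64, 1.3),
    "7210": (64, 1.3),
}


def peak_gflops(cores, ghz, element_bits):
    """Theoretical peak GFLOPS for a given element width (64 = DP, 32 = SP)."""
    lanes = VECTOR_BITS // element_bits
    return cores * VPUS_PER_CORE * lanes * FLOPS_PER_FMA * ghz


for name, (cores, ghz) in SKUS.items():
    dp = peak_gflops(cores, ghz, 64) / 1000
    sp = peak_gflops(cores, ghz, 32) / 1000
    print(f"KNL {name}: {dp:.2f} DP TFLOPS, {sp:.2f} SP TFLOPS")
```

For the 7290 this gives about 3.46 TFLOPS double-precision and 6.91 TFLOPS single-precision, in line with the "3+" and "6+" claims; the lower SKUs scale down with core count and clock.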

Machine learning algorithms are broken into two general categories: training and inference. Training is the more compute-intensive of the two tasks and is where users typically employ GPUs, FPGAs and ASICs (to a lesser extent) to handle the heavy lifting. Companies employ ASICs, such as Google's recently announced Tensor Processing Unit, after rigorous development on an FPGA platform. The higher cost of ASICs tends to relegate them to hyperscale applications, and Intel positions its Xeon Phi family as a more general-purpose and affordable alternative.

Another important consideration with ASIC-based architectures is that they invariably need some form of compute to drive them, and in today's climate, that means a Xeon. Intel feels that the bootable KNL can help reduce the cost and complexity of a combined ASIC-Xeon architecture.

Intel has the FPGA bases covered. It acquired Altera to bring FPGAs on-die with some Xeon-based products, which might change the paradigm in the future. Intel feels that KNL is uniquely positioned to challenge GPUs in training tasks, and touts its scalability and performance as key differentiators. Intel indicated that GPUs are usually confined to scale-up applications (heavy compute in a single node) instead of the scale-out architectures (multi-node) that KNL addresses.

However, Nvidia’s new Tesla P100 that it announced today can offer more scalability in the traditional PCIe-based multi-node scale-out architectures, which might alter the calculus a bit. Intel also (unsurprisingly) feels that its Xeon family is the best fit for lighter-weight inference tasks.

Omni-Path And Scalable System Framework

Intel also offered an update on the Omni-Path ecosystem, which now consists of over 80,000 nodes (20-25 percent of the addressable market, per Intel). Intel said that many of its Omni-Path deployments overlap with its Xeon Phi sales, and we would expect that trend to accelerate now that Intel has integrated the dual-port 100 Gbps connection into the KNL package.

Finally, Intel announced its new HPC Orchestrator system software, which is based upon OpenHPC. The pre-integrated, pre-tested and pre-validated product is already in trials with major OEMs, integrators, ISVs and HPC research centers, and will launch in Q4 2016.

Paul Alcorn is a Contributing Editor for Tom's Hardware.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

bit_user

"first processor with ... AVX-512 support."

BTW, its predecessor also had 512-bit vector instructions, but of a slightly different flavor. I think AVX-512 might be inferior, due to the lack of fp-16 support. This will certainly put it at a disadvantage vs. the GP100, for certain machine learning applications.

I want to see Intel put a 480+ core (20+ slice) GPU in a Socket-P, paired with an 8-core CPU and HBM. That would probably be more competitive with GPUs.

-

bit_user

18154419 said: "first processor with ... AVX-512 support." Not true. Its predecessor also had this.

18154562 said: Actually, per Wiki "AVX-512 is not the first 512-bit SIMD instruction set that Intel has introduced in processors.

I saw that. Already fixed. Check the last-edited timestamp.

Anyway, what's with this?

"Each quad-threaded Silvermont core features two AVX-512 VPU (Vector Processing Units) per core, for a total of 288 VPU."

If it's two per core, wouldn't it be 144 VPUs? And I think this would equate to 2304 "cores", as AMD and Nvidia would count them.

IMO, Intel missed the boat on machine learning. As you point out, Google is already beyond FPGAs. I predict they're already working on a hard-wired accelerator block, not unlike the QuickSync video accelerator block in their desktop & mobile GPUs.

-

Paul Alcorn

bit_user said: If it's two per core, wouldn't it be 144 VPUs? And I think this would equate to 2304 "cores", as AMD and Nvidia would count them. IMO, Intel missed the boat on machine learning. As you point out, Google is already beyond FPGAs. I predict they're already working on a hard-wired accelerator block, not unlike the QuickSync video accelerator block in their desktop & mobile GPUs.

Good catch, I got the number wrong :) Google is certainly nebulous, but it surely has something newer than its TPU in the works, or already deployed, as otherwise I doubt it would have shown the TPU.

-

bit_user

18154675 said: Google is certainly nebulous, but it surely has something newer than its TPU in the works, or already deployed, as otherwise I doubt it would have shown the TPU.

I'm talking about Intel, though.

It seems AVX-512 is missing half-precision floats, though their Gen9 HD Graphics and Nvidia's Pascal (and Maxwell, for that matter) have it. So, that puts Knights Landing at a disadvantage vs. GPUs. Then, consider that Google & others are even beyond FPGAs, for certain things, which it doesn't even have. So, if I were Intel, I'd be scrambling to build a dedicated machine learning engine into my next generation of chips (see Qualcomm's Zeroth engine).

And, actually, the HD Graphics GPUs are pretty nice. So, I'd love to see them scale up & do more with that architecture.

-

bit_user

Wow, I just noticed they stuck with Silvermont! Rumors were they'd use a new Atom core - Goldmont. This is actually the most surprising detail, for me.

-

turkey3_scratch

18153662 said: 72 core / 288 threads. just what you need for playing solitaire :D

Minesweeper with 1 trillion boxes.

-

Paul Alcorn

18154675 said: Google is certainly nebulous, but it surely has something newer than its TPU in the works, or already deployed, as otherwise I doubt it would have shown the TPU.

18155141 said: I'm talking about Intel, though. It seems AVX-512 is missing half-precision floats, though their Gen9 HD Graphics and Nvidia's Pascal (and Maxwell, for that matter) have it. So, that puts Knights Landing at a disadvantage vs. GPUs. Then, consider that Google & others are even beyond FPGAs, for certain things, which it doesn't even have. So, if I were Intel, I'd be scrambling to build a dedicated machine learning engine into my next generation of chips (see Qualcomm's Zeroth engine). And, actually, the HD Graphics GPUs are pretty nice. So, I'd love to see them scale up & do more with that architecture.

I think that would be a good idea, it definitely should hardcode something a la QuickSync.

In some odd way Intel seems to be setting itself up to compete against itself on the machine learning side. It has the KNL out, but it also purchased Altera to bring the FPGA on-die with Xeons, and judging from the early silicon they are teasing, it is well underway. I see that as a competitor of sorts to the KNL...but perhaps they are just tailoring for specific audiences.

I have no idea how Zeroth managed to fly under my radar, some interesting reading for tonight!