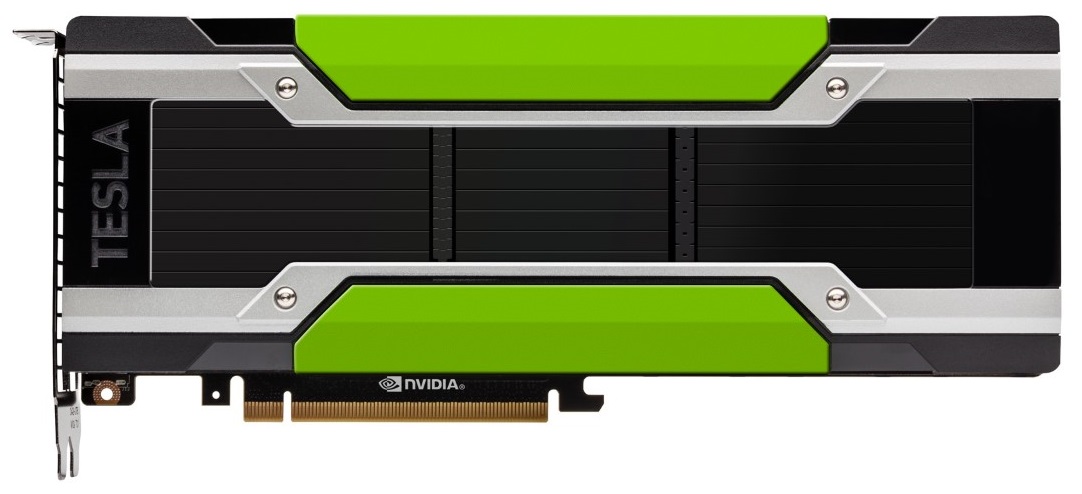

Nvidia Unveils PCI-Express Variant Of Tesla P100 At ISC 2016

Another supercomputing event has arrived, and Nvidia has a fitting GPU announcement for it. Meet the PCI-Express variant of the Tesla P100.

Back in April, at GTC (Nvidia's own GPU Technology Conference), Nvidia announced its Tesla P100 GPU accelerator. At the time, it came only with an NVLink interconnect. Now, on the first day of ISC 2016, Nvidia has announced the PCI-Express variant of the Tesla P100.

This card is based on the same Pascal GPU as the NVLink P100: the GP100, a huge 610 mm² chip that packs 15.3 billion transistors, is built on a 16 nm FinFET process and is paired, of course, with HBM2 memory.

However, the PCI-Express variant of the P100 runs a little slower than the previously announced NVLink variant. Due to TDP restrictions in PCI-Express environments, Nvidia had to lower the power limit from 300 W to 250 W, which it accomplished by reducing the GPU boost clock from 1480 MHz to 1300 MHz.

The result, according to Nvidia, is peak performance of 18.7 teraflops at half precision (the precision at which much of Deep Learning is done), 9.3 teraflops at single precision, and 4.7 teraflops at double precision. That is a tad below the NVLink variant's 21.2, 10.6 and 5.3 teraflops, but still far beyond what any other card can offer over PCI-Express.
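These figures follow from the usual peak-throughput arithmetic: FP32 cores × 2 operations per clock (one fused multiply-add) × boost clock. The sketch below assumes the 3,584 FP32 CUDA cores Nvidia specifies for the Tesla P100 (a number not given in this article) and GP100's 2:1 FP16 and 1:2 FP64 rates relative to FP32; `peak_tflops` is a hypothetical helper for illustration only.

```python
# Peak-throughput sketch for the two Tesla P100 variants.
# Assumptions (not stated in the article): 3,584 enabled FP32 CUDA cores,
# FP16 at twice the FP32 rate and FP64 at half the FP32 rate.

FP32_CORES = 3584   # assumed enabled FP32 cores on Tesla P100
OPS_PER_FMA = 2     # one fused multiply-add counts as two floating-point operations


def peak_tflops(boost_mhz: float) -> dict:
    """Return theoretical peak TFLOPS at the given boost clock (hypothetical helper)."""
    fp32 = FP32_CORES * OPS_PER_FMA * boost_mhz * 1e6 / 1e12
    return {"fp16": fp32 * 2, "fp32": fp32, "fp64": fp32 / 2}


for name, clock_mhz in [("NVLink (300 W)", 1480), ("PCIe (250 W)", 1300)]:
    t = peak_tflops(clock_mhz)
    print(f"{name}: FP16 {t['fp16']:.1f}, FP32 {t['fp32']:.1f}, FP64 {t['fp64']:.1f} TFLOPS")
```

At 1480 MHz this reproduces the NVLink card's 21.2 / 10.6 / 5.3 TFLOPS. At the rounded 1300 MHz it gives 18.6 / 9.3 / 4.7 TFLOPS for the PCIe card; the small gap to Nvidia's 18.7 presumably comes from the boost clock being rounded here.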

In context, the NVLink P100 is meant for large-scale applications, whereas the PCI-Express variant is meant for workstations running smaller-scale workloads, which still makes it well suited to Deep Learning.

Whereas the NVLink P100 comes only with 16 GB of HBM2 memory, the PCI-Express variant is offered either with that or, for less memory-intensive applications, with 12 GB, which delivers 540 GB/s of memory bandwidth rather than 720 GB/s.
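The 540 GB/s figure is exactly three quarters of 720 GB/s, which is what you would expect if the 12 GB card simply drops one of four memory stacks. The sketch below assumes (this is not stated in the article) that the 16 GB card uses four 4 GB HBM2 stacks, each with a 1024-bit interface, and that the 12 GB card runs three of them at the same data rate; `memory_config` is a hypothetical helper.

```python
# Sketch: deriving the 12 GB variant's bandwidth from the number of HBM2 stacks.
# Assumptions (not stated in the article): four 4 GB stacks with 1024-bit
# interfaces on the 16 GB card, three of them on the 12 GB card, same data rate.

STACK_GB = 4
STACK_BUS_BITS = 1024
FULL_STACKS = 4
FULL_BANDWIDTH_GBS = 720  # the article's figure for the 16 GB configuration


def memory_config(stacks: int) -> tuple:
    """Return (capacity in GB, bus width in bits, bandwidth in GB/s) for a stack count."""
    capacity_gb = stacks * STACK_GB
    bus_bits = stacks * STACK_BUS_BITS
    # Same per-pin data rate, so bandwidth scales linearly with the number of stacks.
    bandwidth_gbs = FULL_BANDWIDTH_GBS * stacks / FULL_STACKS
    return capacity_gb, bus_bits, bandwidth_gbs


for stacks in (4, 3):
    cap, bus, bw = memory_config(stacks)
    print(f"{stacks} stacks: {cap} GB, {bus}-bit bus, {bw:.0f} GB/s")
```

Under these assumptions, four stacks give 16 GB on a 4096-bit bus at 720 GB/s, and three stacks give 12 GB on a 3072-bit bus at 540 GB/s, matching both configurations Nvidia lists.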

Availability for the cards in systems is slated for Q4 2016.


Follow Niels Broekhuijsen @NBroekhuijsen. Follow us @tomshardware, on Facebook and on Google+.

Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and pc builds.

-

AdviserKulikov: The "TDP restrictions" sound like an excuse to push their proprietary tech and require users to get an NVidia-only motherboard. PCI-e has had 500 W GPUs running on it; the option for additional power connectors is always available.

jimmysmitty (replying to AdviserKulikov):

It is more about the environment. Sure, they could throw a 300 W GPU in there. The problem is that it would limit the TDP of other parts. Servers and HPC systems, where this will be going, require a precise design for the best cooling and functionality. They don't have custom liquid cooling or cases, but a set design and air cooling.

The article also isn't stating a TDP limitation of PCIe itself, but rather "Due to TDP restrictions in PCI-Express environments", which again refers to the cooling limitations when you have tons of these in a single room doing HPC work.

The interesting thing is that this might be close to what the Pascal Titan will be. I guess we will have to wait and see, though, because the Titan already has 12 GB of VRAM and I would expect the new GPU to have more, 16 GB, but that is just me.

bit_user (replying to jimmysmitty):

This is all a bit silly. There's no reason a server can't dissipate this much power and more. In fact, 4 of the 6 current Intel Xeon Phi SKUs (the PCIe cards, released a couple of years ago) are 300 W parts.


Plus, why do you assume these will only be used in servers? The aforementioned Xeon Phis come in two variants: actively and passively air-cooled. The passive ones are for use in servers, while those with integrated blowers are aimed at workstations.

> The interesting thing is that this might be close to what the Titan for Pascal will be.

I've read that the GP100 has no graphics-specific blocks, meaning it cannot be used on a graphics card. We'll have to await a completely different chip.

-

SpAwNtoHell: The future looks promising... But I somehow doubt this chip will end up in a mainstream enthusiast card at some point; probably a GP102 will take that spot, but there will be many factors to consider...

Overall, what bothers me is that Nvidia fights a battle with itself on both fronts, mainstream high-end and professional... And this is no good...

jimmysmitty (replying to bit_user):


It may be silly, but I assume that nVidia does it for a reason. Do you think they don't want to be able to sell people a 300 W GPU/coprocessor? I think they would if they could. People assume it is because they want people to go to the next step; that is probably true, and would be true for any company, but I also think they wouldn't leave a possible market open for Intel to scoop up. I can't find anything confirming a 250 W TDP restriction for PCIe, but again, there has to be a reason why nVidia wouldn't try to sell to a market they could easily sell to.

And I am not saying only servers, but the majority of Tesla and Xeon Phi cards are put into HPC farms, not workstations.

I also said this is probably close to what the next Titan will be, not that it is. Nothing is stopping nVidia from having a version of GP100 with the GPU blocks.

bit_user (replying to jimmysmitty):

Rumors are that their high-end Graphics Processing Unit will be the GP102. I hope and expect it'll have HBM2. But if costs remain too high, I could see them going with 384-bit GDDR5X.

-

DotNetMaster777: Welcome to the world of high-performance computing!!!

This will be useful for AI and machine learning :) It will improve the machine learning infrastructure!!!

Jarmund: Wow, things look pretty sexy in the HPC world, damn! I wish there was a way to use this marvel and transform it into a gaming card. I mean... it shares the same GTX 1080 GPU (if I'm not mistaken... please correct me if I'm wrong), but it's packed with the better-performing HBM VRAM, and 12 gigs of it! Why, nVidia, why? Why won't you let me burn my credit card?!

bit_user (replying to Jarmund):

> it shares the same GTX 1080 GPU (if i'm not mistaken... correct me please if i'm wrong) but it's packed with the better performing HBM Vram and 12 gigs of it!

No, this uses the GP100, which has no ROPs or other graphics-specific engines, and 12 or 16 GB of HBM2. You need to wait for the GP102, which sounds like it's going to use GDDR5X (probably 384-bit, I'd guess). The GTX 1080 and 1070 both use the GP104.

> why nVidia why? why won't you let me burn my credit card?!

Because there's not a big enough market of people willing to pay something like $5k or $10k for a gaming card. So they went for a less price-sensitive market, until they can bring down all the costs that make this thing so expensive.

A more cynical explanation would be that GTX 1080 already beats all of AMD's existing + soon-to-be-released cards, allowing them to hold back their big weapon.

-

TJ Hooker: @Jarmund It does not share the same GPU as the 1080. The Tesla is GP100; the 1080 is GP104, IIRC.