Intel LGA9324 leak reveals colossal CPU socket with 9,324 pins for up to 700W Diamond Rapids Xeons

5x more contacts than consumer-grade LGA 1851 Arrow Lake sockets.

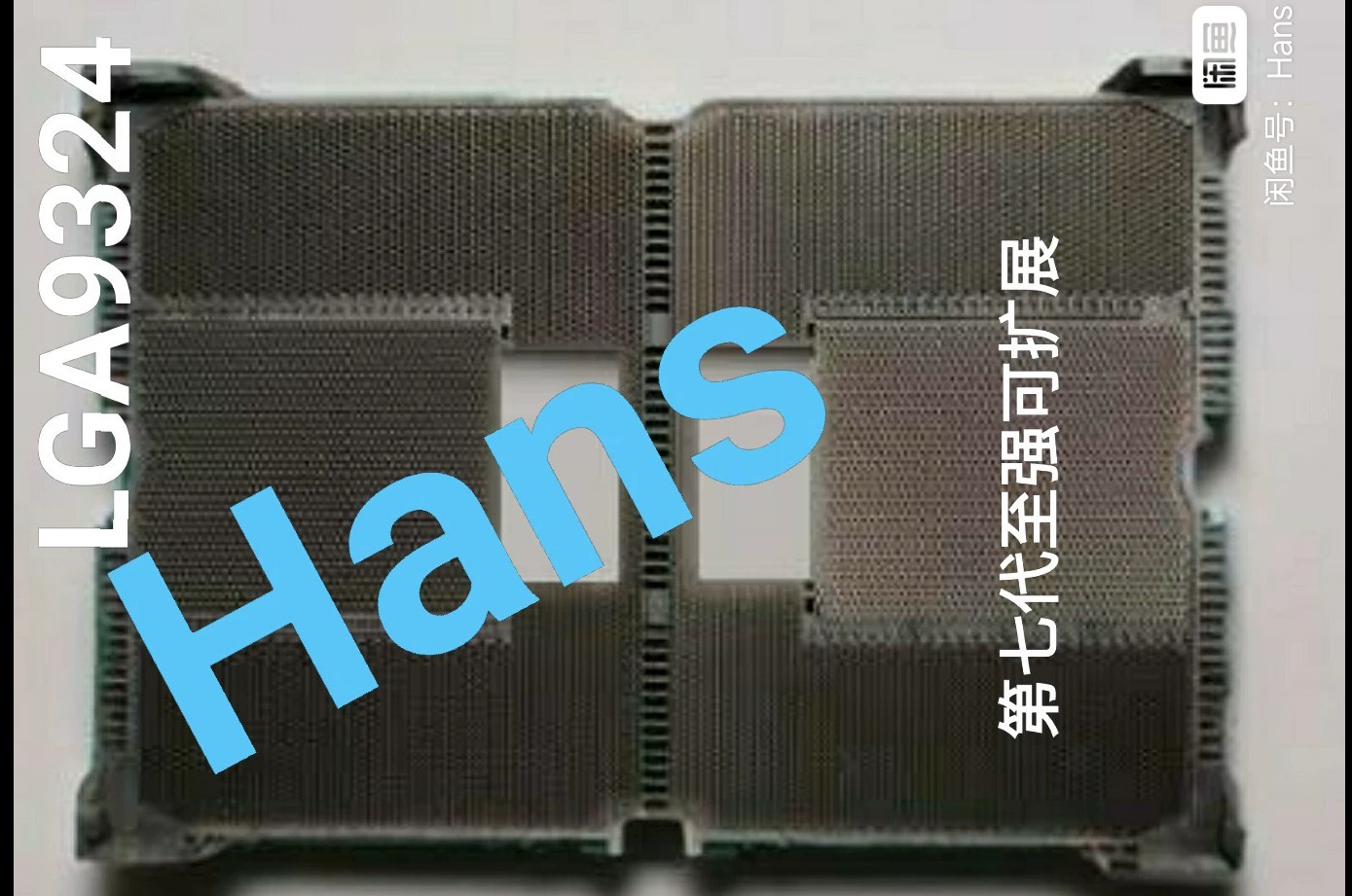

An alleged picture of the socket that is expected to host Intel's next-generation Diamond Rapids (seventh-generation Xeon) family of server CPUs has emerged, as spotted by HXL on X. The LGA9324 socket reportedly carries over 10,000 pins once debug pins and the like are counted. This will likely be the largest LGA CPU socket yet, unless future Venice offerings from AMD exceed this count.

Last August, test tool listings for partners indicated that Intel's future Diamond Rapids processors will reportedly require a new Oak Stream platform with its LGA9324 socket. Under the Xeon 7 family, these CPUs are expected to supersede existing Granite Rapids offerings, across AP (Advanced Performance) Xeon 6900P and SP (Scalable Performance) Xeon 6700P/6500P offerings. Prototype cooler designs from Dynatron suggest Intel will fragment Diamond Rapids into AP and SP flavors, and so we might see a toned-down socket under the Oak Stream family for Diamond Rapids-SP.

Currently, Intel's largest socket, LGA7529, features at least 7,529 contacts, while AMD's SP5 offers 6,096. Xeon 6900P CPUs, utilizing the LGA7529 socket, offer up to 128 P-cores, support 12 DDR5 memory channels, and can reach a TDP of 500W. With roughly 24% more nominal contacts (9,324 versus 7,529), expect more I/O and memory channels, higher TDPs, and possibly higher core counts. While we don't have a banana for scale, visually, this is a massive socket, with about five times the contacts of LGA1851 (used by Arrow Lake).
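For readers who want to check the socket comparisons, here is a minimal sketch that works only from the nominal contact counts quoted in this article. The names `PIN_COUNTS`, `pin_ratio`, and `percent_increase` are illustrative, and the reported debug pins on LGA9324 are deliberately excluded because no exact figure has been given.

```python
# Nominal contact counts as quoted in the article.
# Assumption: debug/extra pins are excluded, since no exact total is known.
PIN_COUNTS = {
    "LGA1851": 1851,   # Arrow Lake (consumer)
    "SP5": 6096,       # AMD server socket
    "LGA7529": 7529,   # Granite Rapids-AP
    "LGA9324": 9324,   # Diamond Rapids (leaked)
}


def pin_ratio(new: str, old: str) -> float:
    """Return how many times more contacts `new` has than `old`."""
    return PIN_COUNTS[new] / PIN_COUNTS[old]


def percent_increase(new: str, old: str) -> float:
    """Return the percentage increase in contacts going from `old` to `new`."""
    return (pin_ratio(new, old) - 1.0) * 100.0


if __name__ == "__main__":
    for old in ("LGA1851", "SP5", "LGA7529"):
        ratio = pin_ratio("LGA9324", old)
        pct = percent_increase("LGA9324", old)
        print(f"LGA9324 vs {old}: {ratio:.2f}x the contacts (+{pct:.0f}%)")
```

By nominal count alone, the step up from LGA7529 is a little under a quarter; counting the reported debug pins would push that figure higher.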

The Goofish listing mentions that the model in the shared image was apparently acquired from a scrap yard and was initially part of a thermal test board. This suggests that Intel is probably already validating Diamond Rapids with its partners, so benchmarks or even an actual CPU sample could surface soon.

Diamond Rapids is expected to leverage the Panther Cove-X architecture, which is believed to serve as a server analogue to Coyote Cove on Nova Lake. Rumored to be built using 18A, the launch of Diamond Rapids hinges greatly on its High Volume Manufacturing (HVM) readiness, with Panther Lake targeting HVM by late 2025.

Panther Lake's timeline isn't an apples-to-apples comparison, though, since its Compute Tile (likely 18A) is expected to span 100-150mm^2, while Compute Tiles on Intel's Xeon processors can be as large as 600mm^2. Even though Intel hasn't confirmed an exact release window for Diamond Rapids, we can expect these CPUs to arrive sometime in 2026, rivaling AMD's Venice.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


chaz_music

In engineering design, the higher the number of connection points, the higher the inherent failure rate. Especially if there is high current through the CPU power pins. At least these are gold plated (oxidation) and restricted pin movement to reduce fretting failure.

Case in point: has anyone heard about the high-pin-count 16-way GPU connectors failing? Whoever chose that configuration did not understand paralleling high-current DC with standard pin-socket connectors. Derating is required for the current capacity per pin. This is well known and easily found in app notes. Recipe for disaster. I found similar pin-sockets used in those connectors, and the derating for a 16-pin 94V0 nylon housing was 5A. And the datasheets are expecting decent wire cooling to draw heat away from the connector housing, i.e., no "pretty" nylon shroud covering the wiring to allow for reasonable convection cooling of the wire. The wire is expected to act as a heatsink for the connector pins and sockets.

rluker5

That is a lot of stuff going on. And the number of pins is just going to increase.

Soon they will need holes through the middle parts of the package to even out the mounting pressure.

Good thing you can do that with chiplets.

ak47jar3d

It seems like they are pushing that platform to the limit. Degraded lga9324 cpus coming in hot?

jp7189

rluker5 said: That is a lot of stuff going on. And the number of pins is just going to increase. Soon they will need holes through the middle parts of the package to even out the mounting pressure. Good thing you can do that with chiplets.

SP5's latch only holds the CPU in place. It relies on the heatsink to provide proper pressure and distribution of pressure.

twin_savage

chaz_music said: In engineering design, the higher the number of connection points, the higher the inherent failure rate. Especially if there is high current through the CPU power pins. At least these are gold plated (oxidation) and restricted pin movement to reduce fretting failure.

At least they have enough pins to dedicate to power so they won't be forced to overdrive the pads like some of the recent issues with AMD's AM5 socket.

Socket creep is one of the biggest man-hour timesinks when designing these new sockets; apparently the issue has gotten bad with these big sockets.

chaz_music said: And the datasheets are expecting decent wire cooling to draw heat away from the connector housing, i.e., no "pretty" nylon shroud covering the wiring to allow for reasonable convection cooling of the wire. The wire is expected to act as a heatsink for the connector pins and sockets.

^^ This is something not enough people are talking about. The copper wire wicks away a non-trivial amount of heat from the Molex socket connector. Larger wire gauges could help too; technically all 12V-2x6 cables are supposed to be 16AWG by definition, but 14AWG would be even better.

bit_user

chaz_music said: the datasheets are expecting decent wire cooling to draw heat away from the connector housing, i.e., no "pretty" nylon shroud covering the wiring to allow for reasonable convection cooling of the wire. The wire is expected to act as a heatsink for the connector pins and sockets.

Or, maybe you could just water-cool the power connectors!

:D

TerryLaze

bit_user said: Or, maybe you could just water-cool the power connectors! :D

https://newsroom.intel.com/data-center/intel-shell-advance-immersion-cooling-xeon-based-data-centers
https://newsroom.intel.com/wp-content/uploads/2025/05/Intel-Shell_Xeon-3.jpg

chaz_music

bit_user said: Or, maybe you could just water-cool the power connectors! :D

Tesla actually does that with their earlier EV chargers. A coolant flows through the cable and also cools the car power connection. I don't know if the newer ones still do that, but it is an interesting solution.