Nvidia Tech Radically Improves AI Inferencing Efficiency

Nvidia revealed the third generation of its TensorRT AI inferencing software, the DeepStream video analytics platform, and the ninth generation of its CUDA technology.

For decades, we’ve heard rumblings of artificial intelligence technology coming to the fore, but until recently, those ideas have been relegated to the world of science fiction. These days, artificial intelligence is no longer a thing of fiction; AI is rapidly becoming science fact. It’s no secret that Nvidia is bullish on artificial intelligence technology (and for good reason). The company positioned itself at the forefront of the AI and deep learning revolution in recent years, and it’s made rapid advancements in the segment year over year. And the company isn’t showing any signs of slowing down.

Yesterday, at GTC China 2017, Jensen Huang, Nvidia’s Founder and CEO, revealed a handful of new technologies that improve the performance and efficiency of deep learning inferencing. Huang also gave an overview of the partnerships the company is forming within the industry, offering a glimpse of how Nvidia’s deep learning AI technology will shape our world in the years to come.

Third Generation TensorRT Technology

Last September, Nvidia replaced the GPU Inference Engine with the TensorRT deep learning inference engine. The first generation of Nvidia’s TensorRT inference engine offered double the performance of the GPU Inference Engine in tasks such as image classification, segmentation, and object detection.

Earlier this year, Nvidia released TensorRT 2, which improved INT8 precision performance by up to 45x. Nvidia also introduced “sequence based models for image captioning, language translation, and other applications.”

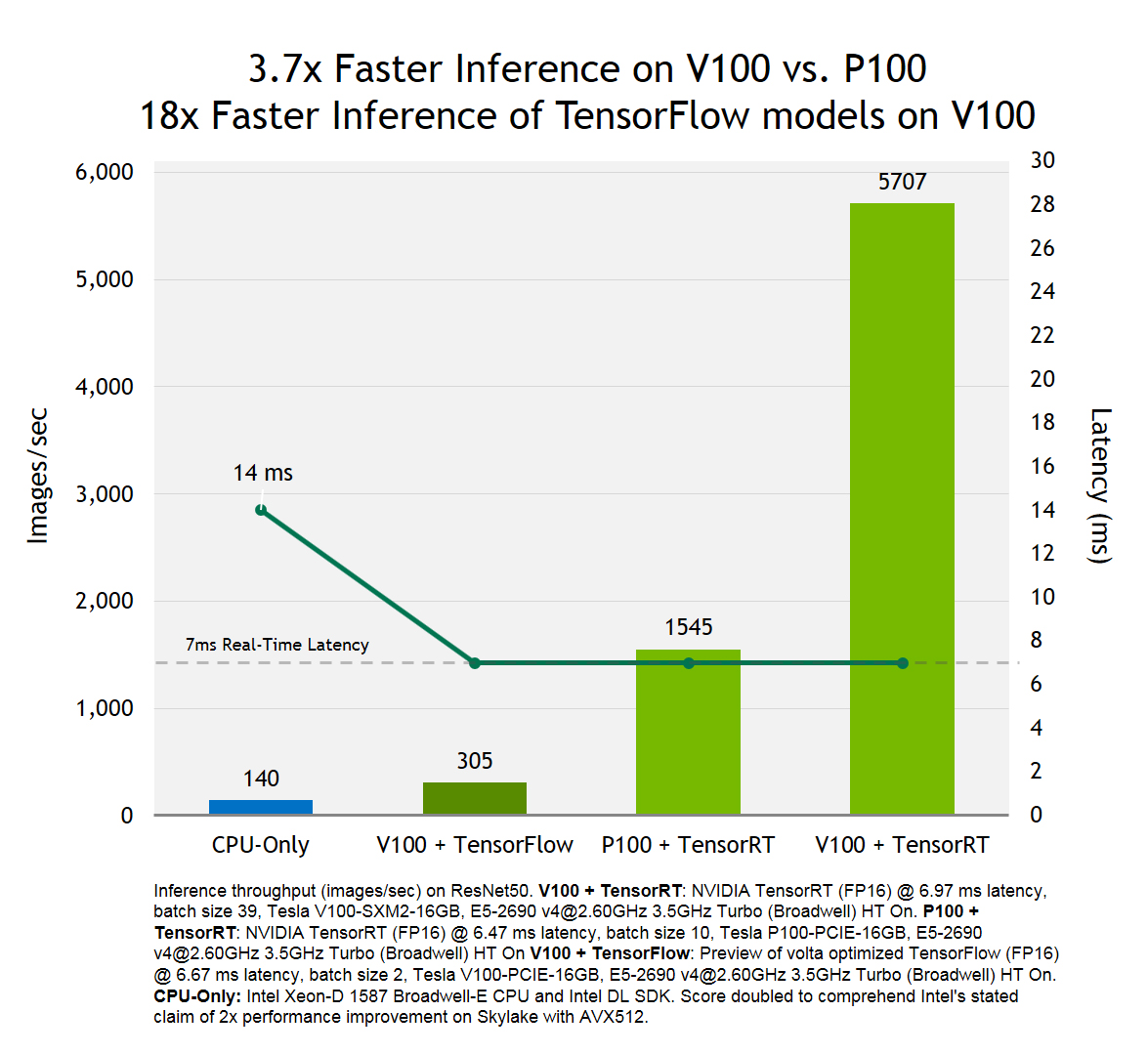

Nvidia now offers TensorRT 3, which delivers 3.7x the performance on Tesla V100 GPUs compared to Tesla P100 GPUs. TensorRT 3 can also “optimize and deploy TensorFlow models up to 18x faster” on Volta hardware than it can on a CPU-only system. TensorRT 3 also offers a 40x speedup on ResNet-50 and a 140x performance boost on the OpenNMT neural machine translation system when compared to CPU-based inference.

TensorRT 3 enables a new level of power efficiency for neural networks. Huang said a single HGX server with eight Tesla V100 GPUs would offer the same computational performance as a CPU-based deployment of 160 dual-CPU servers. Nvidia didn’t say how much an 8-GPU HGX rack-mount server would cost, but the company said it would be cheaper than the $600,000 to $700,000 that the CPU-based servers would set you back. The GPU-based server would also cut power consumption by a staggering margin, from 65 kilowatts down to just 3 kilowatts.
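To put those power figures in perspective, here is a minimal back-of-the-envelope sketch. It uses only the 65 kW and 3 kW numbers Nvidia quoted; the electricity price and the assumption that both setups run around the clock are ours, not Nvidia's, and the function names are purely illustrative.

```python
# Back-of-the-envelope comparison using the figures quoted by Nvidia.
# These are vendor claims, not independent measurements.
CPU_CLUSTER_POWER_KW = 65.0   # 160 dual-CPU servers (quoted)
GPU_SERVER_POWER_KW = 3.0     # one HGX server with eight Tesla V100 GPUs (quoted)

# ASSUMPTIONS (not from the article): 24/7 operation and a flat,
# hypothetical electricity price in USD per kilowatt-hour.
HOURS_PER_YEAR = 24 * 365
ASSUMED_PRICE_PER_KWH = 0.10


def power_reduction_factor(cpu_kw: float, gpu_kw: float) -> float:
    """How many times less power the GPU server draws."""
    return cpu_kw / gpu_kw


def annual_energy_kwh(power_kw: float) -> float:
    """Energy used in one year at a constant power draw."""
    return power_kw * HOURS_PER_YEAR


def annual_savings(cpu_kw: float, gpu_kw: float, price_per_kwh: float) -> float:
    """Yearly electricity cost difference at the assumed price."""
    saved_kwh = annual_energy_kwh(cpu_kw) - annual_energy_kwh(gpu_kw)
    return saved_kwh * price_per_kwh


if __name__ == "__main__":
    factor = power_reduction_factor(CPU_CLUSTER_POWER_KW, GPU_SERVER_POWER_KW)
    savings = annual_savings(
        CPU_CLUSTER_POWER_KW, GPU_SERVER_POWER_KW, ASSUMED_PRICE_PER_KWH
    )
    print(f"Power reduction: {factor:.1f}x")
    print(f"Estimated annual savings: ${savings:,.0f}")
```

By this math, the GPU server draws roughly 21.7 times less power than the CPU cluster. The dollar figure depends entirely on the assumed electricity price and ignores cooling and other overhead, so treat it as an illustration rather than an estimate of real-world costs.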

Intelligent Video Analytics Simplified

Nvidia also introduced the DeepStream SDK, which “simplifies the development of scalable, intelligent video analytics (IVA) applications” by combining AI inference, video transcoding, and data curation technologies into a single API. Nvidia’s DeepStream SDK offers “image classification, scene understanding, video categorization, and content filtering” capabilities. Applications created with the DeepStream SDK run on Nvidia’s Tesla accelerated computing platform.

The DeepStream SDK includes sample code and pre-trained deep learning models to help developers create software that can classify video content and detect objects in video streams.

CUDA Turns 9

Along with the TensorRT 3 and DeepStream SDK releases, Nvidia also released the ninth generation of its CUDA technology. The latest version of Nvidia’s CUDA GPU-acceleration libraries takes advantage of the power of Nvidia’s Volta platform. The company said that HPC apps developed with CUDA 9 would offer up to 1.5x faster performance when running on Volta GPUs compared to apps built with CUDA 8.

CUDA 9 apps also demonstrate faster performance across multi-GPU configurations, particularly with Volta GPUs, where Nvidia’s next-generation NVLink technology can deliver twice the throughput of the prior generation.

Available Now

All three of Nvidia’s new technologies are available now to registered Nvidia developers. You can find more information about TensorRT 3, DeepStream SDK, and CUDA 9 on Nvidia’s developer resource website.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

-

bit_user I think these numbers are pretty much in line with what they already said about the V100. The big announcement is mostly just the SDK support for it.

It will be interesting to know how much of the V100's capabilities are shared by the smaller Volta GPUs. I think it's safe to assume they won't have its tensor product engine.

Edit: okay, TensorRT's network optimization features are interesting. I didn't know it had those.