Nvidia Joins Open Compute Project With HGX-1 Hyperscale GPU Accelerator

Nvidia has joined companies such as Facebook, Microsoft, Intel, and Google as a member of the Open Compute Project (OCP), which aims to make servers more efficient and less costly while sharing the resulting designs with the public. Nvidia's first contribution is a new hyperscale GPU accelerator.

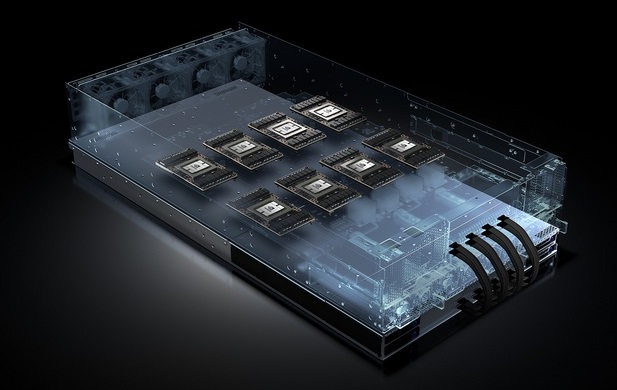

The HGX-1, as it’s called, is made up of eight Tesla P100 GPUs, all of which use the Pascal architecture. Depending on the workload, the CPU can designate one or all of the GPUs in the accelerator, a switching procedure that's said to be based on Nvidia’s NVLink connection technology. Nvidia announced its OCP membership in partnership with Microsoft, which will use the HGX-1 as part of its Project Olympus server design. The design also works with Intel’s latest Xeon Skylake CPUs, as well as AMD’s new 32-core, 64-thread Naples processor.

According to Roy Kim, Nvidia’s director of Tesla products, the company chose to join the OCP now because it saw an opportunity to set a new standard in cloud computing.

“The fact is that industry standards accelerate innovation,” he said. “We saw that happening in the past when PC was just getting off the ground. We see that cloud computing is in the same junction in some sense. There’s some different designs in how to do AI, and we felt that working with Microsoft will come up with the standard that will quickly offer AI services.”

Kim also noted that the limit of eight GPUs per accelerator was due to power and design constraints. However, that doesn’t mean that companies can't connect multiple HGX-1s. Microsoft boasted that the Project Olympus design allows up to four HGX-1 accelerators to be connected at the same time, so that some workloads can use the full set of 32 GPUs. Kim mentioned that the HGX-1 isn't exclusive to Project Olympus; because it connects over PCIe, it can be used with any server design.

Because the OCP is oriented toward open designs, any cloud vendor can take the design specs and partner with a manufacturer to build its own servers. In that regard, Nvidia doesn’t appear to profit directly from the design itself. Kim said that the goal was simply to create a standard for cloud computing.

“HGX-1 is the first instance of what we hope to be a roadmap,” he said. “Standards evolve and workloads evolve, and we’ll continue to work with Microsoft. Our goal was to provide a standard that we believe… is the best design for hyperscalers today.”


You can look at the Tesla P100 GPUs in detail on Nvidia’s website. As for the entire Project Olympus design, you can browse its hardware and specs on GitHub.

Rexly Peñaflorida is a freelance writer for Tom's Hardware covering topics such as computer hardware, video games, and general technology news.


Wisecracker: Sounds good, lets see

The MI8 is the "Radeon Instinct" - aka R9 Nano @ "<175w," available in 8X clusters. The Mi25 is presumed to be Vega with AMD announcing it as the "Falcon Radeon Instinct Cluster with 16X Radeon Instinct MI25 @ 400 Teraflops." I suspect nVidia likely went with their 32X cluster to top the "Falcon Radeon Instinct Cluster." Last year AMD said '1H-2017' for availability --- likely to coincide with the release of the Naples enterprise platform.

Not sure how Chipzilla will respond with the "Phi" as they are unlikely to stand-pat with their "competition" BUT they do seem to be lagging in FP16 ...