AMD Demos Naples Server SoC, Launches Q2 2017

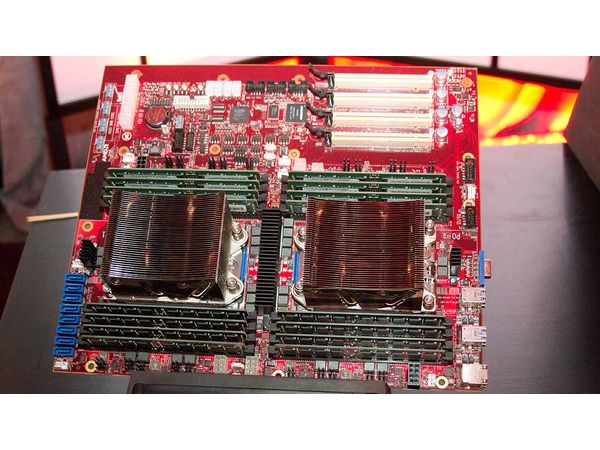

AMD demoed its 64-core/128-thread dual-socket Naples server platform against a comparable Intel server at its recent Tech Day, but it timed the announcement to coincide with this week's OCP (Open Compute Project) Summit. AMD presented a narrow workload that reveals little about Naples' broader performance, although Naples does compare favorably against the Intel platform. More importantly, AMD also revealed more details about the architecture and design of its forthcoming server SoCs.

In the past, AMD's desktop PC market share has lagged woefully behind Intel's, but a quick glance at data center share reveals an even larger disparity between the two companies. Intel currently commands ~99% of data center server sockets, and its dominance in this high-margin segment has been key to its success on many fronts. Intel is using its commanding Xeon lead as a springboard into other lucrative segments, such as networking with Omni-Path and silicon photonics and memory with 3D XPoint, but it relies on locked-down proprietary interconnects that have raised the ire of the broader industry.

AMD, by contrast, uses open protocols where it can and participates in the development of several new open interconnects, such as CCIX, Gen-Z, and OpenCAPI. For the broader industry, a competitive AMD therefore represents more than just a cost advantage and a second source. Let's dive in.

The High-Level

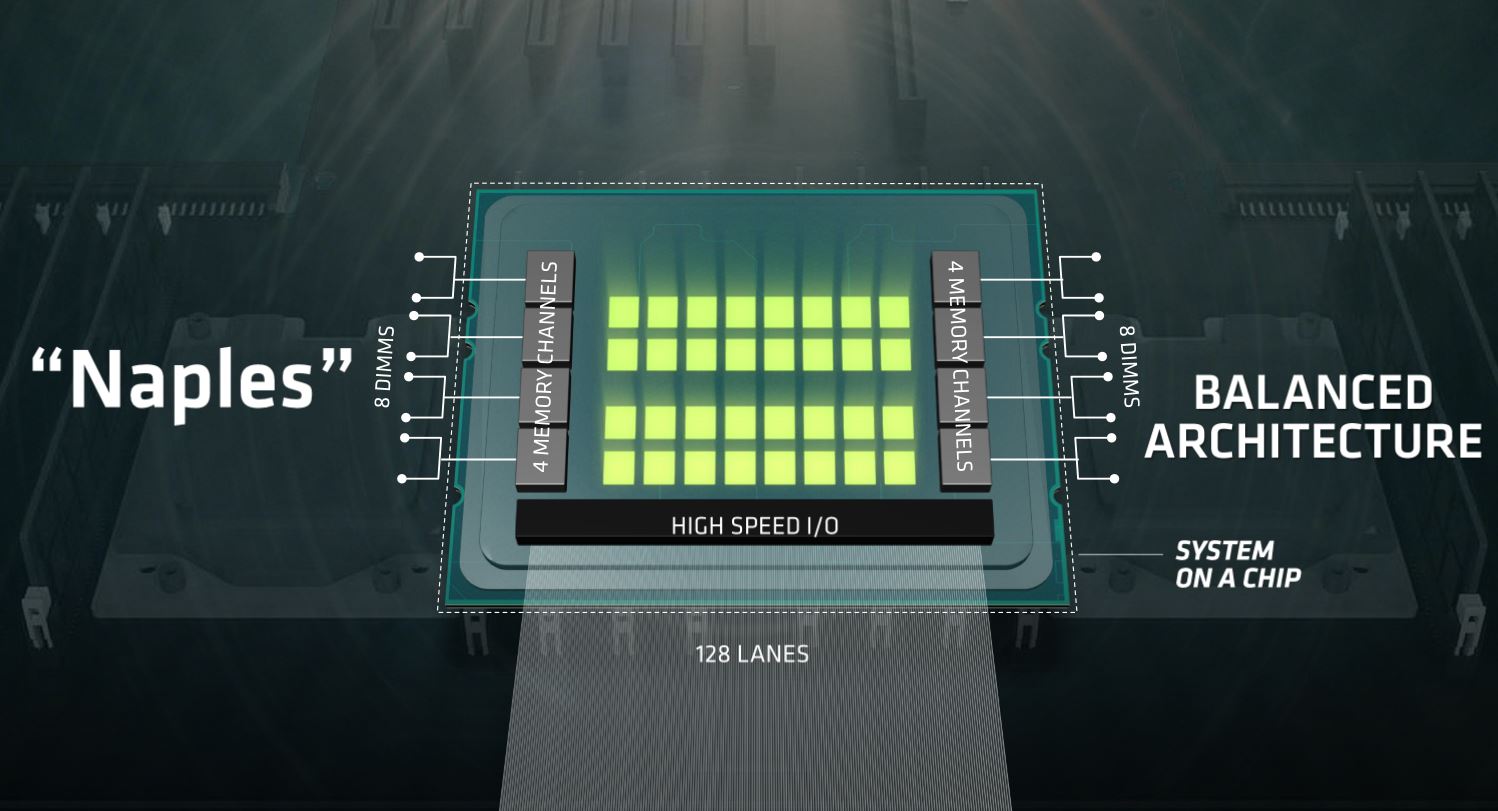

AMD's Naples brings Southbridge functionality on-die, so there is no need for a separate I/O hub or chipset. AMD claimed the integrated connectivity will increase performance and reduce cost. For instance, a dual-socket server can support up to 24 NVMe drives without additional hardware. Naples also features integrated Ethernet, although AMD did not specify performance metrics. The Zen microarchitecture brings SMT to the Naples platform, and each SoC's 32 physical cores and 64 threads give AMD 45% more cores than Intel's flagship E5-2699 v4, which features 22 cores.
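The 45% figure is a simple ratio of per-socket core counts. A minimal check, using only the 32- and 22-core counts quoted above (the helper name `percent_more` is ours, purely for illustration):

```python
def percent_more(a: int, b: int) -> float:
    """Return how much larger a is than b, as a percentage of b."""
    return (a - b) / b * 100.0


NAPLES_CORES = 32  # physical cores per Naples SoC
XEON_CORES = 22    # physical cores on Intel's E5-2699 v4

print(f"Naples: {percent_more(NAPLES_CORES, XEON_CORES):.1f}% more cores")
# Both chips run two threads per core, so 64 vs. 44 threads gives the same ratio.
print(f"Naples: {percent_more(2 * NAPLES_CORES, 2 * XEON_CORES):.1f}% more threads")
```

Both lines print 45.5%, which rounds down to the 45% cited in the text.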

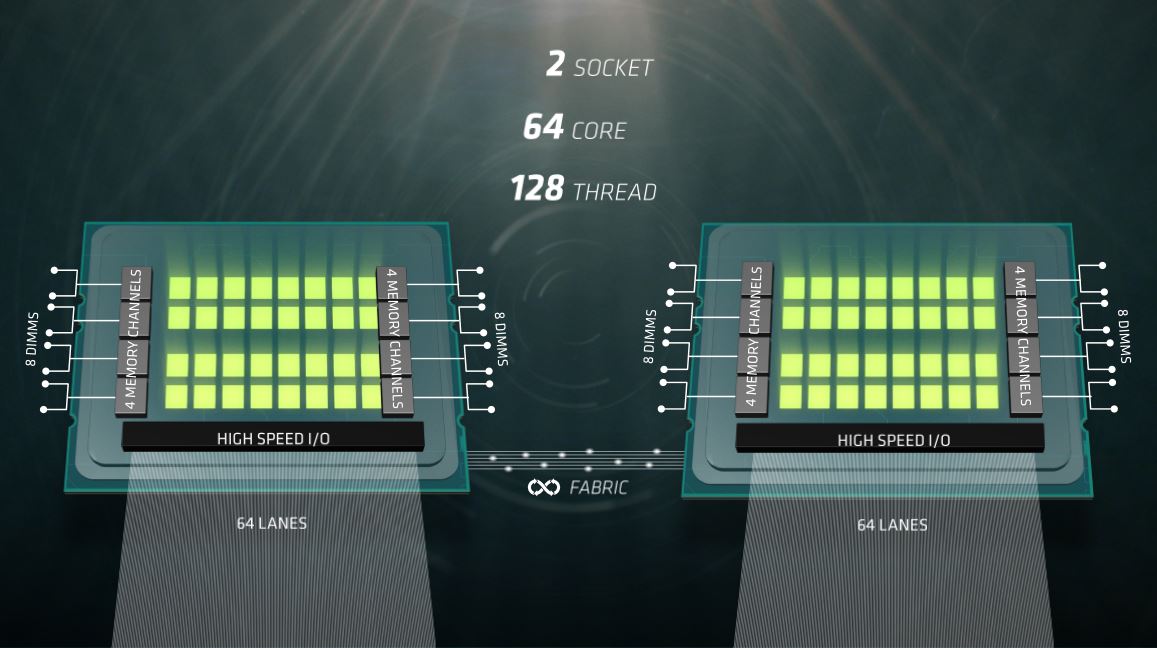

A single Naples SoC provides 128 PCIe 3.0 lanes compared to Intel's 40, another impressive improvement, though Naples loses some of that advantage in dual-socket servers. The generous PCIe lane allocation allows for six x16 connections, which AMD noted is particularly useful for machine learning applications that use GPUs. Naples features eight DDR4 memory channels per socket without the use of a memory buffer (such as Intel's Jordan Creek Scalable Memory Buffers), whereas Intel is limited to four channels. Naples' ample memory provisioning supports up to 170.76 GB/s of throughput and a maximum capacity of 2.048TB of memory per processor, whereas Intel's E5-2699A v4 plateaus at 76.8 GB/s of throughput and 1.54TB of memory per processor.
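Peak DDR4 bandwidth is simply channels × transfer rate × 8 bytes per transfer, since each channel is 64 bits wide. The sketch below applies that formula: four channels of DDR4-2400 give 76.8 GB/s, Intel's published peak for the E5-2699A v4. AMD did not say which memory speed its own figure assumes; DDR4-2667 is our assumption, and it lands close to the quoted number. The 128GB DIMM size is also inferred, by dividing 2.048TB by the 16 DIMMs per socket implied by the 32-DIMM dual-socket configuration.

```python
BYTES_PER_TRANSFER = 8  # a DDR4 channel is 64 bits (8 bytes) wide


def peak_bandwidth_gbs(channels: int, mt_per_s: int) -> float:
    """Theoretical peak bandwidth in decimal GB/s for a set of DDR4 channels."""
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000.0


def capacity_gb(dimms: int, gb_per_dimm: int) -> int:
    """Total memory capacity in GB."""
    return dimms * gb_per_dimm


intel_bw = peak_bandwidth_gbs(4, 2400)   # E5-2699A v4: four channels of DDR4-2400
naples_bw = peak_bandwidth_gbs(8, 2667)  # assumption: DDR4-2667 on all eight channels
naples_cap = capacity_gb(16, 128)        # assumption: 16 DIMMs of 128GB per socket

print(f"Intel:  {intel_bw:.1f} GB/s")
print(f"Naples: {naples_bw:.1f} GB/s, {naples_cap} GB per socket")
```

This prints 76.8 GB/s for Intel and 170.7 GB/s with 2,048 GB per socket for Naples. These are theoretical peaks; sustained throughput in real workloads is lower on both platforms.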

AMD will release several SKUs with varying core counts and frequencies, but it isn't revealing clock speeds, TDP, or pricing at this time. The company noted that it will provide more information on the product stack when Naples comes to market (obviously). AMD will support a broad range of out-of-band management interfaces, such as IPMI, and although OS compatibility remains undefined, the company is working with many operating system and hypervisor vendors.

Expanding to two sockets doubles the total to 16 memory channels (32 DIMMs), which support up to 4TB of memory. AMD's Infinity Fabric connects the two processors over the PCIe interface, so each Naples SoC dedicates 64 of its 128 PCIe lanes to the inter-socket link in a dual-socket configuration. That leaves 128 PCIe lanes available to the host system, which still outweighs Intel's 80 lanes in a dual-socket server. AMD hasn't specified Infinity Fabric's bandwidth, among many other details. The Infinity Fabric lane requirements prevent AMD from employing Naples in quad-socket configurations, which would consume all of the available PCIe lanes for inter-socket communication. It isn't clear if AMD plans to bring a quad-socket competitor to market.
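The lane arithmetic works out as follows, assuming, as the 128-lane dual-socket total implies, that each socket gives 64 of its 128 lanes to the Infinity Fabric link. The function name `host_lanes` is hypothetical, and it rejects socket counts beyond two because only 1P and 2P configurations have been described.

```python
LANES_PER_SOCKET = 128        # PCIe 3.0 lanes per Naples SoC
FABRIC_LANES_PER_SOCKET = 64  # assumption: lanes each socket spends on the 2P link
XEON_LANES_PER_SOCKET = 40    # PCIe lanes per Broadwell-EP Xeon


def host_lanes(sockets: int) -> int:
    """PCIe lanes left for devices in a 1P or 2P Naples system."""
    if sockets == 1:
        return LANES_PER_SOCKET
    if sockets == 2:
        # Each socket keeps what it does not spend on the inter-socket link.
        return sockets * (LANES_PER_SOCKET - FABRIC_LANES_PER_SOCKET)
    raise ValueError("only 1P and 2P Naples configurations are described")


for n in (1, 2):
    print(f"{n}P Naples: {host_lanes(n)} lanes vs. Xeon: {n * XEON_LANES_PER_SOCKET}")
```

The dual-socket system matches, rather than doubles, the single-socket lane count, which is the advantage Naples gives up in 2P servers.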


AMD hasn't released detailed information on its Infinity Fabric, though we do know that it's an updated version of the HyperTransport protocol. AMD did state that it has improved the protocol's QoS, security characteristics, and other key metrics, which it says helps with VM provisioning and management.

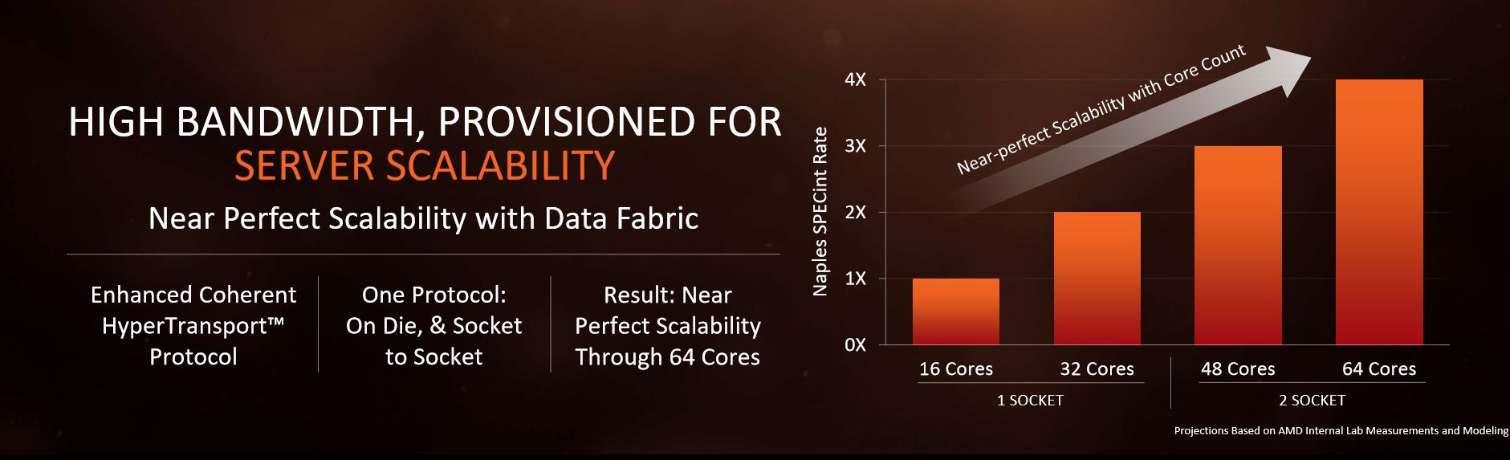

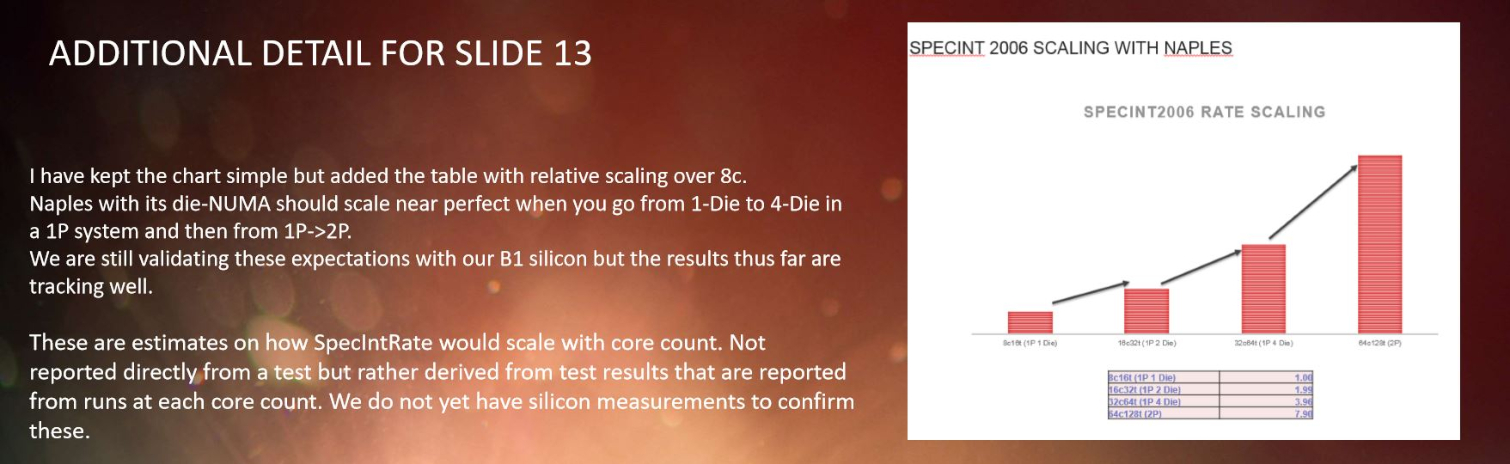

AMD also claimed that the Infinity Fabric provides near-linear scaling up to 64 cores, but as the footnote indicates, the company hasn't validated scaling with working silicon.

The Demos

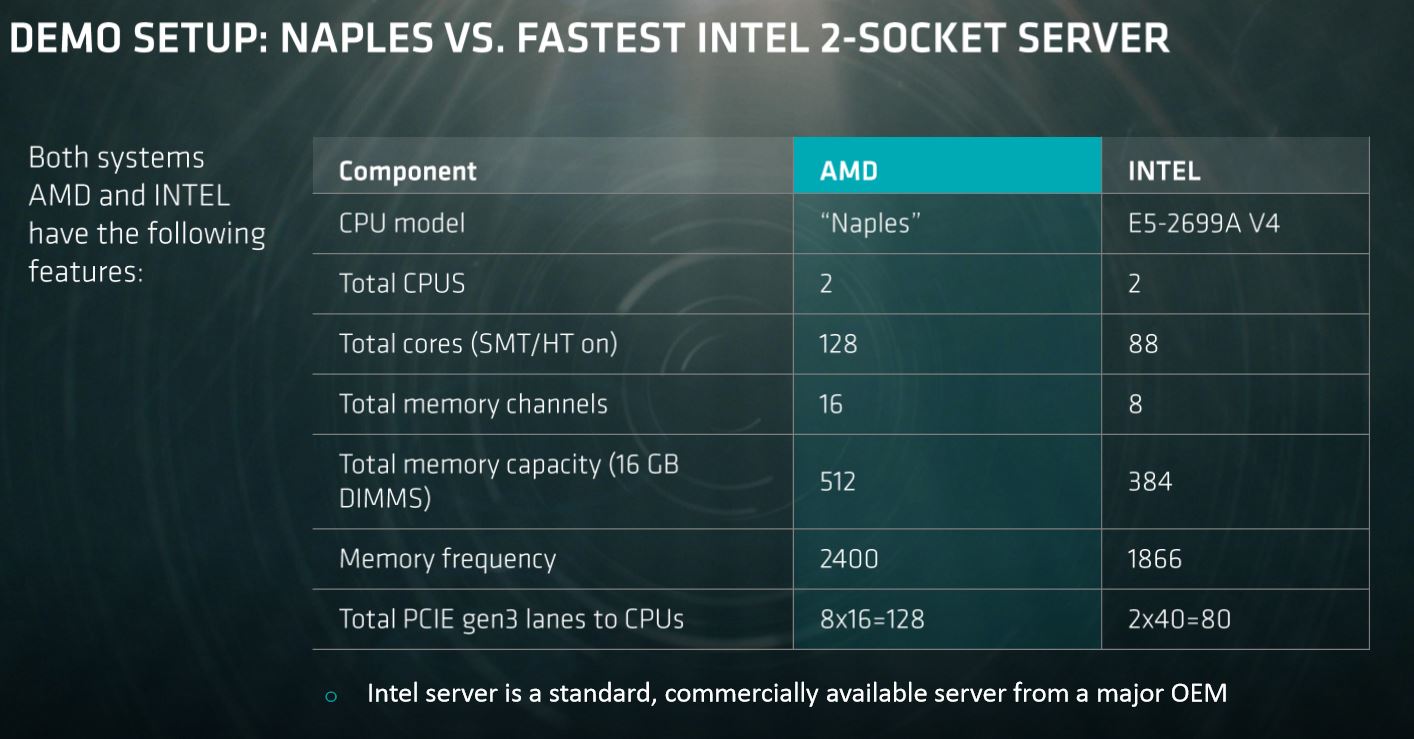

AMD configured two dual-socket test systems for its live demonstration, and the Naples "Speedway" reference server design enjoys a pronounced advantage over the Broadwell server in core count, memory capacity, memory speed, and PCIe lanes.

AMD was light on the details of its custom seismic workload. We do know that it uses AVX instructions, but it is impossible to compare the results to standardized workloads or provide a detailed analysis of the tests. AMD indicated that the workload is a computationally intensive analysis involving iterations of 3D wave equations that stresses the CPU, memory, and I/O subsystems. We also weren't provided with detailed system specifications or settings, so take the results with a grain of salt.
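AMD's seismic code is not public. For readers unfamiliar with this class of workload, the sketch below shows a generic textbook kernel of the kind described: an explicit finite-difference update of the 3D wave equation using a 7-point stencil. It is our illustration, not AMD's benchmark; the grid size, Courant number, and point-source setup are arbitrary choices. Each grid point reads its six neighbors plus two time levels but performs only about a dozen floating-point operations, which is why such kernels tend to lean heavily on memory bandwidth as well as on the cores. Production codes vectorize this loop (with AVX, for example) instead of running it in pure Python.

```python
def zeros(n: int) -> list:
    """An n x n x n grid of zeros."""
    return [[[0.0] * n for _ in range(n)] for _ in range(n)]


def wave_step(u_prev, u_curr, courant2: float):
    """Advance u_tt = c^2 * laplacian(u) by one explicit (leapfrog) time step.

    courant2 is (c * dt / dx)^2; the scheme is stable in 3D for courant2 <= 1/3.
    Boundary points stay at zero (a simple fixed boundary).
    """
    n = len(u_curr)
    u_next = zeros(n)
    for i in range(1, n - 1):
        for j in range(1, n - 1):
            for k in range(1, n - 1):
                # 7-point Laplacian: six neighbors minus six times the center
                lap = (u_curr[i + 1][j][k] + u_curr[i - 1][j][k]
                       + u_curr[i][j + 1][k] + u_curr[i][j - 1][k]
                       + u_curr[i][j][k + 1] + u_curr[i][j][k - 1]
                       - 6.0 * u_curr[i][j][k])
                u_next[i][j][k] = (2.0 * u_curr[i][j][k] - u_prev[i][j][k]
                                   + courant2 * lap)
    return u_next


def simulate(n: int, iterations: int, courant2: float = 0.1):
    """Propagate a point-source impulse from the grid center for `iterations` steps."""
    u_prev, u_curr = zeros(n), zeros(n)
    mid = n // 2
    # Identical first two time levels: an impulse with zero initial velocity.
    u_prev[mid][mid][mid] = u_curr[mid][mid][mid] = 1.0
    for _ in range(iterations):
        u_prev, u_curr = u_curr, wave_step(u_prev, u_curr, courant2)
    return u_curr


field = simulate(n=9, iterations=10)
print(f"center amplitude after 10 steps: {field[4][4][4]:.4f}")
```

Doubling the grid's edge length multiplies both the memory footprint and the work per iteration by eight, so grids with billions of samples quickly become limited by memory capacity and bandwidth.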

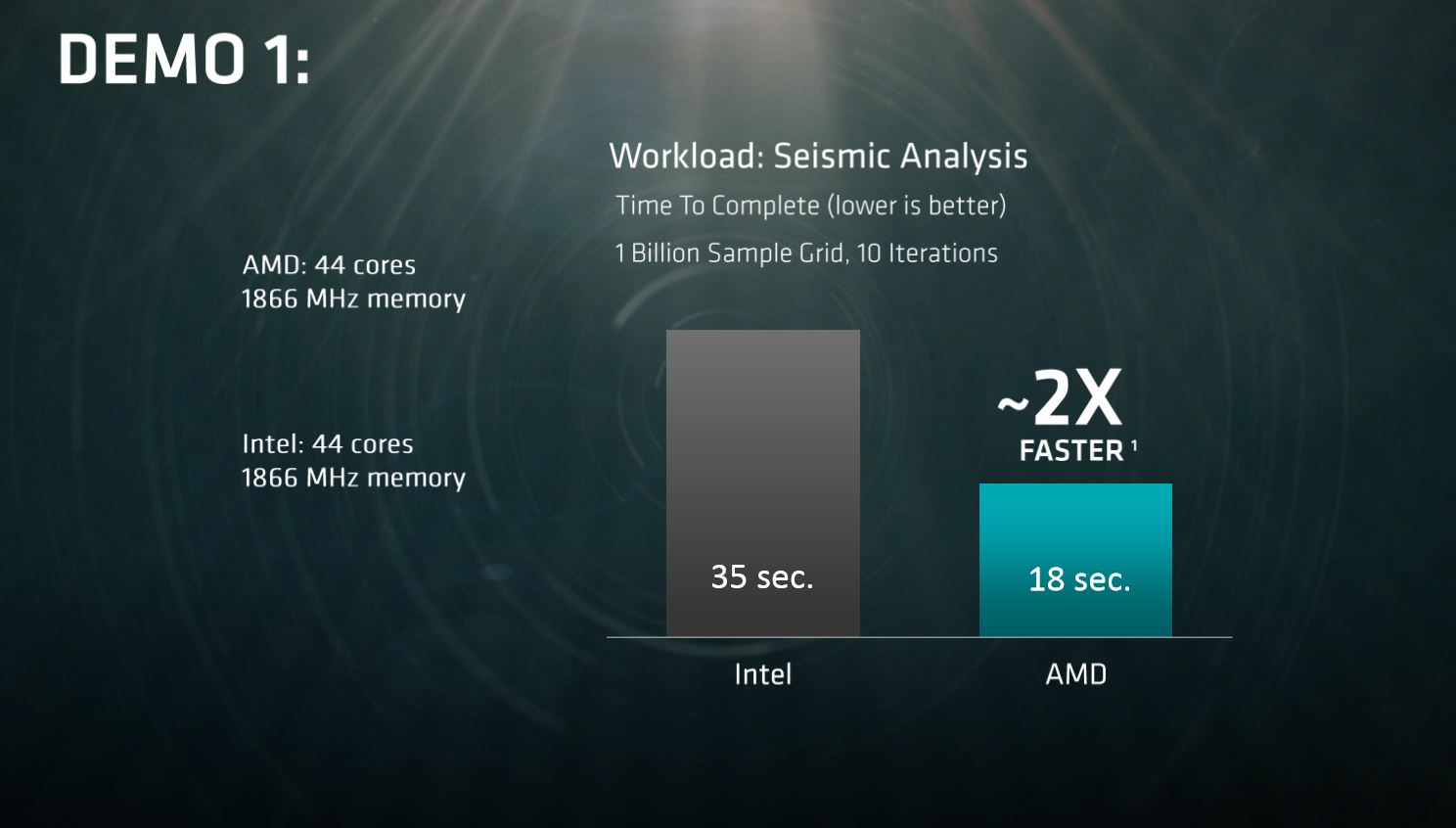

The first workload consisted of 10 iterations on a 1-billion-sample grid. AMD restricted Naples' core count (to 44 cores) and memory speed to match the Intel system, yet it still completed the workload in roughly half the time.

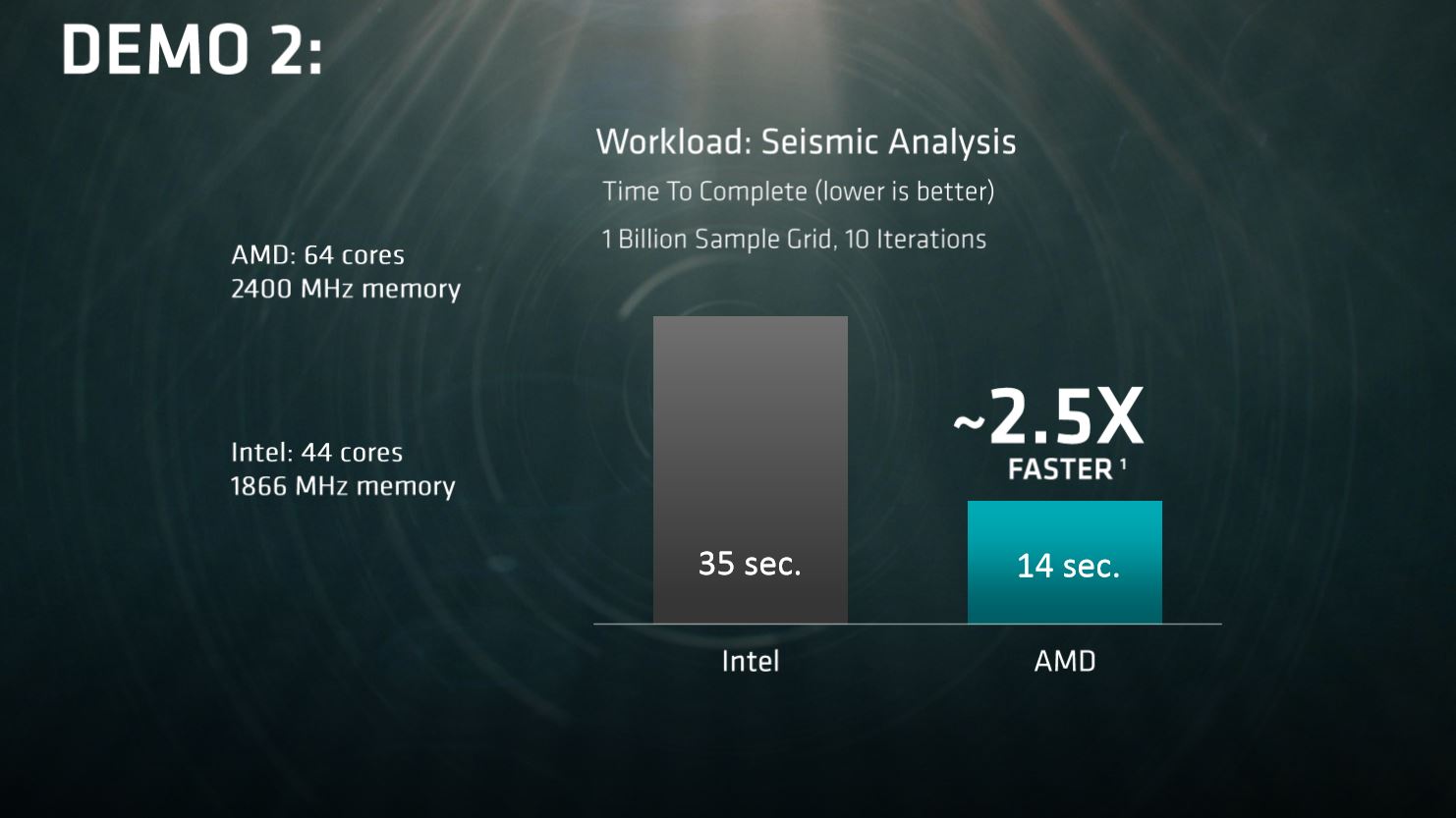

For the second test, AMD ran the same workload but brought all 64 cores to bear and bumped its memory speed up to 2,400MHz, while the Intel system remained at 1,866MHz. Once again, AMD's carefully selected workload completed faster on the Naples system, this time yielding a 2.5X advantage. Because so little is known about the workload, it's impossible to draw any useful scalability conclusions by comparing the completion times of the 44-core Naples configuration and the native 64-core configuration.

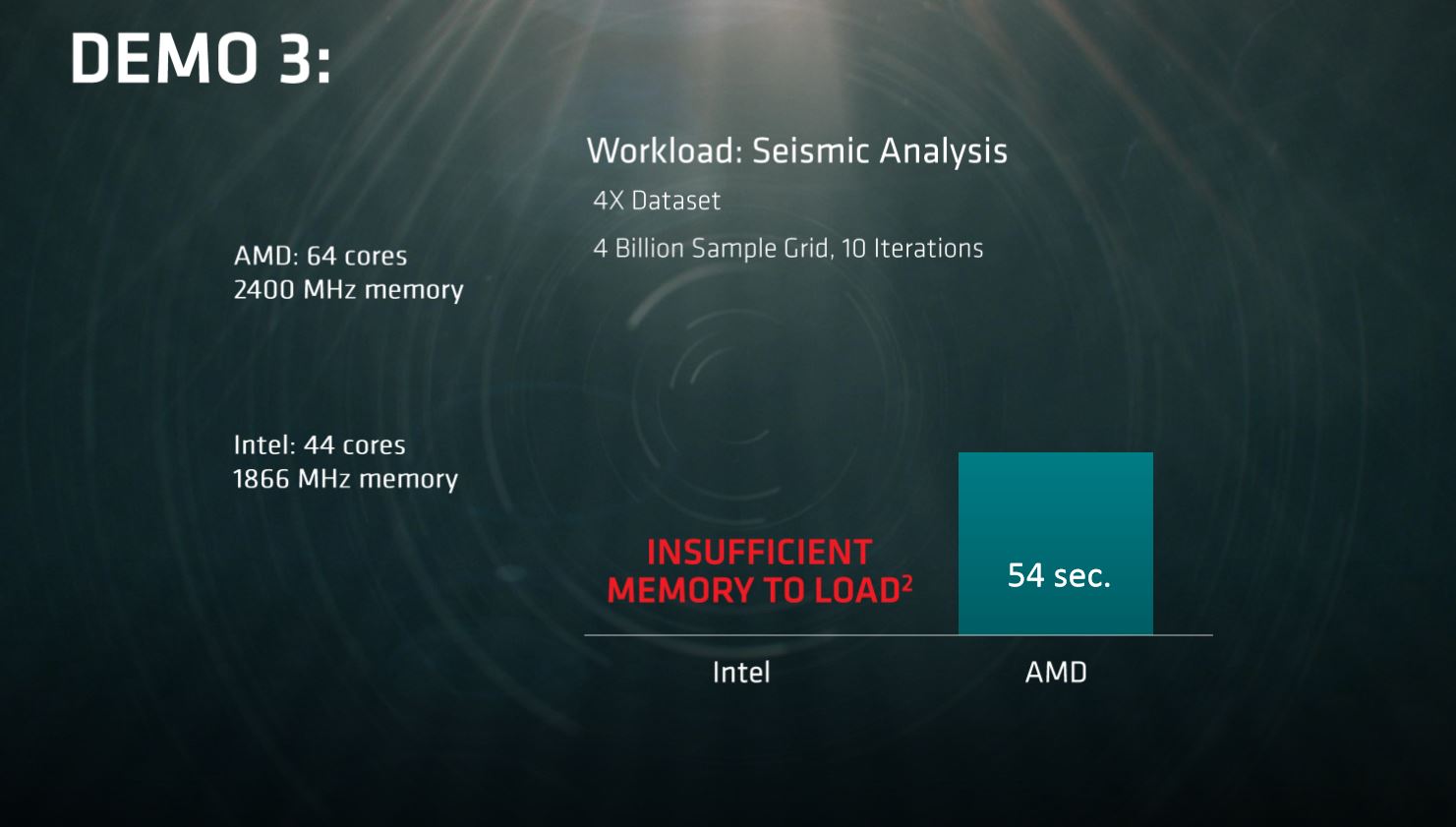

Finally, AMD provided a demo specifically designed to highlight its memory capacity advantage. The company increased the dataset to 10 iterations on a 4-billion-sample grid, which simply couldn't run on the Intel system because of its smaller memory capacity.

The Takeaway

AMD's Naples design is impressive, and although the benchmarks are obviously very limited and designed to cast Naples in a favorable light, our initial Ryzen tests indicate the Zen architecture is well-optimized for HPC workloads. It will be interesting to see the actual Naples silicon in action in a wide range of industry-standard workloads.

Frankly, AMD faces an uphill battle against Intel in the data center. Intel has the advantage of a wide range of server SKUs and a burgeoning portfolio of new technologies at its disposal. Intel is bringing FPGAs on-package with its Xeons, and we expect them to come on-die in the future, which offers a tremendous amount of flexibility. Other emerging technologies, such as silicon photonics and 3D XPoint, are also major differentiators.

Perhaps Intel's greatest advantage in the data center is its long track record of reliable platforms. Data center operators tend to shy away from new architectures until they have proven their trustworthiness in the field, so we may see a slow adoption rate as AMD deploys its solutions. Intel also benefits from a wide range of applications that are almost solely optimized for its architecture, so AMD will have to expend a considerable amount of effort on ecosystem enablement.

AMD's biggest advantage will likely come in the form of its pricing structure. Intel's Xeon portfolio is prohibitively expensive, particularly at the high end, so any competition is welcome. Unfortunately, most of the Naples story is yet to be told, so we will have to wait until launch in Q2 2017 for more information.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

redgarl Well, I just hope the 1080p gaming benches aren't going to worry investors... just c'mon...

aldaia Any data on CPU frequency and TDP?

Xeon E5-2699A v4 is a 145W part and runs at 2.4 GHz. 2x the performance with the same number of cores suggests that Naples is running at higher frequencies.

Infidel_2016 The datacenter I just worked in had 5400 servers in it. Just over half were running dual and quad AMD 16-core chips. Old tech, but nice to see AMD in the farm.

aldaia Answering to myself, just realized the 2x could also be due to the benchmark being memory bound. Naples has 2x the channels, and it gets to 2.5x the performance when memory freq is increased.

Cores seem to be relatively irrelevant in this particular benchmark.

spiketheaardvark As glad as I am to see a decent AMD desktop chip, it is pretty clear AMD's real priority is servers. Those TDP numbers everyone glossed over in the Ryzen reviews are more important to AMD than I think most people gave credit to.

drajitsh Conservatism among data center managers might well nullify any advantage AMD has, like it did in the P4/Opteron days, when AMD did have a brief lead.

firefoxx04 When AMD had their lead, massive cloud companies were not around. Google, Microsoft, IBM, and Amazon are always adding servers to their cloud systems. I am sure there will be buyers.

Hand__ Power consumption alone will be the reason data centers dump Intel.

An under-clocked Ryzen at 30W scores 850 in Cinebench; that is less power than Intel Atom processors draw under load at 33W.

That will be millions of dollars in power savings for companies with large data centers.

Intel is scared to death that people will figure this out. AMD's desktop Ryzen CPU is beating their low-power Atom processors.

https://forums.anandtech.com/threads/ryzen-strictly-technical.2500572/

bit_user According to this, Naples is just 4x desktop Ryzen dies in a single package. This suggests there might be only 64-96 lanes of true PCIe bandwidth to the CPU cores themselves.

http://www.anandtech.com/show/11183/amd-prepares-32-core-naples-cpus-for-1p-and-2p-servers-coming-in-q2

It'd be interesting to see this thing go head-to-head with a KNL Xeon Phi.

genz Quoting aldaia:

"Answering to myself, just realized the 2x could also be due to the benchmark being memory bound. Naples has 2x the channels, and it gets to 2.5x the performance when memory freq is increased.

Cores seem to be relatively irrelevant in this particular benchmark."

Doubling memory channels equates to a less than 20% performance advantage even in the most biased applications. AMD would have had to write its own benchmark specifically to get the figures you claim. Also, with AMD still not having 3200 memory running on the (already released) Ryzen at the time of this article, what makes you believe they would have it running on Naples multisocket engineering samples when they don't even have finalized chips... especially when the competition is top-of-the-range 50+ core multisocket Intel hardware, on which fast memory is so much more difficult to get stable than on a comparatively simple i7?

Quoting bit_user:

"According to this, Naples is just 4x desktop Ryzen dies, in a single package. This suggests there might be only 64-96 lanes of true PCIe bandwidth to the CPU cores, themselves.

http://www.anandtech.com/show/11183/amd-prepares-32-core-naples-cpus-for-1p-and-2p-servers-coming-in-q2

It'd be interesting to see this thing go head-to-head with a KNL Xeon Phi."

I would call false on that. PCI lanes are not that hard to test for, and we would quite easily see if they lied on that point when GPU cards start dropping to x8 speeds past 96 lanes. Without a major press release before launch (which would be major because of all the bad press it would generate, combined with how precarious the current moment is for investors after the rating downgrade), AMD stands to be sued by business purchasers that expected 128 PCI lanes and got 96. Even with that kind of press release, AMD would still be at risk of major financial issues. What else could they have lied about is the next obvious question, and the recalls would be much stronger than with the competition because they have a new product and a bad track record going back nearly 10 years (performance-wise). Look at the fallout from GTX 970s having slower access to one segment of their RAM and you'll see. I RMA'd mine because I had it mated to a 4K display.

You just don't do that kind of stuff to server buyers who are purchasing trays full of CPUs at $2000 a unit. You can get sued for millions in losses, lose huge business customers, and then get sued for millions more in damages because your lack of PCI lanes caused Google servers to run slowly, which had to be worked around by a God-level $15000-an-hour tech team and a revision of the load-balancing code for the entire Google system. Big firms can't wait to redeploy, and software solutions to hardware problems are not only expensive but clearly not the fault of the company that makes them.

In short, FUD is being spread.