Nvidia GameWorks VR Multi-Res Shading And Other Parlor Tricks

As the countdown continues to serious, first generation, flagship-level virtual reality (see: HTC/Valve Vive, Q4 2015; Oculus Rift, Q1 2016), the companies making the serious graphics processing hardware to power it all are jockeying for pre-eminence, each taking turns laying claim to the rendering brawn in the forgotten boxes conveniently hidden for those mind-blowing VR demos, and then touting the sophisticated brains still required to eke out every last microsecond of latency. Better that minds are blown than chunks, as it were.

We know plenty about the Nvidia GeForce and AMD Radeon brawn. A little more now (see our Nvidia GeForce GTX 980 Ti review), and even more soon enough (Fiji bound, anyone?). As for the brains, late last year Nvidia provided details on VR Direct, early this year AMD rolled out Liquid VR, and it all sounded eerily similar, suggesting perhaps a strong bit of guidance from the likes of Oculus.

Now comes Nvidia's GameWorks VR, a collection of technology initiatives for VR game developers and headset makers to make VR games perform better. It's a subset of the overall Nvidia GameWorks development tools and libraries.

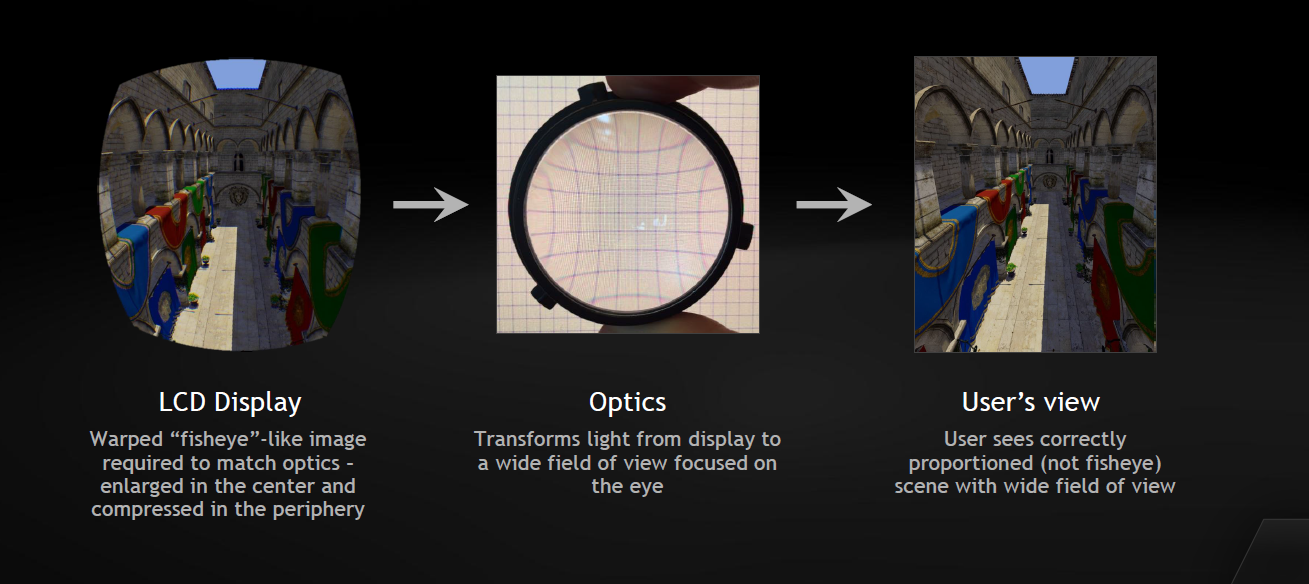

Nvidia has provided several details behind GameWorks VR, specifically related to image manipulation. If you've tried on a VR headset, you know that it is, for all practical purposes, a completely immersive experience with a very wide field of view, accomplished by a spherical warping of light onto your eyes that pushes focus out to about 20 feet to ensure there's no eye strain.

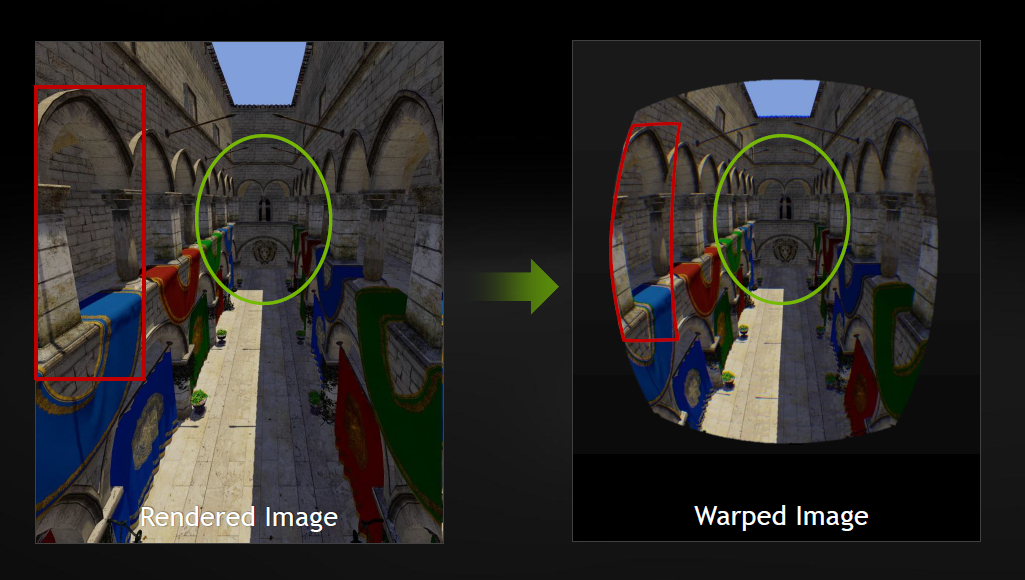

A warped image cannot be rendered natively, so the GPU renders a normal image and then warps it before display, squeezing the pixels at the edges of the image while ballooning out the center. That effectively gobbles up processing power only to compromise some of the fidelity of the original image geometry.
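As a rough illustration of why the edges lose detail, here is a minimal sketch of a simple radial (barrel-style) warp. The single-coefficient model and the value `k = 0.25` are assumptions chosen for illustration, not the distortion profile of any actual headset or of Nvidia's pipeline.

```python
def warp_radius(r_out, k=0.25):
    """Radius in the rendered image that is sampled for an output pixel
    at normalized radius r_out (0 = center, 1 = edge).
    k is a hypothetical distortion coefficient."""
    return r_out * (1.0 + k * r_out ** 2)


def squeeze_factor(r_out, k=0.25):
    """Derivative of warp_radius with respect to r_out: how much rendered
    image is packed into one step of output radius."""
    return 1.0 + 3.0 * k * r_out ** 2


# Print the sampled radius and squeeze factor at the center, midway, and edge.
for r in (0.0, 0.5, 1.0):
    print(f"r_out={r:.1f}  r_src={warp_radius(r):.3f}  squeeze={squeeze_factor(r):.3f}")
```

In this toy model the squeeze factor is 1 at the center and grows toward the edge, which is the sense in which rendered pixels near the border are wasted: several of them collapse into each displayed pixel.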

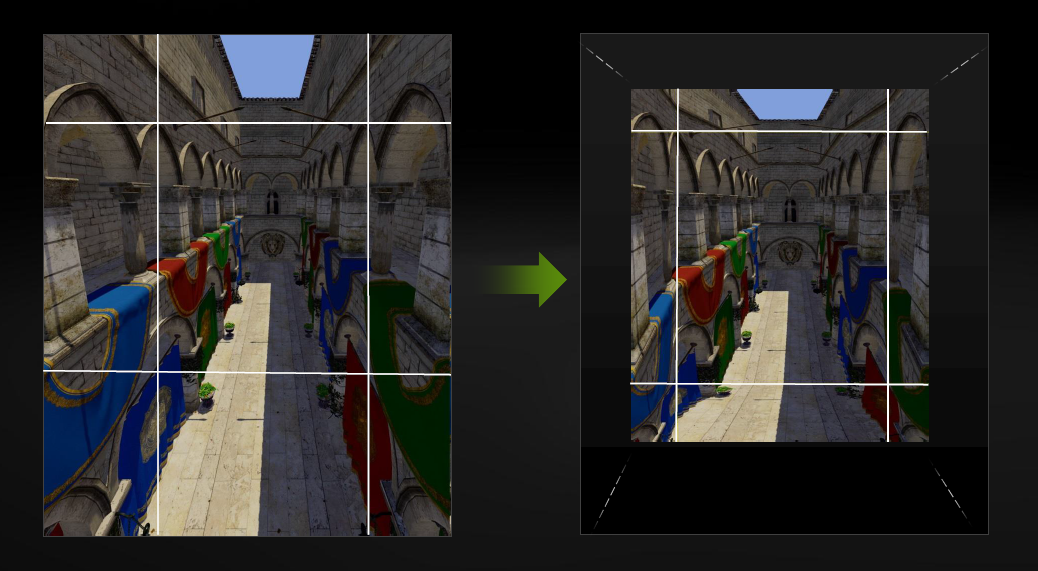

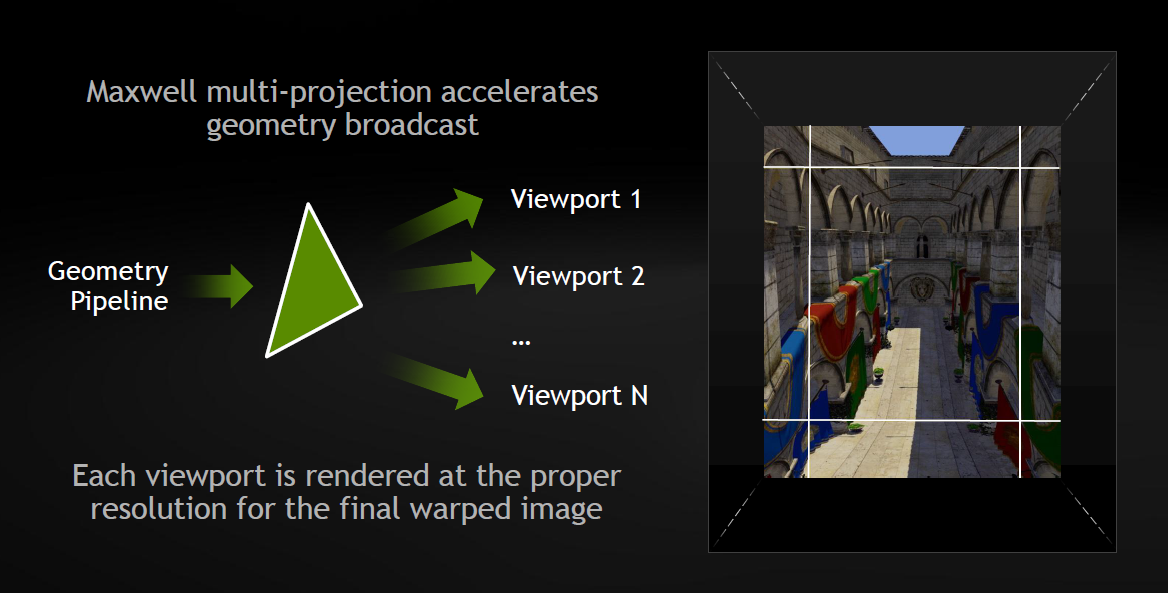

To overcome this, Nvidia employs multi-resolution shading, which is another way of saying that GPU cycles aren't spent rendering pixels you'll never see anyway. At a high level, Nvidia's technology compresses the outer edges of the image. It does so by dividing the image into nine regions, called viewports, each of which is instructed to render at a particular resolution and to remember that scaling factor. The center region (the sweet spot) might be rendered 1:1, but because the regions at the edges are rendered at a reduced scale, the GPU does less work and performance rises. And you're none the wiser.
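To get a feel for the savings, the nine-viewport idea can be sketched as simple arithmetic. This is only a back-of-the-envelope model: the function name, the 60 percent center region, and the 0.5 edge scale are hypothetical values, not figures published by Nvidia.

```python
def multires_pixel_count(width, height, center_frac=0.6, edge_scale=0.5):
    """Return (full_pixels, shaded_pixels) for a 3x3 grid of viewports.

    center_frac: fraction of each axis covered by the 1:1 center region.
    edge_scale:  per-axis resolution scale applied to the border regions.
    Both parameters are illustrative assumptions.
    """
    border_w = width * (1.0 - center_frac) / 2.0
    border_h = height * (1.0 - center_frac) / 2.0
    # Each axis is split into border, center, border, with a scale per strip.
    cols = [(border_w, edge_scale), (width * center_frac, 1.0), (border_w, edge_scale)]
    rows = [(border_h, edge_scale), (height * center_frac, 1.0), (border_h, edge_scale)]

    shaded = 0.0
    for h, sy in rows:
        for w, sx in cols:
            # Corner viewports are scaled on both axes, side viewports on one.
            shaded += (w * sx) * (h * sy)
    return width * height, shaded


full, shaded = multires_pixel_count(1000, 1000)
print(f"shaded fraction: {shaded / full:.2f}")
```

Under these assumed numbers the GPU shades roughly two thirds of the pixels it would otherwise, and shrinking the center region or the edge scale pushes the savings further, at the cost of more visible softening at the periphery.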

For those prone to sounding smart at parties, this bit of viewport optimization makes use of Maxwell's multi-projection acceleration of geometry (specifically on the second-gen GM2xx GPUs). The result delivers just enough pixel density, with compression pushed to the maximum level just short of where it becomes visible in the warped image. Nvidia claimed that this can improve pixel shader performance by up to a factor of two, and that the quality difference is imperceptible.

I was able to see some of this in action. Nvidia cranked up the compression on an image little by little, turning compression on and off for before and after views. I paid special attention to the edges of the image, and in most cases I really couldn't see any difference. It was only when Nvidia turned compression up past around 50 percent that I could see a visible blur and shake at the outer edges, something Nvidia is continuing to improve upon.

Between now and the launch of products such as the HTC/Valve Vive and the Oculus Rift, expect to see more updates from both AMD and Nvidia. Nvidia's VR Direct, like AMD's Liquid VR, includes enhancements such as Asynchronous Timewarp, which is different from the warping that multi-resolution shading addresses. Michael Antonov, chief software architect at Oculus, provided a pretty good explanation of it and its drawbacks here, and we discussed how it works in our overview of AMD's Liquid VR here.

Nvidia's VR Direct also includes VR SLI so that multiple GPUs can update each eye separately, but also in sync; that is, the frames aren't rendered in an alternating fashion, for hopefully obvious reasons. VR Direct also makes use of Maxwell's DSR and MFAA. And all of these settings are delivered automatically with GeForce Experience.

When consumer headsets do ship, there will be game-ready drivers, or optimized game settings, including SLI profiles for VR, all delivered through GeForce Experience. These settings are specifically customized to a user's hardware.

Follow Fritz Nelson @fnelson. Follow us @tomshardware, on Facebook and on Google+.

-

thor220 The problem with GameWorks is that Nvidia doesn't allow game devs to give AMD or any other GPU vendor access to code that uses Nvidia GameWorks IP (nor to give tips or hints on what that code entails, which would enable them to optimize for it).

With the latest GameWorks title, Project CARS (yet another disaster for AMD users), one can be certain that only Nvidia users will have an enjoyable experience with any game branded with Nvidia GameWorks. -

Shankovich So this is nVidia's answer to Liquid VR I take it. Like what they're doing, very impressive, but if it's proprietary so...yea. -

somebodyspecial

The problem with AMD's Mantle is it doesn't work with anyone else, so you should expect MANTLE AMD branded games to work best on them...LOL. Intel was turned down after looking to get hands on with Mantle code 4, count them FOUR times and gave up.

I really wish whiners would stop this crap. Also note, the recent debacle with Witcher 3 and project cars is AMD's fault for not having anything to do with them until the end. IE, AMD came to Witcher3 guys in the last two months before launch asking for TressFX to be in for launch (the devs said this!) while it was clear 2+ years ago NV's hairworks crap was being worked on IN the game as it was shown in public at a show. Why didn't AMD approach them at THAT time 2yrs ago to work with them on TressFX? Then they go around whining they were denied? Well duh, you can't approach people in the last two months of POLISHING whining about major feature support. Do that approach when it's being DEVELOPED years before. The second AMD saw the wolves on screen touting NV hair, they should have made a phone call to set up some dev time for TressFX. But they didn't. Also note how badly it affects non-maxwell cards. A simple config file change fixes it in both cases anyway or likely in a patch coming up no doubt. Clearly the major amount of time was spent on maximizing the potential of maxwell performance (duh, new gen). Patches are already adding perf to older cards and AMD is releasing drivers to supposedly fix their perf (as it should!).

Sure, if a company spends 2-3yrs working with a dev to showcase their hardware you should expect it will run great on their hardware. Both sides do it, so get over it. IF you come up with some REALLY great effects that run on your hardware excellent, that's your job and it isn't your job to share that hard work. Again, get over it.

Quit acting like a victim (AMD needs to do this too). AMD isn't a victim, unless it's by their own choice to go after consoles, mantle etc, instead of spending ALL of that money on CPU/GPU/DX Drivers. These 3 things are why they are behind in everything. I hope they fix it with ZEN, and start acting like a survivor instead of a victim (quit whining, put out better stuff that we want to buy).

https://steamcommunity.com/app/234630/discussions/0/613957600528900678/

Project Cars guy asks boss if they could post what he said about AMD blaming them:

"What can I say but he should take better stock of what's happening in his company. We're reaching out to AMD with all of our efforts. We've provided them 20 keys as I say. They were invited to work with us for years."

"Looking through company mails the last I can see they (AMD) talked to us was October of last year."

"We've had emails back and forth with them yesterday also. I reiterate that this is mainly a driver issue but we'll obviously do anything we can from our side."

Golly...I guess all that NV work on DirectX 11 drivers pays off. Duh. While AMD worked on mantle, they ignored DirectX. Their last WHQL driver was Dec I think? You should get the point.

There is more he said but those 3 pretty much say what the Witcher guys said. AMD's fault they didn't come to them. On the other side, Nvidia approached both. It isn't Nvidia's fault AMD doesn't have the cash (or is it willingness) to work with more people. It's AMD's fault for losing $6Billion in 12 years. If I started a business today, and in twelve years, my total profits were a NEGATIVE 6 BILLION dollars, I would not expect to be able to put out top tier products no matter what business I was in. Well DUH. Hats off to them for surviving this long under such terrible mismanagement. That tells you just how great the engineers are at AMD for actually putting out great hardware with basically no budget (negative budget that is). The PROBLEM is management. -

somebodyspecial "Some great gains we saw from an earlier driver they released have been lost in a later driver they released. So I'd say driver is where we start.

Again, if there's anything we can do we will. "

More from the steam thread. I guess this explains why toms didn't get much from the new driver. Neither did the project cars guys apparently. -

somebodyspecial http://forum.wmdportal.com/search.php?searchid=9048835

The original quotes from Ian Bell can be found on this forum, if you're a backer you probably already know about them. -

thor220

You make a lot of claims about what AMD has and hasn't done but I wonder if you have any credible sources. I've read everything that's come out in that category and even investigated a bit myself. Here's a video with someone high up in the chain talking about it:

https://www.youtube.com/watch?v=fZGV5z8YFM8

What we know right now for certain is that AMD will never be able to optimize for GameWorks games very well. Nvidia isn't AMD, they lock up their tech and hold it over everyone else's head.

I would be especially interested to get a link to where you got the info on Intel asking AMD for Mantle. Seeing as they allow Nvidia OpenCL, I highly doubt your claim has much truth to it.

FYI. Quoting the dev. that's signed up for GameWorks is like asking a husband if his wife is pretty. That isn't valid and you know it. -

somebodyspecial

http://www.pcworld.com/article/2365909/intel-approached-amd-about-access-to-mantle.html

Jeez, 3 second google. PC world lies? AMD confirmed. You can google further to find out AMD never turned it over (well heck, we all know this now right?).

""I know that Intel have approached us for access to the Mantle interfaces, et cetera," Huddy said. " And right now, we've said, give us a month or two, this is a closed beta, and we'll go into the 1.0 phase sometime this year, which is less than five months if you count forward from June. They have asked for access, and we will give it to them when we open this up, and we'll give it to anyone who wants to participate in this." "

Pretty much done with that topic correct? A simple google of "intel denied mantle"...jeez. Huddy wouldn't lie about his own company saying "not now" right? Which of course turned into NEVER. You can find that covered by many sites.

Considering AMD works fine under win10 with project cars, as the dev CORRECTLY noted, you should start at the driver end. Feel free to google how well project cars runs in win10 vs. win7/8 on AMD. OR heck go straight to the source on the dev link I gave before. As a backer you can read everything you want. :)

FYI quoting AMD for saying they can't get the job done due to Nvidia probably is exactly what you're saying to me, right? Did you expect him to say, umm, we suck so we'll never be able to optimize for gameworks? I fully expect that guy to trash it period (though he shouldn't without proof). You're acting like you can't turn gameworks off also. I didn't make claims, the DEVELOPERS did. You can't trash me for quoting them, can't trash me for quoting Intel (and AMD in this case) on Mantle etc. For someone who's done some homework, clearly you wasted your time.

https://techreport.com/news/26682/intel-asked-amd-for-mantle-api-spec

Simple Intel rebuffed mantle gets the above. What tech sites do you read?

"We have publicly asked them to share the spec with us several times as part of examination of potential ways to improve APIs and increase efficiencies. At this point though we believe that DirectX 12 and ongoing work with other industry bodies and OS vendors will address the issues that game developers have noted."

Intel spokesman, asked SEVERAL TIMES, decided DX12 the way to go due to this. So what we know is, AMD did EXACTLY what you're ACCUSING Nvidia of right? Then when it failed to gain anything meaningful and became apparent DX12/Vulkan were going to rule they were forced to give some info up...LOL. OK, AMD is free with info (yeah, only when forced by market forces just like anyone else). Your AMD trust is pretty phenomenal at this point. You're quoting the company that is complaining here? LOL.

Who allows Nvidia to use OpenCL? Khronos Group develops it and Nvidia heads Khronos. It's a standard not supported by anyone (money wise), which is why CUDA dominates after 8yrs of NV pouring money into it. I don't see your point here.

https://en.wikipedia.org/wiki/OpenCL

One more point on Huddy, he worked at Nvidia, AMD then went to Intel (no success there), now back at AMD after mass brain drain at AMD. I'm not impressed by him. Developer relations don't seem to be going too well either ;)

It would seem, according to you, we should ignore what devs say, and just claim NV is evil AMD is right especially if AMD says it. OK...No thanks. Evidence (and cash/profits etc) show that we shouldn't be surprised they can't optimize for much as they make NO money, and are already spread far too thin (hence R&D down 4yrs, while NV upped each year concentrating on less stuff). Why anyone is surprised ZERO profits (heck losses, 6Bil in 12yrs) and 1/3 people laid off, far less R&D etc=bad products and drivers, is beyond my comprehension. This should be expected. I'm shocked they're even in the gpu game still, and not shocked they're out of the cpu game for years. Hopefully ZEN is a sign they're going BACK to the correct direction: see Dirk Meyer who said we need to get CORE stuff right then go to other areas (like apu/mobile etc). IE, if you don't have a dominant product making cash, no point in trying other crap you have basically no skills and certainly no dominance in. They fired him...ROFL. Yet here comes ZEN. TrueAudio and Mantle however just wasted resources that could have been spent on CPU/GPU themselves.

AMD license TrueAudio yet? AFAIK it only works on AMD stuff right? So your only complaint here really is that AMD is losing the idea war? ;) Liquid VR vs. GeForce VR (or gameworks VR) whatever, all the same story. Everyone does this crap, and the only time I've seen ANYONE go OPEN is when they can't afford to fund CLOSED. IE, OpenCL vs. Cuda. Freesync vs. Gsync etc. You push Open when you can't force Closed and gain some traction. So you go open to hopefully stop the other guys closed :) I'll always opt for open unless it is NOT as good. When better is proprietary OPEN can kiss my butt if I can afford it...LOL. I want the best experience I can afford, no matter who makes it or how they do it.

Don't forget the gameworks stuff is currently making NV older cards suck too, but easily fixed as some have already done with config file mods etc. Dev probably needed money, so all this stuff gets fixed AFTER the fact. People might have a complaint if it ran great on 780 etc.

http://www.pcworld.com/article/2928652/amd-releases-radeon-beta-drivers-to-improve-witcher-3-project-cars-performance.html

Clearly drivers have at least SOMETHING to do with it (not to mention settings that are easily changed).

"AMD says you can expect to see up to 10 percent performance gains in The Witcher, and up to 17 percent gains in Project CARS."

It's AMD's fault they didn't have game ready drivers out (or any WHQL driver since Dec!). Project cars isn't even a gameworks title (it's just using physx).

http://www.pcworld.com/article/2925074/amd-radeon-graphics-run-witcher-3-just-fine-unless-nvidia-hairworks-is-enabled-and-thats-ok.html

Witcher 3 runs just like 980 with hairworks OFF. No surprise, and as he said no biggie, and as he notes at the time there were no witcher 3 drivers unlike NV, so to say witcher 3 wasn't optimized for AMD is a joke. Not optimized for Nvidia's own tech yet (or maybe never)? Big deal. For AMD players playing with it off is nothing, as if NV hadn't created it there would be no hair effects in it other than normal crap. So it's just a bonus for NV until (if) AMD can get drivers optimized, which really should be the case as they showed it 2+yrs ago on stage, so clearly in from the get go.

"Update: AMD's published a knowledge base article stating that Witcher 3 drivers are coming, and it also details Catalyst Control Center settings you can tweak for enhanced performance with Nvidia's HairWorks."

So already a fix, which from pics I've seen makes VERY little difference with most not even being able to tell quality was dropped on hairworks, and drivers were on the way when the article was written.

"For what it’s worth, Nvidia spokesperson Brian Burke says that Radeon hardware could've been optimized for HairWorks in a few different ways. While game developers aren’t allowed to share GameWorks source code with AMD, licensees (like CD Projekt Red) can request GameWorks code and optimize it in their games for AMD hardware. AMD could also attempt to optimize performance at a binary level, rather than a source code level, Burke said—though that’s far more difficult for AMD than dealing with direct source code. Finally, Burke says, AMD could’ve worked to get its own TressFX technology inside of The Witcher 3, much like how GTA V for PC featured proprietary shadow technology from both AMD and Nvidia.

AMD representatives didn't respond to a request for comment."

ROFL. Are we done? No comment from AMD? Well, er, uh, umm...They're right. Well you don't want to have to answer this NV response right? Sorry, so far I don't see anything but AMD+fanboys whining over nothing. Is it Nvidia's fault AMD is losing money and can't afford to approach more devs with TressFX etc? They did it for GTA V as noted (no surprise, biggest title ever right?). No surprise they can't afford to do it more, keep up drivers for every game, freesync, zen, apus, gpus etc. That's a lot of crap to keep up for a company LOSING $6Billion over 12 years (meaning NO PROFITS). I'm baffled anyone blames Nvidia for AMD's lack of results. Blame AMD for paying 3x the price for ATI and then again for exacerbating the problem by going into console instead of passing like NV who claimed it would have detracted from CORE products. YEP, we see it in AMD now. Mantle launch botched, freesync botched (pass until gen 2 when they MIGHT dictate part choices for manufacturers better), drivers botched right and left for game launches, cpu race quit (hopefully ZEN fixes this), APU race been over since it began (Intel prices them to death), no ARM cores while NV on rev7 (8 coming xmas on 14nm, counting both K1's here) etc. If you're shocked at AMD's performance in all things, you haven't been paying attention to reality, nor balance sheets/financial reports. I've been predicting this for years. It will get worse unless ZEN is a killer. AMD's new gpus shortly won't have pricing power most likely, but ZEN might.

-

thor220 Reply15975422 said:15970176 said:15968400 said:15965512 said:The problem with GameWorks is that Nvidia doesn't allow the game devs to give AMD or any other GPU vendor access to code that uses Nvidia GameWorks IP (nor give tips or hits on what that code entails, enabling to optimize it).

With the latest GameWorks title, Project cards (and yet another disaster for AMD users) one can be certain that only Nvidia users will have an enjoyable experience with any game branded with Nvidia GameWorks.

The problem with AMD's Mantle is it doesn't work with anyone else, so you should expect MANTLE AMD branded games to work best on them...LOL. Intel was turned down after looking to get hands on with Mantle code 4, count them FOUR times and gave up.

I really wish whiners would stop this crap. Also note, the recent debacle with Witcher 3 and project cars is AMD's fault for not having anything to do with them until the end. IE, AMD came to Witcher3 guys in the last two months before launch asking for TressFX to be in for launch (the devs said this!) while it was clear 2+ years ago NV's hairworks crap was being worked on IN the game as it was shown in public at a show. Why didn't AMD approach them at THAT time 2yrs ago to work with them on TressFX? Then they go around whining they were denied? Well duh, you can't approach people in the last two months of POLISHING whining about major feature support. Do that approach when it's being DEVELOPED years before. The second AMD saw the wolves on screen touting NV hair, they should have made a phone call to set up some dev time for TressFX. But they didn't. Also note how badly it affects non-maxwell cards. A simple config file change fixes it in both cases anyway or likely in a patch coming up no doubt. Clearly the major amount of time was spent on maximizing the potential of maxwell performance (duh, new gen). Patches are already adding perf to older cards and AMD is releasing drivers to supposedly fix their perf (as it should!).

Sure, if a company spends 2-3yrs working with a dev to showcase their hardware you should expect it will run great on their hardware. Both sides do it, so get over it. IF you come up with some REALLY great effects that run on your hardware excellent, that's your job and it isn't your job to share that hard work. Again, get over it.

Quit acting like a victim (AMD needs to do this too). AMD isn't a victim, unless it's by their own choice to go after consoles, mantle etc, instead of spending ALL of that money on CPU/GPU/DX Drivers. These 3 things are why they are behind in everything. I hope they fix it with ZEN, and start acting like a survivor instead of a victim (quit whining, put out better stuff that we want to buy).

https://steamcommunity.com/app/234630/discussions/0/613957600528900678/

Project Cars guy asks boss if they could post what he said about AMD blaming them:

"What can I say but he should take better stock of what's happening in his company. We're reaching out to AMD with all of our efforts. We've provided them 20 keys as I say. They were invited to work with us for years."

"Looking through company mails the last I can see they (AMD) talked to us was October of last year."

"We've had emails back and forth with them yesterday also. I reiterate that this is mainly a driver issue but we'll obviously do anything we can from our side."

Golly...I guess all that NV work on DirectX 11 drivers pays off. Duh. While AMD worked on mantle, they ignored DirectX. Their last WHQL driver was Dec I think? You should get the point.

There is more he said but those 3 pretty much say what the Witcher guys said. AMD's fault they didn't come to them. On the other side, Nvidia approached both. It isn't Nvidia's fault AMD doesn't have the cash (or is it willingness) to work with more people. It's AMD's fault for losing $6Billion in 12 years. If I started a business today, and in twelve years, my total profits were a NEGATIVE 6 BILLION dollars, I would not expect to be able to put out top tier products no matter what business I was in. Well DUH. Hats off to them for surviving this long under such terrible mismanagement. That tells you just how great the engineers are at AMD for actually putting out great hardware with basically no budget (negative budget that is). The PROBLEM is management.

You make a lot of claims about what AMD has and hasn't done but I wonder if you have any credible sources. I've read everything that's come out in that category and even investigated a bit myself. Here's a video with someone high up in the chain talking about it:

https://www.youtube.com/watch?v=fZGV5z8YFM8

What we know right now for certain is that AMD will never be able to optimize for GameWorks games very well. Nvidia isn't AMD, they lock up their tech and hold it over everyone else's head.

I would be especially interested to get a link to where you got the info on Intel asking AMD for Mantle. Seeing as they allow Nvidia OpenCL, I highly doubt your claim has much truth to it.

FYI. Quoting the dev. that's signed up for GameWorks is like asking a husband if his wife is pretty. That isn't valid and you know it.

http://www.pcworld.com/article/2365909/intel-approached-amd-about-access-to-mantle.html

Jeez, 3 second google. PC world lies? AMD confirmed. You can google further to find out AMD never turned it over (well heck, we all know this now right?).

""I know that Intel have approached us for access to the Mantle interfaces, et cetera," Huddy said. " And right now, we've said, give us a month or two, this is a closed beta, and we'll go into the 1.0 phase sometime this year, which is less than five months if you count forward from June. They have asked for access, and we will give it to them when we open this up, and we'll give it to anyone who wants to participate in this." "

Pretty much done with that topic correct? A simple google of "intel denied mantle"...jeez. Huddy wouldn't lie about his own company saying "not now" right? Which of course turned into NEVER. You can find that covered by many sites.

Considering AMD works fine under win10 with project cars, as the dev CORRECTLY noted, you should start at the driver end. Feel free to google how well project cars runs in win10 vs. win7/8 on AMD. OR heck go straight to the source on the dev link I gave before. As a backer you can read everything you want. :)

FYI quoting AMD for saying they can't get the job done due to Nvidia probably is exactly as you're saying to me right? Did you expect him to say, umm, we suck so we'll never be able to optimize for gameworks. I fully expect that guy to trash it period (though he shouldn't without proof). You're acting like you can't turn gameworks off also. I didn't make claims, the DEVELOPERS did. You can't trash me for quoting them, can't trash me for quoting Intel (and AMD in this case) on Mantle etc. For someone who has done some homework, clearly you wasted your time.

https://techreport.com/news/26682/intel-asked-amd-for-mantle-api-spec

Simple Intel rebuffed mantle gets the above. What tech sites do you read?

"We have publicly asked them to share the spec with us several times as part of examination of potential ways to improve APIs and increase efficiencies. At this point though we believe that DirectX 12 and ongoing work with other industry bodies and OS vendors will address the issues that game developers have noted."

Intel spokesman, asked SEVERAL TIMES, decided DX12 the way to go due to this. So what we know is, AMD did EXACTLY what you're ACCUSING Nvidia of right? Then when it failed to gain anything meaningful and became apparent DX12/Vulkan were going to rule they were forced to give some info up...LOL. OK, AMD is free with info (yeah, only when forced by market forces just like anyone else). Your AMD trust is pretty phenomenal at this point. You're quoting the company that is complaining here? LOL.

Who allows Nvidia to use OpenCL? Khronos Group develops it and Nvidia heads Khronos. It's a standard not supported by anyone (money wise), which is why CUDA dominates after 8yrs of NV pouring money into it. I don't see your point here.

https://en.wikipedia.org/wiki/OpenCL

One more point on Huddy, he worked at Nvidia, AMD then went to Intel (no success there), now back at AMD after mass brain drain at AMD. I'm not impressed by him. Developer relations don't seem to be going too well either ;)

It would seem, according to you, we should ignore what devs say, and just claim NV is evil AMD is right especially if AMD says it. OK...No thanks. Evidence (and cash/profits etc) show that we shouldn't be surprised they can't optimize for much as they make NO money, and are already spread far too thin (hence R&D down 4yrs, while NV upped each year concentrating on less stuff). Why anyone is surprised ZERO profits (heck losses, 6Bil in 12yrs) and 1/3 people laid off, far less R&D etc=bad products and drivers, is beyond my comprehension. This should be expected. I'm shocked they're even in the gpu game still, and not shocked they're out of the cpu game for years. Hopefully ZEN is a sign they're going BACK to the correct direction: see Dirk Meyer who said we need to get CORE stuff right then go to other areas (like apu/mobile etc). IE, if you don't have a dominant product making cash, no point in trying other crap you have basically no skills and certainly no dominance in. They fired him...ROFL. Yet here comes ZEN. TrueAudio and Mantle however just wasted resources that could have been spent on CPU/GPU themselves.

AMD license TrueAudio yet? AFAIK it only works on AMD stuff right? So your only complaint here really is that AMD is losing the idea war? ;) Liquid VR vs. GeForce VR (or gameworks VR) whatever, all the same story. Everyone does this crap, and the only time I've seen ANYONE go OPEN is when they can't afford to fund CLOSED. IE, OpenCL vs. Cuda. Freesync vs. Gsync etc. You push Open when you can't force Closed and gain some traction. So you go open to hopefully stop the other guys closed :) I'll always opt for open unless it is NOT as good. When better is proprietary OPEN can kiss my butt if I can afford it...LOL. I want the best experience I can afford, no matter who makes it or how they do it.

Don't forget the gameworks stuff is currently making NV older cards suck too, but easily fixed as some have already done with config file mods etc. Dev probably needed money, so all this stuff gets fixed AFTER the fact. People might have a complaint if it ran great on 780 etc.

http://www.pcworld.com/article/2928652/amd-releases-radeon-beta-drivers-to-improve-witcher-3-project-cars-performance.html

Clearly drivers have at least SOMETHING to do with it (not to mention settings that are easily changed).

"AMD says you can expect to see up to 10 percent performance gains in The Witcher, and up to 17 percent gains in Project CARS."

It's AMD's fault they didn't have game ready drivers out (or any WHQL driver since Dec!). Project cars isn't even a gameworks title (it's just using physx).

http://www.pcworld.com/article/2925074/amd-radeon-graphics-run-witcher-3-just-fine-unless-nvidia-hairworks-is-enabled-and-thats-ok.html

Witcher 3 runs just like 980 with hairworks OFF. No surprise, and as he said no biggie, and as he notes at the time there were no witcher 3 drivers unlike NV, so to say witcher 3 wasn't optimized for AMD is a joke. Not optimized for Nvidia's own tech yet (or maybe never)? Big deal. For AMD players playing with it off is nothing, as if NV hadn't created it there would be no hair effects in it other than normal crap. So it's just a bonus for NV until (if) AMD can get drivers optimized, which really should be the case as they showed it 2+yrs ago on stage, so clearly in from the get go.

"Update: AMD's published a knowledge base article stating that Witcher 3 drivers are coming, and it also details Catalyst Control Center settings you can tweak for enhanced performance with Nvidia's HairWorks."

So already a fix, which from pics I've seen makes VERY little difference with most not even being able to tell quality was dropped on hairworks, and drivers were on the way when the article was written.

"For what it’s worth, Nvidia spokesperson Brian Burke says that Radeon hardware could've been optimized for HairWorks in a few different ways. While game developers aren’t allowed to share GameWorks source code with AMD, licensees (like CD Projekt Red) can request GameWorks code and optimize it in their games for AMD hardware. AMD could also attempt to optimize performance at a binary level, rather than a source code level, Burke said—though that’s far more difficult for AMD than dealing with direct source code. Finally, Burke says, AMD could’ve worked to get its own TressFX technology inside of The Witcher 3, much like how GTA V for PC featured proprietary shadow technology from both AMD and Nvidia.

AMD representatives didn't respond to a request for comment."

ROFL. Are we done? No comment from AMD? Well, er, uh, umm...They're right. Well you don't want to have to answer this NV response right? Sorry, so far I don't see anything but AMD+fanboys whining over nothing. Is it Nvidia's fault AMD is losing money and can't afford to approach more devs with TressFX etc? They did it for GTA V as noted (no surprise, biggest title ever right?). No surprise they can't afford to do it more, keep up drivers for every game, freesync, zen, apus, gpus etc. That's a lot of crap to keep up for a company LOSING $6Billion over 12 years (meaning NO PROFITS). I'm baffled anyone blames Nvidia for AMD's lack of results. Blame AMD for paying 3x the price for ATI and then again for exacerbating the problem by going into console instead of passing like NV who claimed it would have detracted from CORE products. YEP, we see it in AMD now. Mantle launch botched, freesync botched (pass until gen 2 when they MIGHT dictate part choices for manufacturers better), drivers botched right and left for game launches, cpu race quit (hopefully ZEN fixes this), APU race been over since it began (Intel prices them to death), no ARM cores while NV on rev7 (8 coming xmas on 14nm, counting both K1's here) etc. If you're shocked at AMD's performance in all things, you haven't been paying attention to reality, nor balance sheets/financial reports. I've been predicting this for years. It will get worse unless ZEN is a killer. AMD's new gpus shortly won't have pricing power most likely, but ZEN might.

I read the article and at no point does AMD deny Intel. Furthermore, you stated they asked three times. The article only mentions them asking once. Seeing as Mantle is giving way to Vulkan and DX12, AMD is canceling Mantle.

"Considering AMD works fine under win10 with project cars, as the dev CORRECTLY noted, you should start at the driver end. Feel free to google how well project cars runs in win10 vs. win7/8 on AMD. OR heck go straight to the source on the dev link I gave before. As a backer you can read everything you want."

I fail to see the correlation between Project Cars working better on Windows 10 than on other versions and poor AMD optimization. Is there any way you can show the AMD drivers are specifically to blame? Otherwise, the game could simply work better on the newer OS.

"FYI quoting AMD for saying they can't get the job done due to Nvidia probably is exactly as you're saying to me right? Did you expect him to say, umm, we suck so we'll never be able to optimize for gameworks. I fully expect that guy to trash it period (though he shouldn't without proof)."

It's not just quoting AMD; Nvidia confirmed they don't allow AMD access to GameWorks IP in a response video.

"You're acting like you can't turn gameworks off also. I didn't make claims, the DEVELOPERS did. You can't trash me for quoting them, can't trash me for quoting Intel (and AMD in this case) on Mantle etc. For someone who has done some homework, clearly you wasted your time."

In many games you can't turn GameWorks off. Certain games have critical features like shaders and tessellation made using GameWorks.

"https://techreport.com/news/26682/intel-asked-amd-for-m...

Simple Intel rebuffed mantle gets the above. What tech sites do you read?"

This article refers to the same instance you linked before. Intel asked AMD publicly once for access to Mantle during its beta period and AMD replied with "when games actually ship, you'll have access". By the time that happened, DX12 was announced and we haven't heard anything else.

"It would seem, according to you, we should ignore what devs say, and just claim NV is evil AMD is right especially if AMD says it. OK...No thanks. Evidence (and cash/profits etc) show that we shouldn't be surprised they can't optimize for much as they make NO money, and are already spread far too thin (hence R&D down 4yrs, while NV upped each year concentrating on less stuff). Why anyone is surprised ZERO profits (heck losses, 6Bil in 12yrs) and 1/3 people laid off, far less R&D etc=bad products and drivers, is beyond my comprehension. This should be expected. I'm shocked they're even in the gpu game still, and not shocked they're out of the cpu game for years. Hopefully ZEN is a sign they're going BACK to the correct direction: see Dirk Meyer who said we need to get CORE stuff right then go to other areas (like apu/mobile etc). IE, if you don't have a dominant product making cash, no point in trying other crap you have basically no skills and certainly no dominance in. They fired him...ROFL. Yet here comes ZEN. TrueAudio and Mantle however just wasted resources that could have been spent on CPU/GPU themselves."

A long rant by you that shows your bias. This does nothing to refute my point that the Project Cars devs were given money and free resources by Nvidia, and are thus biased. You honestly believe they are going to bite the hand that feeds them?

"AMD license TrueAudio yet? AFAIK it only works on AMD stuff right? So your only complaint here really is that AMD is losing the idea war? ;) "

TrueAudio is a hardware solution; it cannot be licensed. A pointless argument to make. It would be like asking Nvidia to license CUDA cores.

"Liquid VR vs. GeForce VR (or gameworks VR) whatever, all the same story. Everyone does this crap, and the only time I've seen ANYONE go OPEN is when they can't afford to fund CLOSED. IE, OpenCL vs. Cuda. Freesync vs. Gsync etc. You push Open when you can't force Closed and gain some traction. So you go open to hopefully stop the other guys closed :) I'll always opt for open unless it is NOT as good. When better is proprietary OPEN can kiss my butt if I can afford it...LOL. I want the best experience I can afford, no matter who makes it or how they do it."

OpenCL - Open (made by a group of companies) vs CUDA (Nvidia only)

Freesync - open, a display standard by VESA vs GSync - Nvidia only, costs $200 extra, doesn't have as many features as Freesync

I would go on, like how AMD started HDMI too, but I'll just stick to comparing the ones you've given. More often than not, Nvidia's tech doesn't stick around because they decide to no longer drive it.

"http://www.pcworld.com/article/2928652/amd-releases-rad...

Clearly drivers have at least SOMETHING to do with it (not to mention settings that are easily changed).

"AMD says you can expect to see up to 10 percent performance gains in The Witcher, and up to 17 percent gains in Project CARS."

It's AMD's fault they didn't have game ready drivers out (or any WHQL driver since Dec!). Project cars isn't even a gameworks title (it's just using physx)."

So, because AMD is able to eke more performance out, that somehow means they have bad drivers? No, AMD wasn't able to find what Nvidia GameWorks crippled until the game released. It's a known fact that Nvidia will sacrifice some of their own performance for a large decrease in AMD's.

Another point

https://www.reddit.com/r/pcgaming/comments/366iqs/nvidia_gameworks_project_cars_and_why_we_should/

Not only is it a GameWorks title, but its GameWorks features cannot be turned off, like you so ignorantly claimed earlier.

"witcher 3 wasn't optimized for AMD is a joke"

Comprehension fail. I said Crysis 3. Stop skimming posts and actually read.

"ROFL. Are we done?"

Yes, I think we are. If you are going to respond, take time to write it, quality over quantity. A wall of text that amounts to crap doesn't do much good.

somebodyspecial said: snipped

https://techreport.com/news/26682/intel-asked-amd-for-mantle-api-spec

"Now, don't mistake Intel's interest in Mantle for a commitment to the low-level API. Here's what the company told PC World:

"At the time of the initial Mantle announcement, we were already investigating rendering overhead based on game developer feedback," an Intel spokesman said in an email. "Our hope was to build consensus on potential approaches to reduce overhead with additional data. **We have publicly asked them to share the spec with us several times** as part of examination of potential ways to improve APIs and increase efficiencies. At this point though we believe that DirectX 12 and ongoing work with other industry bodies and OS vendors will address the issues that game developers have noted.""

For the 2nd time...READ THE BOLD PART! They asked several times. You're wasting my time ignoring what I said.