Nvidia Hints at DLSS 10 Delivering Full Neural Rendering, Potentially Replacing Rasterization and Ray Tracing

The company demoed a basic neural network-rendered world back in 2018.

A future version of DLSS is likely to include full neural rendering, hinted Bryan Catanzaro, an Nvidia VP of Applied Deep Learning Research. In a roundtable discussion organized by Digital Foundry (video), several video game industry experts talked about the future of AI in the business. During the discussion, Nvidia's Catanzaro raised a few eyebrows with his willingness to predict some key features of a hypothetical "DLSS 10."

We've seen significant developments in Nvidia's DLSS technology over the years. When DLSS first launched with the RTX 20-series GPUs, many wondered about the true value of including technologies like Tensor cores in gaming GPUs. The first ray tracing games, and the first version of DLSS, were of questionable merit. However, DLSS 2.X improved the tech and made it more useful, leading to it being more widely used, and copied, first via FSR2 and later with XeSS.

DLSS 3 debuted with the RTX 40-series graphics cards, adding Frame Generation technology. With 4x upscaling and frame generation, neural rendering potentially allows a game to fully render only 1/8 (12.5%) of the displayed pixels. Most recently, DLSS 3.5 improved denoising for ray tracing games with the introduction of Ray Reconstruction technology.
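The 1/8 (12.5%) figure is the product of two factors. The sketch below is a minimal illustration, assuming that "4x upscaling" means one rendered pixel for every four output pixels (for example, 1080p rendered and output at 4K) and that frame generation inserts one generated frame for each traditionally rendered frame. The helper name `rendered_pixel_fraction` is hypothetical and not part of any Nvidia API.

```python
from fractions import Fraction


def rendered_pixel_fraction(upscale_factor: int, displayed_per_rendered: int) -> Fraction:
    """Share of displayed pixels the game fully renders (hypothetical helper).

    upscale_factor: output pixels per rendered pixel, e.g. 4 for 1080p -> 4K.
    displayed_per_rendered: displayed frames per traditionally rendered frame,
        e.g. 2 when frame generation adds one generated frame per rendered frame.
    """
    if upscale_factor < 1 or displayed_per_rendered < 1:
        raise ValueError("both factors must be at least 1")
    # Within a rendered frame, only 1 in upscale_factor output pixels is rendered.
    per_rendered_frame = Fraction(1, upscale_factor)
    # Only 1 in displayed_per_rendered displayed frames is rendered at all.
    return per_rendered_frame / displayed_per_rendered


share = rendered_pixel_fraction(upscale_factor=4, displayed_per_rendered=2)
print(share, f"{float(share):.1%}")  # 1/8 12.5%
```

With frame generation switched off (`displayed_per_rendered=1`), the same calculation gives 1/4, the share for upscaling alone.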

The above timeline raises questions about where Nvidia might go next with future versions of DLSS. And of course, the name "Deep Learning Super Sampling" no longer really fits, as the last two additions have targeted other aspects of rendering. Digital Foundry put that question to the group: "Where do you see DLSS in the future? What other problem areas could machine learning tackle in a good way?"

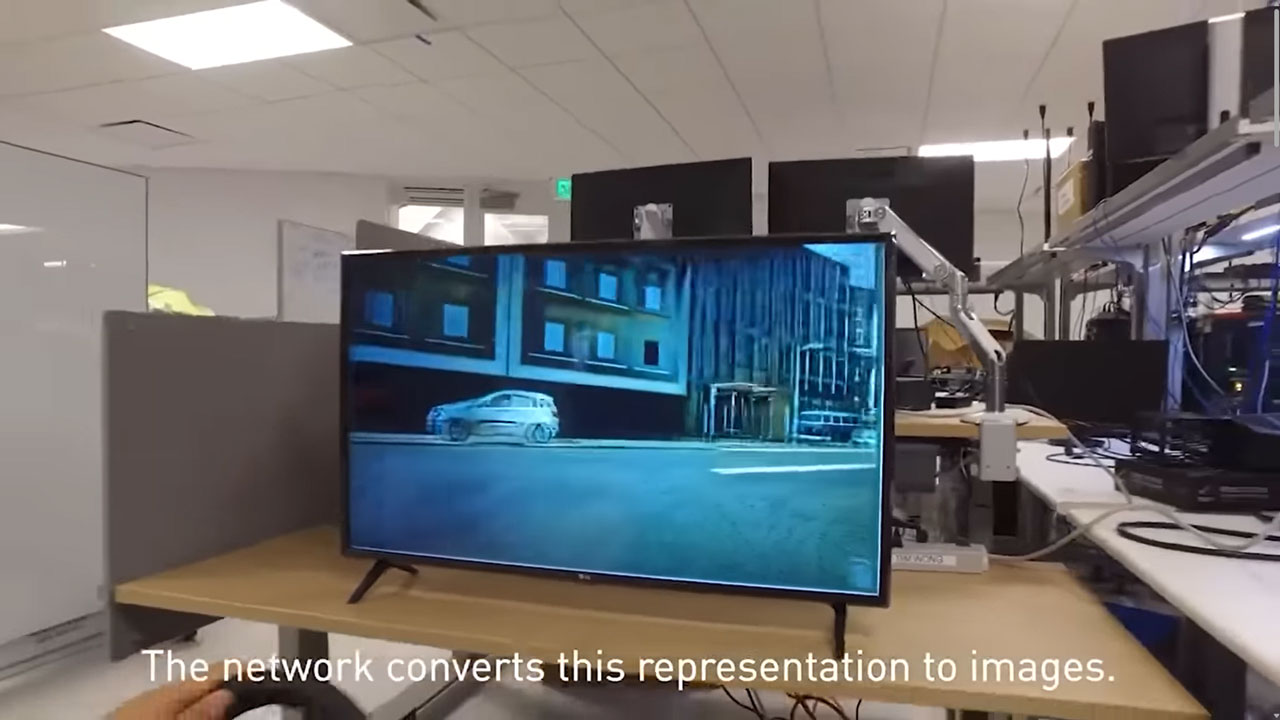

Bryan Catanzaro immediately brought up the topic of full neural rendering. This idea isn't quite as far out as it may seem. Catanzaro reminded the panel that, at the NeurIPS conference in 2018, Nvidia researchers showed an open-world demo rendered in real time by a neural network. In that demo, the UE4 game engine supplied data about which objects were in the scene, where they were, and so on, while the neural network generated all of the on-screen graphics.

The graphics back in 2018 were rather basic — “Not anything close to Cyberpunk,” admitted Catanzaro. Advances in AI image generation have been quite incredible in the interim, though. Look at the leaps in quality we've seen in AI image generators over the last year, for example.

Catanzaro suggested the 2018 demo was a peek into a major growth area of (generative) AI in gaming. “DLSS 10 (in the far far future) is going to be a completely neural rendering system,” he speculated. The result will be “more immersive and more beautiful” games than most can imagine today.

Between now and DLSS 10, Catanzaro expects a gradual process, one that is developer-controllable and coherent. Developers are already experienced with tools that let them steer their vision using traditional game engines and 2D/3D rendering tech. They need similarly fine-grained tools for generative AI, noted the Nvidia VP.

Another interesting perspective on the future of AI in gaming was provided by Jakub Knapik, VP Art and Global Art Director at CD Projekt RED. Knapik candidly admitted that Catanzaro's all-too-believable vision of generative AI graphics "scares me like crazy." However, he sounded more excited about AI enhancing not just graphics but the interactive, immersive worlds that make gaming so much fun.

Currently, 90% of a game might be prescriptive, noted the senior CDPR developer. By contrast, he pointed to Nvidia's 'Cyberpunky' demo of an AI-powered interactive NPC as a window into deeper, AI-enhanced games. Knapik went on to note that for CDPR, the story is king, and that's where he sees the best use of AI going forward.

Both Catanzaro and Knapik seemed to agree that developers need extensive control over AI to realize their creative visions. People won’t just be able to conjure up an AAA game from a chat prompt, joked the Nvidia VP. We can see it now: "/Imagine: A dystopian future first-person game with RPG elements in a violent world dominated by corporations."

Wherever we go in the interim, by the time DLSS 10 rolls out, we could see game engines wielding AI-generated visuals for better real-time graphics quality and performance, with AI-enhanced characters, stories, and environments contributing to what might become a whole new category of media.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

-

kraylbak

Maybe I went through this piece of news faster than I should have, but I don't think I read anything about potentially replacing ray tracing. Unless, of course, this kind of development is implied as a natural evolution in the process of improving graphics quality.

In any case, I very much like what Nvidia has done so far with DLSS and RT, and I'm sure it will only get better with time.

-

salgado18

kraylbak said:
>Maybe I went through this piece of news faster than I should have, but I don't think I read anything about potentially replacing ray tracing. Unless, of course, this kind of development is implied as a natural evolution in the process of improving graphics quality.
>In any case, I very much like what Nvidia has done so far with DLSS and RT, and I'm sure it will only get better with time.

Either the generated graphics don't need ray tracing, or the neural engine uses ray tracing hardware to create the graphics.

But while I agree that their advances are great, they need to contribute to the general ecosystem so all gamers can enjoy at least part of the benefits, or work with existing standards. It's no fun playing the Batman Arkham games and seeing the "Enable PhysX" toggle disabled because I don't have an Nvidia card. How would games look today if PhysX had been supported on every GPU from the start? And I don't want that for DLSS 10 or whatever.

-

The Hardcard

I think it will replace ray tracing and shaders. I see game developers providing assets, objects, and a story, and DLSS 10 generating 70 to 120 Stable Diffusion (or, more likely, more advanced AI algorithm) frames per second.

I think this is the future. I think it will look far more realistic and natural than shaders and hardware ray tracing can hope for, while needing less growth in transistor counts and power budgets to scale up performance.

Interestingly, using AI algorithms to generate the image stream will also make it easier for companies other than Nvidia, AMD, and Intel to provide gaming hardware.

-

baboma

>Maybe I went through this piece of news faster than I should have, but I don't think I read anything about potentially replacing ray tracing. Unless, of course, this kind of development is implied as a natural evolution in the process of improving graphics quality.

Yeah, note the DLSS 10. Assuming linear progress, it took ~4.5 years to go from 1.0 (Feb 2019) to 3.5 now, and another 6.5 iterations would take another ~8+ years. But progress isn't linear (for AI), so if the '10' holds true, I'd say a bit sooner than that.

Anyway, the sentiment coming from an Nvidia VP of "Applied Deep Learning Research" is just one more indication of where Nvidia is heading with its GPUs: more AI, less brute power.

>In any case, I very much like what Nvidia has done so far with DLSS and RT, and I'm sure it will only get better with time.

I'm more excited about the non-graphical possibilities of AI in games. More intelligent NPCs for starters... better combat AI... no more canned conversations... the list is endless.

-

kraylbak

salgado18 said:
>But while I agree that their advances are great, they need to contribute to the general ecosystem so all gamers can enjoy at least part of the benefits, or work with existing standards.

That would be nice, if DLSS weren't one of those features that give Nvidia an edge over its competitors.

We all want this to happen, but, unfortunately, it's not a reasonable thing to expect.

-

Murissokah

At that point, wouldn't it be inaccurate to call it DLSS if it's a rendering engine? (Maybe DLR?)

I hope they get it stable enough before trying to ship it as such; otherwise we'll get a bunch of psychedelic releases full of AI hallucinations.

-

TechyInAZ

kraylbak said:
>Maybe I went through this piece of news faster than I should have, but I don't think I read anything about potentially replacing ray tracing. Unless, of course, this kind of development is implied as a natural evolution in the process of improving graphics quality.
>In any case, I very much like what Nvidia has done so far with DLSS and RT, and I'm sure it will only get better with time.

They implied that subtly. If you create an image rendered completely by AI, the AI will replicate what path tracing does naturally and render the image to mimic path-traced/ray-traced images.

Of course, you could have the AI reference an actual image rendered with real path tracing/ray tracing, but that's not very efficient when the AI can be taught to mimic RT on its own.

Ray Reconstruction in a way already does this...

-

edzieba

kraylbak said:
>That would be nice, if DLSS weren't one of those features that give Nvidia an edge over its competitors.
>We all want this to happen, but, unfortunately, it's not a reasonable thing to expect.

Like most things Nvidia are the first mover on and invest heavily in, it will inevitably roll out elsewhere as that knowledge becomes public and demand is demonstrated: GPGPU (CUDA), variable monitor refresh (G-Sync), etc. It may take time, but even now, after dragging their feet for a while, AMD are starting to implement hardware RT acceleration and deep-learning-based resampling, just as they followed suit on GPGPU and VFR.

-

hotaru.hino

If such tech aims to replace rendering, then it had better have a path to provide backwards compatibility.

It was a thing on my mind when ray tracing finally hit the scene. It only gets better the more resources you throw at it, but we may hit a point where so many resources go to ray tracing that rasterization performance stops improving.