Intel will retire rarely-used 16x MSAA support on Xe3 GPUs — AI upscalers like XeSS, FSR, and DLSS provide better, more efficient results

AI methods are taking over.

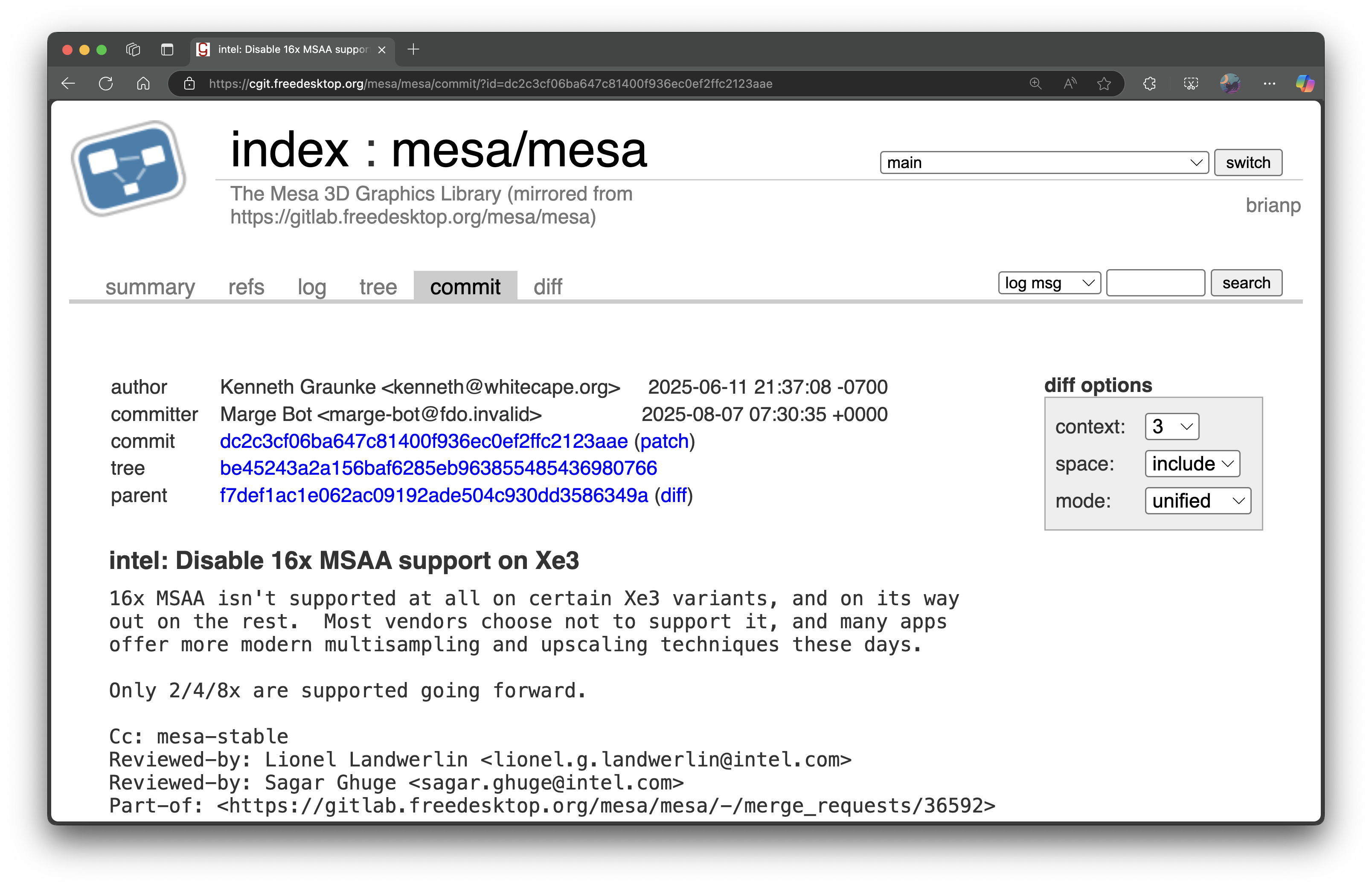

Intel has begun phasing out 16x MSAA support in its upcoming Xe3 graphics. As revealed by engineer Kenneth Graunke in a recent Mesa driver commit, “16x MSAA isn’t supported at all on certain Xe3 variants, and on its way out on the rest. Most vendors choose not to support it, and many apps offer more modern multisampling and upscaling techniques these days. Only 2/4/8x are supported going forward.” The change has already landed in the Mesa 25.3-devel branch and is being back-ported to the earlier 25.1 and 25.2 releases.

This marks a clear shift away from brute-force anti-aliasing toward AI-accelerated upscaling and smarter sampling. Multi-sample anti-aliasing like 16x MSAA once offered clean edges by sampling geometry multiple times per pixel, but at a steep performance cost.
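To put a rough number on that cost, here is a back-of-the-envelope sketch of how an uncompressed multisampled render target grows with the sample count. The 4 bytes of color and 4 bytes of depth/stencil per sample are illustrative assumptions, and the function name is hypothetical; real drivers apply compression, so actual footprints will differ.

```python
def msaa_target_bytes(width, height, samples, color_bytes=4, depth_bytes=4):
    """Uncompressed size of one multisampled color + depth target.

    Assumption: every pixel stores `samples` color values and `samples`
    depth values, ignoring any driver-side compression.
    """
    return width * height * samples * (color_bytes + depth_bytes)


if __name__ == "__main__":
    # Compare the sample counts discussed in the article at 1080p.
    for samples in (1, 2, 4, 8, 16):
        mib = msaa_target_bytes(1920, 1080, samples) / (1024 * 1024)
        print(f"{samples:>2}x at 1920x1080: {mib:7.1f} MiB")
```

Under these assumptions the storage scales linearly with the sample count, so a 16x target needs sixteen times the memory of a single-sampled one before any bandwidth cost is counted.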

Even at lower tiers, MSAA can be demanding, especially on complex scenes, and it fails to smooth transparencies or shader artifacts well. It was once the king of its domain, but that era is long gone: it hasn't been broadly useful in about a decade, and the 16x flavor was impractical to begin with.

Today, modern techniques like XeSS, AMD’s FSR, and Nvidia’s DLSS provide anti-aliasing plus resolution (up)scaling and image reconstruction, often outperforming traditional MSAA with much less GPU strain, not to mention frame generation capabilities. These upscalers can all be run in native AA mode to improve image quality and can outperform temporal AA methods like TAA in reducing flicker and preserving detail.

MSAA works by taking extra coverage samples per pixel along geometry edges, and with the contemporary reliance on upscaling tech, we've stopped chasing the higher internal rendering resolutions that MSAA would otherwise need to work effectively. AI-based temporal solutions simply do it all, and they continue to get the most community support as well.

Intel’s XeSS (Xe Super Sampling), while primarily intended for upscaling and frame generation, also delivers modern anti-aliasing capabilities across the frame. Its latest SDK supports not only Intel’s own GPUs but also works on Nvidia and AMD hardware—giving developers a vendor-agnostic path to integrate advanced sampling and latency optimizations.

So why drop 16x MSAA? Intel, like other vendors, recognizes the diminishing returns of ultra-heavy MSAA combined with the rising quality and efficiency of temporal and AI-powered alternatives. Game engines, especially those relying on deferred rendering, often disable higher MSAA levels altogether. Community feedback echoes this; users report that while MSAA used to be “great,” newer techniques provide better visuals with smoother performance.


Comment from r/pcgaming

By limiting supported MSAA to 2x, 4x, and 8x going forward, Intel simplifies driver maintenance and encourages developers to adopt modern upscaling pipelines. For Linux gamers and developers working with Iris Gallium3D or Vulkan (ANV), it’s a signal that if you want high-quality anti-aliasing, it’s time to lean on XeSS, FSR, or DLSS, or on vendor-neutral techniques like TAA and smart post-processing, rather than brute-force multisampling.
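For application code, the practical takeaway is to query which sample counts the driver reports rather than hard-coding 16x. The following is a minimal sketch of that fallback logic, assuming a Vulkan-style bitmask in which the flag for N samples has the numeric value N (as with `VkSampleCountFlagBits`). The function name and the example masks are hypothetical; a real application would read the mask from the device limits instead.

```python
def pick_sample_count(supported_mask, requested):
    """Return the largest supported power-of-two sample count <= requested.

    `supported_mask` mimics a Vulkan VkSampleCountFlags value, where the
    bit for N samples has the numeric value N (1, 2, 4, 8, 16, ...).
    Falls back to 1 (no multisampling) if nothing else matches.
    """
    # Largest power of two that does not exceed the request.
    count = 1
    while count * 2 <= requested:
        count *= 2
    # Step down until the mask reports support for this count.
    while count > 1 and not (supported_mask & count):
        count //= 2
    return count


if __name__ == "__main__":
    mask_without_16x = 0b01111  # hypothetical device: 1x, 2x, 4x, 8x
    mask_with_16x = 0b11111     # hypothetical device: 1x through 16x
    print(pick_sample_count(mask_without_16x, 16))
    print(pick_sample_count(mask_with_16x, 16))
```

With this kind of fallback, an application that asks for 16x degrades to 8x on hardware that no longer exposes the higher count instead of failing to create its render targets.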

In the context of Xe3’s launch — likely paired with the Panther Lake CPU family — this rollback of legacy MSAA echoes broader industry trends: more AI, less brute-force. It may prompt engine architects to optimize around hybrid AA strategies (e.g., XeSS SR + TAA), focus on motion clarity, and preserve performance headroom for real-time ray tracing or VR workloads. This could serve as a turning point in how Linux graphics drivers treat image quality, hinting that the future of anti-aliasing lies in smarter, more adaptive methods, not higher sample counts.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


coolitic I don't know why we care about Redditor opinions for news articles, but, the lack of MSAA in most games has little to do w/ the performance-cost (it's not that much more expensive than the more complicated temporal techniques), but rather mainly to do w/ its incompatibility w/ deferred shading techniques, which have dominated the games industry since like 2010.

The "incompatible w/ transparency" isn't strictly true either (but there are certainly complications), and it's actually quite amusing/ironic considering that deferred-shading itself pairs quite poorly w/ transparency, in a way that's (imo) significantly worse in terms of performance and/or appearance, versus a simple absence of MSAA ("absent" meaning strictly in the transparent portions).

As an aside: the reason deferred-shading had become dominant at that time is because it allowed for negligible per-light cost for dynamic lighting (at least, for shadow-less lights), versus traditional forward-shading which scales quite poorly w/ dynamic light-count (4-ish being the conventional peak).

However, "clustered-forward" shading catches up to deferred-shading w/ regards to dynamic-light cost, and doesn't suffer from many of the same limitations/difficulties that deferred does (ie. transparency, MSAA). The two main reasons (that I see) for clustered-forward not being widely-used is 1. complacency/tech-lag and 2. many screen-space techniques make use of the G-buffer that deferred provides.

applito1994

This is a solid comment that explains things better than the article.

IinLight

While UE supports forward+, I believe Unreal implements "clustered deferred" shading as its default renderer, thus harvesting the benefits of being disaggregated while keeping the G-buffer available.