Oculus's 'Half-Dome' Headset Offers Wide Field Of View, Better Close-Ups

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

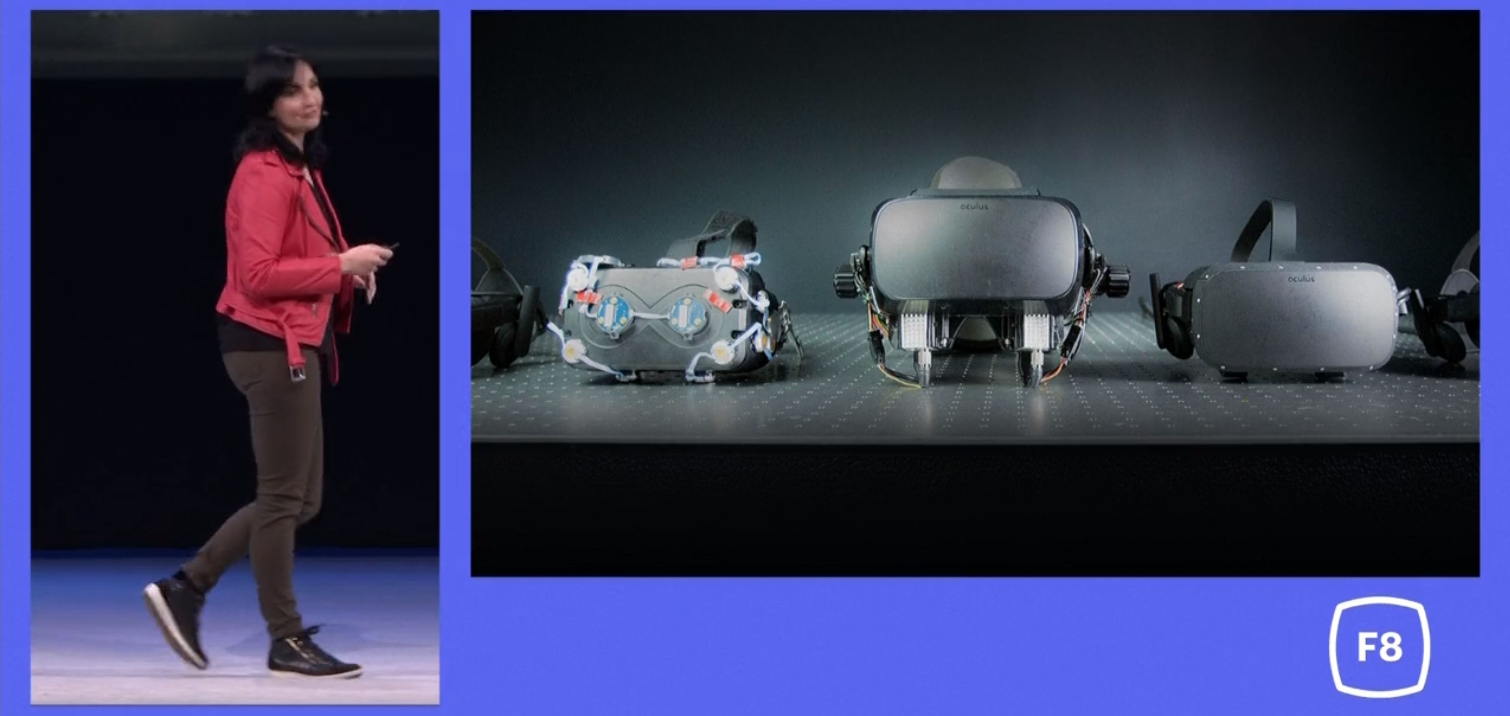

The next Oculus headset could give you a whole lot more to look at. Today, at Facebook's F8 conference, Oculus Head of Project Management Maria Fernandez Guajardo unveiled "Half-Dome," a new prototype headset with a wider field of view and improved visibility for objects that are close to your face. She also revealed that Oculus is developing advanced hand tracking capabilities so you can use your real fingers in the virtual world.

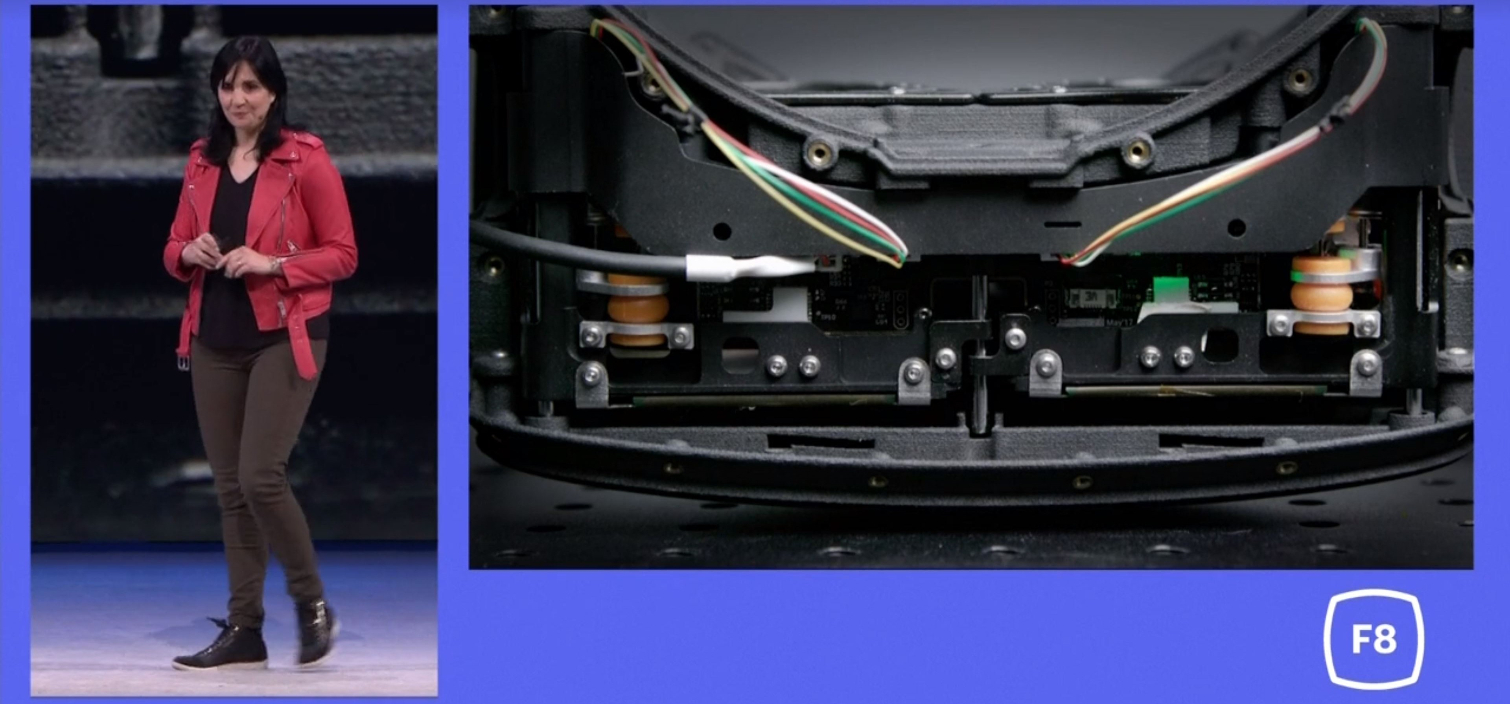

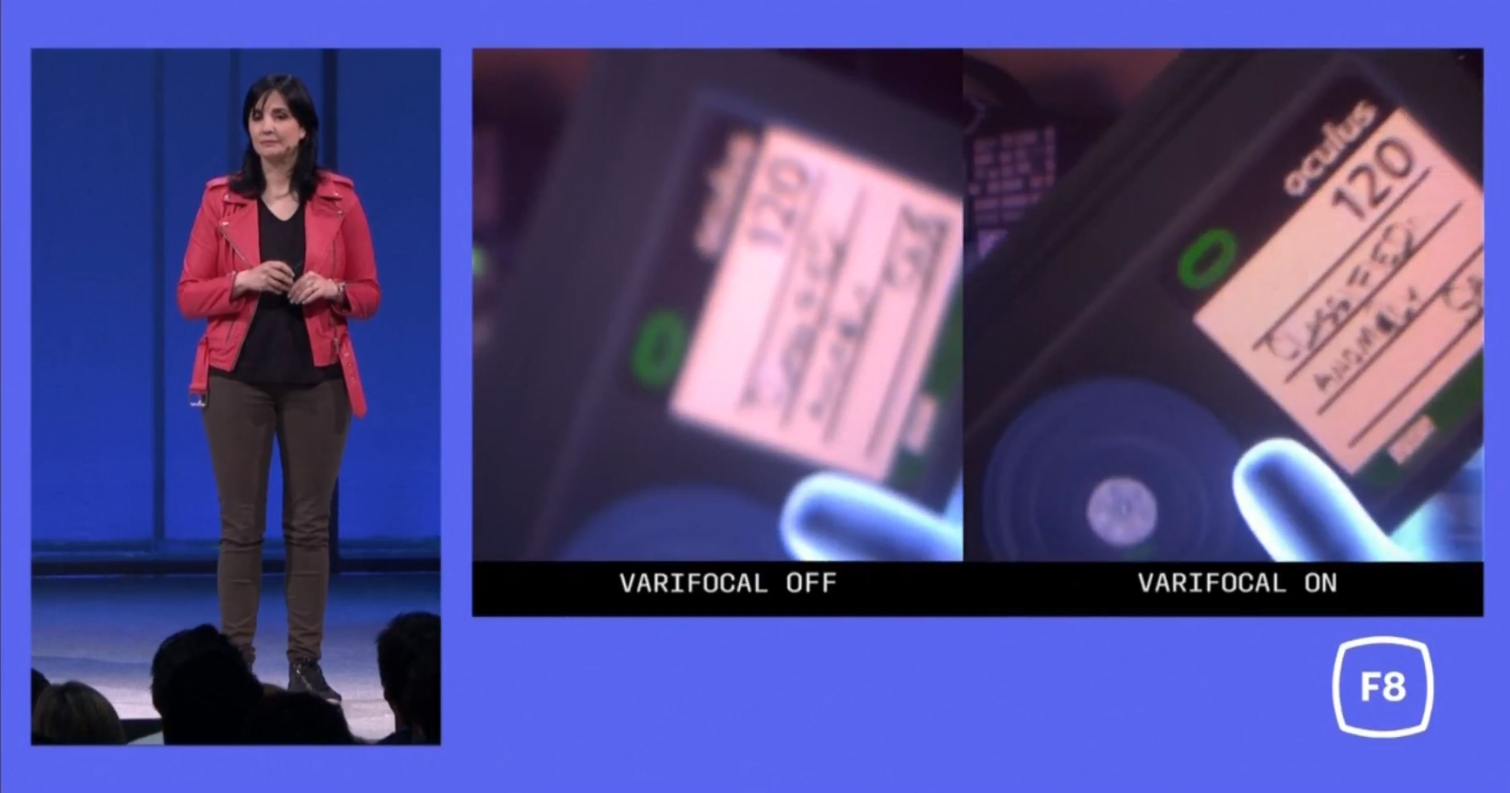

Ever try reading a notepad in VR? Current headsets have fixed focal distances, which work well for viewing things a few feet away from you but not so well for objects right in front of your face. The company's new Varifocal technology moves the internal displays closer to or farther from the lenses based on the content.
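The optics behind moving the displays can be approximated with the thin-lens equation. A headset lens acts as a magnifier: with the display at or just inside the focal length, the eye sees a virtual image farther away. The sketch below assumes an idealized thin lens with a hypothetical 40 mm focal length (not a published Half-Dome figure) and computes how far the display must sit from the lens to place the virtual image at a given distance.

```python
import math


def display_distance_mm(focal_length_mm: float, image_distance_mm: float) -> float:
    """Display-to-lens separation that places the virtual image at image_distance_mm.

    Idealized thin-lens magnifier: 1/f = 1/d_o - 1/D, where d_o is the display
    distance and D is the (positive) distance of the virtual image from the lens.
    Solving gives d_o = f * D / (f + D); an image at infinity needs d_o = f.
    """
    if math.isinf(image_distance_mm):
        return focal_length_mm
    return focal_length_mm * image_distance_mm / (focal_length_mm + image_distance_mm)


if __name__ == "__main__":
    f = 40.0  # hypothetical focal length in mm, chosen only for illustration
    for target in (250.0, 500.0, 2000.0, math.inf):
        d = display_distance_mm(f, target)
        print(f"virtual image at {target} mm -> display {d:.2f} mm from lens")
```

With these assumed numbers, pulling the virtual image in from infinity to 25 cm means shifting the display only about 5.5 mm toward the lens, which is why such a mechanism does not necessarily need much travel.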

Guajardo’s team also managed to increase the headset's field of view to 140 degrees, roughly a 40-degree improvement over the Rift. And Oculus’s engineers managed to squeeze these improvements into a headset with the same form factor and weight as the Rift.
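A wider field of view spreads the same pixels over more degrees, so a rough pixels-per-degree estimate shows what the extra 40 degrees costs. This sketch assumes the Rift's 1080 horizontal pixels per eye, a Rift field of view of about 100 degrees (inferred from the article's 40-degree comparison), treats both figures as horizontal, and spreads pixels evenly across the view, which real headset lenses do not; treat the results as ballpark numbers.

```python
def pixels_per_degree(horizontal_pixels: int, fov_degrees: float) -> float:
    """Average horizontal pixel density, assuming pixels are spread evenly across the FOV."""
    return horizontal_pixels / fov_degrees


def pixels_needed(target_ppd: float, fov_degrees: float) -> int:
    """Horizontal pixels per eye needed to keep target_ppd across fov_degrees."""
    return round(target_ppd * fov_degrees)


if __name__ == "__main__":
    rift_px = 1080  # Rift horizontal resolution per eye
    rift_fov = 100  # assumed: roughly 140 degrees minus the ~40-degree gain
    wide_fov = 140  # Half-Dome figure from the article

    rift_ppd = pixels_per_degree(rift_px, rift_fov)
    print(f"Rift panel over {rift_fov} deg: {rift_ppd:.1f} px/deg")
    print(f"Same panel over {wide_fov} deg: {pixels_per_degree(rift_px, wide_fov):.1f} px/deg")
    print(f"Pixels needed at {wide_fov} deg to match: {pixels_needed(rift_ppd, wide_fov)}")
```

Under these assumptions, the same panel drops from about 10.8 to 7.7 pixels per degree, and matching the Rift's density across 140 degrees would take roughly 1,512 horizontal pixels per eye.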

In addition to the increased field of view and adjustable focal distance, the new headset features motion-capture cameras on the front to track hand and finger movement. Guajardo said that Oculus is “investing in new AI technology” to help bring your hands into VR in a more convincing way.

Today, the Oculus Touch controllers allow hand interaction in VR, but Oculus wants to bring your real hands into VR. For several years, Leap Motion has been leading the charge in finger and hand tracking, but Oculus’s new solution could surpass the capability of Leap Motion’s current tech for complex VR interactions. Oculus developed a motion capture technique called Deep Marker Labeling, which uses machine learning to interpret hand gestures as tracking markers.

Oculus also uses the front-mounted cameras for 3D capture of the environment around you. The headset feeds the camera data through computer vision algorithms that can recreate a 3D rendering of the real world around you with stunning accuracy. If Oculus’s demonstration is to be believed, the company’s room-scanning technology is more advanced than what you'll find on Microsoft’s HoloLens.

Oculus didn’t say when we would see a product with the advanced cameras and focal system. We hope to see these innovations in Oculus’s upcoming Santa Cruz standalone VR headset. However, if we had to bet, we’d say the 140-degree FOV will be reserved for the next desktop-connected Oculus headset, whenever that may materialize.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

-

bit_user The varifocal lens sounds potentially bulky, noisy, slow, fragile, and frustrating (e.g. if it focuses on a bush in front of you that you're trying to look through to see the baddies on the other side). Magic Leap's approach is more brute-force, but really so much more elegant.

On the other hand, I'm all for room scanning. Definitely hope we see that in Santa Cruz. Makes much more sense in an untethered HMD, although it would probably burn a lot of battery power.

The extra FoV sounds nice, but not sure that's the way I'd want to spend so much additional resolution. If they have a good foveated rendering approach (i.e. better than the static one in Oculus Go) that can deliver a "free" resolution bump to accommodate, then perhaps. -

milkod2001 3D TVs have failed because people refused to wear literally paper-weight glasses. VR headsets will never get down to the size and weight of those glasses. Even if they do, VR will fail for the very same reasons. Wonder why they still push for this already dead tech. Hoping for miracles? -

Sakkura

20936748 said: The varifocal lens sounds potentially bulky, noisy, slow, fragile, and frustrating (e.g. if it focuses on a bush in front of you that you're trying to look through to see the baddies on the other side). Magic Leap's approach is more brute-force, but really so much more elegant.

On the other hand, I'm all for room scanning. Definitely hope we see that in Santa Cruz. Makes much more sense in an untethered HMD, although it would probably burn a lot of battery power.

The extra FoV sounds nice, but not sure that's the way I'd want to spend so much additional resolution. If they have a good foveated rendering approach (i.e. better than the static one in Oculus Go) that can deliver a "free" resolution bump to accommodate, then perhaps.

The new lens system fits in the same space as the old one, so the bulk isn't a major issue. But there are definitely other questions to answer. I still think it's worth investigating whether the upsides can outweigh the downsides.

Magic Leap is not comparable at all; they're doing AR, and with a vastly inferior FOV. And yes, FOV is quite important for immersion. We do need to get to higher FOV for next-gen devices.

20937328 said: 3D TVs have failed because people refused to wear literally paper-weight glasses. VR headsets will never get down to the size and weight of those glasses. Even if they do, VR will fail for the very same reasons. Wonder why they still push for this already dead tech. Hoping for miracles?

VR and 3D are completely different things. Equating them just shows you have no idea what you're talking about. -

McWhiskey Does this incorporate eye tracking at all? IMO, using the eye instead of content would be a far superior approach. The mechanism would have to be lightning quick (maybe unrealistically so). But being able to choose what you want to focus on and have that adjusted on the fly would be insanely better than being forced into being nearsighted or farsighted.

This would also give the added bonus of requiring fewer resources, since the periphery could be filled with low-res content. -
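The resource savings described in the comment above can be estimated with a crude two-zone foveated rendering model. Everything here is an illustrative assumption rather than a description of any shipping headset: the view is treated as a square, a 15-degree-radius circle around the gaze point is shaded at full resolution, and the periphery is shaded at half resolution per axis.

```python
import math


def foveated_cost_fraction(fov_deg: float, fovea_radius_deg: float, periphery_scale: float) -> float:
    """Fraction of full-resolution shading work under a simple two-zone model.

    Hypothetical model for illustration:
    - the view is a square fov_deg x fov_deg region (measured in degrees),
    - a circle of radius fovea_radius_deg around the gaze point is shaded at full resolution,
    - the rest is shaded at periphery_scale resolution per axis, so its pixel
      count shrinks by periphery_scale ** 2.
    """
    total_area = fov_deg ** 2
    fovea_area = min(math.pi * fovea_radius_deg ** 2, total_area)
    periphery_area = total_area - fovea_area
    return (fovea_area + periphery_area * periphery_scale ** 2) / total_area


if __name__ == "__main__":
    for fov in (100.0, 140.0):
        frac = foveated_cost_fraction(fov, fovea_radius_deg=15.0, periphery_scale=0.5)
        print(f"{fov:.0f} deg FOV: {frac:.0%} of full-resolution shading")
```

In this model, a 100-degree view needs about 30% of the full-resolution shading work and a 140-degree view about 28%, since the fixed full-resolution circle covers a smaller share of the wider view.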

bit_user

20937328 said: 3D TVs have failed because people refused to wear literally paper-weight glasses. VR headsets will never get down to the size and weight of those glasses. Even if they do, VR will fail for the very same reasons. Wonder why they still push for this already dead tech. Hoping for miracles?

Someone apparently doesn't understand the concept of cost vs. benefit.

Besides, 3D TV is a bad example, since it has other compromises and doesn't add much to the experience. I have several dozen 3D Blu-rays, and even I can't be particularly bothered about whether or not I can see a movie in 3D. It's nice, but if it's a good movie I tend to forget about the 3D. If it's a bad movie, the 3D doesn't usually redeem the experience. In contrast, playing a VR vs. non-VR game is fundamentally different.

That said, I've heard good things about watching 3D Blu-rays in VR. Maybe it makes the experience more worthwhile, even though you can still see it from only one angle. -

bit_user

20938102 said: Does this incorporate eye tracking at all? IMO, using the eye instead of content would be a far superior approach. The mechanism would have to be lightning quick (maybe unrealistically so). But being able to choose what you want to focus on and have that adjusted on the fly would be insanely better than being forced into being nearsighted or farsighted.

That's how I'm imagining it works.

But see my point about looking through virtual bushes or a virtual window: unless the eye tracking can read the shape of your eye's lens, it won't always correctly guess what you're trying to focus on. -

237841209 It's up to the software developer who creates the 'bush' to make it work well with the technology. That's how it's always been. -

bit_user

20940531 said: It's up to the software developer who creates the 'bush' to make it work well with the technology. That's how it's always been.

But you're assuming the content is custom-made for a device with the varifocal lenses. If you consider Oculus Go, it can run stuff made for Gear VR. Presumably, this HMD would be running stuff made for Go or Rift, etc. So it would need to work well without developer customization and tuning.

I'm just saying it feels a bit gimmicky to me. And how often would it have to focus on the wrong thing or "hunt" before users get frustrated and turn it off? A feature like this needs to be rock-solid. -

cryoburner

20936748 said: The varifocal lens sounds potentially bulky, noisy, slow, fragile, and frustrating (e.g. if it focuses on a bush in front of you that you're trying to look through to see the baddies on the other side). Magic Leap's approach is more brute-force, but really so much more elegant.

...

The extra FoV sounds nice, but not sure that's the way I'd want to spend so much additional resolution. If they have a good foveated rendering approach (i.e. better than the static one in Oculus Go) that can deliver a "free" resolution bump to accommodate, then perhaps.

Actually, since you would need eye tracking to enable proper foveated rendering, that could also likely be used in conjunction with the motorized lenses for more accurate focusing. If the eye tracking is suitably accurate, it should be able to detect your eyes crossing slightly, as happens when viewing something up close, and based on their positions, triangulate the approximate distance that they would naturally be focusing at. The lenses could in turn move to the appropriate position to allow the viewer to focus on things more naturally. To look right, this would likely also need to be combined with simulated depth of field on the software side of things, since the lenses would be adjusting the focus of the entire screen at once. The lenses being able to focus might also eliminate the need for many eyeglass wearers to wear glasses inside the headset, since a simple calibration could keep the screens within their focal range.

Such a focusing system might not necessarily have issues like being noisy or slow either. Depending on how the lenses are designed, they might not need to move very far, or be particularly heavy, and unlike most cameras with autofocus, the headset should have better data to work with to know where the lenses should be positioned, so that they can instantly move there.
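The triangulation idea in this comment can be made concrete with basic geometry. Assuming the viewer fixates a point straight ahead, each eye rotates inward by half the total vergence angle, so the fixation distance is half the interpupillary distance (IPD) divided by the tangent of that half-angle. The 63 mm IPD below is a typical adult value chosen for illustration, and this is only a sketch of the geometry, not Oculus's method.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Total angle between the two gaze rays when fixating straight ahead at distance_m."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def fixation_distance_m(ipd_m: float, vergence_deg: float) -> float:
    """Invert vergence_angle_deg: estimate how far away the eyes are converging."""
    if vergence_deg <= 0:
        return math.inf  # parallel (or diverging) gaze: treat as optical infinity
    return (ipd_m / 2) / math.tan(math.radians(vergence_deg) / 2)


if __name__ == "__main__":
    ipd = 0.063  # assumed typical adult IPD in meters
    for d in (0.25, 0.5, 2.0, 10.0):
        angle = vergence_angle_deg(ipd, d)
        recovered = fixation_distance_m(ipd, angle)
        print(f"{d} m -> vergence {angle:.2f} deg -> recovered {recovered:.3f} m")
```

Under these assumptions, the total vergence angle is about 14.4 degrees at 25 cm but only about 1.8 degrees at 2 m, so everything from 2 m out to infinity falls within less than 2 degrees of eye rotation. That suggests eye-tracking error would matter most when judging distant targets, while the close-up notepad case is comparatively easy to detect.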