Researchers Use Phase-Functioned Neural Networks For Character Control


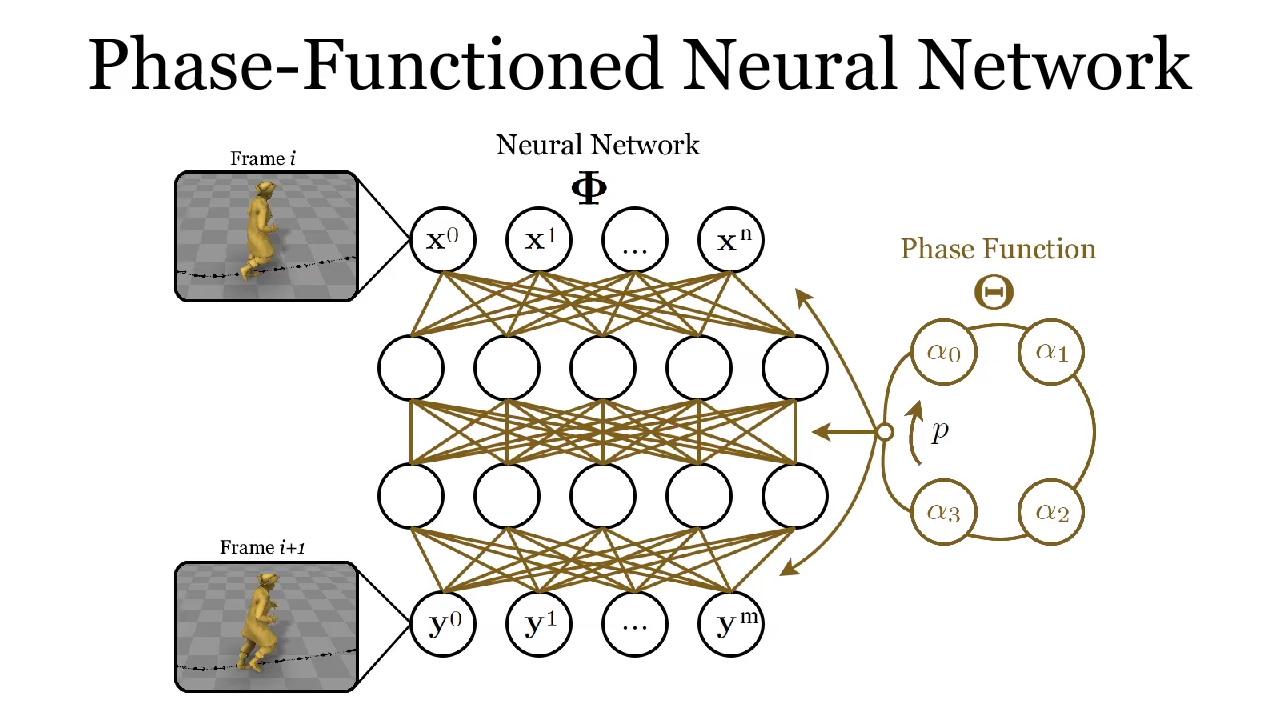

Researchers from the University of Edinburgh have developed a learning framework called a Phase-Functioned Neural Network (PFNN) that applies machine learning to character animation and other applications. Daniel Holden, a researcher at Ubisoft Montreal and the lead on this project, described PFNN as:

"A learning framework that is suitable for generating cyclic behavior such as human locomotion. We also design the input and output parameters of the network for real-time data-driven character control in complex environments with detailed user interaction. Despite its compact structure, the network can learn from a large, high dimensional dataset thanks to a phase function that varies smoothly over time to produce a large variation of network configurations. We also propose a framework to produce additional data for training the PFNN where the human locomotion and the environmental geometry are coupled. Once trained our system is fast, requires little memory, and produces high quality motion without exhibiting any of the common artefacts found in existing methods."

Holden went on to say that, once trained, PFNN is extremely fast and compact, requiring only milliseconds of execution time and a few megabytes of memory, even when trained on gigabytes of motion data.
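To make the idea of a phase function that "varies smoothly over time to produce a large variation of network configurations" more concrete, here is a minimal sketch. It assumes the network weights are blended from a small set of stored weight vectors using a cyclic Catmull-Rom spline. That choice of spline, the number of control points, and the names `catmull_rom` and `phase_weights` are illustrative assumptions, not details given in this article.

```python
import math


def catmull_rom(y0, y1, y2, y3, mu):
    """Cubic Catmull-Rom interpolation between y1 and y2 for mu in [0, 1]."""
    return (
        (-0.5 * y0 + 1.5 * y1 - 1.5 * y2 + 0.5 * y3) * mu ** 3
        + (y0 - 2.5 * y1 + 2.0 * y2 - 0.5 * y3) * mu ** 2
        + (-0.5 * y0 + 0.5 * y2) * mu
        + y1
    )


def phase_weights(control_points, phase):
    """Blend K stored weight vectors cyclically for a phase in radians.

    control_points is a list of K equally long weight vectors (hypothetical
    stand-ins for full network weight sets). The result changes smoothly as
    the phase advances and repeats every 2*pi.
    """
    k = len(control_points)
    # Map the phase onto a position along the cycle, in [0, k).
    t = (phase % (2 * math.pi)) / (2 * math.pi) * k
    i = int(t) % k
    mu = t - int(t)
    # Pick the four neighbouring control points, wrapping around the cycle.
    p0, p1, p2, p3 = (control_points[(i + d) % k] for d in (-1, 0, 1, 2))
    return [catmull_rom(a, b, c, d, mu) for a, b, c, d in zip(p0, p1, p2, p3)]


# Usage: four tiny two-element "weight sets" sampled at several phases.
control_points = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]
for step in range(8):
    phase = step * math.pi / 4
    print(round(phase, 3), phase_weights(control_points, phase))
```

Only the control points need to be stored, which is one way a network of this kind could stay compact while still producing a different weight configuration at every point in the motion cycle.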

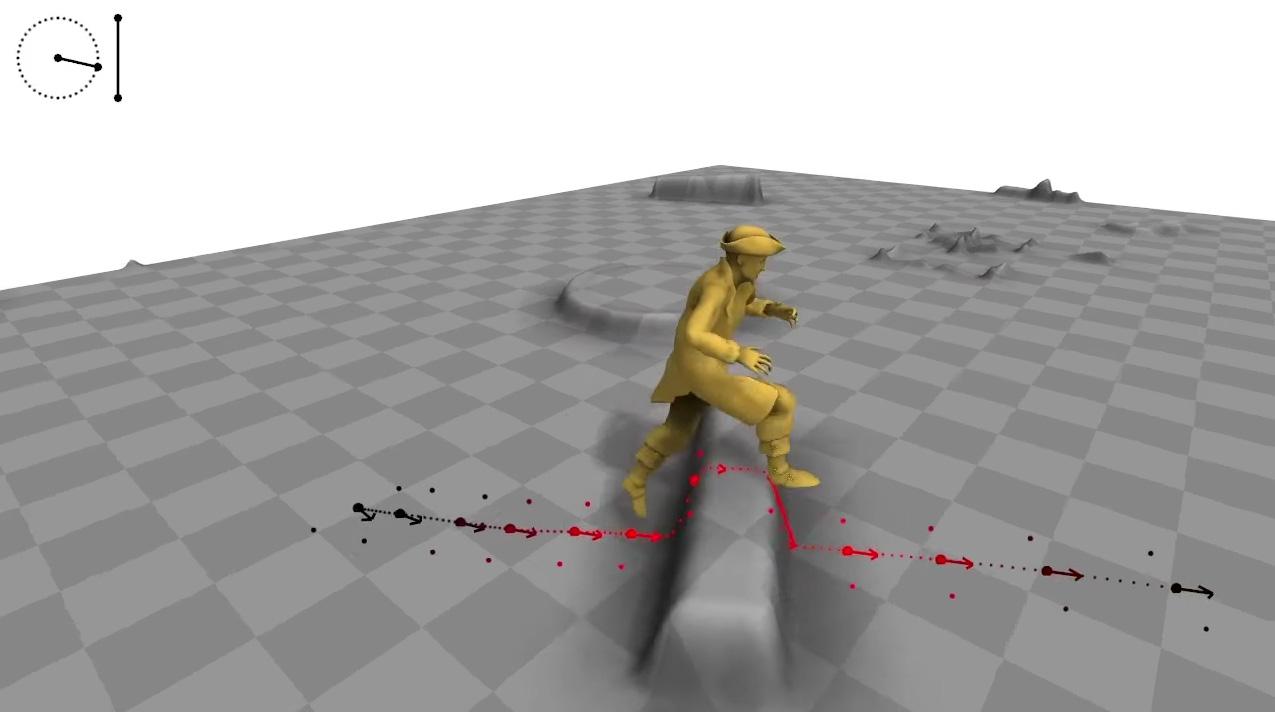

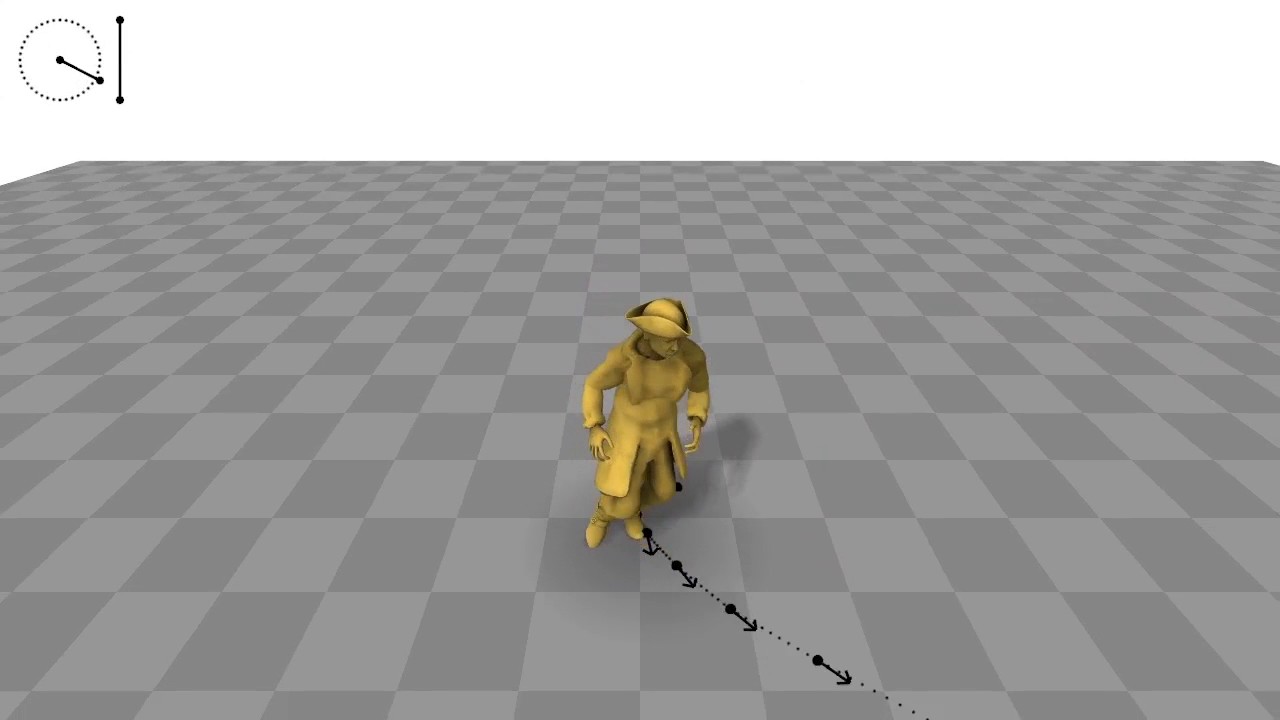

PFNN is trained in an end-to-end fashion on a large data set composed of movements such as walking, running, jumping, and climbing fitted into virtual environments. The system is able to automatically produce motions in which the character adapts to the geometry of its environment, such as walking and running over rough terrain, climbing over large rocks, jumping over obstacles, and crouching under low ceilings.

There are three stages to the Phase-Functioned Neural Network system: the preprocessing stage, the training stage, and the run time stage. During the preprocessing stage, the training data is prepared and the control parameters that will later be supplied by the user are automatically extracted from it. This process includes fitting terrain data to the captured motion data using a separate database of heightmaps. During the training stage, the PFNN is trained on this data so that it produces the motion of the character in each frame given the control parameters. During the run time stage, the input parameters to the PFNN are collected from the user input as well as the environment, and fed into the system to determine the motion of the character.
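The run time stage described above can be sketched as a per-frame loop. This is only an illustration under stated assumptions: the function names, the flat list layout of the inputs, and the idea that the network also returns a phase increment are hypothetical, and `dummy_network` is a stand-in for a trained PFNN rather than a real model.

```python
import math


def build_input(user_control, previous_pose, terrain_heights):
    """Concatenate user input, previous character state, and sampled terrain."""
    return list(user_control) + list(previous_pose) + list(terrain_heights)


def dummy_network(x, phase):
    """Hypothetical placeholder for a trained PFNN.

    Returns a three-value pose and a fixed phase increment so that the loop
    below can run; a real network would compute both from x and the phase.
    """
    return [0.0, 0.0, 0.0], 0.1


def run_frame(network, phase, user_control, previous_pose, terrain_heights):
    """Advance the character by one frame and return the new pose and phase."""
    x = build_input(user_control, previous_pose, terrain_heights)
    pose, phase_delta = network(x, phase)
    # Keep the phase inside one cycle (assumed here to be 2*pi long).
    new_phase = (phase + phase_delta) % (2 * math.pi)
    return pose, new_phase


# Usage: step the placeholder network for a few frames.
pose, phase = [0.0, 0.0, 0.0], 0.0
for _ in range(3):
    pose, phase = run_frame(dummy_network, phase, [1.0, 0.0], pose, [0.1, 0.2])
```

Feeding each frame's output pose back in as the next frame's previous state is what lets a loop like this react to changing user input and terrain from one frame to the next.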

This real-time character control mechanism employs a neural network that takes user controls, the previous state of the character, and the geometry of the scene, and automatically produces high-quality motions that achieve realistic character movement. This control mechanism would be well suited to controlling characters in interactive scenes such as video games and virtual reality systems. The researchers stated that, if trained with a non-cyclic phase function, the PFNN could easily be used for other tasks such as punching and kicking.

Holden plans to present this new neural network at SIGGRAPH in August.


Steven Lynch is a contributor for Tom’s Hardware, primarily covering case reviews and news.


cwolf78: Man, that sure will be a huge boon for MMO-type games. I always found character animations in MMOs to be pretty rigid and robotic-looking, which I'm assuming is because it's computationally expensive to realistically animate tons of characters on screen at the same time, especially when including terrain interaction. It would be really impressive to see this tech used to realistically animate a ton of on-screen characters at the same time.